It is an age-old problem, that of having some data you want to store somewhere, and later bring it back. How do you format the data? Custom file formats are not that hard, but if you use an existing format you can probably steal code from a library to help you. Common choices include XML or the simpler JSON. However, neither of these are very concise. That’s where MessagePack comes in.

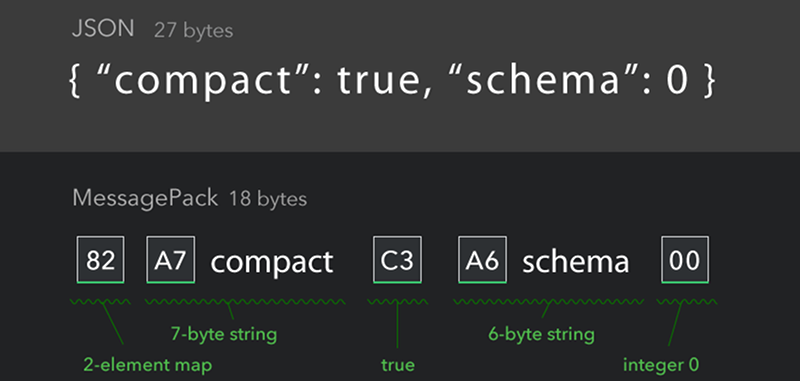

For example, consider this simple JSON stanza:

{"compact":true, "schema":0}

This is easy to understand and weighs in at 27 bytes. Using MessagePack, you’d signal some special binary fields by using bytes >80 hex. Here’s the same thing using the MessagePack format:

0x82 0xA7 c o m p a c t 0xC3 0xA6 s c h e m a 0x00

Of course, the spaces are there for readability; they would not be in the actual data stream which is now 18 bytes. The 0x82 indicates a two-byte map. The 0xA7 introduces a 7-byte string. The “true” part of the map is the 0xC3. Then there’s a six-byte string (0xA6). Finally, there’s a zero byte indicating a zero.

You can probably puzzle it out for the most part. Any byte that starts with a zero is a fixed integer. Numbers that start at 0x80 encode a map, so 0x84 is a four-element map. For arrays, the prefix is 9 instead of 8 and strings start with either 0xA0 or 0xB0, so you can have up to 32 characters easily encoded.

Of course, you might need an integer bigger than 0x7F, right? So there are other integer formats such as 0xCC for 8-bit unsigned or 0xD3 which is a 64-bit signed big-endian number. Prefixes of 0xCA and 0xCB store 32- or 64-bit IEEE 754 floating point numbers.

For larger strings there is str8 (0xd9), str16 (0xda), and str32 (0xdb). In each case, the number is the count of bits in the string length. So 0xd9 gets a single byte count and 0xdb gets four-bytes for the count. There are other formats, of course, and you can see them in the spec.

The real trick, of course, is the availability of library code. The project claims over 50 languages on their web page. So if you are writing in C, C++, Haskell, Dart, Kotlin, or Matlab, you can find code to help you.

We’ve seen a lot of JSON out there, and it will probably remain since most applications don’t care about the efficiency of representing data. While XML has fallen out of favor because of its complexity, there are still places you run into it.

let me guess, next week we’ll have an article about protobufs, and then the week after an article about how weird stuff isn’t worth it when gzip is available, so might as well use json anyways since the support is much broader? :P

Oh noez not the “gzipped json is just as good” idea! I’ve actually heard that as a real argument against msgpack, because some people will do anything to get rid of an extra dependancy.

It is about readabitity, not dependency.

Json is human-readable, and can be manipulated with most anything.

I wish I could upvote you.

JSON is king.

most unintelligent and useless thing i’ve ever heard. id expect this from a VB dev. human readability in protocols/formats is the dumbest idea ever. nobody interacts with protocols, formats, etc at the human level. that’s what an app is for. nobody also telnet’s ssh’s or anything like that into an application anymore. human readable, string based formats, are absolutely useless, slow, and dumb. you can do anything without JSON also. its a higher level format. that means it came from something lower level. i hope you didnt pay for a degree in computer science. binary is king. its not even arguable. messagepack is better, but not the best. binary key-less requests/responses are the way to go. never have i ever, when using an app, said, man, this app is so wonderful since its using a protocol/format i can understand and read! because the app is doing it for me….use binary. dont be stupid. ok? JSON is such an overrated, trendy, pile of hot garbage. i serialize/deserialize things 2/4x faster with our binary serializer. have fun “reading” the data your app sends to your backend. JSON is for people who have never dealt with old school sockets, or even know what berkely sockets are. people who have never written a real network application. making apps these days is so home depot. anyone can just go to NPM/nuget and grab a framework lol.

Valve had used JSON on their peripherals and just gzipped it. Sames goes for a lot of webservers which support gzip compression. It’s soooo tight. Stuff like puff.c make decompression a breeze as well. I’ve heard the extra “gzip dependency thing” but including a .c file in your build that takes 0.1 seconds to compile usually gets the dependency people to rabble down.

Can’t we just call gzipped JSON a JZON ?

good idea! Or more standardized: .json.gz

or maybe a whole range of “Captain Obvious to the rescue”-articles.

Episode 1: Binary Encoding is more efficient than Text encoding

Episode 2: IDs are shorter than names

Episode 3: Java is not more efficient than C

Episode 4: Heavier is less light.

.

.

.

Support may be broader, but sometimes you just don’t have the space to be stuffing JSON into things, so you look at formats such as MessagePack, EXI, BSON, CBOR, etc.

At my workplace, the use case for CBOR is we’re sending data over a 6LoWPAN network which will fragment IP datagrams bigger than 128 bytes. So 128 bytes has to carry the (compressed) IP and UDP header information, plus some request metadata in addition to the payload. The machines sending it are also low on RAM, so we can’t afford the string processing needed to generate and parse JSON. CBOR fits that bill nicely.

Brisbane WICEN also uses it, there, the machines are PocketBeagles, more than capable of dealing with JSON. However, the only network link is a 1200 baud AX.25 packet radio network, and APRS messaging explicitly forbids the use of { and } characters in a message. So what to do? We use Base64 encoding, which bloats things out a bit, but if we use CBOR, we gain quite a bit in terms of efficiency.

CBOR is annoying about representing negative integers…

My thoughts exactly. Should this messagepack gain traction, sooner or later someone will realize that the two ends of communication will need to agree on what fields they send. Which means you can use short field IDs instead of writing out the field names in full. And then they go out to badly re-implement protobufs.

Or later they realize that their communication protocol might be useful to other, they need to maintain it by removing / adding fields and protobuf is not longer the panacea.

Of course Kenton Varda went on to create Cap’nProto, so you could say Protobufs was itself a bad pre-implementation.

Why not use ASN.1? There are lots of implementations of that as well.

The nice thing about json and xml is that you can edit it with a text editor.

I was just looking for something like this. Thanks for the tip

http://Www.bjson.org (amongst at least one other)

There is also bencode https://en.wikipedia.org/wiki/Bencode as used in torrent files

ASN1 (ducks runs away)

+BER

was just about to mention ASN1+BER as well… Once you understood the TLV Structure it’s not that difficult…

It’s funny how over the last years we optimized binary formats into text formats that are easy to read and now want to optimize them back into something that is less readable? We either have to decide for one or simply live with the obvious fact that every problem has it’s own requirements wrt. to readability and number of bytes necessary/available…

There’s also CBOR which is an actual IETF standard: https://cbor.io/

Exactly, +1 for CBOR. Shout-out to Carsten :)

And use COSE (CBOR Object Signing and Encryption) to add a bit of security: https://tools.ietf.org/html/rfc8152

Another +1 for CBOR. It’s what we use in real products.

Poo! I’ll take JSON any day. Give me simplicity and readability. Efficiency is much overrated in most cases.

Yep, because even lowly Arduinos have gigabytes of RAM and what can be more readable than a JSON message that’s truncated because it didn’t fit in the datagram?

This comment hurts my brain on X different levels;

Are you suggesting that the MessagePack format is more readable than a truncated JSON file?

Are you suggesting that JSON should be used on lowly arduino’s?

Did you not read ‘..most cases.’?

Your post has an air of superiority around it, which I feel is completely uncalled for.

About the same air of superiority as “Give me simplicity and readability. Efficiency is much overrated in most cases.”

If you’re dealing with web programming and REsT APIs… definitely, JSON over HTTP is actually a pretty good system. The format is native to JavaScript (you can do

eval('(' + thejson + ')');if you must, but you really shouldn’t!) so easily handled by web browsers, unlike XML where one has to contend with a document object model.It’s expressive enough without being overly complicated. Unfortunately, as it’s all ASCII, it comes with significant overhead. On a desktop computer, you’re unlikely to “feel” that overhead.

On a small microcontroller, that overhead can be painful. Generating it isn’t too bad, especially if no strings are involved, you can get by with

snprintf. Parsing it is a pain because you have to tokenise the input, strip out whitespace at the correct points, then parse the tokens out. It takes lots of memory and CPU time.Then there’s the constrained nature of the transmission medium to contend with. Let’s suppose you’re sending a telemetery from an Arduino using Nordic nRF24L01+ devices. These have a 32-byte limit on payload size. How many 3-digit integer numbers can you send? You might squeeze 8 or 9 out of 32 bytes with JSON… CBOR will fit 20 easily. You can possibly go higher with a custom format, but who wants to spend their day writing encoders and parsers?

As for “most cases”… embedded devices outnumber desktop computers and smartphones 100:1. Look at home automation: are they using Core i7s with 32GB RAM with WiFi radios in those battery operated window sensors? No, they’re using lowly CC2538s (Cortex M3, 32kB RAM) and NRF52840s (Cortex M4F, 256kB RAM) with 802.15.4 radios. The only thing that comes close to being able to deal with JSON might be the “hub” they talk to.

Claiming “I’ll take JSON any day” is akin to saying this edge case doesn’t exist and that all embedded developers should throw away protocols like CBOR and CoAP, and just use JSON and HTTP on their firmware when it simply isn’t the right tool for the job.

JSON is easy. I’ve created a simple JSON parser for 8-bit embedded CPUs in assembly language – it works great, and so easy to use.

Sorry, “messagepack” isn’t going to happen.

This is more efficient like makefiles are more efficient. It’s not unless you really need it.

Yet Another Binary Encoding Of A Tree.

Yet Another Not Invented Here Syndrome.

wikipedia:Binary XML is littered with the corpses of other instances, all of which solve the same problem, and some of which are even standards.

So it is basically but is also something like huffman coding where something that is most common is encoded with fewer bytes, genius.

I think it misses one thing: table.

.

Of course one can use array of map but it is less compact.

Didn’t mean to reply you.

Why it’s there..

I’m pretty sure I’ve checked it.

Yep

{“compact”:true,”schema”:0}

27 bytes.

But

{“compact”:1,”schema”:0}

down to 24

{compact:1,schema:0}

down to 20. No binary in sight. About the same as the above (and why should there be quotes anyway… :-) )

The thing with json is that it is read/write in anything, not that it is supper space efficient. If the whole thing is to big, zip it.

If you need faster speed/better space, go to a full custom binary packet – it will be faster and smaller.

I just can’t see a niche for what they are suggesting in this article..

RC=0 stuartl@rikishi ~ $ ipython

importPython 3.6.9 (default, Jan 9 2020, 22:00:01)

Type "copyright", "credits" or "license" for more information.

IPython 5.4.1 -- An enhanced Interactive Python.

? -> Introduction and overview of IPython's features.

%quickref -> Quick reference.

help -> Python's own help system.

object? -> Details about 'object', use 'object??' for extra details.

In [1]: import cbor, binascii

In [2]: test = cbor.dumps({"compact":True,"schema":0})

In [3]: len(test)

Out[3]: 18

In [4]: binascii.b2a_hex(test)

Out[4]: b'a267636f6d70616374f566736368656d6100'

That’s without violating standards … whereas your not-quite-JSON does violate standards.

A big advantage is when you try to send floating point data:

In [5]: import json

In [6]: import math

In [7]: data = {"pi": math.pi, "foo": 1241.5363133451341}

In [8]: json_data = json.dumps(data)

In [9]: cbor_data = cbor.dumps(data)

In [10]: len(json_data)

Out[10]: 52

In [11]: len(cbor_data)

Out[11]: 26

In [12]: print(json_data)

{"pi": 3.141592653589793, "foo": 1241.5363133451342}

In [13]: binascii.b2a_hex(cbor_data)

Out[13]: b'a2627069fb400921fb54442d1863666f6ffb409366252f53570a'

Dissecting that blob (hopefully WordPress doesn’t butcher it):

a2 627069 fb400921fb54442d18 63666f6f fb409366252f53570a

| | | | |

| | | | '- Double: 1241.5363133451342

| | | '-------------------- Text String: "foo"

| | '---------------------------------------------- Double: 3.141592653589…

| '----------------------------------------------------- Text String: "pi"

'------------------------------------------------------- Map with 2 elements

You’ll note the floats are represented in standard IEEE-754 float. At worst your MCU is byte-swapping, which is a cheap operation compared to

strtod. Unsigned integers up to 23 are represented as a single byte (0x00 through 0x17), then two bytes for 24 through to 255… etc). Booleans are 0xf4 (false) and 0xf5 (true).For JSON, it not only has to do string processing, handling whitespace, etc… it also must do floating-point arithmetic to convert that string to native form.

Now, if space (code/RAM) or CPU time is tight it’s pretty obvious which is the more efficient choice.

Json strings are quoted.

yes, I realise that. But I’ve always thought that was a pretty iffy design decision :-), and much of the code I’ve seen doesn’t assume it. I was making a point that there were easier ways of making it smaller without going binary..

On the other post above – yep, one of the great benefits of binary – just wack it into something and use it..

So I’ve ended up using json for config stuff that is not time or space critical, or things with slow (& small) data transmission requirements, and binary fo the bigger faster stuff. Quite often json to describe what is IN the binary blob… :-)

Extract the schema elsewhere, and then you just need the data.

AVRO is one such managed, versioned mechanism for doing this – often used in messaging systems. Obviously, the schema management / remote calls might be overhead on a small bluetooth/wifi attached MCU, before you get excited about your home network messaging stuff.

Don’t want that? There are a bazillion compressed binary tree formats. One example, BSON, is used by MongoDB – http://bsonspec.org/

JSON is alright, too many quotation marks for me though.

>JSON is alright, too many quotation marks for me though.

Those quotation marks are delimiters. You still need delimiters in any kind of format, and quotation marks are easy to work with. Also, quotation marks are only needed for keys and string values.

Hi,

I worked on a project similar to this problem, but I wanted it more like a “remote procedure call”, event for embedded systems (bare metal)

I developed microparcel (https://github.com/lukh/microparcel) and microparcel-python (https://github.com/lukh/microparcel-python) that allows to access specific bits on a chunk of a message, encapsuled in a Frame.

Therefor, a “builder” make a Message structure from a schemas, and it allows to build messages like: “makeStuff(param1, enum, etc) .. On the other side, there is a parser and a processor that route the message to a virtual method, processStuff(param1, enum1)

In that way, the dev only has to create the message and implement the action…

See the example in https://github.com/lukh/microparcel-tools (the generator)

I would love to get feedback of this project

Best :)

In our Company we use for data transfer Google Brotocol Buffer. It has althoug good ration between data und transmitted bytes. It is even possible write the byte string in an file and read it out after.

Brotocol Buffer? Is the ACK a fistbump?

I’ll see myself out.

Ahhh, the great thing about standards is that there are just SO MANY to choose from!

Try sending messagepack over a network that only supports 7-bit characters…

Even around the 2010’s, there were Italian network operators who still used proxies that only proxied 7-bit ascii (they figured that WAP and HTML did not need more than 7-bits, and they were right of course).

I am mentioning Italian network operators because our application got bitten quite hard by it at the time, and we had to change our protocols to only use 7-bits.

I’m guessing sending graphics to those users must be a challenge too… JPEGs and PNGs are chock full of bytes with the 8th bit set. I’m sure Netflicks doesn’t stream in 7-bit.

Thankfully, the IETF have thought of this:

In [1]: import json, cbor, msgpack, binascii

In [2]: json_data = '{"compact":true, "schema":0}'

In [3]: raw_data = json.loads(json_data)

In [4]: msgpack_data = msgpack.dumps(raw_data)

In [5]: cbor_data = msgpack.dumps(raw_data)

In [6]: len(json_data)

Out[6]: 28

In [7]: len(msgpack_data)

Out[7]: 18

In [8]: len(cbor_data)

Out[8]: 18

In [9]: len(binascii.b2a_base64(msgpack_data))

Out[9]: 25

In [10]: len(binascii.b2a_base64(cbor_data))

Out[10]: 25

It is worth noting that Al’s example is not a good one as most of the content is ASCII strings. Usually if one is wanting to use one of these formats, they’ll keep strings to a minimum and use integers for most things.

Let’s take a more real-world example: here’s a message to a device to turn on some digital outputs:

In [1]: import json, cbor, msgpack

In [2]: json_data = '{"v":0,"c":{"30":true,"31":true,"32":true,"33":true}}'

In [3]: data = json.loads(json_data)

In [4]: len(json_data)

Out[4]: 53

In [5]: len(cbor.dumps(data))

Out[5]: 23

In [6]: len(msgpack.dumps(data))

Out[6]: 23

It was generated by a NodeJS application, one aberration of this is that as JavaScript objects have ASCII strings for property values, you see the channel numbers are encoded as strings instead of as integers. If I figure out a work-around to that, the message could be a lot smaller.

Already though, it’s less than half the size. Let’s suppose I need to send that over a 7-bit network though:

In [7]: import base64

In [8]: len(base64.b64encode(cbor.dumps(data)))

Out[8]: 32

In [9]: len(base64.b85encode(cbor.dumps(data)))

Out[9]: 29

Still much smaller than the JSON… and equally as compatible with 7-bit networks.

Just to point out… not my example, but the one that the project uses on its web site.

In your first example you mistakenly took cbor_data from msgpack.dumps.

But that doesn’t change a thing, because length of right cbor_data is 18bytes as well.

Since when are machines humans? Machines don’t need human readable code because they’re not humans. Many developers miss this and don’t write code for the machine, but rather for the developers. That’s why I see way too many major use sites wasting 80% of their bandwidth because developers are too lazy to implement minification, remove dead code and use gzip/brotli. And annoying users with poor performance at the same time. Ever hear of using build scripts to bring things to production?

XML was the greatest thing at one time. When JSON came out they said the same thing, I’ll take XML over JSON any day. But here we are with native JSON methods. If we never experimented with something new we might never have had JSON. We have HTTP/2 for binary headers, why not a binary data format too? But then I am biased from being a C developer for decades and having to invent new things the hard way before JavaScript became popular. Or is it we’re to complacent to learn something new. Maybe we need to use new development tools.

There are two reasons for using JSON. Either you are forced to use it because the spec says so, or you must have the possibility to specify numbers with arbitrary precision. Otherwise a binary format is probably better. Most binary formats has editors where you can read and edit the contents in ascii, so that is not a killer function in JSON. And in database applications it’s normally not feasible to gzip the records and fields.