Can you remember everything you’ve touched in a given day? If you’re being honest, the answer is, “Probably not.” We humans are a tactile species, with an outsized proportion of both our motor and sensory nerves sent directly to our hands. We interact with the world through our hands, and unfortunately that may mean inadvertently spreading disease.

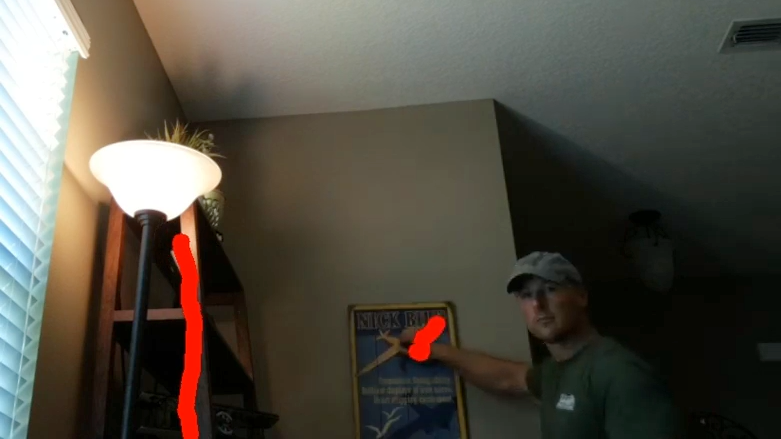

[Nick Bild] has a potential solution: a machine-vision system called Deep Clean, which monitors a scene and records anything in it that has been touched. [Nick]’s system uses a Jetson Xavier and a stereo camera to detect depth in a scene; he built his camera from a pair of Raspberry Pi cams and a Pi 3B+, but other depth cameras like a Kinect could probably do the job. The idea is to watch the scene for human hands (OpenPose is the tool he chose for that job) and correlate their depth in the scene with the depth of objects. Touch a doorknob or a light switch, and a marker is left on the scene. A cleaning crew could then look at the marked-up scene to determine which areas need extra attention. We can think of plenty of applications that extend beyond the current crisis, as the ability to map areas that have been touched seems generally useful.
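To make the depth-correlation idea concrete, here is a minimal sketch in Python. It is not [Nick]’s code: it assumes a per-pixel depth map of the empty scene is already available, and that a pose estimator such as OpenPose has been reduced to a list of hand points with a measured depth. The names `HandPoint` and `detect_touches`, and the 3 cm tolerance, are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HandPoint:
    """Hypothetical hand keypoint: pixel position plus measured depth."""
    x: int          # pixel column
    y: int          # pixel row
    depth_m: float  # distance from the camera to the hand, in metres


def detect_touches(background_depth, hand_points, tolerance_m=0.03):
    """Return the set of (x, y) pixels where a hand sits at surface depth.

    background_depth is a list of rows of depths (metres) for the empty
    scene. A hand point counts as a touch when its depth is within
    tolerance_m (an assumed value) of the surface behind it.
    """
    touched = set()
    height = len(background_depth)
    width = len(background_depth[0]) if height else 0
    for point in hand_points:
        # Ignore keypoints that fall outside the depth map.
        if not (0 <= point.x < width and 0 <= point.y < height):
            continue
        surface_m = background_depth[point.y][point.x]
        if abs(surface_m - point.depth_m) <= tolerance_m:
            touched.add((point.x, point.y))
    return touched


# Toy scene: a wall 2.0 m away with a "doorknob" at 1.2 m in the centre.
background = [
    [2.0, 2.0, 2.0],
    [2.0, 1.2, 2.0],
    [2.0, 2.0, 2.0],
]
points = [
    HandPoint(1, 1, 1.19),  # hand resting on the doorknob
    HandPoint(0, 0, 1.0),   # hand hovering a metre in front of the wall
    HandPoint(5, 5, 2.0),   # keypoint outside the frame
]
print(sorted(detect_touches(background, points)))
```

Only the first point is reported as a touch. A real system would also have to cope with depth noise and with hands occluding the very surface they are touching, which this sketch ignores.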

[Nick] has been getting some mileage out of that Xavier lately: he’s used it to build an AI umpire and shades that help you find lost stuff. Who knows what else he’ll find to do with it during this time of confinement?

Or you could just feed your kids peanut butter sandwiches and look for the thin coating of pb on all of your possessions.

I thought Pb wasn’t allowed on kids anymore. . .

Yep, because they kept leaving grubby fingerprints everywhere.

The layers of dust show me where I haven’t touched.