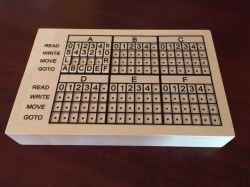

A Bayer array, or Bayer filter, is what lets most digital cameras take color photos. It’s a grid of tiny color filters that sits on top of a camera’s image sensor, so that each photosite (pixel) on the sensor sees only red, green, or blue light. The Bayer filter is an elegant tool that gives us color digital photos, but what would you do if you wanted to remove one?
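To make the “one color per photosite” idea concrete, here is a minimal Python sketch that simulates a Bayer mosaic. It assumes an RGGB tile; the actual order (RGGB, BGGR, GRBG, or GBRG) depends on the sensor, and the helper names `filter_color` and `mosaic` are hypothetical, not part of any camera library.

```python
from collections import Counter

# Hypothetical 2x2 tile in RGGB order; real sensors may use BGGR, GRBG, or GBRG.
BAYER_TILE = (("R", "G"),
              ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_color(row, col):
    """Return the color of the filter over the photosite at (row, col)."""
    return BAYER_TILE[row % 2][col % 2]


def mosaic(rgb_image):
    """Simulate a Bayer sensor reading an RGB scene.

    rgb_image is a list of rows, each a list of (r, g, b) tuples. Each
    photosite keeps only the channel its filter passes, so the result is
    a single-channel "raw" image of the same size.
    """
    return [
        [pixel[CHANNEL_INDEX[filter_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


if __name__ == "__main__":
    counts = Counter(filter_color(r, c) for r in range(4) for c in range(4))
    print(counts["R"], counts["G"], counts["B"])  # 4 8 4

    # A uniform orange scene: each photosite records just one of its three values.
    scene = [[(200, 120, 30)] * 4 for _ in range(4)]
    for raw_row in mosaic(scene):
        print(raw_row)  # rows alternate [200, 120, 200, 120] and [120, 30, 120, 30]
```

Because every 2×2 tile holds two green filters, half of the photosites see only green. A camera then demosaics the raw data, interpolating the two missing channels at each photosite from its neighbors.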

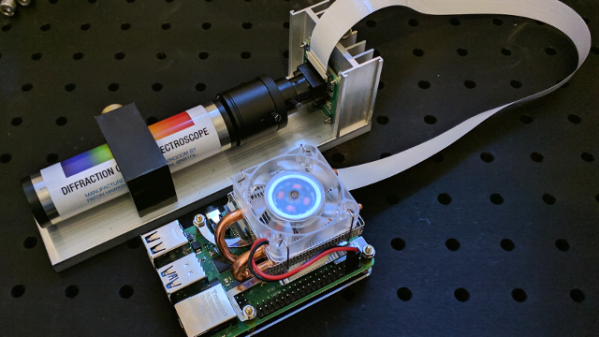

[Les Wright] has devised a way to remove the Bayer filter from the Raspberry Pi Camera. Besides passing only red, green, or blue light to their respective photosites, Bayer filters also greatly reduce the amount of UV and IR light that reaches the sensor. [Les] uses the Raspberry Pi Camera in his Pi-based spectrometer, and he wants to remove the Bayer filter to improve and extend its sensitivity.

Of course, [Les] isn’t the first one to want to do this. Some have succeeded in physically scratching the filter off the sensor, but the Pi Camera has vital circuitry around the edge of the sensor that scratching would likely destroy. Others have stripped the filter chemically, so [Les] gave that a go and destroyed no small number of cameras trying solvents like DMSO, brake fluid, and industrial paint stripper.

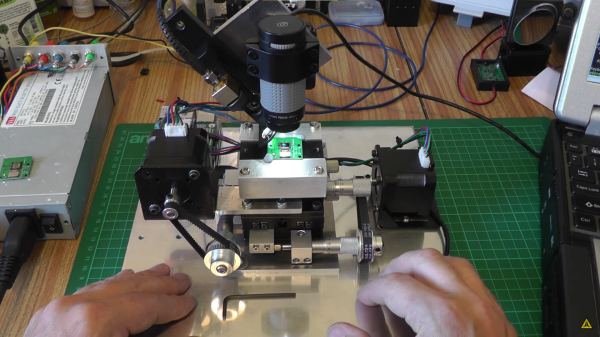

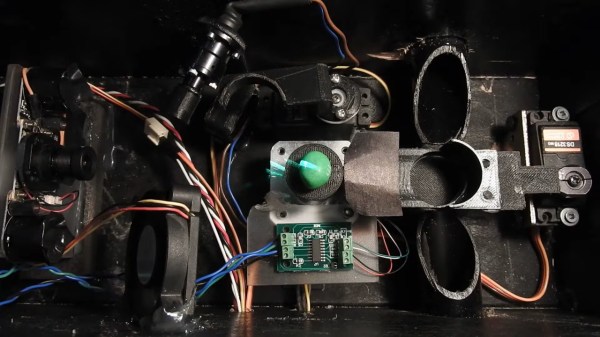

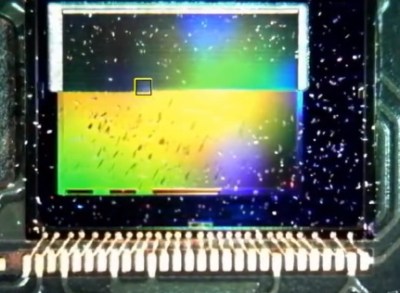

Inspired by techniques used in industry, [Les] eventually tried burning off the filter with a pulsed nitrogen laser delivering several kilowatts of peak power (which seems appropriate given his experience with lasers). He built a rig that raster-scans the laser across the sensor, using stepper motors to drive micrometer stages. A USB microscope lets him monitor progress, and the sensor’s appearance visibly changes as the filter is removed.
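[Les]’s control code isn’t covered here, but the raster pattern itself is easy to sketch. Below is a hypothetical Python example that generates a serpentine (boustrophedon) scan over a grid and converts it into relative step counts for two stepper-driven micrometer axes. The thread pitch, steps per revolution, and spot spacing are illustrative assumptions, not his actual values.

```python
def steps_per_cell(spacing_um, pitch_mm, steps_per_rev):
    """Whole motor steps needed to advance the stage by one scan spacing.

    Assumes (hypothetically) that one motor revolution advances the
    micrometer by one thread pitch, with no microstepping.
    """
    um_per_step = pitch_mm * 1000 / steps_per_rev
    return round(spacing_um / um_per_step)


def serpentine_raster(cols, rows):
    """Yield (x, y) grid positions in serpentine order.

    Even rows run left to right and odd rows right to left, so the stage
    never makes a long return move between lines.
    """
    for y in range(rows):
        xs = range(cols) if y % 2 == 0 else range(cols - 1, -1, -1)
        for x in xs:
            yield x, y


def to_step_moves(path, steps_x, steps_y):
    """Turn successive grid positions into relative (dx, dy) step counts."""
    moves = []
    prev = None
    for x, y in path:
        if prev is not None:
            moves.append(((x - prev[0]) * steps_x, (y - prev[1]) * steps_y))
        prev = (x, y)
    return moves


if __name__ == "__main__":
    path = list(serpentine_raster(3, 2))
    print(path)  # [(0, 0), (1, 0), (2, 0), (2, 1), (1, 1), (0, 1)]
    step = steps_per_cell(spacing_um=25, pitch_mm=0.5, steps_per_rev=200)
    print(to_step_moves(path, step, step))
    # [(10, 0), (10, 0), (0, 10), (-10, 0), (-10, 0)]
```

With an assumed 0.5 mm thread and a 200-step motor, each full step moves the stage 2.5 µm, so a 25 µm line spacing works out to 10 steps.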

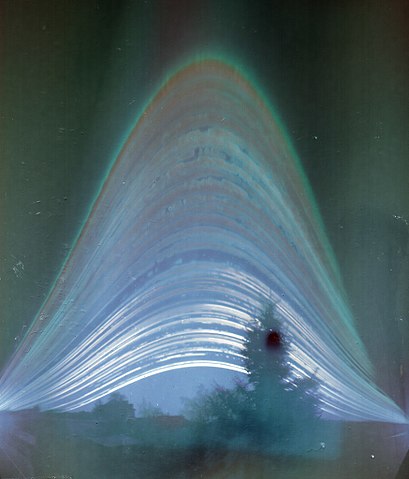

After blasting off the Bayer filter, [Les] plugged the modified camera into his home-built spectrometer and pointed it outside. The new camera gives the spectrometer much more uniform sensitivity and lets [Les] see farther into the IR and UV bands. The spectrometer can even detect the Fraunhofer lines: subtle dips in the sun’s spectrum caused by absorption in the sun’s own atmosphere, with a few added by oxygen in Earth’s atmosphere.
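Spotting a Fraunhofer line amounts to finding a narrow dip below the surrounding continuum. The sketch below flags a pixel as a dip when it is the lowest point in a small window and sits at least a given fraction below the window’s edges. The window size, depth threshold, and synthetic spectrum are assumptions for illustration; [Les]’s software may work differently, and mapping pixel indices to wavelengths would need a separate calibration.

```python
def find_dips(intensity, half_window=5, min_depth=0.1):
    """Return pixel indices of absorption dips in a 1-D spectrum."""
    dips = []
    for i in range(half_window, len(intensity) - half_window):
        lo, hi = i - half_window, i + half_window
        window = intensity[lo:hi + 1]
        # Only accept i if it holds the first minimum of its window,
        # which also avoids reporting a flat-bottomed dip twice.
        if lo + window.index(min(window)) != i:
            continue
        # Estimate the local continuum from the two window-edge samples.
        continuum = (intensity[lo] + intensity[hi]) / 2
        if continuum > 0 and (continuum - intensity[i]) / continuum >= min_depth:
            dips.append(i)
    return dips


def synthetic_spectrum(n=100):
    """Flat continuum of 100 with a deep dip at pixel 30 and a shallow one at 70."""
    spectrum = []
    for i in range(n):
        value = 100.0
        value -= 40 * max(0.0, 1 - abs(i - 30) / 3)  # 40% deep
        value -= 5 * max(0.0, 1 - abs(i - 70) / 3)   # 5% deep
        spectrum.append(value)
    return spectrum


if __name__ == "__main__":
    spectrum = synthetic_spectrum()
    print(find_dips(spectrum))                  # [30]
    print(find_dips(spectrum, min_depth=0.03))  # [30, 70]
```

With the default 10% threshold only the deep dip is reported; lowering it to 3% also picks up the shallow one. On real sensor data, noise can create spurious minima, so smoothing the spectrum first is usually worthwhile.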

This is an incredible result for a DIY instrument, and we can’t wait to see what [Les] does next to improve his measurements. If your spectrometry needs are more mass than visual, take a look at this home-built mass spectrometer. Home spectrometers aren’t just for examining light spectra, either: they can also be used to judge the ripeness of fruit!
