The Timecraft project by [Amy Zhao] and team members uses machine learning to work out how an existing painting may originally have been painted, stroke by stroke. In their paper titled ‘Painting Many Pasts: Synthesizing Time Lapse Videos of Paintings’, they describe how they trained an ML algorithm on existing time lapse videos of new paintings being created, allowing it to probabilistically generate the steps needed to recreate an already finished painting.
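The core idea, that a single finished painting is consistent with many different stroke orders, can be illustrated with a deliberately crude toy. The Python sketch below is not the Timecraft CNN or anything taken from the paper; `sample_past`, the patch-by-patch reveal and the blank white starting canvas are all hypothetical simplifications. It samples one possible "past" by revealing a tiny grayscale image one patch at a time in a random order, so each seed gives a different sequence of frames that always ends at the same finished image.

```python
import random
from typing import List, Optional

# Hypothetical toy representation: a grayscale image as a grid of floats in [0, 1],
# where 1.0 means blank (white) canvas.
Image = List[List[float]]


def sample_past(target: Image, num_frames: int, patch: int = 2,
                seed: Optional[int] = None) -> List[Image]:
    """Sample one toy 'past' of a finished image (NOT the Timecraft model).

    Starts from a blank canvas and copies the target onto it patch by patch
    in a random order. Different seeds give different intermediate frames,
    but every sampled sequence ends at the same finished image.
    """
    rng = random.Random(seed)
    h, w = len(target), len(target[0])
    # Top-left corners of the patch-sized regions that tile the image.
    patches = [(r, c) for r in range(0, h, patch) for c in range(0, w, patch)]
    rng.shuffle(patches)

    canvas = [[1.0] * w for _ in range(h)]
    frames = [[row[:] for row in canvas]]  # frame 0: blank canvas
    # Split the shuffled patches into num_frames roughly equal groups ("strokes").
    for i in range(num_frames):
        start = i * len(patches) // num_frames
        end = (i + 1) * len(patches) // num_frames
        for r, c in patches[start:end]:
            for y in range(r, min(r + patch, h)):
                for x in range(c, min(c + patch, w)):
                    canvas[y][x] = target[y][x]
        frames.append([row[:] for row in canvas])  # snapshot after this step
    return frames
```

For example, `sample_past(target, 4, seed=0)` and `sample_past(target, 4, seed=1)` may produce different intermediate frames, yet both return the target as their last frame. The actual system learns from real time lapse videos what plausible intermediate stages look like, rather than revealing pixels at random.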

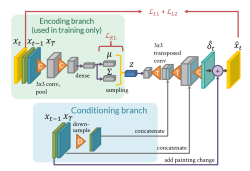

The probabilistic model is implemented as a convolutional neural network (CNN) whose output is a time lapse video spanning many minutes. In the paper they mention that they were inspired by artistic style transfer, where neural networks are used to generate works of art in a specific artist’s style, or to create mix-ups of different artists.

A lot of the complexity comes from the large variety of techniques and materials used in creating a painting, such as the exact brush and the type of paint. Some existing approaches have focused on these fine details, including physics-based simulation of the paints and brush strokes. Those approaches come with significant caveats, which Timecraft tried to avoid by taking a more high-level approach.

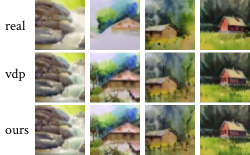

The time lapse videos generated during the experiment were evaluated through a survey on Amazon Mechanical Turk, in which the 158 participants were asked to compare the realism of the Timecraft videos with that of real time lapse videos. Participants preferred the real videos, but mistook the Timecraft videos for the real ones half the time.

Although the results are perhaps not perfect yet, they do show how ML can be used to deduce how a work of art was constructed and to work out the individual steps with some degree of accuracy.

The A.I. recreating art is very interesting, as it illustrates its capabilities in handling complex colors, strokes, pressures, counterfeiting… imagine what it can do with money?

That’s why money always has secondary security features like the watermark, the silver thread and the “feel” of the paper/plastic. Granted, most of those can already be duplicated with enough effort, which is why there’s a constant battle between counterfeiters and those who “make” the money.

So, did they use the many videos by Bob Ross to train some of the AI?

Paintings for people that hate paintings.

His techniques blow most painters out of the water.

Too bad his paintings are drab and unoriginal then.

Don’t invite the ire of the BRFC (Bob Ross Fan Club)! 🤣

I have been trained to paint and draw in traditional styles, so I know that there is no one way to go about painting any subject; in fact, I can show you over a thousand distinctly different ways to paint the same subject. However, having pointed that out, this new work is not even close to the stated goal. It will be interesting to see if they can perfect the method, or if it will become a dead end, a fascinating cul de sac off to one side of where the technology ends up.

So how does a human artist really paint? Well, imagine a huge database of memories of brush strokes and of how the variables of tool, media and surface interact, then search that data using a “Rorschach Test”-like matching algorithm for any section of the image that you wish to render. Well, it is a bit like that, unless you are a Hyperrealist using almost mechanistic reproduction techniques to render a photo-like result.

The other extreme is seen in Japanese woodblock prints, where everything is interpreted and all of the marks are codified, derived from a lifetime of writing calligraphy. There is a particular set of marks for leaves, and each species has a distinct stroke or sub-set of strokes that represents, e.g., a “pine needle” or a cluster thereof. It is hard to explain in words, but once you are alert to it you will know it when you see it. Give it a go, and enjoy that little “Ah ha!” moment when you finally get it.

Skynet is born!