When it comes to building particle accelerators, the credo has always been “bigger, badder, better”. While the Large Hadron Collider (LHC), with its 27 km circumference and €7.5 billion budget, is still the largest and most expensive scientific instrument ever built, its physics program is slowly coming to an end. In 2027, it will receive its last major upgrade, dubbed the High-Luminosity LHC, which is expected to complete operations in 2038. This may seem a long way off, but the scientific community is already thinking about what comes next.

Recently, CERN released an update of the European strategy for particle physics, which includes a feasibility study for a Future Circular Collider (FCC) with a circumference of 100 km. Let’s take a short break and look back at the history of “atom smashers” and the scientific progress they brought along.

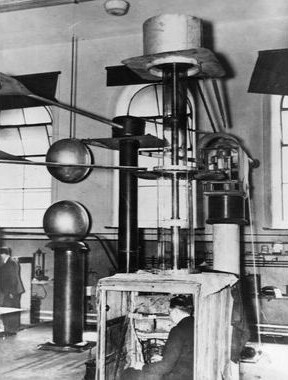

A Machine to Split the Atom

Credit: cambridgephysics.org

The main motivation to build accelerators arose early in the twentieth century, when Ernest Rutherford discovered in 1919 that he could split nitrogen atoms by bombarding them with alpha particles from natural radioactive sources. To continue his research, he called for a source of “atomic projectiles” with higher energy and higher intensity than natural radioactive sources could provide. Encouraged by Rutherford, Cockcroft and Walton used a 400 kV generator in 1932 to accelerate protons and shoot them at a lithium target, which resulted in the first entirely man-controlled splitting of the atom.

Particle acceleration using DC voltages, like that of the Cockcroft-Walton generator and later the Van de Graaff generator, was limited by the maximum voltage that the machine could provide. To overcome this limitation, Swedish physicist Gustav Ising proposed the principle of resonant acceleration, where the same voltage is applied repeatedly through a series of drift tubes hooked up to an RF generator. This is considered the true birth of particle accelerators and, in fact, the current generation of linear colliders still relies on the same principle. Rolf Widerøe was the first to build such an accelerator, in 1928 in Germany, producing 50 keV potassium ions.

From Linear to Circular

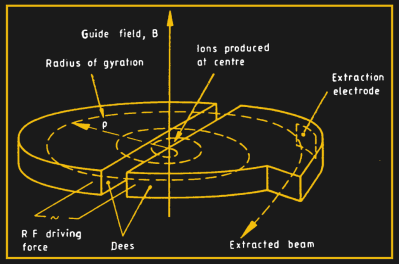

Credit: P.J. Bryant

One downside of the linear accelerator (linac) is that the drift tubes have to get longer as the particle velocity increases, which makes the machine large and difficult to construct for high energies.
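
To illustrate why the tubes grow, here is a minimal sketch. It assumes a Widerøe-type structure in which a particle must cross each drift tube in exactly half an RF period, so that tube n has length L_n = v_n / (2 f). The 1 MHz frequency and 25 kV gap voltage are illustrative values chosen for this example, not figures from any specific machine, and the non-relativistic energy formula only holds at low energies.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19   # C
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg


def drift_tube_lengths(mass_kg, charge_c, gap_voltage_v, rf_frequency_hz, n_tubes):
    """Return the length (m) of each drift tube in a Wideroe-type linac.

    Assumes non-relativistic particles starting from rest that gain
    charge * gap_voltage at every gap and must cross each tube in
    exactly half an RF period.
    """
    lengths = []
    for n in range(1, n_tubes + 1):
        kinetic_energy = n * charge_c * gap_voltage_v          # J, after n gaps
        velocity = math.sqrt(2.0 * kinetic_energy / mass_kg)   # m/s
        lengths.append(velocity / (2.0 * rf_frequency_hz))     # half an RF period
    return lengths


# Illustrative (assumed) parameters: singly charged potassium ions,
# mass approximated as 39 atomic mass units.
potassium_mass = 39 * ATOMIC_MASS_UNIT
lengths = drift_tube_lengths(potassium_mass, ELEMENTARY_CHARGE, 25e3, 1e6, 4)
for i, length in enumerate(lengths, start=1):
    print(f"tube {i}: {length * 100:.1f} cm")
```

Under these assumptions the tube length grows with the square root of the number of gaps crossed, so every increase in energy has to be paid for with a longer structure.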

In 1929, Ernest Lawrence came up with the much more compact cyclotron, which accelerates particles along a spiral path guided by a magnetic field. Together with his student M. Stanley Livingston, Lawrence built the first cyclotron, which was only about 4 inches in diameter and accelerated protons to about 80 keV; a larger 11-inch successor soon passed the 1 MeV mark. The cyclotron finally made it possible to produce particles with much higher energies than those from radioactive sources, and it remained the most powerful type of accelerator until another technology came along in the 1950s.

Keeping Particles in Sync

As particles approach the speed of light, more and more of the energy they gain goes into increasing their relativistic mass rather than their speed, so their revolution frequency drops and they lose synchronization with the RF electric field of the cyclotron. This was compensated by varying the RF frequency, and the resulting machine was dubbed the synchrocyclotron. Later, the guiding magnetic field was also ramped up as the particle energy increased, so that the particles moved on a constant orbit. This was the birth of the synchrotron.
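
The loss of synchronization can be made concrete with a short sketch. In a uniform magnetic field B, a particle of charge q and rest mass m circulates with frequency f = qB / (2π γ m), where γ is the Lorentz factor. The 1.5 T field used here is an assumed, illustrative value.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # C
PROTON_MASS = 1.67262192369e-27      # kg
PROTON_REST_ENERGY_MEV = 938.272     # MeV


def revolution_frequency_hz(kinetic_energy_mev, b_field_t):
    """Revolution frequency of a proton in a uniform magnetic field."""
    # Lorentz factor from kinetic energy: gamma = 1 + T / (m c^2)
    gamma = 1.0 + kinetic_energy_mev / PROTON_REST_ENERGY_MEV
    return ELEMENTARY_CHARGE * b_field_t / (2.0 * math.pi * gamma * PROTON_MASS)


b_field = 1.5  # tesla, assumed for illustration
f_rest = revolution_frequency_hz(0.0, b_field)
for t in (1, 10, 100, 1000):
    f = revolution_frequency_hz(t, b_field)
    drop_percent = 100.0 * (1.0 - f / f_rest)
    print(f"{t:5d} MeV: {f / 1e6:6.2f} MHz ({drop_percent:.1f}% below the low-energy value)")
```

At a few MeV the frequency shift is a fraction of a percent, which a fixed-frequency cyclotron can tolerate. Toward hundreds of MeV the shift becomes large, which is why the RF frequency (synchrocyclotron) or the magnetic field (synchrotron) has to follow the particle energy.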

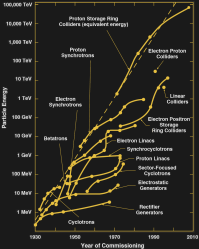

Credit: R. Ruth

The final advancement was the move from fixed-target accelerators to storage ring colliders. Since the energy available for producing new particles is the energy in the center-of-mass frame of the collision, it is much more efficient to collide particles head-on than to shoot a beam at a fixed target.
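
A minimal sketch of the difference, in natural units (energies and masses in GeV) and assuming proton beams: for a beam of total energy E hitting a proton at rest, the center-of-mass energy is √(2m² + 2Em), while for two equal beams colliding head-on it is simply 2E. The 7 TeV value is the LHC design beam energy and is used here only as an example.

```python
import math

PROTON_MASS_GEV = 0.938272


def cm_energy_fixed_target(beam_energy_gev, mass_gev=PROTON_MASS_GEV):
    """Center-of-mass energy for a beam hitting an identical particle at rest.

    beam_energy_gev is the total beam energy (rest mass included).
    """
    return math.sqrt(2.0 * mass_gev**2 + 2.0 * beam_energy_gev * mass_gev)


def cm_energy_collider(beam_energy_gev):
    """Center-of-mass energy for two equal-energy beams colliding head-on."""
    return 2.0 * beam_energy_gev


def beam_energy_for_fixed_target(cm_energy_gev, mass_gev=PROTON_MASS_GEV):
    """Beam energy a fixed-target machine needs to reach a given center-of-mass energy."""
    return (cm_energy_gev**2 - 2.0 * mass_gev**2) / (2.0 * mass_gev)


beam = 7000.0  # GeV, LHC design beam energy
print(f"fixed target: {cm_energy_fixed_target(beam):.1f} GeV")
print(f"collider:     {cm_energy_collider(beam):.1f} GeV")
print(f"fixed-target beam needed for 14 TeV: {beam_energy_for_fixed_target(14000.0):.3g} GeV")
```

With a fixed target, the usable energy grows only with the square root of the beam energy, so a 7 TeV beam yields roughly 115 GeV in the center-of-mass frame, compared with 14 TeV for two colliding 7 TeV beams.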

A Plethora of New Particles

While before the 1950s new particles were mainly discovered through cosmic rays, powerful accelerators like the synchrotron heralded a “Golden Era” of particle physics. These new machines led to the discovery of many subatomic particles as listed in the table below.

Studying the structure of the atom at smaller scales and producing particles with higher masses is what drove the development of accelerators with ever-higher energies. In a synchrotron, reaching higher energies requires either a larger radius or stronger magnetic fields. It was therefore the use of superconducting magnets, together with the possibility of building colliders underground, beneath property that is not owned by the laboratory running the machine, that enabled the construction of giant colliders such as the LHC.
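
The trade-off between radius and field follows from the magnetic rigidity relation: for a singly charged particle, p [GeV/c] ≈ 0.2998 · B [T] · ρ [m], where ρ is the bending radius inside the dipole magnets. The sketch below uses approximate LHC design values (8.33 T dipoles, a bending radius of about 2804 m) purely as an illustration; the function names are made up for this example.

```python
# Speed of light expressed so that p [GeV/c] = RIGIDITY_FACTOR * B [T] * rho [m]
RIGIDITY_FACTOR = 0.299792458


def momentum_gev(b_field_t, bending_radius):
    """Momentum (GeV/c) of a singly charged particle kept on a given bending radius (m)."""
    return RIGIDITY_FACTOR * b_field_t * bending_radius


def bending_radius_m(momentum, b_field_t):
    """Bending radius (m) needed for a given momentum (GeV/c) and dipole field (T)."""
    return momentum / (RIGIDITY_FACTOR * b_field_t)


def required_field_t(momentum, bending_radius):
    """Dipole field (T) needed for a given momentum (GeV/c) and bending radius (m)."""
    return momentum / (RIGIDITY_FACTOR * bending_radius)


# Approximate LHC design values, used here only for illustration
lhc_field = 8.33      # tesla
lhc_radius = 2804.0   # metres of bending radius (not the tunnel radius)

print(f"momentum at LHC-like parameters: {momentum_gev(lhc_field, lhc_radius):.0f} GeV/c")
print(f"radius for twice the energy, same field: {bending_radius_m(14000.0, lhc_field):.0f} m")
print(f"field for twice the energy, same radius: {required_field_t(14000.0, lhc_radius):.1f} T")
```

The bending radius is smaller than the tunnel radius because only part of the ring is filled with dipole magnets. Doubling the energy means doubling either the field or the radius.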

| Year | Particle | Accelerator Name | Accelerator Type | Location |
|---|---|---|---|---|
| 1955 | antiproton | Bevatron | proton synchrotron | LBNL, U.S. |
| 1962 | muon neutrino | AGS | proton synchrotron | BNL, U.S. |
| 1974 | J/ψ meson | SLAC | electron linac | |
| 1975 | tau lepton | SLAC | electron linac | |
| 1978/1979 | gluon | DORIS/PETRA | electron synchrotron | DESY, Germany |
| 1983 | W, Z bosons | SPS | proton synchrotron | CERN, Switzerland |
| 1995 | top quark | Tevatron | proton synchrotron | Fermilab, U.S. |
| 2000 | tau neutrino | Tevatron | proton synchrotron | Fermilab, U.S. |
| 2012 | Higgs boson | LHC | proton synchrotron | CERN, Switzerland |

What’s Next?

Currently, particle physics is in a bit of a crisis: the final missing piece of the Standard Model, the Higgs boson, was discovered at the LHC, but there is as yet no evidence for new physics such as supersymmetry. Although we know that the Standard Model cannot explain dark matter and dark energy, it is doubtful that a new giant collider such as the FCC will provide any answers, which is why some people strongly argue against it. There is no reason for nature to be nice, so it might be that the masses of new particles lie far beyond what is technologically achievable.

It may also be that new physics is hiding somewhere in the low-energy regime, which would require entirely different experiments. Nevertheless, there are some technological developments that may considerably lower the price tag of a new supercollider, thereby making it more attractive. One would be the discovery of room-temperature superconductors; the other is wakefield acceleration, which could ultimately lead to much more compact accelerators that can even fit on a table (again). So let us hope that pushing the energy frontier will keep providing us with answers to the most fundamental questions in nature.

Wakefield accelerators are old news. Read up on https://achip.stanford.edu

Thanks for the tip, I did not know about this. I would like to see a tabletop accelerator realized first, though, before I believe it could be realized on a chip.

Why don’t you believe in all the parts already done (which is quite a lot)?

The “on a chip” mentioned is just the accelerator structure — which was demonstrated 4 years ago.

They’ve made some huge improvements since then, and gotten many other parts of the total accelerator down to the speed and scale needed for a desktop accelerator.

The other fun part is reversing the accelerator structure to make an X-ray FEL on a desktop, with small fast pulses.

I’m a PhD student in accelerator physics and although my immediate research area isn’t wakefield accelerators, I know a bit about them. My impression (and that of most other researchers I know including one guy that worked on the accelerators on a chip project) is that it’s a promising technology, but always seems about ten years away from being used in practical applications. Kind of like the state of practical quantum computing or fusion power.

The research has come a long way in the past couple of years, but there are still a number of obstacles preventing them from being used in facilities. Namely the quality of the beam they produce is still too poor for stuff like free electron lasers (FELs). Staging and using multiple wakefield accelerators in series also hasn’t been demonstrated convincingly. Staging is a requirement to hit high energies since wakefield has only been shown using small sections so far. For plasma based methods (which achieve the most acceleration of any technique), there are also problems with introducing the beam into the plasma/gas region and then extracting it. Out of all of the problems I think the quality issue will be the most difficult to solve.

The 1974 and 1975 discoveries were at what is now called the SLAC National Accelerator Laboratory, then called the Stanford Linear Accelerator Center, in Palo Alto, CA.

Minor item:

“As particles start to approach the speed of light they slow down due to relativistic effects and loose synchronization with the RF electric field of the cyclotron”

The particles don’t slow down due to relativistic effects. Their acceleration relative to the fixed frame of the device is reduced.

Ah, that makes more sense. The original explanation was tying my brain into knots.

Yeah it’s like when you have helium balloons in your car and they hit the back of your head when you stomp on the gas…. (Not really but if we can drag Moritz into this it will be fun)

I thought it was a rather ‘loose’ description.

You are right, I have phrased this a bit loosely. Will try to fix it.

A fascinating topic that I know next to nothing about. Now I know how I might spend the next couple days.

BTW, the author of that Scientific American article, Sabine Hossenfelder, has a pretty good YouTube channel. She’s one of the few people who seem to consistently make non-emotional, reasoned arguments, which is refreshing. Listening to her would unlikely lead you astray.

> Listening to her would unlikely lead you astray.

Unfortunately, I would recommend taking her opinions with a grain of salt. Sabine wrote a particularly disturbing article about LIGO which was so full of cherry-picked and disingenuous arguments that I am not sure if I trust her on the areas of physics which I am not as familiar with.

Immediately after the discovery of gravitational waves, some scientists raised valid concerns over data analysis. It led to productive scientific debate in the community and was eventually resolved in a way that left most people satisfied. At the time, Sabine wrote an article about the criticism to LIGO and IMO did an OK job and ended by saying that she believed the issues to be resolved.

Bizarrely, a couple of months later she wrote a second article about LIGO where she basically said that the researchers were liars and were making up data so that they could get a Nobel prize. She cherry-picked all of the criticism raised against LIGO after their first detection. Her article managed to leave out all of the valid rebuttals to the criticism (i.e. the reasons why even most of the ardent LIGO critics in the scientific community have now been won over and believe the results) and gave the dishonest impression that the researchers were engaged in some sort of conspiracy to commit fraud. She also made some strange personal attacks against some of the scientists.

I am not an expert in the field, but I know enough about the debate over gravitational waves to see what she was doing and the article left a bad taste in my mouth. It seems like Sabine has an axe to grind when it comes to big science and I would lend a critical eye to anything she writes, especially when you’re not already familiar with the area of physics. For instance, I personally agree with her that we shouldn’t be building the next big collider. However, I have to watch out that I’m not just listening to her because it confirms my existing opinions.

I guess when you’re a physics layperson as I am, it’s hard to not be prone to getting duped. It’s even more difficult when there’s a lot of debate surrounding a subject and you can’t tell who are the cranks, who are the people merely toeing some popular line but have done no work themselves, and who are at least to the best of their knowledge making their arguments/claims in good faith with the data available to them. Recently I’ve read a few instances where a physicist claimed some researchers came to faulty conclusions on some subject matter, perhaps knowingly, that resulted in a Nobel Prize. I didn’t hear about the one from Sabine, in which case she can get added to that list as well. It’s far beyond my abilities to determine the truth one way or another, but I know enough about human nature to know that dishonest science isn’t beyond the realm of possibility, especially when reputations and livelihoods are at stake. Though I readily admit that just because it’s plausible for that to happen, doesn’t mean it has. I also suppose that just because Sabine seems to vehemently argue in favor of going wherever the data leads to, even if the outcome is undesirable, doesn’t equate to her having unshakable integrity free of any hypocrisy.

Thanks for pointing this all out.

Perhaps a slightly better explanation is that as the speed of a particle in a fixed reference frame approaches the speed of light, the amount of energy necessary to accelerate it further goes to infinity.

I won’t go on from there, but the implication, I expect, is obvious.

I picked my wording to reference the frame of the machine and control, rather than the particle or why.

To go more into why without getting too deep, I might say that the more closely the particle approaches the speed of light relative to the rest frame, the larger the portion of the energy transferred from the rest frame to the particle that goes into increasing mass rather than speed (note that I carefully avoided momentum here; that is intentional, so as to avoid the progression to the maths).

Better might be that the more closely the particle approaches the speed of light relative to the rest frame, the portion of the energy transferred from the rest frame to the particle that goes to increasing mass rather than speed goes from insignificant to dominant

As the speed embiggens, inertial mass goes bonkers, got it.

The gluon was discovered at the PETRA accelerator at DESY in Hamburg, Germany. DORIS is a different machine at the same institute.

There seems to be some controversy over whether the PLUTO experiment had already discovered the gluon the year before at DORIS.

https://arxiv.org/ftp/arxiv/papers/1008/1008.1869.pdf

I added PETRA to the table though.

PLUTO and TASSO took different approaches to finding the gluon: PLUTO via the narrow Υ resonance and TASSO via three-jet events, which are more direct evidence of the gluon. Unfortunately, PLUTO didn’t have enough data for a discovery in 1979, so the discovery officially goes to TASSO. The PLUTO data was later confirmed to be sufficient evidence, but too late.

The paper you cited is authored by former members of PLUTO collaboration. ;-)

But leave it as is. The race for the gluon was won “um Haaresbreite”.

I want to build my own Cyclotron.

Will large rare-earth magnets supply the magnetic field I need?

…..hmmmmm!

My dad worked in the 1960s with a brilliant man, Karel (Carl) Svoboda, who built his own cyclotron as a teenager in the 1950s: http://johnpilgrim.net/Karel_Svoboda/ The huge electromagnets and vacuum chamber were still in his basement when we cleaned out his estate last year.

The article glosses over one of the major considerations for particle accelerators: luminosity and current.

Linear accelerators were not built because they were “easier” in any sense; indeed the first really effective particle accelerators were cyclotrons and the first truly “high energy” accelerators were synchrotrons like the LHC. Those date back to the 1950s, which means that the LHC and future similar accelerators do NOT involve any cutting-edge acceleration concept.

The article’s implied pooh-poohing of SLAC, for example, betrays a fundamental misunderstanding of what linear accelerators are good for. For example, the article fails to even mention that you cannot accelerate electrons in a synchrotron like the LHC; the electrons would radiate away energy as fast as you could provide it. Yet the protons and ions used at the LHC are not fundamental particles, and thus produce much noisier and more complicated interactions.

gack.

I had the pleasure of working at the Cornell High Energy Synchrotron Source last year and it was one of the coolest operations I have ever seen. With everyone always scurrying around, it reminded me of a massive ocean liner.

Upon reading about the CERN 100 km accelerator I thought, why not go big? I mean BIG. Take those (or newer) magnets and contract SpaceX to orbit them. There is a better vacuum in LEO than can be made on Earth. In the shade (so they need shades), the temp is perfect for superconducting. In the right orbit, plenty of sun for power. The curvature at 25,000 miles around is very slight. It would take very few magnets. Maybe the same number as the LHC. With SpaceX, the cost should be quite low, especially on a quantity buy.

There is not a better vacuum in LEO than can be made on Earth, and considering that the alignment has to be to a precision of tens of microns, the idea is infeasible. And the number of magnets required would still be very large, because… ah, geez, why do I bother?

This article takes me back. My father was a Physicist at the 88″ cyclotron at Berkeley from the 1960s to 1990s.

Always interesting hearing what he was doing when I was growing up – like alpha particle bit flip simulation for memory chips, analyzing wheat protein for India farming, analyzing ink in Gutenberg Bibles, searching for magnetic monopoles.

My favorite was: he needed U235 to put in the ion source to turn into a plasma to accelerate for an experiment. My father contacted the sister lab in Livermore that designs nuclear bombs, asking for a sample. They sent it inter-office mail in a shielded container. The experiment was run successfully. A while later, Livermore asked if there were any leftovers to return, so Dad let them know it was all used up. “OK thanks” was the reply. He said it was pyrophoric, so highly radioactive U235 ignited when they sandblasted the tungsten ion source to clean it (in a filtered glove box).

You can see E.O. Lawrence memorabilia at the Lawrence Hall of Science above the Berkeley Lab, including the first cyclotron and his Nobel Prize. Outside is a 60 ton cyclotron magnet that was used at UCLA in the 1930s-1960s.

Lawrence Hall of Science used to be one of my favorite museums as a kid. Brings back memories… Haven’t been there in 30 years?