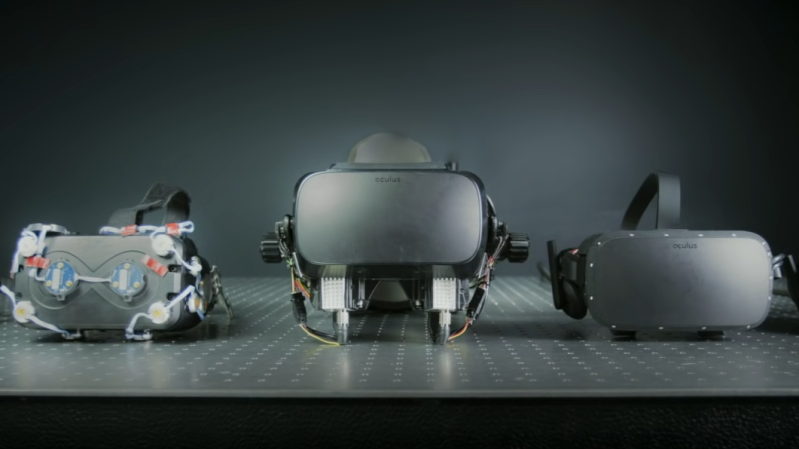

VR headsets are more and more common, but they aren’t perfect devices. That meant [Douglas Lanman] had a choice of problems to address when he joined Facebook Reality Labs several years ago. Right from the start, he perceived an issue no one seemed to be working on: the fact that the closer an object in VR is to one’s face, the less “real” it seems. There are several reasons for this, but the general way it presents is that the closer a virtual object is to the viewer, the more blurred and out of focus it appears to be. [Douglas] talks all about it and related issues in a great presentation from earlier this year (YouTube video) at the Electronic Imaging Symposium that sums up the state of the art for VR display technology while giving a peek at the kind of hard scientific work that goes into identifying and solving new problems.

[Douglas] chose to address seemingly-minor aspects of how the human eye and brain perceive objects and infer depth, and did so for two reasons: one was that no good solutions existed for it, and the other was that it was important because these cues play a large role in close-range VR interactions. Things within touching or throwing distance are a sweet spot for interactive VR content, and the state of the art wasn’t really delivering what human eyes and brain were expecting to see. This led to years of work on designing and testing varifocal and multi-focal displays which, among other things, were capable of presenting images in a variety of realistic focal planes instead of a single flat one. Not only that, but since the human eye expects things that are not in the correct focal plane to appear blurred (which is itself a depth cue), simulating that accurately was part of things, too.
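To give a feel for why out-of-focus blur works as a depth cue, here is a minimal sketch of how blur grows with the mismatch between where the eye is focused and where an object sits. It uses the common small-angle approximation that the angular blur diameter is roughly the pupil diameter times the defocus in diopters. The function name and the 4 mm default pupil are illustrative assumptions, not anything taken from the talk or from a real headset's rendering pipeline.

```python
import math


def defocus_blur_arcmin(object_m: float, focus_m: float, pupil_mm: float = 4.0) -> float:
    """Approximate angular blur (arcminutes) of an object the eye is not focused on.

    Small-angle approximation: blur_angle ~= pupil_diameter * |1/object - 1/focus|.
    The 4 mm pupil default is an assumed, typical indoor value.
    """
    if object_m <= 0 or focus_m <= 0:
        raise ValueError("distances must be positive")
    defocus_diopters = abs(1.0 / object_m - 1.0 / focus_m)
    blur_radians = (pupil_mm / 1000.0) * defocus_diopters
    return math.degrees(blur_radians) * 60.0


if __name__ == "__main__":
    # Eye focused at 1 m; compare objects at various distances.
    for d in (0.25, 0.5, 1.0, 2.0, 10.0):
        print(f"object at {d:>5} m: blur ~ {defocus_blur_arcmin(d, 1.0):.1f} arcmin")
```

Under these assumptions the blur rises steeply for objects closer than the focal distance and stays small for anything far beyond it, which fits the article's point that the near range is where a single flat focal plane falls short.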

The entire talk is packed full of interesting details and prototypes. If you have any interest in VR imaging and headset design and have a spare hour, watch it in the video embedded below.

Things have certainly come a long way since mobile phone-based VR headsets, and displays aren’t the only thing getting all the attention. Cameras have needed their own tweaks in order to deliver the most to the VR and AR landscape.

I would not damage my eyesight further for VR technology. Enough to deal with my natural eyesight problem. I have serious doubts that VR is at all suitable for longer use (3-4 hours a day)

https://www.bbc.com/news/technology-52992675

Forcing one’s eyes to focus on the exact same plane for hours on end would indeed be potentially bad for eyesight, which is precisely why this research is focused (pun unintended) on fixing that issue.

Your linked article is about one anecdote, which itself is about the worsening of a preexisting condition, and is then used to make a claim in a headline that could then be used to infer a larger issue.

Typical media these days.

I use VR ~3-4 hours a day, almost every day, and have for the last 2 years. I can still read markings on 0402 resistors and my long distance vision hasn’t degraded.

I know, an anecdote. Like your linked article. But no big headline from me. Read from it what you will.

…and I don’t use VR at all, but my vision has degraded nevertheless.

It’s called “aging”, the doctor said.

My left eye could focus down to about an inch, and it was great. Then I turned 45 and it went to 5 inches, just like my right eye. I never even noticed a gradual change, so it must have been over a period of mere months that it changed. Soon after that I ended up getting bifocals, but they’re actually stealth trifocals because I can focus out to 9-12 inches when I take them off, but no closer than about 18 inches through the near lenses. (yes, there’s a gap there)

Similarly I’m in my early 30s and have had a screen of some sort in front of my face for much of my life spanning back to my adolescence. And I have been a VR user off and on the past 2 years as well. Despite this my eye doctor has always commented every visit on having absolutely zero abnormal issues with my eyesight other than the basic near-sightedness (which started WAY early in my childhood).

That all said, as someone who wears glasses, I did start investing more money in good ones a few years back, with just about every add-on including blue light blocking, anti-glare, etc. Before VR entered the picture, that had a noticeable effect, virtually nipping eye strain in the bud even after sitting in front of a screen 8 hours a day, 5 days a week just for work (not even counting the countless hours of personal screen use). I use these during VR sessions as well (I haven’t yet jumped on the custom lens train, but when I do, blue light blocking is going to be a requirement), so that may have some merit.

That said, just as these are anecdotes, so is the single user mentioned in the article. And I’d wager there’s an extreme hint of confirmation bias on his end both as a long time VR developer but also going to an eye doctor who is a VR/headset user.

There are several other issues with VR headset ex

“so-called vergence-accommodation conflict. When you view the world normally, your eye first points the eyeballs—vergence—and then focuses the lenses—accommodation—on an object, and then these two processes are coupled to create a coherent picture.

Modern VR headsets achieve the illusion of depth by presenting each eye with a slightly different image on a flat screen. This means that, no matter how far away an object appears, the eyes remain focused on a fixed point, but they converge on something in the virtual distance.”

And the displays are just 20-40 mm from your eyes!

Your eyes are just not evolved for this type of usage.

FYI. Most of the presentation in the post is about solving the vergence-accommodation conflict.

> And the displays are just 20-40 mm from your eyes!

The real focus is around 1 meter for most headsets (it was at infinity for the Oculus DK1), which makes sense since that’s the distance you’re looking at most of the time (arm’s reach). You almost never look at longer distances for more than a few seconds.

Also the problem of vergence-accommodation is not specific to VR headsets but concerns every stereoscopic display. That’s why stereographers have been quite conservative in their usage of the depth budget on 3D movies, by using a comfort zone where the discrepancy between the distance of vergence and accommodation was limited (around 1/3 diopters if my memory serves well).
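To make the comfort-zone idea concrete, here is a minimal sketch that expresses the vergence-accommodation mismatch in diopters (1 / distance in meters) and checks it against a limit. The 1 m focal distance and the 1/3 diopter limit are just the figures quoted from memory in the comments above, and the function names are hypothetical; treat it as an illustration rather than a published comfort model.

```python
def diopters(distance_m: float) -> float:
    """Convert a viewing distance in meters to diopters (1/m); infinity maps to 0."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def va_conflict(vergence_m: float, focal_m: float) -> float:
    """Mismatch (diopters) between where the eyes converge and where they must focus."""
    return abs(diopters(vergence_m) - diopters(focal_m))


def in_comfort_zone(vergence_m: float, focal_m: float = 1.0, limit_d: float = 1.0 / 3.0) -> bool:
    """True if the mismatch stays within the assumed comfort limit."""
    return va_conflict(vergence_m, focal_m) <= limit_d


if __name__ == "__main__":
    # Virtual object distances for a headset assumed to focus at 1 m.
    for d in (0.3, 0.5, 0.9, 1.2, 3.0, float("inf")):
        print(f"object at {d} m: conflict {va_conflict(d, 1.0):.2f} D, "
              f"comfortable: {in_comfort_zone(d)}")
```

With these assumed numbers, the comfortable range works out to roughly 0.75 m to 1.5 m of virtual distance, which shows why objects brought close to the face are the hard case for a fixed-focus display.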

While it’s been known for quite some time that this conflict can provoke visual discomfort, eye fatigue and headaches, stereoscopy has also been used to allow people who suffer from amblyopia to recover stereo vision, even after a long time. Look for “Fixing my gaze”, a book by a neuroscientist who recovered stereo vision this way after half a century.

There’s some recent research on UN-damaging your eyesight…

https://www.sciencedaily.com/releases/2020/06/200629120241.htm

Early headsets of the current VR iteration (og Vive & Rift) did kinda give me blurry vision if I’d play for more than ~1.5 hours, but that was a temporary effect that never lasted for more than a few minutes after taking the thing off, and I feel like it had less to do with my eyes and more to do with my brain going “what’s with all these damn squares, enhance!”. When I played longer than that I would get some sort of vaguely drunk, dizzy feeling when I stopped playing, but that has nothing to do with eyesight (indirectly related though: it’s to do with the brain having gotten used to the low framerate & resolution).

Being lucky enough to own a Valve Index now, my longest play session on that was 7h straight, and I only stopped because I was exhausted af. The moment I took off the headset my brain was like ‘let’s go again!’: no dizziness, no blurry vision, just a desire to go to the toilet and then to bed xD

I’ve been playing around 20 hours of VR a week since the launch of the og Vive, and just to be safe I do have my eyesight checked every few months, but it hasn’t changed in years.

Nice to know you are having it checked regularly while using tech that might have a negative effect – I probably should get mine tested again. I’m not a heavy VR user but have played around for a few longer stints (every time it gets set up I use it a few hours most days before it gets put away again). I really can’t see why it would be any worse than staring at the TV/PC for hours – they might be further away, but it’s another long period of focusing at one distance alone. Still, it’s always good to keep looking when nobody really knows yet if there is a downside.

So excellent to see a lot of this material again – particularly regarding the no-moving-parts focus system. I don’t recall which HaD article (or SIGGRAPH video, perhaps) referenced that a few years ago, but it is truly brilliant and always a delight to watch the animation of stepping through all 64 focal lengths.

There are some people with manual/conscious control over the lenses in their eyes – setting focal length (accommodation) without adjusting gaze direction or binocular adjustments (vergence). (I assumed this was a “hardware feature” in all humans, but apparently it’s not a common thing, perhaps I spent too much time with crossview/parallelview 3D as a kid?) It’s nice to see that they’ve developed systems that effectively work for these people, by stacking image planes and relying on an ophthalmoscope rather than gaze tracking.

It’s been an issue that’s annoyed me since VR was around on the Vive – everything looked fake because there was a single, fixed focus plane at virtually infinite depth. Parallax and occlusion work near-flawlessly in VR headsets, yet the final missing piece of the immersion puzzle was never available on commercial headsets. And yet, with research like this being published… the future looks bright :)

I was wondering that. He says that vergence-accommodation are linked, but I don’t have any problem looking at close objects in VR in focus, because I can control vergence and accommodation individually. Is this not common? That really surprises me.

It’s linked at the unconscious level but it doesn’t mean that you can’t “unlink” it voluntarily. For me it takes a bit of effort to view Magic Eye images, especially when I must alternate between cross-eye and parallel view. In VR or 3D movies it doesn’t take any effort, except for people with eye conditions.

Even the cheapie smartphone holders have it. Ideally you should set it for the furthest you can focus.

Sadly, VR is one of those things that only look good in movies, but in practice are next to useless. Even ignoring the fact that half the population is uncomfortable with first-person-perspective view, even when the screen is not strapped to your face, there are simply no important problems that could be solved with this technology. It’s a gimmick. Even the computer games that seem like a perfect fit have to be dumbed down to the most basic interactions to be feasible, and even then they are barely usable.

My only hope is that there is a little of additional research on the fringes, for things like accurate positioning in space, motion and posture tracking, haptic feedback, etc. — those can be useful on their own, especially if the stupid VR hype makes the sensors cheap and easy to obtain.

I think the most disturbing thing to me about first-person view in video games is that they completely remove your body model from the view. There’s just something strange about looking down and not seeing your hands and feet. I know there are reasons why being able to see yourself might not look so good, but it’s a big reason why I prefer third-person view.

Yeah, it’s a little disconcerting. Though I kind of find the effect enjoyable in VR – a reminder of how cool what I’m doing is, and being see-through is really kind of fun.

“In practice are next to useless”

– There’s quite a few doctors and car designers (and prolly a few more occupations) around the world that would disagree. VR made its way into operating rooms and all sorts of companies years before we got the current headsets. Remember that VR has been around for like 50 years; they’ve had actually decent headsets (but like 50-100k a pop) in certain industries for a while now.

“Even ignoring the fact that half the population is uncomfortable with first-person-perspective view, even when the screen is not strapped to your face”

– Got a source for this? It seems like a wild claim when you consider most games are first person shooters, and nobody has a problem with a first person view if they are in control of the camera.

“there are simply no important problems that could be solved with this technology. It’s a gimmick.”

– Please refer to my earlier doctor/companies comment

” Even the computer games that seem like a perfect fit have to be dumbed down to the most basic interactions to be feasible, and even then they are barely usable.”

– Not sure what you base this on, but it mostly comes across as random hating? The whole point of VR is that you do everything with your actual hands/body, I don’t really see how that translates to dumbed down controls? If anything, ‘flat’ games have way more dumbed down controls: do you open doors with a button in real life? Reload a gun with a button in real life? Pull the pin and throw a nade with a button in real life? VR is the opposite of dumbed down lols. And just because you saw some sub-par controls in VR game A doesn’t mean VR games B to Z have the same issue.

“My only hope is that there is a little of additional research on the fringes, for things like accurate positioning in space”

– The ‘lighthouse’ system used by SteamVR (that launched alongside the og Vive) has been tested by third parties to be precise up to 0.3 millimeter, idk what you got planned for your VR endeavors but that should be good enough to perform surgery.

“motion and posture tracking, haptic feedback, etc”

– These are all possible already. Motion tracking is done by any half decent headset (how else is it gonna make you move around the virtual world when you move in the real world?), posture tracking is possible with either a Kinect or by waiting on Oculus to release the hardware they are making for this, and haptic feedback vests have been a thing for a few years now, mostly used in VR arcades.

“those can be useful on their own, especially if the stupid VR hype makes the sensors cheap and easy to obtain.”

– And here we get to the core of the problem: the fact that you label VR as “the stupid VR hype” says it all really, you’re just hating. VR is anything but stupid, and I wouldn’t really call it a hype either, as a lot of people stay wary (because of people like yourself spreading misinformation because really you just hate the tech for whatever reason so don’t want anybody else to enjoy it?..)

If you’ve made it through the above, my 2 cents:

Just ignore VR, it would be a better use of your time than trying to bash VR with wildly incorrect points. And consider this: if you keep telling people VR is sh*t, then how is it ever going to get better?

You are confusing VR with teleoperation.

They really are not – VR implies the 3D depth spoofing, motion tracking and head-mounted display – all of these things are indeed used in industry/research and have been for a while (the first stereoscopic experiments were astonishingly long ago) – and since the Vive first arrived with those very impressive lighthouses it’s been getting rather a lot more use and attention, because suddenly it’s pretty much free (just a small rounding error in big industry costings).

Teleoperation could be VR, or could not. And it has existed very, very much longer.

It is interesting that so few of the comments are correct, and many are erroneous. Many people do not understand the basic principles behind vision and have not read the history nor studied the science of human vision. VR is intended to be the simulation of vision in real life, but does not always meet that goal. Most certainly images should be sharp from the near point all the way to infinity. Human accommodation and vergence are controlled by two different sets of muscles. The ciliary accommodation muscles are located inside of the eye itself, whereas the extraocular muscles that adjust vergence are called that because they are outside of the eyes. While both normally operate in concert with each other, they are not physically tied to each other. Therefore there is no such thing as an accommodation-vergence conflict. If there were, artificial stereoscopic images, such as 3-D movies as well as VR would not work. The brain compensates remarkably well for most differences, although minimizing these differences makes viewing more comfortable. Correctly done, virtual reality can be an extremely pleasurable visual experience.

Did you watch the video at all?

He is talking about addressing vergence-accommodation conflict most of the presentation.

Funny how you explain that people don’t know what they are talking about and in the next sentence you say that there is no such thing as an accommodation-vergence conflict.

Search Google Scholar for this exact term and you’ll find plenty of papers on the subject; the ones from Martin Banks are a good place to start. This problem has been known for quite some time and it has nothing to do with a supposed physical link between vergence and accommodation.

And it’s not about whether 3D and VR work or not – we know they do – it’s about them provoking visual discomfort, eye fatigue and headaches. It’s been thoroughly studied and we have known for more than two decades under which conditions it happens; it’s even explained in the Oculus documentation.

And while I agree that it’s perfectly possible to generate imagery that doesn’t provoke this conflict, it prevents said imagery from fully replicating what we would see in the real world.

Guys, at least read the post, and/or watch the video presentation (btw it’s a good one), before commenting.

The post writes about it, but does not use the name of the problem (vergence-accommodation conflict). Nearly the whole presentation is about addressing this issue. Also known as accommodation-convergence mismatch, it is a well-known problem of head-mounted displays (HMDs).

Their system still does not seem to handle self-occluding translucent objects, so scenes like a glass bowl of translucent green Jello cubes in multiple orientations would likely generate depth-cue inconsistencies while rendering. Such a system can’t truly replicate a volumetric environment like a Holodeck, but rather uses a shrink-wrapped surface world, which at least is computationally feasible.

Personally, I think headset environments look like a cool medium, but they still give me vertigo after 5 minutes. My new XBOX HMD will likely have a barf-bag attachment upgrade.

;-)

I was going to say that it would also be problematic for reflecting surfaces like mirrors and translucent volumes like clouds, fog, smoke, fire, etc., but judging from the paper, they seem to handle this case with optimized blending.