If you ever thought that being a television camera operator was a simple job, this BBC training film on studio camera operations will quickly disabuse you of that notion.

The first thing that strikes you upon watching this 1982 gem is just how physical a job it is to stand behind a studio camera. Part of the physicality came from the sheer size of the gear being used. Not only were cameras of that vintage still largely tube-based and therefore huge (the EMI 2001 shown has four Plumbicon image tubes along with tube amplifiers and weighed in at over 100 kg), but the pedestal upon which it sat was a beast as well. All told, a camera rig like that could come in at over 300 kg, and dragging something like that around a studio floor all day under hot lights had to be hard. It was a full-body workout, too; one needed a lot of upper-body strength to move the camera up and down against the hydropneumatic pedestal cylinder, and every day was leg day when you had to overcome all that inertia and get the camera moving to your next mark.

Operating a beast like this was not just about the bull work, though. There was a lot of fine motor control needed too, especially with focus pulling. The video goes into a lot of detail on maintaining a smooth focus while zooming or dollying, and shows just how bad it can look when the operator is inexperienced or not paying attention. Luckily, our hero Allan is killing it, and the results will look familiar to anyone who’s ever seen any BBC production from the era, from Doctor Who to I, Claudius. Shows like these all had a distinctive “Beeb-ish” look to them, due in large part to the training their camera operators received with productions like this.

There’s a lot on offer here aside from the mechanical skills of camera operation, of course. Framing and composing shots are emphasized, as are the tricks to making it all look smooth and professional. There are a lot of technical details buried in the video too, particularly about the pedestal and how it works. There are also two follow-up training videos, one that focuses on the camera skills needed to shoot an interview program, and one that adds in the complications that arise when the on-air talent is actually moving. Watch all three and you’ll be well on your way to running a camera for the BBC — at least in 1982.

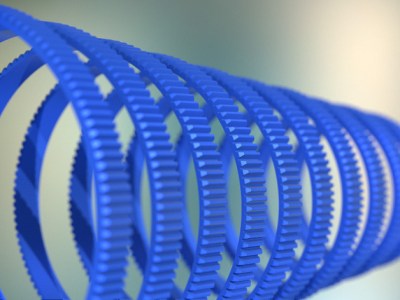

Unwilling to permanently modify his DSLR camera lens and dissatisfied with after-market lens gearing solutions, [Jaymis Loveday] learned enough OpenSCAD to generate gears from 50mm to 100mm in diameter in 0.5mm increments for a snug friction fit. Teamed up with commercially available focus pulling equipment, these lens gears should really help [Jaymis] get professional results from consumer lenses.
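
To make the size range concrete, here is a rough Python sketch of the arithmetic behind such a gear set. It is not [Jaymis]'s OpenSCAD code. The 0.8 module (a common pitch for follow-focus gearing), the 1.5 mm minimum wall, and every function name are assumptions made for illustration, since the post doesn't state them. It lists the bore diameters from 50 mm to 100 mm in 0.5 mm steps and picks, for each, the smallest tooth count whose root circle still leaves that wall around the lens barrel.

```python
import math

MODULE_MM = 0.8    # assumed tooth pitch (module); not given in the post
MIN_WALL_MM = 1.5  # hypothetical minimum ring thickness between bore and tooth root


def bore_sizes(start_mm=50.0, stop_mm=100.0, step_mm=0.5):
    """Bore diameters covering the range in fixed increments, ends included."""
    count = int(round((stop_mm - start_mm) / step_mm)) + 1
    return [start_mm + i * step_mm for i in range(count)]


def root_diameter(teeth, module_mm=MODULE_MM):
    """Root circle of a standard spur gear: pitch diameter minus 2 x 1.25 modules."""
    return module_mm * (teeth - 2.5)


def tooth_count(bore_mm, module_mm=MODULE_MM, wall_mm=MIN_WALL_MM):
    """Smallest tooth count whose root circle clears the bore plus the wall."""
    return math.ceil((bore_mm + 2 * wall_mm) / module_mm + 2.5)


def gear_table():
    """One (bore, teeth, pitch diameter) row per bore size."""
    rows = []
    for bore in bore_sizes():
        teeth = tooth_count(bore)
        rows.append((bore, teeth, round(MODULE_MM * teeth, 1)))
    return rows


if __name__ == "__main__":
    for bore, teeth, pitch_d in gear_table()[:5]:
        print(f"bore {bore:5.1f} mm -> {teeth} teeth, pitch diameter {pitch_d} mm")
```

Under these assumptions, the tooth count and bore size are all a parametric gear module needs as input, which is presumably what makes generating the whole set in one go practical.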
