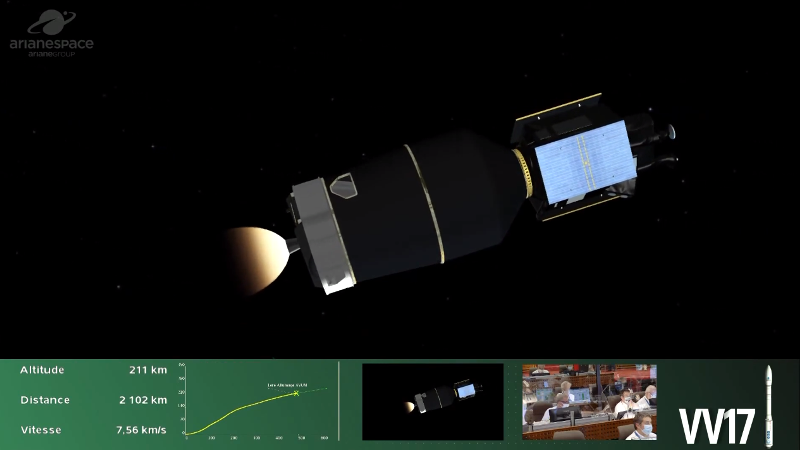

On November 17th, a Vega rocket lifted off from French Guiana with its payload of two Earth observation satellites. The booster, coincidentally the 17th Vega to fly, performed perfectly: the solid-propellant rocket engines that make up its first three stages burned in succession. But soon after the fourth stage of the Vega ignited its liquid-fueled RD-843 engine, it became clear that something was very wrong. While telemetry showed the engine was operating as expected, the vehicle’s trajectory and acceleration started to deviate from the expected values.

There was no dramatic moment that would have indicated to the casual observer that the booster had failed. But by the time the mission clock had hit twelve minutes, there was no denying that the vehicle wasn’t going to make its intended orbit. While the live stream hosts continued extolling the virtues of the Vega rocket and the scientific payloads it carried, the screens behind them showed that the mission was doomed.

Unfortunately, there’s little room for error when it comes to spaceflight. Despite reaching a peak altitude of roughly 250 kilometers (155 miles), the Vega’s Attitude Vernier Upper Module (AVUM) failed to maintain the velocity and heading necessary to achieve orbit. Eventually the AVUM and the two satellites it carried came crashing back down to Earth, reportedly impacting an uninhabited area not far from where the third stage was expected to fall.

Although we’ve gotten a lot better at it, getting to space remains exceptionally difficult. It’s an inescapable reality that rockets will occasionally fail and their payloads will be lost. Yet the fact that Vega has had two failures in as many years is somewhat troubling, especially since the booster has only flown 17 missions so far. A success rate of 88% isn’t terrible, but it’s certainly on the lower end of the spectrum. For comparison, boosters such as the Soyuz, Falcon 9, and Atlas have success rates of 95% or higher.

Further failures could erode customer trust in the relatively new rocket, which has only been flying since 2012 and is facing stiff competition from commercial launch providers. If Vega is to become the European workhorse that operator Arianespace hopes, figuring out what went wrong on this launch and making sure it never happens again is of the utmost importance.

Mixed Up Vectoring

Within hours, Arianespace and the European Space Agency (ESA) had already launched an investigation into the loss of the vehicle. These investigations often take weeks or even months to come to a conclusion, but in this case, the public didn’t need to wait nearly so long. By the next day, Arianespace put out a press release explaining that an issue with the thrust vector control (TVC) system of the RD-843 caused the AVUM to go into an uncontrollable tumble roughly eight minutes after liftoff.

In a call with reporters, Arianespace’s Chief Technical Officer Roland Lagier further explained that investigators believe cables connecting two of the TVC actuators was mistakenly swapped during final assembly of the AVUM. The end result is that the motion of the engine’s nozzle was the opposite of what was commanded by the avionics, and that when onboard systems tried to correct the issue, it only made things worse.

He clarified there was no inherent problem with the AVUM or its RD-843 engine, and that this was simply a mistake which hadn’t been caught in time, “This was clearly a production and quality issue, a series of human errors, and not a design one.” The solution therefore would be more stringent post-assembly checks, and likely changes to the cabling that would make it difficult or impossible to repeat the same mistake in the future.

The ESA still needs to verify the findings of Arianespace’s internal investigation, but it’s rumored that close-out images taken of the AVUM during assembly visually confirm the theory actuators were incorrectly wired. While clearly an embarrassing oversight, being able to attribute the failure to human error means that upcoming Vega launches will likely continue as planned.

This Side Up

Readers who may be experiencing a twinge of déjà vu at this point are likely remembering the Russian Proton-M rocket that failed due to very similar circumstances back in 2013. In that case a worker mistakenly installed several angular velocity sensors upside down, providing the booster’s avionics with invalid data. Unprepared for the possibility of such conflicting positional information, the flight control system attempted to correct the situation by commanding the TVC system to make a series of increasingly aggressive maneuvers.

At 23 seconds after liftoff, the Proton-M had completely inverted itself and was pointing downwards. Unfortunately, the flight termination system was designed so that the first stage engines couldn’t be shutdown before 40 seconds into the flight. This feature was in place to help ensure the vehicle had fully cleared the launch complex in the event of an early abort, but in this case, it meant the flight controllers could only watch helplessly as the rocket dove towards the ground at full power.

Investigators examined the debris and confirmed that the angular velocity sensors in question were clearly labeled with arrows that indicated the appropriate installation orientation. Moreover, they were intentionally shaped in such a way that installing them incorrectly was very difficult. In fact, it took so much force to install them improperly that the mounting plate they were attached to was found to have been damaged in the process.

Despite these clear warnings that something was wrong, the junior technician assigned to the task did not alert his superiors to the problem. The mistake also went unnoticed during inspections made by the technician’s supervisor and a quality control specialist, likely due to the fact that the sensors had passed their electrical connectivity tests. The system could detect when sensors hadn’t been wired properly, but it wasn’t designed to verify the data they were producing.

The Human Factor

While we don’t yet know who was responsible for connecting the TVC system of Vega’s AVUM, it wouldn’t come as a surprise to hear it was another junior technician assigned to what was considered a simple task. Unfortunately, there’s no such thing as a simple task when it comes to building an orbital rocket. Equipment worth hundreds of millions of dollars, and potentially even human life, are on the line when the countdown timer hits zero.

Both of these incidents are reminders that when it comes to spaceflight, even the smallest mistakes can have disastrous consequences. In the wake of the Proton-M failure, the Russian government had to conduct a full review of the manufacturing and quality control process that went into each rocket that rolled off the assembly line. The ESA will surely want to conduct a similar review of how the Vega is being built, though it’s too early to say what changes and modifications to existing procedures they may recommend.

In the end, the European Space Agency’s response will likely depend on how difficult it ends up being to incorrectly wire the TVC actuators. Only then will they know if Vega was brought down by a simple accident or gross negligence.

Humans think they are perfect, never make mistakes. Just look at all the hackaday commenters who think that C is a reasonable programming language, thoroughly ignoring the simple fact that humans have been proven over again that they cannot write proper programs in C, take one look at the mitre cve list. Until humans can get a handle on their own capabilities they should avoid stuff like rockets and nuclear materials so they don’t destroy themselves.

Until Humans Stop always thinking everyone else’s way is the wrong way. YOU might be right. :)

“commenters who think that C is a reasonable programming language” It’s not the languages fault, it’s the lazy people who don’t learn it. Moreover, most all other languages are build from C. Take Python for instance. q

Though I do agree, do we really need to put more crap in space.

“do we really need to put more crap in space.”

Yes, we do. It’s called science and we do it to learn.

We also have to put more crap into space, because a lot of business and some critical safety features count on crap in space.

I think, you could say that a lot of satellites really help ordinary people. And significant number of satellites help to saves lives.

There is also a lot of satellites, that bring people fun and entertainment. And I think they are important as well.

Tell we reach the point where that “crap” impedes future crap in space.

“Humans think they are perfect”

This is patently false. Many procedures are designed around the idea that a mistake may have been made. Unfortunately, this was not one of them.

“Until humans can get a handle on their own capabilities they should avoid stuff like rockets and nuclear materials so they don’t destroy themselves.”

Until we’re perfect we should avoid rocketry and nuclear power sources? How perfectly backwards. You might as well go live in the forest because wiring errors are a cause of houses burning down. We learn from mistakes, we don’t hide from them.

It’s much safer to be a couch potato and buy a new TV every time the remote control wears out.

Remember Murphy:

“If anything can go wrong, it will”.

When testing Terrier anti-aircraft missiles on the USS Norton Sound, we had one failure due to the missile technician installing a wiring plug without first removing the aluminum foil from the connector. The foil was a simple fix to assure no static or false signals would affect the electronics. We had a very limited number of telemetry signals so the error wasn’t caught. This was back in 1961. We haven’t improved people at all since then.

More come to mind: Little Joe II failure due to wiring mix-up which caused roll to be increased to airframe failure rather than being damped although that led to a perfect test of the command module escape system which was the item being tested. Proton rocket with sensors installed upside down. Very spectacular. NASA’s Genesis mission’s sample return capsule where the accelerometer/G-sensor was installed upside down on a PCB, so the capsule never sensed deceleration and, therefore, never sensed reentry and, therefore, never deployed it’s parachute.

The result of that last one. They did recover some of what they wanted to collect, though:

https://www.nasa.gov/images/content/65504main_Genesis1_516.jpg

They still managed to accomplish almost all of Genesis’ science goals, but it involved a lot more work to clean and classify the sample fragments.

> installed upside down on a PCB

No smoke testing after assembly?

This is totally on the designers. The two cables should have never been interchangeable. “Mistake”, “Didn’t catch it in time”, are all blame game slogans. Unless they modified the cables from the original design during assembly the root cause is that the design was defective because it allowed the system to be assembled incorrectly.

This. Exactly what i was going to say.

Second your this. The two cables should have had non-interchangeable connectors *and* different length such that the left one would not reach to fit the right connector. Just elementary design.

In this case I believe the connector is non-reversible, but the pins were wired in the wrong order during fabrication of the cable.

So no harness test jig. Terrible.

I hadn’t heard that detail yet either, but that would be the level of blinding stupidity I would only have expected from Boeing. If they’re doing that with no test jig, the “series of human errors” begins with top-level management cultivating a culture of absolute dumbfuckery.

Not only that, they tried to blame anyone but the design. They somehow understand that humans can make mistakes, but not that designs are made by humans…. who make mistakes. (facepalm)

“He clarified there was no inherent problem with the AVUM or its RD-843 engine, and that this was simply a mistake which hadn’t been caught in time, “This was clearly a production and quality issue, a series of human errors, and not a design one.” The solution therefore would be more stringent post-assembly checks, and likely changes to the cabling that would make it difficult or impossible to repeat the same mistake in the future.”

Poka Yoke FTW!

Just goes to show that no matter how much something is designed to be idiot proof, humans will find a way to screw it up. The hope is that at least a lesson is learned, and the design and/or process is altered to make it even harder to screw up the next time.

Thats the very definition of a design error. First year aerospace engineering will teach you that if they can assemble it wrong, they will. If its critical, it should be designed so no wrong connections will physically work. in and out hydraulic lines have different size connectors etc. If they were able to cross connect it then its a design fault. if they then didn’t pick it up its a quality and testing issue as well, but the root cause is you made something they could connect wrong.

I think there needs to be more testing that has an independently verifiable result, especially in electro-mechanical and electro-optical systems. Many years ago I was tasked with auditing a large project being done by Perkin-Elmer. I talked to a couple of people who had worked on the Hubble Space Telescope. There is a story that after all their assembly and modelling and testing, one of the Old Guys just wanted to “Point the damn thing at the actual starts and take a look”, He pointed out that a section of the roof was removeable and it could be done. As we know they didn’t do that , and there was a calculation error that caused the Actual Stars to be out of focus when the Hubble was finally in orbit. Amazingly they were able to make a corrective lens and have Space Shuttle astronauts install it.

How about running the vectoring systems in final test and LOOKING at the resulting movements??

What does Elon do??

Same story at Hughes space and comm, we pointed it out the window and saw it didn’t focus, were told to not tell anybody. Or so the story goes

> What does Elon do??

Well first of all, he uses his rockets more than once. There’s a reason SpaceX uses the term “flight tested”. This is the kind of error that a rocket can only have once, if you know what I mean.

They’re also designed to be tested on the pad before launch, except for the single engine in the second stage, and that can still get tested before stackng. That’s a big advantage of using restart-able engines.

Need to idiot proof stuff, like use different connectors that can’t be swapped or cable lengths that can’t be swapped, the latter being done on Saturn Vs after the unmanned Apollo 6 test flight.

Given we’ve been here before several times, it seems there is a problem with learning from mistakes – possibly compounded by time constraints – a typical human problem of not reading the literature before doing the fun stuff?

And lest we forget, the cause of the Columbia burning up on reentry was attributed to excessive amounts of PowerPoints such that people stopped paying attention. This makes me think that we need to start sending up techs and engineers on test flights to be 100% certain they triple-check their work. “You worked on this?” “Yep” “Suit-up and get ready to fly”

The space shuttle disasters were due to an attempt to make a re-usable spacecraft in an era without the technology to make a viable re-usable spacecraft.

Instead of a continuous improvement of ever better single use rockets it turned into a far to expensive rust bucket that stiffled innovation for some 30 years until it finally failed spectacularly.

And a lot of people must have known this before the first one was even built.

I wonder what the real story behind that is. I suspect the usual: Smart people get ignored and silenced by management that see personal prestige and money in a project.

Read Feynman’s report on the shuttle disaster. It was all on the NASA management who glossed over safety for sake of speed.

I am now imagining a technician looking at a part to send a rocket into space and they hire someone who can’t look at arrows and physically has to smash the part in place with a hammer to install it, then never questions this process.

if you are assembling a rocket you do not hire people like this. I am imagining the faces of the engineers if they saw the part installed like this. The contortions must have been incredible.

I once watched a man “fix” an 80,000 CNC machine with a 4×4 and 5 minutes with a sledgehammer. He was a previous boss. The doors of the machine would randomly fall off on me into the machine as it was running 8 inch diameter slitting saws after this.

After seeing that it doesn’t confuse me how people this dumb can exist because I’ve seen it in person. The truly frightening thing is thinking there are people like this even assembling our rockets for space. Stupidity can be hired anywhere….frightening.

What surprises me isn’t that a tech was hired who didn’t know what they were doing, but that the job they did wasn’t inspected by anyone else at any stage during assembly/testing. I would’ve thought for aerospace sort of stuff that there’d be multiple occasions for someone/anyone at all to catch these sort of flubs well before launch day, but I guess not.

Ha, hahahaha! You’ve never been in any machine shop, have you?

Sorry for laughing, but QC in America is often a joke. Ask me how I know! Thank God I know parts I reworked were good….If customers knew how much stuff was shipped knowingly bad and up to them to catch, the entire American industry would collapse overnight.

Yes, it’s sad. Some of us do find the flaws, and then management ships the parts anyway. Serious QC is when you need 100% inspection rates.

Fortunately many parts made for aerospace where I worked last had extensive testing done… but depending on the criticality, some things would pass.

It was like monkeys supervising monkeys at times in my career. Most places usually have one or two skilled Machinists who actually catch all the flaws and then the rest of the operation just kind of Moves Like a sentient being with a lobotomy onwards after their work is done, even if others screw it up…

I was always the guy fixing the parts sent back, screwed up by other people I worked with. I’ve seen some ridiculous things.

Lol right you are, I’m an embedded r&d engineer so as you guessed I don’t have experience in a machine shop. I just figured that since the stakes are higher for aerospace they’d fare better than say a consumer manufacturing floor, but I guess not.

The real people who actually keep the world around you running are probably surrounded buy a field of similarly lurching lobotomized humans that effectively just keep going through the motions to keep things running.

The only reason Society doesn’t collapse is those few people who actually understand how everything works or make it happen properly have to be utterly brilliant in the face of so many stupid people around them screwing up everything.

I honestly believe the only reason everything isn’t constantly on fire is because some of those people become truly brilliant and understand human social engineering and account for this in the way they interact with other people to control the stupidity to a degree by anticipating it.

A good tech is hard to find. And even harder to keep (because if they’re that good, they probably won’t be content being a tech). Treat them well and pay them what they’re worth to you…no matter what HR tells you.

Crazy how common it is that HR overwhelmingly determines whether you have monkey’s smashing square pegs into round holes on a task all the way to 20 year experience engineer’s doing inconsequential document changes (because if ANYTHING is wrong, you can say an engineer did it and so it’s obviously not your fault).

Many years ago in the UK, there were TV broadcasts in the wee small hours for the Open University. One such programme narrated the tale of an explosion on board a vessel undergoing repair at a dockyard and the following investigation. Air-powered tools were used below deck, from a ‘ring main’ around the docks. A worker felt unwell so went up on deck for a smoke. His cigarette ‘tasted funny’ and burnt quickly. Then there was an explosion below deck, killing at least one, and injuring more.

It was discovered that paint thinners and rags below deck were unaffected. Eventually it was revealed that an apprentice was asked to set up another air tool so went up to the ring mains supplying gases, and failing to attach his grinder to the compressed air, used an adaptor to plumb into the oxygen line. Recommendations from the inquiry included colour coding the pipework to prevent a repeat of the accident.

Human rated spaceflight gets a bad rap in the public view because of the levels of inspection required to achieve the high reliability that is required. I was a former Engineer working on the Space Shuttle Solid Rocket Booster IEA’s (Integrated Electronic Assembly). The boxes we worked on were routinely refurbished and made flight ready for the next launch. A single solder operation required an Operator (solderer), a QC Inspector, QA Engineer, In-house Customer QA Rep, and DCMA Government QA Inspector – in that order, for every solder joint, 100%. This is what drives the cost and time schedule up so high. Not every operation had this level of scrutiny, it depends on the desired reliability, given the criticality, time constraints, and cost. PFMEA (Process Failure Mode Effects Analysis) is performed to evaluate the potential of failures by rating the likelihood of errors occurring in a process along with the probability of catching the mistake through inspection. This analysis is the tool by which the amount of production controls and/or inspection are determined to mitigate failures. The analysis is often an educated guess at the probabilities involved. A miscalculation here can result in allowing for a much higher occurrence of failure through either lack of adequate process control, inspection, or both. It’s a tough balancing act when schedule and cost are the underlying drivers. As with anything in life, the lowest bid is not always the best option.

When I design boards, I always try for unique connectors. So you can’t plug something onto the wrong connector. If that doesn’t work, I try to make sure that GND and power are on the same pins, and that different power voltages are on different pins (consistent across the design). Als, if the connector isn’t polarised, I assign power and ground so that a reversed connector won’t connect one of them. GND is usually on the lowest pin number in my designs.

This is all stuff I’ve managed to learn through painful experience. It helps me for example, to know where I can find GND. And it keeps me from having to repair boards that were misconnected (assuming…and it’s a big assumption…that the cables were built correctly). But these tricks do help.

Oh, and BTW, the first place I look for problems is connectors: bad crimps, wrong pins, broken wires, pins not being retained, etc.

“15. (Shea’s Law) The ability to improve a design occurs primarily at the interfaces. This is also the prime location for screwing it up.”

From Akin’s Laws of Spacecraft Design, most of which apply to any effort to make things that work.

When you pickup a game that has the Y axis inverted, yet it doesn’t let you change the inversion, yet you let your brain work and reorient so that you can play? Imagine coding systems in such a way, that it recognizes that something is unexpected, lets try to invert those values? I do not code, I know it’s possible, but I imagine the coding would be a tremendous PITA.

Not that much of a problem. But that kind of coding can even be used in the “auto-testing” of the part/assembly, to that it can detect the anomalous situation, and the operator can decide if from then on all reading should be converted.

Instead of adding extra code to invert, it’s better to spend time on a design that can’t be inverted. For example, if something needs to be attached with 6 connectors, make them all different, such that it becomes physically impossible to mix them up.

Yeah then the tech needs six different tools and how do you keep him from using the wrong tool? How do you make sure the right connector is put on the right cable? You are just trading problems for other problems.

You’re trading something that has to be manually done right every time (installing the wiring harnesses correctly) for something that has to be manually done right once (building the wiring harness test jig correctly) and will then catch errors automatically (because no wiring harness leaves the bench unless the test jig gives it the green light). Any errors in building the wiring harness test jig get caught and rectified during the initial development testing, rather than surviving until the 17th flight.

And the tech doesn’t need six different tools — the burden is on purchasing and inventory management to stock six differently keyed connector housings. The tooling for assembling the wiring harnesses with those differently keyed housings is all common. And yes, connector families that offer multiple differently-keyed housings with the same electrical mating components exist for precisely this reason. You’ll find them everywhere in military and aerospace equipment.

This is a massive facepalm moment for anybody who works in aerospace. There are standard procedures for preventing exactly this kind of screwup, and Arianespace and/or their contractors just plain didn’t feel like following them.

A serious amount of fault-first design goes into space software (I have first-hand experience :)), but you have to draw the line somewhere on likelihood of fault types. Gross cross-wiring errors like this are considered unlikely due to it being detectable in commissioning tests… unfortunately people make assumptions about these things and they aren’t all documented or read widely enough, hence this gap between design assumptions and test assumptions.

How can you say that they are “considered unlikely” when crossed cables have already brought down several aircraft? Yet another human who cannot assess risk correctly.

I have been tasked once with such an issue. Motors could move a chute up or down through a set of relays and the position could be measured with a potentiometer. In the past all of them had been wired the wrong way around. So the onus was on the software to fix it and “just work anyway”. This just lead to code which was unmaintainable and would introduce a new bugs all the time. In the end I got away with just reporting an error when the chute seemed to go in the opposite direction. Then a technician had to rewire the components correctly before the machine was shipped.

Wow, the same mistake every RC pilot makes at least once in their career.

space is so hard, we don’t need easy mistakes like this.

This happened to me the first time I tried to load custom firmware on a drone. Didn’t realize the firmware used a different motor mappings than the prepackaged firmware and had it flip over 2-3 times before finally running it through a sequential motor test and seeing the wrong motors spin up.

It seems like it wouldn’t be that hard to run a rocket through a gimbaling test to verify that it’s moving as expected.

“This was clearly a production and quality issue, a series of human errors, and not a design one.”

One could argue that the design team should have used different connectors so things couldn’t be plugged in incorrectly

At this point, we don’t know that they didn’t. They tried to make it fool-proof on the Russian rocket, but ended up underestimating the fool. Could have happened here as well.

That was my first thought as well, but thinking about it … if a tech forced a sensor in place, damaging the plate to which it attaches to just to get it on there and be done with it I have little doubt even using different connectors or length wires would fail to stand a chance of stopping said tech from just splicing/bodging things to get it done. It’s a never ending arms race between idiot proofing and idiots!

Yeah but how do you make sure the correct connectors are installed on the cable? How do detect if they have been swapped in manufacturing? You don’t solve problems by trading them for other problems.

And then there was the 1999 $125 million Mars Climate Orbiter that did a swan dive into Mars after Lockheed used imperial units in its calculations and JPL used metric units in its calculations, and no one realized the difference throughout the time it took the orbiter to reach Mars and then started its program to go into orbit.

I don’t know if any of you are old enough to remember NASA’s space tether mission. The idea was that the shuttle would try to deploy a long cable and see if it generated electricity. They could only deploy the cable about 20 feet or so, if I remember, and the mission was a failure. It turned out that although extensive testing to the deployment mechanism was done pre-flight, the technician who mounted it in the shuttle used too long a bolt, and the unwinding mechanism (which moved back and forth along the cable reel) hit the bolt and stopped after the first pass. For the want of a (correct) bolt, the mission was lost.

OK, my memory sucks. I looked it up. They eventually got it out to 20,000 meters, and the mission was partially successful.

Oops I read it wrong. Only 256 meters were deployed. 20,000 was the target.

Aha! 8-bit overflow!

Ah, come on, what’s wrong with these guys? It’s not rocket sci… never mind.

It’s a prime example of what Ed Murphy originally said.

Do they not have “different color wires” technology?

Italian design: everything is either wine red or tomato red.

It’s not rocket science… Nintendo always seem to know what they are doing when they design connectors. You can’t plug any of their controllers in “the wrong way”. They’re child and idiot-proof.

:)

https://www.imore.com/how-fix-jammed-switch-controller

We need to differentiate seniority and stupidity. If somebody is junior or not if he crams connector incorrectly up to the point he destroys it, he or she is unfit for any mechanical task.