MIDI has been a great tool for musicians and artists since its invention in the 1980s. It allows a standard way to interface musical instruments to computers for easy recording, editing, and production of music. It does have a few weaknesses though, namely that without some specialized equipment the latency of the signals through the various connected devices can easily get too high to be useful in live performances. It’s not an impossible problem to surmount with the right equipment, as illustrated by [Philip Karlsson Gisslow].

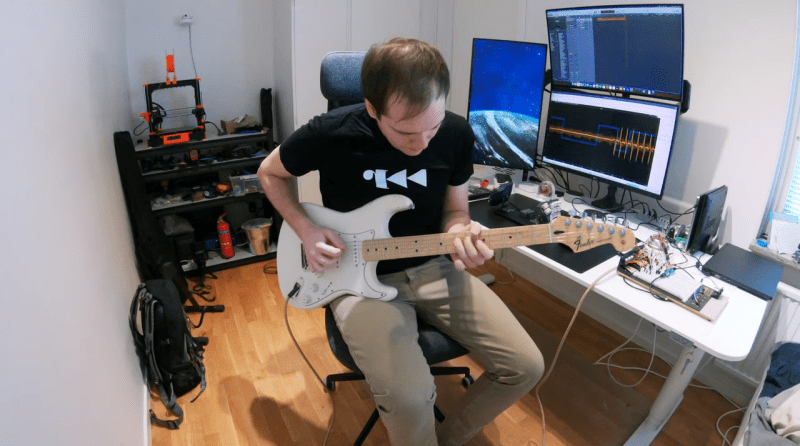

The low-latency MIDI interface that he created is built around a Raspberry Pi Pico. It runs a custom library created by [Philip] called MiGiC, which was specifically built as a MIDI to Guitar interface. The entire setup consists of a preamp that boosts the guitar’s signal up to 3.3V before it is fed to the Pi. This is where the MIDI sampling is done. From there it sends the information to a PC which is able to play the sound back quickly with no noticeable delay.

[Philip] also had to do a lot of extra work to port the software to the Pi, which lacks a lot of the features of its original intended hardware on a Mac or Windows machine. The results are impressive, especially at the end of the video where he uses the interface to play a drum machine via his guitar. And while MIDI is certainly a powerful application for a guitarist, we have also seen the Pi put to other uses in this musical realm.

Thanks to [Josh] for the tip!

Isn’t that a breach of DMCA to publish this kind of stuff? MIDI, unlike CAN, isn’t a libre protocol.

Sure.

What part of the DMCA is he breaching?

Ummm, what? https://www.midi.org/forum/3781-what-is-midi-2-0-s-status-as-an-open-protocol

MIDI is a specification. If you are a member of the MIDI org you are free to use the standard in your implementation. It appears you are not allowed to modify the standard (which is understandable since it is supposed to be a standard specification). Your hardware and software implementing the standard are your business. In this case the person that wrote migic is porting it from PC and MAC to RASPI which seems perfectly fine. If you are a MIDI org member you could implement MIDI on your vacuum cleaner as long as you follow the standard as written.

The issue would be the migic software he is modifying. If I read this correctly, he is the original author of the migic software so of course he is free to do what he wants with it. In this case he simply ported his own software to a new platform. Whether he gives you permission to do so yourself would be up to him. It does not appear to be open source.

s/with no noticeable delay/with 4 to 15 ms latency/

Yes, 15 ms is yesterday in terms of percussive transients in sound.

It is not that fast but it is fast enough for real-time performance. 15ms is not totally out of line compared to any other guitar effects processors. Guitarists often use lots of elements in their processing chains so 15 ms is probably not that alien to them. I am not sure I understand the premise of the article in that the author is attempting to reduce delay while he is porting from PC and MAC to the RASPI Pico. More compact for sure but way less powerful so it would seem hard to improve delay with it.

Tiny room? Check. Unenclosed 3D printer in the corner with odors to breathe in for hours? Check. Really nice RPi-based low-latency MIDI, but I’m not sure that specific type of slow and not exactly safe printer is set up ideally, is all.

Yeah, it’s “bad” until you go outside and take a deep breath of all those lovely smog particles instead.

Go outside? What is this outside?

The post Covid era has really exposed the people who seem to think they can advise people on healthy habits; cast the first stone.

I am using an ESP32 with its stripped-down ESP-NOW protocol to get sub-5ms response time (with some tweaking I can probably get under 1 ms).

I transmit to another ESP32 that is attached to my DAW and have a Python script translate the data to a MIDI channel that I use in Ardour.

Next I want to transmit raw audio from mic/guitar :)

https://github.com/physiii/wireless-midi
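The translation step described above can be sketched roughly as follows. This is not the code from the linked repository: the two-byte packet layout (note number, then velocity, with velocity 0 meaning release) and the function names are hypothetical, chosen only to show how a small received packet maps onto standard MIDI note-on and note-off bytes.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI note-on message (status 0x90 plus channel)."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Build a 3-byte MIDI note-off message (status 0x80 plus channel)."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])


def _check(channel: int, note: int, velocity: int) -> None:
    # MIDI channels are 0-15 on the wire; data bytes are 7-bit (0-127).
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be 0-127")


def translate_packet(packet: bytes, channel: int) -> bytes:
    """Translate a HYPOTHETICAL 2-byte packet (note, velocity) to MIDI.

    Assumption: velocity 0 means the note was released.
    """
    if len(packet) != 2:
        raise ValueError("expected exactly 2 bytes: note, velocity")
    note, velocity = packet[0], packet[1]
    if velocity == 0:
        return note_off(channel, note)
    return note_on(channel, note, velocity)
```

The resulting bytes would then be handed to whatever MIDI output the DAW side exposes; that part depends on the setup and is left out here.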

Wow that story was poorly written. MIDI latency has literally nothing at all to do with the issues described here.

What the guy seems to be doing is playing a note on the guitar, using something like an FFT to figure out the fundamental frequency, and then turning that into a MIDI note to send to other instruments.

The delay comes from sampling the input from the guitar and detecting the note played. The two parts of that delay are the buffer size needed for accurate enough pitch detection and time to perform the pitch detection algorithm.

Notice that neither of those things involves MIDI in any way? That’s because the lead sentence of the article was 100% completely wrong.
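To put rough numbers on the two parts of that delay, here is a minimal sketch. It is not MiGiC's algorithm, which is not public. The 48 kHz sample rate and the "two full periods of the lowest note" rule of thumb are assumptions for illustration, and all function names are made up.

```python
import math


def freq_to_midi_note(freq_hz: float) -> int:
    """Nearest MIDI note number, using the A4 = 440 Hz = note 69 convention."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def buffer_latency_ms(num_samples: int, sample_rate_hz: float) -> float:
    """Time needed just to fill a buffer of num_samples."""
    return 1000.0 * num_samples / sample_rate_hz


def min_buffer_samples(lowest_freq_hz: float, sample_rate_hz: float,
                       periods: float = 2.0) -> int:
    """Samples needed to capture `periods` cycles of the lowest note.

    Assumption: the detector needs about two full periods; real
    detectors may need more or manage with less.
    """
    return math.ceil(periods * sample_rate_hz / lowest_freq_hz)


if __name__ == "__main__":
    low_e_hz = 82.41  # open low E string on a standard-tuned guitar
    n = min_buffer_samples(low_e_hz, 48000)
    print("MIDI note:", freq_to_midi_note(low_e_hz))
    print("buffer:", n, "samples,", round(buffer_latency_ms(n, 48000), 1), "ms")
```

Under these assumptions the buffer alone costs more than 20 ms for the lowest string before any computation starts, which is why low notes are the hard case for this kind of interface.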

Exactly. Also, MIDI is used in live performance everywhere by professionals. Not very often on guitar though.

MIDI is not a problem itself, the latency depends on all the elements you place in the loop. Each of them has an intrinsic delay, and they all add up in the end. It’s not a MIDI problem, it’s a hardware problem. Here the guitar MIDI sensor (as a whole) is the major source of delay.
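For scale, a classic 5-pin DIN MIDI link runs at 31,250 baud with one start bit and one stop bit around each 8-bit byte, so the wire time of a message is simple arithmetic. The sketch below shows only that calculation. The function name is made up for illustration, and a USB connection would have different timing.

```python
MIDI_BAUD = 31250        # bit rate of a 5-pin DIN MIDI link
BITS_PER_BYTE = 10       # 8 data bits plus start and stop bits


def wire_time_ms(num_bytes: int) -> float:
    """Time to clock num_bytes over a DIN MIDI link, in milliseconds."""
    return 1000.0 * num_bytes * BITS_PER_BYTE / MIDI_BAUD


if __name__ == "__main__":
    # A note-on message is three bytes: status, note number, velocity.
    print(wire_time_ms(3), "ms per note-on")
```

A three-byte note-on takes just under a millisecond on the wire, which is small next to the 4 to 15 ms quoted earlier in the thread.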

And it’s only a problem if it’s noticeable.

Even a 15ms total round trip latency is definitely noticeable while not insurmountable for most musicians.

However, I believe the 15ms is only the A to D and note detection algorithm; there will be additional latency caused by the virtual instrument and D to A.

Sub 10ms total round trip latency is a good target / rule of thumb, though I know plenty of recording artists that want this closer to 4ms.
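The budget argument can be written down as a small sketch. The 10 ms target and the 4 ms and 15 ms detection figures come from this thread; the 48 kHz output sample rate and the function names are assumptions for illustration only.

```python
def remaining_budget_ms(target_ms: float, stage_latencies_ms: dict) -> float:
    """Latency left over after all known stages are subtracted from the target."""
    return target_ms - sum(stage_latencies_ms.values())


def max_output_buffer_samples(remaining_ms: float, sample_rate_hz: float) -> int:
    """Largest output buffer (in samples) that still fits the remaining budget."""
    if remaining_ms <= 0:
        return 0  # the target is already missed before the output stage
    return int(remaining_ms * sample_rate_hz / 1000.0)


if __name__ == "__main__":
    for detection_ms in (4.0, 15.0):
        left = remaining_budget_ms(10.0, {"detection": detection_ms})
        print(detection_ms, "ms detection leaves", left, "ms, so at most",
              max_output_buffer_samples(left, 48000), "output samples")
```

With 4 ms of detection there is room for a few hundred samples of output buffering at the assumed rate; with 15 ms the 10 ms target is missed before the virtual instrument even starts.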

Excellent seeing the lengths gone to to keep delay as low as possible.

While I have heaps of DSP kit and way too many digital pedals – huge preference for analog unless it’s echo/delay, then any digitally induced delay is lost. Any small delay loses phase information, which when mixing using analog goodness, matters, at least to people who imagine it does like me…

If you are mixing multiple instruments, phase delay is not a thing. If anything, trying to make something sound like it was coming from multiple instruments when in reality it was from a single source will sound bad. My guess is that your concern over phase is from something you heard somewhere. In real life, it is almost never important.

If you want to reproduce it you will have to buy MiGiC, this is not open source or anything.

Buying MiGiC won’t help you reproduce this project. The platform part is open source and available on Github. The MiGiC part is a special build for ARM that is unreleased. The author is looking into if/how to publish a binary release for makers specifically for the Pi somewhere.

The platform code is open source and published on Github. The author is investigating if/how to make a binary release of the lib for makers using Pico.

I take it that it cannot transmit pitch bend, just the note value at the start of the note, which remains until note off. Correct?

The description in the article gets the direction of the one-way flow wrong. MIDI to guitar would be a player guitar, including an acoustic one, played by solenoids and drivers fed by MIDI. Someone featured here made such an instrument.

Right, it figures out what the note is, and there’s no volume info either. So if you bent a string it would just detect a new note. I think it would be easier to just learn how to play a keyboard.
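For reference, MIDI itself can carry a bent string as a pitch bend message rather than a new note. The sketch below shows how that encoding works in general; it says nothing about what MiGiC does or could do. The ±2 semitone bend range is an assumption (a common synth default, but it is configurable), and the function names are made up.

```python
import math

PITCH_BEND_CENTER = 8192   # 14-bit value meaning "no bend"
PITCH_BEND_MAX = 16383     # largest 14-bit value


def bend_semitones(detected_hz: float, note: int) -> float:
    """How far detected_hz sits from the nominal pitch of a MIDI note."""
    note_hz = 440.0 * 2 ** ((note - 69) / 12)
    return 12 * math.log2(detected_hz / note_hz)


def pitch_bend_bytes(channel: int, semitones: float,
                     bend_range: float = 2.0) -> bytes:
    """Encode a bend as a 3-byte pitch bend message (status 0xE0 plus channel).

    Assumption: the receiver's bend range is +/- bend_range semitones.
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    value = PITCH_BEND_CENTER + round(semitones / bend_range * 8192)
    value = max(0, min(PITCH_BEND_MAX, value))  # clamp to 14 bits
    # The 14-bit value is sent as two 7-bit data bytes, low byte first.
    return bytes([0xE0 | channel, value & 0x7F, (value >> 7) & 0x7F])
```

A tracker that wanted to follow bends would keep the original note held and stream these messages as the detected frequency drifts, which needs pitch estimates finer than a semitone.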