Have you ever noticed that people in old photographs look a bit weird? Deep wrinkles, sunken cheeks, and exaggerated blemishes are commonplace in photos taken up to the early 20th century. Surely not everybody looked like this, right? Maybe it was an odd makeup trend, or just a fashionable look back then?

Not quite. It turns out that the culprit here is the film itself. The earliest glass-plate emulsions used in photography were only sensitive to the highest-frequency light, that which fell in the blue to ultraviolet range. Perhaps unsurprisingly, when combined with the fact that humans have red blood, this posed a real problem: the reddish tones of skin and lips reflect relatively little blue light, so they registered much darker on the plate than they appear to the eye. While some of the historical figures we see in old photos may have benefited from an improved skincare regimen, the primary source of their haunting visage was that the photographic techniques available at the time were simply incapable of capturing skin properly. This led to the sharp creases and dark lips we’re so used to seeing.
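To get a rough feel for the effect, here is a minimal Python sketch that compares a blue-only emulsion with a modern-style panchromatic response. The colour values are made-up 8-bit samples, and the "record only the blue channel" model is a simplifying assumption, not a calibrated model of any real plate.

```python
# Toy model: a blue-sensitive plate records only the blue channel,
# while a panchromatic film responds to red, green, and blue.

def blue_sensitive(rgb):
    """Grey level recorded by an emulsion that only sees blue light."""
    _, _, b = rgb
    return b

def panchromatic(rgb):
    """Grey level from the common Rec. 601 luma weighting."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)

# Hypothetical 8-bit colours, chosen only for illustration.
samples = {
    "skin": (224, 172, 150),
    "lips": (180, 80, 90),
    "white collar": (240, 240, 240),
}

for name, rgb in samples.items():
    print(f"{name:13s} blue-only={blue_sensitive(rgb):3d}  "
          f"panchromatic={panchromatic(rgb):3d}")
```

In this toy model the skin and lip samples come out darker on the blue-only "plate" than under the panchromatic weighting, while the neutral white collar is unchanged.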

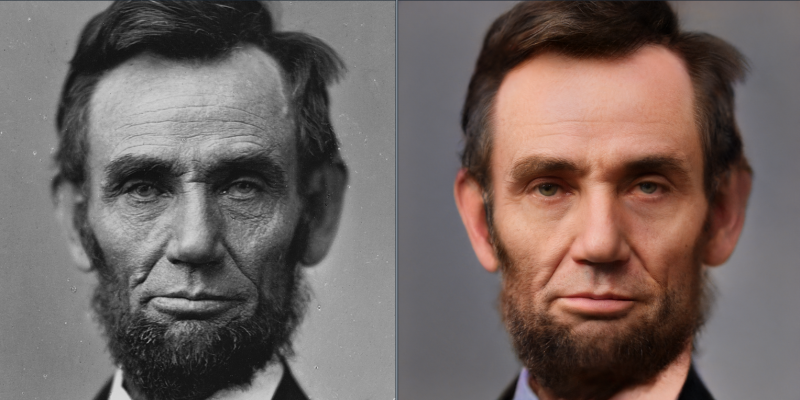

Of course, primitive film isn’t the only thing separating antique photos from the 42 megapixel behemoths that your camera can take nowadays. Film processing steps had the potential to introduce dust and other blemishes to the image, and over time the prints can fade and age in a variety of ways that depend upon the chemicals they were processed in. When rolled together, all of these factors make it difficult to paint an accurate portrait of some of history’s famous faces. Before you start to worry that you’ll never know just what Abraham Lincoln looked like, you might consider taking a stab at Time-Travel Rephotography.

Amazingly, Time-Travel Rephotography is a technique that actually lives up to how cool its name is. It uses a neural network (specifically, the StyleGAN2 framework) to take an old photo and project it into the space of high-res modern photos the network was trained on. This allows it to perform colorization, skin correction, upscaling, and various noise reduction and filtering operations in a single step, with remarkable results. Make sure you check out the project’s website to see some of the outputs at full resolution.
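The idea of "projecting" a photo into a generator's output space can be sketched with a deliberately tiny toy. Everything below is hypothetical: `generator` is a one-parameter stand-in for a network like StyleGAN2, and `degrade` assumes the antique plate kept only the blue channel. It is not the project's code, just an illustration of searching for the latent value whose degraded output matches the old observation.

```python
# Toy "projection": find the latent z whose generated colour, once degraded
# to a blue-only reading, matches the antique observation.

def generator(z):
    """Hypothetical 1-D 'generator': maps z in [0, 1] to an RGB skin tone."""
    dark, light = (90, 60, 50), (240, 200, 180)
    return tuple(d + z * (l - d) for d, l in zip(dark, light))

def degrade(rgb):
    """Assumed antique-film model: only the blue channel is recorded."""
    return rgb[2]

def project(observed, steps=200, lr=1e-5):
    """Gradient descent on z to minimise (degrade(generator(z)) - observed)**2."""
    def loss(t):
        return (degrade(generator(t)) - observed) ** 2

    z, eps = 0.5, 1e-4
    for _ in range(steps):
        # Central-difference estimate of d(loss)/dz.
        grad = (loss(z + eps) - loss(z - eps)) / (2 * eps)
        # Step downhill and keep z inside the generator's valid range.
        z = min(1.0, max(0.0, z - lr * grad))
    return z

z = project(observed=150)    # 150 = hypothetical grey level from the old photo
restored = generator(z)      # full-colour guess consistent with that grey level
print(z, restored)
```

The red and green values in `restored` come entirely from the generator's prior rather than from the observation, which is essentially the point several commenters below raise about predicted colour.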

We’ve seen AI upscaling before, but this project takes it to the next level by completely restoring antique photographs. We’re left wondering what techniques will be available 100 years from now to restore JPEGs stored way back in 2021, bringing them up to “modern” viewing standards.

Thanks to [Gus] for the tip!

It’s not restoring as the info never was there in the first place. Now this is called enhancing!

The fun fact is AI predicts color, but from paintings and the few remaining autochrome pictures we know the color palette in clothing and buildings was way more colorful than AI would have us believe. Also take a look at the pictures made by Prokudin-Gorski to see that peasants always wore very bright colors. Way more than we do now with our pale blue jeans.

Let’s not forget it’s an alteration of historical evidence and could in the long run be seen as the real thing. The question is: does it matter?

I think the colour ‘prediction’ is the worst aspect of this – in each case it’s notable that the sibling has been (manually) chosen to have a skin tone that they want the image to have, implying that little comes from the original (understandably so as the red component is lacking).

In several of the images, the clothing is miscoloured too – white collars turn skin-toned.

I also agree that this is _not_ restoring. It’s more akin to asking an artist to draw a picture of the person from a photo.

Not even enhancing, but re-imagining.

And the colors were never that bright until the invention of synthetic dyes for clothing. Paints and photographic emulsions could use compounds which provided vibrant colors, but wouldn’t stick to fabric or would have been extremely toxic to use. The early color photographs were a little bit embellished on that point.

Synthetic dyes started appearing around 1858, so roughly in step with the rise of photography, and certainly before the invention of the dry plate gelatin process.

We assume the world was dull before colour photography came along, but we are swayed by the fact most photos are B&W. Paintings don’t show that, and if we look at the very early colour photography pioneers such as Sergey Prokudin-Gorsky, we see a world full of vibrant colours.

https://twistedsifter.com/2015/04/rare-color-photos-of-the-russian-empire-from-100-years-ago/

Wondering if the problem is being approached the wrong way. Instead of a detailed analysis of how photographs degrade.

An interesting aside to the colouring-in problem is that the original art deco style was supposed to be pastel coloured buildings. Thanks to B&W photography sending images around the world, this quickly changed to the all-white style of buildings we now associate with art deco.

Interesting, but perhaps not grass-roots DIY hacking. On this website, I still enjoy the small-time player hacks. These pro endeavours and tech demos create provocative posts, but too much pepper spoils the Hackaday soup.

+1

There do seem to be more write-ups of pro level stuff these days. I like it though as it breaks things up a bit. They’re quite nicely written too.

Used to read Popular Mechanics, but they’ve gone far too far the other way and everything is dumbed down. Some articles have obvious errors (I don’t look for them), and others seem to be written from peculiar sensationalist perspectives.

Or more simply, from my perspective: Hackaday kicks Popular Mechanics’ butt.

I’m a professional photographer and historical techniques expert. In this process I see only an Instagram beauty filter applied to high-detail images.

Do the scientific thing in this situation: get one of their original images, apply Instagram’s beauty filter, and show us how it compares to the results shown here.

+1

I have some plate photos of my grandfather’s family from about 1895 and the detail is extraordinary – down to the weave of the cloth in their clothes and leather grain in shoes. Clearly the guy behind the shutter took great pains to get it exactly, perfectly correct. Applying the same “beauty” filters as this article does a great disservice to the painstaking craftsmanship of the original by removing detail and turning Abe into another Insta prima donna, of which we have a superabundance already.

Disturbingly, most talking-head television “news” these days is applying the same effect in real time – making the distinction between an AI commentator and a real one almost nil, beyond the AI version likely being more literate.

I recall being in high school and having shot hundreds of rolls of 35mm film in different grades and trying different things. I found a 4×5 view camera in the photography lab’s store room. No one had touched it in many years. I was eager to play with it. One of the most vivid memories I have from the film photography days was taking that negative to the one big old enlarger we had in the corner, which held the negative between glass plates you had to carefully clean. It was another piece no one had used in years, and I had a grain focus scope to focus the thing with. You get used to 35mm and how sharp things get before they fall past the peak and get fuzzy on the other side. This blew my socks off because I kept turning the focus knob and it just kept getting better. Amazing detail compared to 35mm.

People who tend to move, I can see them not making the sharpest images, but an old photo of something stationary, with decent optics and a big negative, the resolution would be hard to beat.

« We’re left wondering what techniques will be available 100 years from now to restore JPEGs stored way back in 2021, bringing them up to “modern” viewing standards. »

I added an entry in my Google Calendar for April 13th, 2121, telling myself (or I guess my AI-merged consciousness or *something*), to go on the Internet (if Vtubers have not replaced it) and come back here to answer this very comment with some information about what we now do with old JPEGs.

Hopefully future me has more interesting stuff to say than present me.

Arg, it’s not 2121 yet, but mentioning Vtubers already makes this comment dated as heck…

There is already e.g. https://topazlabs.com/jpeg-to-raw-ai/

In a way it is a quite simple problem for JPEG that has been compressed once, as there aren’t that many different JPEG encoders in existence, they are fully deterministic and the encoding parameters are available from the file. But for a repeatedly compressed file there will be compression errors of compression errors.
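A tiny sketch of why repeated compression is harder to undo, using plain quantization as a stand-in for what a JPEG encoder does to each DCT coefficient. The coefficient and step sizes are made-up numbers, and a real encoder involves far more than this single rounding step.

```python
def quantize(value, step):
    """Round a coefficient to the nearest multiple of `step` (the lossy part)."""
    return round(value / step) * step

original = 37.0                   # hypothetical DCT coefficient
once = quantize(original, 10)     # first save, quantization step 10
same = quantize(once, 10)         # re-saved with the same step: nothing new is lost
twice = quantize(once, 7)         # re-saved with a different step: errors compound
direct = quantize(original, 7)    # what step 7 alone would have produced

print(once, same, twice, direct)
```

With a single known step, the set of originals that could have produced a stored value is well defined. After a second pass with a different step, the stored value no longer matches what either step would have produced on its own, so the first round's rounding error is baked in.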

I had to look up what that Vtuber thing is.

https://en.wikipedia.org/wiki/Virtual_YouTuber

Reminds me of the Blue Man Group, Gorillaz, and that pop group with those helmets on (forgot their name, can’t bother to look it up). And there are probably others from decades before.

So what else is supposed to be new?

Daft Punk?

Or that 1980s group Devo?

I’m very surprised you hadn’t at least heard the name/concept of Vtubers mentioned *somewhere*.

It really had quite the cultural impact, on Youtube and Twitch, during the isolation/stay-home days of Covid-19 in 2020, where people watched a lot of Vtuber content.

It’s a bit like not having heard of Gangnam-Style in 2013 or the Ice Bucket Challenge in 2016…

Max Headroom has got to be the canonical one, surely. Blipverts can’t be far off now…

“Max Headroom”?

Surprised you haven’t heard of it…

“primitive film isn’t the only thing separating antique photos from the 42 megapixel behemoths that your camera can take nowadays”

Primitive perhaps, but not necessarily low resolution. One of the most popular methods of the day, wet plate collodion, consisted of silver nitrate suspended in a liquid solution. Its resolution matches and surpasses modern sensors because basically a “pixel” is a single molecule of silver nitrate. Because of this, modern scans often do not do justice to the amount of detail contained.

However they are tricky to make and they are not a negative so they cannot be re-produced.

The limiting factor of those old photographs was the lenses, not the size of the silver grains. Now, as ever, the larger the size of the image sensor/negative the better the quality of the results. The trade-off is in the size of the camera and weight/portability of the system.

Modern smart phones have tiny image sensors, but use lots of image processing and extremely high quality fixed lenses to compensate. There is however still a market for medium format and 35mm digital cameras purely because of the extra quality of the results and the flexibility that exchangeable lenses offer. Large format is, for the most part, impractical in the digital age because of the economics of manufacturing sensors on fixed size wafers with known defect rates.

Probably the bigger limiting factor was the length of exposure required. Even today if you use a 40 MP sensor it is very sensitive to even small movement. With exposure times sometimes in minutes, the camera needed to be clamped down and in terms of subjects they had to remain still for an extended period.

Modern camera lenses are a miracle of modern material science, but a lot of that is concentrated on wide or long lenses and reducing them down to a usable size. Old lenses can be remarkably good, especially when mounted on a large camera where the size of the lens is not a problem. Also, black and white is less of a problem for lenses because they don’t have to worry about things like fringing.

I agree on the resolution. When a 35mm slide is magnified enough, you can see details even after the grain shows clearly. If the slide is duplicated with a full frame digital camera at 1:1, the pixels will show way before you can see any grain. Another plus with film is that the exposure/density relationship is continuous instead of the stepped nature of digital.

When it comes to wet plates, you are slightly wrong. A Daguerreotype is made on a metal plate and will produce a very weak negative image that will show up as a positive under correct lighting. It is copyable by rephotography. The wet plate is made on glass and will produce a normal, transparent negative image that is just as easy to print as any nitrate or acetate based negative. Dry plates act the same, with the difference of being coated with a gelatin based silver halide emulsion instead of mixing the halide with collodion.

To me, the big difference is that film grain looks nice, while pixels or compression artifacts are ugly.

Really a molecule, or a small crystal?

Grain in film are clumps of silver nitrate, not individual molecules. This is why different developers give you different grain – they influence the way silver nitrate forms clumps.

Bigger clumps catch more light, which means that more sensitive film generally looks more grainy.

Collodion or wet plate has molecular resolution. It’s grainless. And because of this you need a lot of light to register an image.

Wet plate collodion could produce negatives. The image of Lincoln is literally from a wet plate collodion negative.

The Lincoln picture is made from a collodion wet plate negative.

Are we just not going to talk about how one side of Lincoln’s collar is now his skin?

Nice catch!

AI knows better silly hu-mon

Make them MOVE ! https://www.myheritage.com/deep-nostalgia

That’s a bit chilling, make your ancestors look like they’ve forgotten why they came into the room.

But will future generations know that I could wiggle my ears or flare my nostrils?

Colourizing old photos seems to reduce everyone to the same weird colour of ham…

Well, I think that just looks like a retouched Charlton Heston; the new image (can’t call it a photo) has lost all the character of the original. Step backwards for me. Give an AI an airbrush, and end up with a Teletubby.

And the whole right side seems out of focus and seems to be lacking huge detail in the hair and beard. Where did the fuzzy eyebrows go?

Am I the only one who wondered if Lincoln really needed a nose reduction?

And why in the above picture his collar is coloured differently on each end, one side being flesh-toned.

I’m wondering if it would make more sense to just take pictures of modern people with old camera technology and use *that* as the training data. Then you can compare modern photos and “historic” photos of the same person to predict *exactly* the impact of the technology.

The most interesting takeaway I saw here is how the original photos were almost on ultraviolet film. I had thought the black and white was more or less an average of the visible spectrum. Looks like that was an unwarranted guess on my part. I wonder if there are any old photos that show details that wouldn’t register at all to the human eye, like how some flowers are ultraviolet colored.

+1 same here. The whole ‘sibling’ concept seems a bit silly (IMHO) – doesn’t seem you’re really enhancing an original at that point, and just ‘playing’ and creating a new image that kind of looks like the original person – but is something/someone different at that point, and seems to have 0 ‘historical’ value at that point.

These sorts of things always get attention as they trip people’s “novelty detectors”, but in the end most of them end up gathering dust in the digital effects bin because almost nobody can use them well enough to have the result not look filtered or off in some way. Remember the faked HDR fad a while back and the awful mess people would make of images with it? I am glad people grew out of that delusion. If your tool marks are obvious, but not deliberate, then the tool is dictating the end result and you have mastered nothing.

Really relatable. I just started my own travel blog and then Covid hit and I could not travel anymore from my country. No travel planning for me at all 😦