With the advent of super powerful desktop computers, many developers make use of some sort of virtual or pseudo-virtual machine (VM). We run Windows in a VM and do kernel development in a VM, too. If you are emulating the same kind of computer you are on, the process is simpler. It is also possible to run, say, ARM code on an x86 (or vice versa), though typically with slower performance than running natively. QEMU is probably the best-known program that allows a CPU to run code targeting a different CPU, but by default it targets desktop, laptop, and server-class machines, not tiny embedded boards. That's where xPack QEMU Arm comes in. It allows you to run and debug embedded Cortex-M devices in an emulated environment on a host computer.

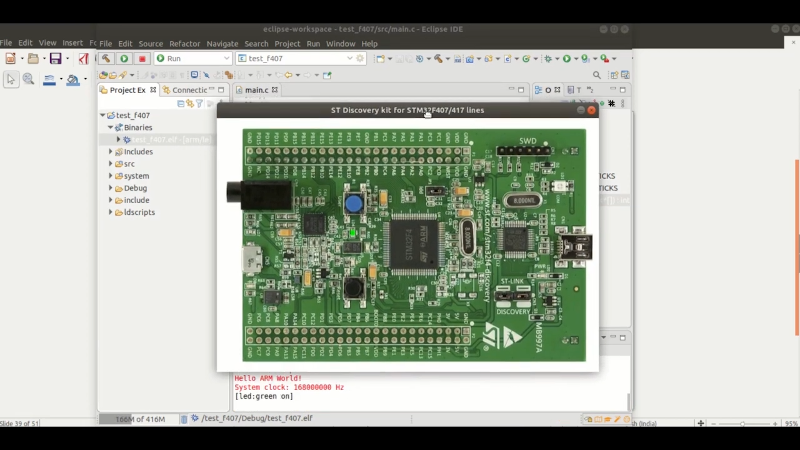

The tool supports boards like the Maple, which suggests it should also handle the Blue Pill, along with popular boards such as the Nucleo, some Discovery boards, and several from Olimex. They have plans to support several popular boards from TI, Freescale, and others, but there's no word on when that will happen. You can see a decidedly simple example from [EmbeddedCraft] of blinking a virtual LED in the video below, although you might want to mute your audio before playing it.

Of course, there are limitations. You don't get the M4 floating-point instructions, for example. The interrupt handling is reportedly not very high-fidelity. You can write debug messages to a UART, or you can use semihosting to write to a file descriptor on the host computer.
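For readers who haven't used semihosting: on Cortex-M, the firmware executes a `BKPT 0xAB` instruction with an operation number in r0 and a pointer to an argument block in r1, and the attached debugger or emulator carries out the request on the host. The C sketch below assumes a GCC-style toolchain and uses two operations from Arm's semihosting specification, `SYS_OPEN` (0x01) and `SYS_WRITE` (0x05). The host-side stub and its `last_op`/`last_args` variables are hypothetical, there only so the argument marshalling can be exercised on a PC. On real hardware with no debugger attached, the `BKPT` faults instead.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Operation numbers from Arm's semihosting specification. */
enum { SYS_OPEN = 0x01, SYS_WRITE = 0x05 };

#if defined(__ARM_ARCH_PROFILE) && __ARM_ARCH_PROFILE == 'M'
/* Cortex-M trap: op in r0, argument block in r1, result comes back in r0. */
static int semihost_call(int op, void *args) {
    register int r0 __asm__("r0") = op;
    register void *r1 __asm__("r1") = args;
    __asm__ volatile("bkpt 0xAB" : "+r"(r0) : "r"(r1) : "memory");
    return r0;
}
#else
/* Hypothetical host stand-in for testing: records the request instead of
   trapping, and answers the way a debugger would on success. */
static int last_op;
static uintptr_t last_args[3];
static int semihost_call(int op, void *args) {
    last_op = op;
    memcpy(last_args, args, sizeof last_args);
    return op == SYS_OPEN ? 3 : 0;  /* fake handle 3; SYS_WRITE returns bytes NOT written */
}
#endif

/* Open a host file; the special name ":tt" means the debugger console.
   Mode 4 corresponds to fopen mode "w". Returns a handle, or -1 on error. */
static int semihost_open(const char *name, int mode) {
    uintptr_t args[3] = { (uintptr_t)name, (uintptr_t)mode, (uintptr_t)strlen(name) };
    return semihost_call(SYS_OPEN, args);
}

/* Write len bytes to a host handle; returns 0 when everything was written. */
static int semihost_write(int handle, const void *buf, size_t len) {
    assert(buf != NULL || len == 0);
    uintptr_t args[3] = { (uintptr_t)handle, (uintptr_t)buf, (uintptr_t)len };
    return semihost_call(SYS_WRITE, args);
}
```

On the target, `int h = semihost_open(":tt", 4);` followed by `semihost_write(h, "hello\n", 6);` should print to the debugger's console, provided semihosting is enabled in the emulator or debug probe. How to enable it is tool-specific and not shown here.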

The code is made to work with Eclipse, although we bet it will work with other IDEs, too.

This isn't the only time we've seen chips rebuilt virtually, although the 6502 work below is a transistor-level simulation and this is closer to hardware emulation. Then again, the 6502 isn't exactly running at 100+ MHz like some ARM processors do now. It's more like 2 MHz.

https://floooh.github.io/visual6502remix/ is a neat virtual 6502 example if you want to see it. Check Help -> About for a list of dependencies used in that project (lots of good stuff in there), the two most important being the original data sets from visual6502, and a C re-implementation of the transistor-level simulation, called perfect6502 and located at https://github.com/mist64/perfect6502.

This QEMU needs to support RPis, RP2040s, Arduinos, and Teensies, which means actual ARM M4 or M7 chips and the like, and it also needs to handle a wide assortment of other attached hardware chips. You have to start somewhere. Let's add JTAG and hardware VMs to really help with microcontrollers as well.

I have poked around with nRF52 emulation using Jumper (an emulator), and it worked better than expected. If I had found it a year earlier, it could have saved me a lot of time…

https://medium.com/jumperiot/how-we-found-a-bug-in-nordics-sdk-using-jumper-s-emulator-55e5d5e00674

It is hard to simulate a modern-day *microcontroller*, as you need to simulate its peripherals with cycle accuracy. Ask the people who have built cycle-accurate console emulators that also duplicate the hardware bugs. Simulating a *microprocessor* in a system like QEMU is much easier.

Without detailed information on all the possible peripheral modes and interactions, this is not going to be very useful as a replacement for an SWD debugger. Do it well on one Arm series before losing focus by trying to cover a bunch of boards from different vendors.

I'll use an SWD debugger. If for some stupid reason I wanted to use a simulator, I would run the official one in MDK, made by Arm. Paid software has an advantage, as its makers can get inside information from the chip designers.

Technically it's just QEMU ARM. The xPack part is just the package system used by the Eclipse Embedded CDT project and has nothing to do with the emulation itself.

Why not use Renode? It's much more advanced than QEMU in the peripheral department.

For me debugging falls into several categories.

One category is for fairly generic algorithms, and this works best with just compiling the code as a PC program and debugging it with whatever IDE you’re happy with.
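A minimal sketch of that workflow, using a hypothetical CRC routine as the "generic algorithm": the code includes only standard headers and touches no registers, so the same source can be cross-compiled for the board and also built with a native compiler for debugging on the PC. The self-test checks the catalogued check value for CRC-16/CCITT-FALSE (0x29B1 for the ASCII string "123456789").

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Pure algorithm: no device headers, no registers, so this exact code can be
   cross-compiled for the microcontroller or built natively on a PC. */
static uint16_t crc16_ccitt_false(const uint8_t *data, size_t len) {
    uint16_t crc = 0xFFFFu;                  /* initial value */
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)(data[i] << 8);     /* feed the next byte into the top bits */
        for (int bit = 0; bit < 8; bit++) {
            /* shift left; if a 1 falls off the top, XOR in polynomial 0x1021 */
            crc = (crc & 0x8000u) ? (uint16_t)((crc << 1) ^ 0x1021u)
                                  : (uint16_t)(crc << 1);
        }
    }
    return crc;
}

/* Host-only check against the standard check value for this CRC variant. */
static void crc16_selftest(void) {
    const uint8_t msg[] = "123456789";
    assert(crc16_ccitt_false(msg, 9) == 0x29B1u);
    assert(crc16_ccitt_false(msg, 0) == 0xFFFFu);  /* empty input leaves the init value */
}
```

Built with the host compiler, the routine can be stepped through in any desktop debugger, while the firmware links the same function unchanged.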

Another category is microcontroller-specific code: interaction with peripherals, DMA, disruptions from ISRs, etc. Here I'm with tekkineet. I do not see how this emulator can help much with this sort of code.

I started with uCs… a long time ago, way before "Arduino", and my first serious programs were for an ATmega8, which was one of the first processors with both GCC support and Flash programming. (I found the asm for the PIC16F84 horrible and only did a blinking LED with it.)

Over time I've learned that debugging uC firmware with a logic analyzer works very well in some applications. It works with a few #define'd macros which either generate debug code or disappear into "nothingness". When they do insert debug code, it just spits out some constants (so you know exactly where the uC is at that point) through some unused peripheral: a USART, SPI, I2S, or other. Each such write is just a single instruction writing to a data register, so the impact on the code's timing is very small.
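Here is one way such macros might look. This is a sketch, not the commenter's actual code: `TRACE`, `ENABLE_TRACE`, `TARGET_STM32F103`, and `parse_packet` are hypothetical names, and the register address assumes USART1's data register on an STM32F103 (base 0x40013800, DR at offset 0x04), so check the reference manual for your own part. On a host build, a plain variable stands in for the register so the trace points can be checked without hardware.

```c
#include <assert.h>
#include <stdint.h>

/* Build switch: 1 = emit trace codes, 0 = TRACE() compiles to nothing. */
#ifndef ENABLE_TRACE
#define ENABLE_TRACE 1
#endif

#if defined(TARGET_STM32F103)
/* Assumption: USART1 data register on an STM32F103 (0x40013800 + 0x04). */
#define TRACE_DR (*(volatile uint32_t *)0x40013804u)
#else
/* Host build: a plain variable stands in for the data register. */
static volatile uint32_t trace_dr_sim;
#define TRACE_DR trace_dr_sim
#endif

#if ENABLE_TRACE
/* A single store to a data register: only a handful of instructions. */
#define TRACE(id) ((void)(TRACE_DR = (uint8_t)(id)))
#else
#define TRACE(id) ((void)0)
#endif

/* Hypothetical instrumented routine; each code marks a point in the program
   that shows up on the logic analyser next to the other pin activity. */
static int parse_packet(const uint8_t *buf, int len) {
    assert(buf != 0);
    TRACE(0x10);               /* entered parse_packet */
    if (len < 2) {
        TRACE(0x11);           /* rejected: packet too short */
        return -1;
    }
    TRACE(0x12);               /* header accepted */
    return buf[0];
}
```

With `ENABLE_TRACE` set to 0, every `TRACE()` becomes `((void)0)` and generates no code. One caveat: on a real USART, writing the data register before the previous byte has moved on can overwrite it, so trace points that fire faster than one character time may lose codes. A fast SPI clock shortens that window.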

One of the advantages of catching this data with a logic analyser (I use Sigrok / PulseView) is that it places the debug information in time relative to the other I/O visible on the uC pins, and you can look back into the past to see what happened before your software went awry.

These days I'm in the process of switching from the old AVRs to 32-bit ARM Cortex-M3, and these chips also have a debugging interface, but I have not used it yet. But if you set a breakpoint and let your uC stop (try finding the right location…), all timing immediately goes down the drain.

You're talking about Arm's Serial Wire Output (SWO).
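As a rough illustration of what SWO output looks like from the firmware side, here is a register-level sketch of sending a byte through the Instrumentation Trace Macrocell (ITM), the usual source of SWO data on Cortex-M3/M4. Unlike a breakpoint, it does not halt the core. It mirrors the checks in CMSIS's `ITM_SendChar`; the `itm_regs_t` struct is a hypothetical, trimmed-down layout written for this example, and passing the register block as a pointer lets the function be exercised on a PC against a fake.

```c
#include <assert.h>
#include <stdint.h>

/* Subset of the Cortex-M3/M4 ITM register block (base 0xE0000000).
   Offsets: stimulus ports at 0x000, TER at 0xE00, TCR at 0xE80. */
typedef struct {
    volatile uint32_t port[32];   /* 0x000-0x07C: stimulus ports 0..31 */
    uint32_t reserved0[864];      /* 0x080-0xDFC: not used here */
    volatile uint32_t ter;        /* 0xE00: trace enable register */
    uint32_t reserved1[31];       /* 0xE04-0xE7C: not used here */
    volatile uint32_t tcr;        /* 0xE80: trace control register */
} itm_regs_t;

#define ITM_BASE ((itm_regs_t *)0xE0000000u)   /* only meaningful on target */

/* Send one byte on stimulus port 0: require ITM enabled (TCR bit 0) and
   port 0 enabled (TER bit 0), then wait until the port reads non-zero,
   which means its FIFO can accept data. */
static int itm_send_char(itm_regs_t *itm, char c) {
    assert(itm != 0);
    if ((itm->tcr & 1u) == 0u || (itm->ter & 1u) == 0u) {
        return -1;                /* tracing not set up: drop the byte */
    }
    while (itm->port[0] == 0u) {
        /* busy-wait for FIFO space */
    }
    *(volatile uint8_t *)&itm->port[0] = (uint8_t)c;  /* 8-bit write = 1-byte packet */
    return 0;
}
```

On target you would call `itm_send_char(ITM_BASE, 'x')` after the debugger has enabled tracing and configured the SWO clock; that setup is tool-specific and not shown. Cortex-M0 and M0+ parts have no ITM, so this approach does not apply to them.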

My problem with “microcontroller” emulation is that the problem is never in the generic code on the microcontroller — it’s either in the peripherals or their interaction with the outside world. ISS: It’s the System, Stupid!™

I remember debugging an ADC for half an hour — it was charge accumulating on the sample-and-hold capacitor as it multiplexed across the different channels, so the first read on any new channel would be more like the last read on the previous one. Slowing the ADC down, or tossing a dummy read, solved the problem. QEMU is not going to dredge this one up.
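The failure mode is easy to reproduce in a toy model, which also shows why a dummy read helps. The sketch below is hypothetical and deliberately crude: each conversion moves the hold capacitor's voltage only a fraction `settle` of the way toward the selected input, standing in for a sample time that is too short. Real converters are more complicated, and, as the comment says, an emulator like QEMU models none of this.

```c
#include <assert.h>

/* Toy ADC model (hypothetical): one shared sample-and-hold capacitor that is
   multiplexed across channels and never fully settles in one sample window. */
typedef struct {
    const double *inputs;  /* true input voltage for each channel */
    int n_channels;
    double settle;         /* 0..1: fraction of the gap closed per conversion */
    double v_hold;         /* charge left over from the previous conversion */
    int last_channel;      /* channel of the previous conversion, -1 if none */
} toy_adc_t;

/* One conversion: the capacitor only partly tracks the new input, so the
   result is pulled toward whatever the previous channel left behind. */
static double toy_adc_convert(toy_adc_t *adc, int ch) {
    assert(ch >= 0 && ch < adc->n_channels);
    adc->v_hold += adc->settle * (adc->inputs[ch] - adc->v_hold);
    adc->last_channel = ch;
    return adc->v_hold;
}

/* Workaround from the comment: after a channel switch, do one conversion
   and throw it away, then keep the second one. */
static double adc_read_with_dummy(toy_adc_t *adc, int ch) {
    if (ch != adc->last_channel) {
        (void)toy_adc_convert(adc, ch);   /* dummy read, discarded */
    }
    return toy_adc_convert(adc, ch);
}
```

With `settle = 0.9`, switching from a 0 V channel to a 3.3 V channel reads 2.97 V on the first conversion. Discarding that conversion brings the kept reading to about 3.267 V. A longer sample time (raising `settle` toward 1) attacks the same error from the other side.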

Does the “Maple” emulator follow the silicon bug in the I2C peripheral on the STM32F103s? (https://www.st.com/resource/en/errata_sheet/cd00190234-stm32f101x8-b-stm32f102x8-b-and-stm32f103x8-b-medium-density-device-limitations-stmicroelectronics.pdf) If not, good luck making a low-level I2C peripheral work reliably.

Etc.

In-system debugging is the shizz. And sometimes that even means relying on printf or toggling LEDs when the timing is tight.