GPS is an incredibly powerful tool that allows devices such as your smartphone to know roughly where they are, in some cases to within about a meter. However, that is too coarse for many use cases, and accuracy drops considerably indoors, which is why warehouse robots, for example, rely on barcodes on the floor. In response, researchers [Linguang Zhang, Adam Finkelstein, Szymon Rusinkiewicz] at Princeton have developed a system they call MicroGPS that uses pictures of the ground to determine its location with sub-centimeter accuracy.

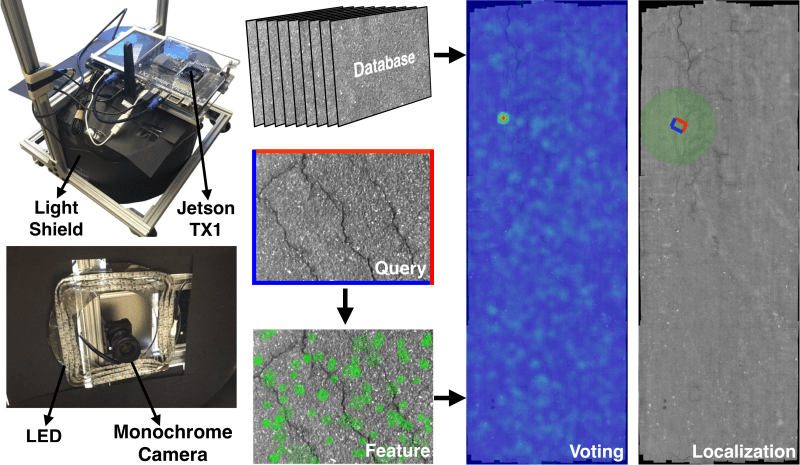

The system has a downward-facing monochrome camera with a light shield to control exposure. Camera output feeds into an Nvidia Jetson TX1 platform for processing. The idea is quite similar to an optical mouse, which is often little more than a downward-facing, low-resolution camera with some clever processing. But rather than tracking relative motion like a mouse, the researchers are trying to capture absolute position. Imagine picking up your mouse, setting it down on a different spot on your mousepad, and having the cursor snap to the corresponding part of the screen. To our eyes, which are quite far from the surface, asphalt, tarmac, concrete, and carpet look fairly uniform. But to a macro camera, there are cracks, fibers, and imperfections that are distinct and recognizable.
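The sketch below makes the mouse comparison concrete. It shows the relative half of the idea: estimating how far the surface shifted between two consecutive frames by brute-force block matching. It is a generic illustration, not the algorithm inside any particular mouse sensor or inside MicroGPS. The function name `estimate_shift` and the `max_shift` search radius are hypothetical.

```python
def estimate_shift(prev, curr, max_shift=3):
    """Estimate the (dx, dy) displacement between two grayscale frames.

    prev and curr are equal-sized 2D lists of pixel intensities. The result
    satisfies curr[y][x] ~= prev[y + dy][x + dx]. It is found by trying every
    shift within +/- max_shift and keeping the one with the lowest mean
    squared difference over the overlapping pixels (simple block matching).
    """
    h, w = len(prev), len(prev[0])
    best = None  # (score, dx, dy)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            for y in range(h):
                for x in range(w):
                    py, px = y + dy, x + dx
                    if 0 <= py < h and 0 <= px < w:  # only compare the overlap
                        diff = prev[py][px] - curr[y][x]
                        total += diff * diff
                        count += 1
            score = total / count
            if best is None or score < best[0]:
                best = (score, dx, dy)
    return best[1], best[2]
```

Summing these per-frame shifts gives a relative track, so any small error in each shift accumulates over time. Matching each frame against a stored map, as MicroGPS does, sidesteps that drift.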

They survey the surface ahead of time, stitching the images into a globally consistent map. Then, while moving around, the system extracts features from each new image and uses a voting method to filter out the numerous false positives. The system proved robust enough to work on an outdoor road a month after the initial dataset was captured. They even scattered leaves on the ground to try to fool it, and navigation remained remarkably stable.
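The exact voting scheme isn't described here, so the following is only a minimal Hough-style sketch of the general idea. It rests on these assumptions:

* Each map feature and each query feature is a hypothetical `(x, y, angle)` tuple.
* A single correct correspondence implies a full camera pose (rotation plus translation).
* Hypotheses are counted in coarse bins, so consistent inliers pile up while false matches scatter.

The bin sizes are arbitrary placeholders, and this is not the authors' code.

```python
import math
from collections import defaultdict

def pose_from_match(map_feat, query_feat):
    """Turn one correspondence into one (tx, ty, theta) camera-pose hypothesis.

    Features are (x, y, angle) tuples; angle is the keypoint orientation.
    Model: world_point = R(theta) @ query_point + t.
    """
    mx, my, ma = map_feat
    qx, qy, qa = query_feat
    theta = (ma - qa) % (2 * math.pi)
    c, s = math.cos(theta), math.sin(theta)
    tx = mx - (c * qx - s * qy)
    ty = my - (s * qx + c * qy)
    return tx, ty, theta

def vote_pose(matches, pos_bin=2.0, ang_bin=math.radians(10)):
    """Bin every hypothesis and return (mean pose of the fullest bin, votes)."""
    bins = defaultdict(list)
    for map_feat, query_feat in matches:
        tx, ty, th = pose_from_match(map_feat, query_feat)
        key = (math.floor(tx / pos_bin), math.floor(ty / pos_bin),
               math.floor(th / ang_bin))
        bins[key].append((tx, ty, th))
    best = max(bins.values(), key=len)  # the bin most hypotheses agree on
    n = len(best)
    pose = tuple(sum(p[i] for p in best) / n for i in range(3))
    return pose, n
```

In this toy model, many correct matches agreeing on one pose easily outvote a handful of false matches. A real system would then refine the winning pose using only the inliers in that bin.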

Their paper, code, and dataset are all available online. We’re looking forward to fusion systems that combine GPS, Wi-Fi triangulation, and MicroGPS to provide a robust and accurate position.
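One simple way such a fusion could weigh sources of very different precision is inverse-variance weighting, sketched below for a single axis. It assumes the errors are independent and unbiased, their standard deviations are known, and all estimates are expressed in a common coordinate frame. The function name `fuse` and the example figures are illustrative only.

```python
def fuse(estimates):
    """Inverse-variance weighted average of independent 1D position estimates.

    estimates: list of (value, std_dev) pairs in the same units and frame.
    Returns (fused_value, fused_std_dev).
    """
    weights = [1.0 / (sd * sd) for _, sd in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, (1.0 / total) ** 0.5
```

For example, `fuse([(10.0, 3.0), (10.4, 0.005)])` lands within a micrometer of 10.4. A 3 m estimate barely moves a 5 mm one, so in practice the coarse source mainly narrows down where to look.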

Video after the break.

There are already household vacuum robots that do the same thing with the ceiling structure.

And bottoms of tables I presume. Does it work in the dark too?

Mine does – has an IR beacon that projects a pattern.

Do you have a picture of the pattern?

This was also used on early cruise missiles – I assume ‘programming’ those missiles must have been quite the undertaking.

So after posting this I had to do some scheduled procrastination, and went reading. I was technically wrong – early cruise missiles used a version of this using radar, not cameras. Optical navigation was done much later.

Actually, you were not wrong; there were some cruise missiles that used star trackers (optical) for navigation.

Tell us more about your scheduled procrastination. Is this something you do or were you being sarcastic and claiming that the procrastination was on purpose?

I once heard a story of a med school student who worked (studied) in 12-minute intervals with one- or two-minute breaks in between. He had timed his attention span and his ability to learn and remember material, and he had the discipline to maintain that schedule. It made for odd study sessions for people who didn’t know beforehand. Apparently it worked for him.

Interesting. I had not heard about the 12-minute study/work loop. I have heard about research on a 20-minute project focus/break schedule. I wonder how he dealt with context shifts (i.e., going between two different projects). I would love to hear more about this.

I’ll see if I can get more information and circle back here in the next few days.

Odds are this guy has retired by now, since he went to med school in the mid-70s. It would be interesting to follow up with him to see if he maintained this mentality in other parts of his life after leaving school (because I can’t imagine a doctor being able to maintain it in their professional life).

No, sadly I was being sarcastic. Though my mom used to say I needed to do a couple of “maneuvers” before doing any actual work.

It breaks my heart a little that they put all of those cool sensors into a device designed to just be blown to smithereens.

Several militaries still use at least some variation of terrain mapping navigation to some extent for autonomously guided equipment.

GPS = Global Positioning System.

Not sure what is global about a system that requires highly detailed photos of a local area. It’s not like you can scan in the whole world at high resolution and store that in a system…

Ground Positioning System?

I know I know…

I think you didn’t really get the point of this whole exercise.

My first reaction was that this was a cool project, but one that has nothing to do with GPS. If it is not a Global Positioning System, the authors should not have used the acronym GPS in the name without some tie to the commonly recognized acronym. The name MicroGPS struck one of my colleagues, an expert in geodesy and cartography, as naive and misleading. I understand the uses and benefits of photogrammetry, and I have also experimented with setting up databases to quickly search billions of focal points. Even so, the name is frankly misleading, unless they use a Global Positioning System (GPS) to get you in the ballpark and the mGPS system to get you down to the mm or better. So, @Chris, I think I do get the point of the exercise, being able to position autonomous systems down to the mm, but I still maintain it was named badly. There is also a rich literature in photogrammetry. As I read the paper and inspect the code, I will see whether they knew about it, whether the title just needs a tweak, or what is going on. That is one of the advantages of releasing code and data so the project can be replicated, and kudos to them for that.

All that said, it is an impressive project nonetheless. I look forward to reviewing it closely.

A couple of updates…

The project uses SiftGPU (a GPU-accelerated implementation of the Scale-Invariant Feature Transform algorithm), FLANN (the Fast Library for Approximate Nearest Neighbors), and OpenCV for general image processing. SIFT (and ORB, which is not used here) are standard tools for this kind of work, so I already have a very good idea how they are doing everything. I was going to run a couple of tests, but their build system does not work out of the box, and getting it running will probably take non-trivial effort.
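The matching step described above usually works like this: find each query descriptor's two nearest neighbours in the map, and keep the match only if the best one is clearly closer than the runner-up (Lowe's ratio test). The sketch below makes several simplifications:

* An exhaustive search stands in for FLANN's approximate search.
* Toy 2D descriptors replace 128-dimensional SIFT vectors.
* The ratio threshold is a typical 0.8.

Whether MicroGPS applies exactly this filter is an assumption, and `ratio_test_matches` is a hypothetical name.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def ratio_test_matches(query_desc, map_desc, ratio=0.8):
    """Brute-force 2-nearest-neighbour search plus Lowe's ratio test.

    Returns (query_index, map_index) pairs whose best match is clearly
    better than the second best. FLANN would replace the exhaustive
    ranking below with an approximate search; the filter stays the same.
    """
    if len(map_desc) < 2:
        raise ValueError("need at least two map descriptors for a ratio test")
    matches = []
    for qi, q in enumerate(query_desc):
        ranked = sorted(range(len(map_desc)), key=lambda mi: sq_dist(q, map_desc[mi]))
        best, second = ranked[0], ranked[1]
        d1 = sq_dist(q, map_desc[best]) ** 0.5
        d2 = sq_dist(q, map_desc[second]) ** 0.5
        if d1 < ratio * d2:  # ambiguous matches (d1 close to d2) are dropped
            matches.append((qi, best))
    return matches
```

Ambiguous descriptors, such as a query equidistant from several map entries, are rejected outright. On repetitive textures like carpet, this is what keeps the false-match rate manageable before any voting happens.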

BTW, when researching this I also found the name MicroGPS used for miniaturized GPS modules… Like I said, the name does not resolve well.

I finally had a moment to poke at this, and here are the changes I needed to make to get it to work:

* change the cmake_minimum_required (VERSION 2.6) value in CMakeLists.txt to whatever version you have installed (it worked with 3.20.5)

* create config.cmake as a symlink to config_linux.cmake, or modify CMakeLists.txt to include config_linux.cmake

* add the following lines to image.h to support OpenCV 4 (currently compiled with 4.5.2):

#include "opencv2/imgcodecs/legacy/constants_c.h"

#include "opencv2/imgproc/types_c.h"

I still have to resolve some missing GL libraries in the linker, but if I get it sorted I will reply or edit this post. As a note, the build system was not as bad as I originally feared; it was mostly just hard-coded file locations.

Hackaday’s interface would not allow me to edit my last comment. This is the last of the magic sauce to get it to compile:

* in execute/CMakeLists.txt, wherever you see ‘gflags’ in target_link_libraries, add "OpenGL GLEW glut lz4" to resolve the OpenGL dependencies.

As a note, this has only been built on Gentoo Linux, and I have not run the program yet; I just got it compiled.

Anyway, I hope this is useful to others interested in the project.

+1 for not being global.

Why not just use wheel ticks instead of a complex camera system that you have to calibrate?

Because the camera system is an absolute position detection method and doesn’t accumulate error over time the way a relative positioning system would. Wheels are prone to wear and slippage, which an encoder can’t account for, at least not without additional sensors or information. The camera system is also a nice fail-safe: if the robot crashes, it can fairly quickly determine its position again.
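A toy 1D simulation illustrates the point about accumulated error. It assumes a purely systematic odometry error: the wheel covers slightly less ground than the encoder reports, as it might with wear. It also assumes an idealised, error-free absolute fix every `fix_every` steps. The function name and numbers are hypothetical.

```python
def max_error(steps, scale_error, fix_every=None):
    """Worst position error of wheel odometry, with optional absolute fixes.

    Each step the encoder reports 1.0 unit of travel, but the robot really
    moves (1 - scale_error). If fix_every is set, an absolute fix (e.g.
    from a floor-matching system) resets the estimate to the true position.
    """
    true_pos = est_pos = 0.0
    worst = 0.0
    for step in range(1, steps + 1):
        true_pos += 1.0 - scale_error
        est_pos += 1.0
        worst = max(worst, abs(est_pos - true_pos))  # error just before any fix
        if fix_every and step % fix_every == 0:
            est_pos = true_pos  # idealised absolute fix
    return worst
```

`max_error(1000, 0.01)` reaches a worst-case error of 10 units. `max_error(1000, 0.01, fix_every=50)` caps it at 0.5, because each fix discards the drift accumulated since the previous one.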

You only need to calibrate once to get these benefits. Afterwards you could probably update the system live.

In theory you could also calibrate as you navigate and incorporate a map of things to ignore, further increasing the accuracy. Perhaps even making the ignore list seasonal or periodic, say for day and night.

So it is a bit like a giant mouse, they should call it the capybara.

Just to be pedantic, while a capybara is a rodent, it’s more of a giant hamster than a mouse.

Okay then, how about calling it a R.O.U.S?

https://princessbride.fandom.com/wiki/R.O.U.S.

HIDEOUS

Human Interface Device Of Unusual Size.

Of course, it isn’t a HID, just works a bit like one. Still, the moniker is almost too good.

So my optical mouse is a microGPS! Cool!

I’m surprised this hasn’t been done before!

From what I remember crabs use a similar system of navigation. But instead of light they use fluctuations in the magnetic field to navigate.

In a warehouse/well used area how do they account for crud, spills and marks appearing and disappearing on the floor?

As an alternative you could use the new-fangled Bluetooth positioning system.

I was about to ask exactly the same question. I work in a warehouse as a maintenance engineer, and there’s absolutely no way this would work on one of our floors with the amount of dust and general dirt that accumulates.

Perhaps a combo of scanning the floor & ceiling? I would imagine less crap goes on the ceiling, but it’s not always visible.

+1, some sort of local system using receiver/transmitter devices to track things is much better in places like that. True satellite positioning (which this project is not) doesn’t work in caves or heavy concrete buildings.

The video does mention that they experimented with how much of a given image could change (by putting leaves on it) before localization broke, and they found that it worked as long as at least half the image matched. Still, I suspect you’d need some sort of dead reckoning to really make it work in the real world, with this technique only used to keep it calibrated.

At first I thought about using this in farming, but when I saw it’s based on visible terrain features I realised it won’t work, as tire tracks in the mud change even when the same robot passes through.

I think it might be possible to get it to work in a farming context, depending on how you filter those images. That said, if you are thinking of just tracking the ground, then yeah, you are likely out of luck. I’m thinking more about triangulating on trees, large rocks, fence posts, and things that do not change all *that* often. Anyway, if you are interested in looking into this, PM me and we can discuss testing it. BTW, I own a small farm and might be able to test this.

The Livescribe pen I use to record notes uses an optical system for absolute positioning that works with sub-mm accuracy. The paper has tiny dots printed in specific patterns, and the pen has a camera that sees the dots and whatever I’m writing and records it. IIRC the absolute positioning space available is larger than the surface of the earth.
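The trick behind this kind of paper is that every small window of the pattern is unique, so seeing a few dots is enough to know where you are. The real Anoto-style pattern used by these pens is two-dimensional and more elaborate. As a simplified 1D analogue, a de Bruijn sequence has the property that every length-`n` window appears exactly once (cyclically), so reading `n` consecutive symbols gives an absolute position. `de_bruijn` below is the standard recursive construction; `build_lookup` is a hypothetical helper.

```python
def de_bruijn(k, n):
    """Standard recursive construction of a de Bruijn sequence B(k, n).

    Every length-n word over the alphabet {0..k-1} appears exactly once
    as a cyclic window of the returned list, whose length is k ** n.
    """
    a = [0] * k * n
    seq = []

    def db(t, p):
        if t > n:
            if n % p == 0:
                seq.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return seq

def build_lookup(seq, n):
    """Map each cyclic window of length n to its (unique) start index."""
    length = len(seq)
    return {tuple(seq[(i + j) % length] for j in range(n)): i for i in range(length)}
```

With `seq = de_bruijn(2, 4)`, reading any 4 consecutive bits and looking them up in `build_lookup(seq, 4)` returns where that window starts. This is absolute positioning from a purely local view, the same property the pen relies on.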

Usually databases are represented in charts as three stacked cylinders. I’m assuming the original intent was to look like a stack of hard disks to represent storage, but I’ve heard people call it a barrel or (humorously) a trash can.

But from now on I shall represent databases in charts as a stack of concrete slabs. Thank you.

The missile knows where it is at all times. It knows this because it knows where it isn’t. By subtracting where it is from where it isn’t, or where it isn’t from where it is – whichever is greater – it obtains a difference or deviation. The guidance subsystem uses deviations to generate corrective commands to drive the missile from a position where it is to a position where it isn’t, and arriving at a position that it wasn’t, it now is. Consequently, the position where it is is now the position that it wasn’t, and it follows that the position that it was is now the position that it isn’t. In the event that the position that it is in is not the position that it wasn’t, the system has acquired a variation. The variation being the difference between where the missile is and where it wasn’t. If variation is considered to be a significant factor, it too may be corrected by the GEA. However, the missile must also know where it was. The missile guidance computer scenario works as follows: Because a variation has modified some of the information that the missile has obtained, it is not sure just where it is. However, it is sure where it isn’t, within reason, and it knows where it was. It now subtracts where it should be from where it wasn’t, or vice versa. And by differentiating this from the algebraic sum of where it shouldn’t be and where it was, it is able to obtain the deviation and its variation, which is called error.

https://www.youtube.com/watch?v=bZe5J8SVCYQ

Yep, reads like too many theory of operation descriptions where obviously the tech writer got paid by the word rather than by clarity of thought.

Could be a fun project to reproduce with an optical mouse. Digitizing the desk would be a bit of a chore though.

Gah! That was supposed to go to the bottom, not this thread.

Hunt around. I have seen projects where they hack the mouse sensor and process the imagery directly. It is likely that you can find all the pieces off the shelf and hook them into the microgps code. Also, if I am not mistaken, most mouse image sensors are only 64×4 or maybe 128×128 pixels, BUT they produce these images at many hundreds or even thousands of frames per second. If you do get this working, let me know; I would love to see it, and it would also make a good Hack a Day project ;-)

“We’re looking forward to fusion systems where it can combine GPS, Wifi triangulation, and MicroGPS to provide a robust and accurate position.”

All this gear will be useless if you can’t get a robust and accurate point of reference. Ultra-accurate relative measurements will never refine your absolute position without a dependable origin point. However, the proposal here is very interesting for improving position measurements relative to an arbitrary origin point.

For the most part I agree with you, but I can still see how this could be useful in a warehouse, building, or home, as long as you can quickly find the absolute position using FLANN on the database. I have not used FLANN before and am not sure how well it handles that step. That said, if it can, I have a different project in mind and will see if I can get hold of an old database of a million-plus images…