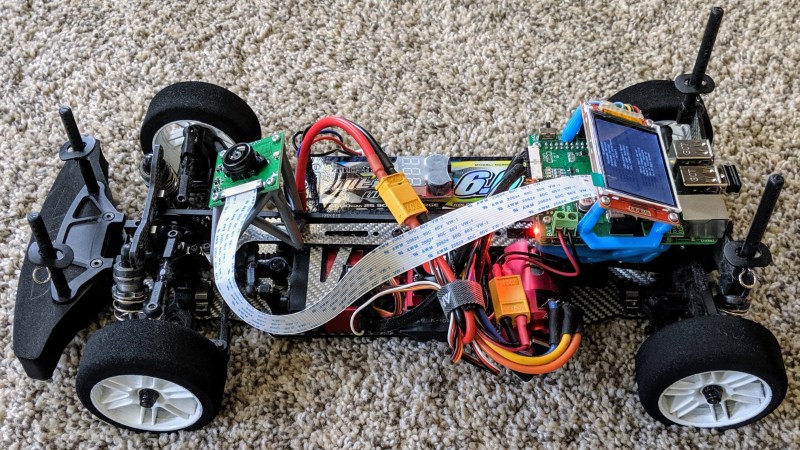

[Andy]’s robot is an autonomous RC car, and he shares the localization algorithm he developed to help the car keep track of itself while it zips crazily around an indoor racetrack. Since a robot like this is perfectly capable of driving faster than it can sense, his localization method is the secret to pouring on additional speed without worrying about the car losing itself.

To pull this off, [Andy] uses a camera with a fisheye lens aimed up towards the ceiling, and the video is processed on a Raspberry Pi 3. His implementation is slick enough that it only takes about 1 millisecond to do a localization update, netting a precision on the order of a few centimeters. It’s sort of like a fast indoor GPS, using math to infer position based on the movement of ceiling lights.
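To make the geometry concrete, here is a minimal Python sketch of one way a ceiling light seen through an upward-facing fisheye could be turned into a position fix. It is not [Andy]'s algorithm (his math is on the project page). It assumes an equidistant fisheye model (r = f·θ), a lens pointing straight up, a known distance `h` from lens to ceiling, a known heading, and that each detected light has already been matched to a surveyed world position. It also assumes the pixel axes have been pre-rotated so that +u points along the car's nose and +v to its left. All function names and parameters are hypothetical.

```python
import math

def pixel_to_ceiling_offset(u, v, cx, cy, f, h):
    """Map a fisheye pixel to a horizontal offset (metres) in the car's frame.

    Assumptions (hypothetical setup): equidistant fisheye (r = f * theta),
    lens pointing straight up, ceiling h metres above the lens, and pixel
    axes already aligned so +u is the car's forward and +v its left.
    """
    dx, dy = u - cx, v - cy
    r = math.hypot(dx, dy)
    if r == 0.0:
        return (0.0, 0.0)            # light is directly overhead
    theta = r / f                    # angle off the optical axis (radians)
    if theta >= math.pi / 2:
        raise ValueError("pixel is at or beyond the horizon")
    d = h * math.tan(theta)          # horizontal distance to the light
    return (d * dx / r, d * dy / r)

def estimate_position(matches, heading, cx, cy, f, h):
    """Average the car position implied by each matched ceiling light.

    matches: list of ((u, v), (X, Y)) pairs, pixel -> known world position.
    heading: car yaw in radians, assumed known (e.g. from a gyro).
    """
    if not matches:
        raise ValueError("need at least one matched light")
    c, s = math.cos(heading), math.sin(heading)
    xs, ys = [], []
    for (u, v), (X, Y) in matches:
        ox, oy = pixel_to_ceiling_offset(u, v, cx, cy, f, h)
        # Rotate the body-frame offset into the world frame.
        wx, wy = c * ox - s * oy, s * ox + c * oy
        # The car sits at the light's position minus the offset to the light.
        xs.append(X - wx)
        ys.append(Y - wy)
    return (sum(xs) / len(xs), sum(ys) / len(ys))
```

Each matched light yields one estimate of the car's position; averaging several is a crude way to beat down per-light pixel noise, where a real system would more likely fit all the lights jointly.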

To be useful for racing, this localization method needs to be combined with a map of the racetrack itself, which [Andy] cleverly builds by manually driving the car around the track while collecting localization data. Once that is in place, the car has all it needs to autonomously zip around.
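A track map recorded this way can be as simple as a list of positions logged during the hand-driven lap. The hypothetical `RecordedTrack` class below is an illustrative sketch, not the data structure from [Andy]'s repository: it thins out the logged points, then at race time finds the nearest recorded point and returns one a few samples further along the lap for a steering controller to aim at.

```python
import math

class RecordedTrack:
    """Hypothetical track map: positions logged while driving a lap by hand."""

    def __init__(self):
        self.points = []             # (x, y) in metres, in driving order

    def record(self, x, y, min_spacing=0.05):
        # Skip samples closer than min_spacing to the last one to keep the map compact.
        if self.points:
            px, py = self.points[-1]
            if math.hypot(x - px, y - py) < min_spacing:
                return
        self.points.append((x, y))

    def nearest_index(self, x, y):
        # Brute-force nearest neighbour; fine for a few thousand points.
        if not self.points:
            raise ValueError("no recorded points")
        return min(range(len(self.points)),
                   key=lambda i: (self.points[i][0] - x) ** 2
                               + (self.points[i][1] - y) ** 2)

    def lookahead_target(self, x, y, steps=10):
        # Point `steps` samples further along the lap, wrapping at the finish line.
        i = self.nearest_index(x, y)
        return self.points[(i + steps) % len(self.points)]
```

Logging happens once per localization update during the manual lap (`record(x, y)`); during a race, `lookahead_target(x, y)` gives a point on the recorded line that a simple pursuit-style steering law could chase.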

Interested in the nitty-gritty details? You’re in luck, because all of the math behind [Andy]’s algorithm is explained on the project page linked above, and the GitHub repository for [Andy]’s autonomous car has all the implementation details.

The system is location-dependent, since it relies on the ceiling lights of a particular venue, but it works so well that [Andy] considers track localization a solved problem. Watch the system in action in the two videos embedded below.

This first video shows the camera’s view during a race.

This second video is what it looks like with the fisheye lens perspective corrected to appear as though it were looking out the front windshield.

Small racing robots have the advantage of not being particularly harmed by crashes, which are far more embarrassing when they happen to experimental full-sized autonomous racing cars.

The Samsung vacuum robots also navigate using a camera that faces up.

What do they do when a cat sits on it, maybe curls up for a nap? Cats can sleep for hours. Does it just sit there? Beep? Send a text to the owner?

Two high voltage contacts on the top?

“autonomous RC car” – isn’t it a bit of a misnomer?

Oxymoron – like military justice and airline food…

If you’re in the SF Bay Area be sure to check out the quarterly Autonomous Car Racing at Circuit Launch in Oakland. This car in particular is pretty amazing to watch but the entire event is really fun.

In the meantime, Andy managed to get an RC car around a full-size road course racetrack at an average of approximately 45 mph: https://twitter.com/a1k0n/status/1449889430202765312 (I run this particular event.)

This is awesome!

I bet the glitching of the position data that can be seen in the video can be alleviated by providing a gimballing mount. It seems like the yaw/pitch action of the vehicle as it shakes throws the algorithm off quite a bit at times.

Apart from that, this is really cool!
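The tilt effect described in the comment above is easy to quantify with a simplified planar model. If the camera tilts by an unmodelled angle δ, a light at true angle θ off vertical appears at θ + δ, so its computed horizontal position on a ceiling h above the lens moves by h(tan(θ + δ) − tan θ). The function name and the 2.5 m lens-to-ceiling distance are assumed examples, not figures from the video.

```python
import math

def tilt_error(h, theta_deg, tilt_deg):
    """Apparent horizontal shift (m) of a ceiling light caused by camera tilt.

    Simplified planar model (assumption): h is the lens-to-ceiling distance
    in metres, theta_deg the light's true angle off vertical, and tilt_deg
    an unmodelled pitch/roll of the camera in the same plane.
    """
    t = math.radians(theta_deg)
    d = math.radians(tilt_deg)
    return h * (math.tan(t + d) - math.tan(t))

print(f"{tilt_error(2.5, 0, 2):.3f}")   # 0.087 (light directly overhead)
print(f"{tilt_error(2.5, 45, 2):.3f}")  # 0.181 (light 45 degrees off vertical)
```

Under these assumptions, a 2° wobble shifts the computed position by roughly 9 to 18 cm, larger than the few-centimeter precision quoted in the article, which is why stabilizing or compensating for body pitch and roll could plausibly help.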

It obeys the rules of the race but not the intent. The Donkey software, and the DIY Robocar races that grew from it, were all about learning and advancing autonomous vehicle systems. Races were initially timed with a single vehicle on the track, so using something other than the actual track for positioning worked, and as Andy showed, it can be fast. Plus, the ceiling details do not get obscured by eager fans crowding the edge of the track. Later, the races were changed to put two cars on the track, but again Andy gains a lot of time by getting his vehicle to jump ahead quickly, so he never runs over the other car unless it is really slow, since three complete laps were required.

The more interesting software is the Donkey software, which uses TensorFlow to create and operate the autonomous models.

https://github.com/autorope/donkeycar

Now that you pointed it out… although his car goes wildly out of bounds on every lap, it seems to hit other racers with lethal accuracy, causing this Le Mans-esque disaster https://www.youtube.com/watch?v=PsfkLj527Go

I like that sort of out-of-the-box, turn-the-idea-on-its-head type of thinking. Hmmm, I must try putting different lenses in front of a chip out of a computer mouse to see if I get usable motion vectors out of it.

Many autonomous mobile robots have this option too. While they mainly rely on a LIDAR to do mapping, they usually have an option to attach a ceiling tracking camera to get better accuracy and help when the robot loses its place on the map (for example if the space has become vastly different from the saved map).

For example: Omron LD-90’s “Acuity Module” (https://assets.omron.eu/downloads/manual/en/v11/i613_mobile_robots_-_ld_platform_pheripherals_users_manual_en.pdf chapter 6)

I think it's a great idea. Someday, in real life, EF1 will use this tech; I hope it makes for better racing.

Looks great, but the maths are really “over my head” (sadly)…

:D

https://www.youtube.com/watch?v=sbCe8aOBKPM