Last week we saw the announcement of the new Raspberry Pi Zero 2 W, which is basically an improved quad-core version of the Pi Zero — more comparable in speed to the Pi 3B+, but in the smaller Zero form factor. One remarkable aspect of the board is the Raspberry-designed RP3A0 system-in-package, which includes the four CPUs and 512 MB of RAM all on the same chip. While 512 MB of memory is not extravagant by today’s standards, it’s workable. But this custom chip has a secret: it lets the board run on reasonably low power.

When you’re using a Pi Zero, odds are that you’re making a small project, and maybe even one that’s going to run on batteries. The old Pi Zero was great for these self-contained, probably headless, embedded projects: sipping the milliamps slowly. But the cost was significantly slower computation than its bigger brothers. That’s the gap that the Pi Zero 2 W is trying to fill. Can it pull this trick off? Can it run faster, without burning up the batteries? Raspberry Pi sent Hackaday a review unit that I’ve been running through the paces all weekend. We’ll see some benchmarks, measure the power consumption, and find out how the new board does.

The answer turns out to be a qualified “yes”. If you look at mixed CPU-and-memory tasks, the extra efficiency of the RP3A0 lets the Pi Zero 2 W run faster per watt than any of the other Raspberry boards we tested. Most of the time, it runs almost like a Raspberry Pi 3B+, but uses significantly less power.

Along the way, we found some interesting patterns in Raspberry Pi power usage. Indeed, the clickbait title for this article could be “We Soldered a Resistor Inline with Raspberry Pis, and You Won’t Believe What Happened Next”, only that wouldn’t really be clickbait. How many milliamps do you think a Raspberry Pi 4B draws, when it’s shut down? You’re not going to believe it.

Testing Performance and Power Draw

When it comes to picking a tiny Linux computer to embed in your project, you’ve got a lot more choice today than you did a few years ago. Even if you plan to stay within the comfortable world of the Raspberry Pi computers, you’re looking at the older Pi 3B+, the tiny Pi Zero, the powerhouse Pi 4B in a variety of configurations, and as of last week, the Pi Zero 2 W.

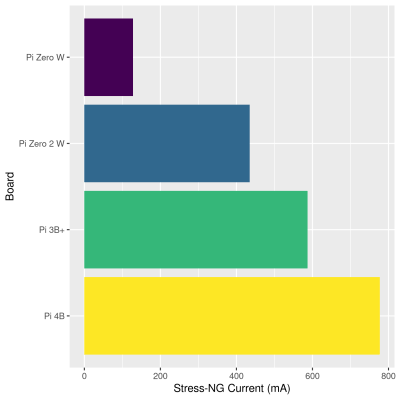

I ran all of the Raspberries through two fairly standard torture tests while they were powered through a 0.100 Ω precision resistor wired inline with the supply. I recorded the voltage drop across the resistor, and thus the current that the computers were drawing. The values here are averaged across 50 seconds by my oscilloscope, which accurately accounts for short spikes in current while providing a good long-run average. All of the Pis were run headless, connected via WiFi and SSH, with no wires going in or out other than the USB power. These are therefore minimum figures for a WiFi-using Pi. If you run USB peripherals, don’t forget to factor them into your power budget.
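The conversion from scope reading to current and power is just Ohm's law. A minimal sketch, assuming a nominal 5 V USB supply (the article does not state the measured supply voltage) and using a made-up 24 mV reading as the input:

```python
SHUNT_OHMS = 0.100    # inline precision resistor, as described in the article
SUPPLY_VOLTS = 5.0    # assumption: nominal USB supply voltage


def current_amps(drop_volts: float, shunt_ohms: float = SHUNT_OHMS) -> float:
    """Ohm's law: current through the shunt from the voltage across it."""
    return drop_volts / shunt_ohms


def power_watts(drop_volts: float, supply_volts: float = SUPPLY_VOLTS) -> float:
    """Power drawn from the supply; the board sees slightly less due to the shunt drop."""
    return supply_volts * current_amps(drop_volts)


# Hypothetical scope reading: a 24 mV average drop across the resistor.
drop = 0.024
print(f"{current_amps(drop) * 1000:.0f} mA, {power_watts(drop):.2f} W")
```

With a 0.100 Ω shunt, every 10 mV of drop corresponds to 100 mA, which keeps the voltage lost in the resistor small relative to the 5 V rail.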

Test number one is stress-ng, which simply hammers all of the available CPU cores with matrix inversion problems. This is great for heat-stressing computers, but also for testing their maximum CPU-driven power draw. All of the Pis here have four cores except for the original Pi Zero, which has only one. What you can see here is that as you move up in CPU capability, you burn more electrons. The Pi Zero 2 has four cores but runs at a stock 1 GHz, while the 3B+ runs at 1.4 GHz and the 4B at 1.5 GHz. More computing, more power.

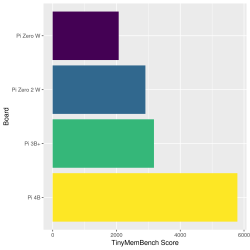

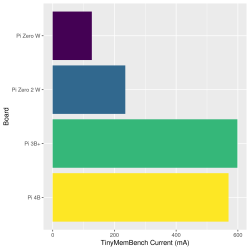

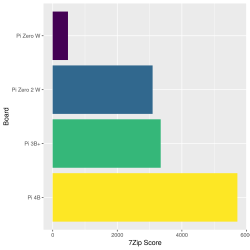

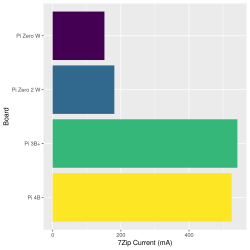

Test number two is sbc-bench, which includes a memory bandwidth test (tinymembench), a mixed-use CPU benchmark (7-zip), and a test of cryptographic acceleration (OpenSSL). Unfortunately, none of the Raspberry Pis use hardware cryptographic acceleration, so the OpenSSL test ends up being almost identical to the 7-zip test, a test of mixed CPU and memory power, and I’m skipping the results here to save space.

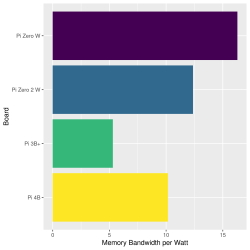

For ease of interpretation, I’m using the sum of the two memory sub-tests as the result for TinyMemBench, and the 7-zip test results are an average of the three runs. For all of these, higher numbers are better: memory written faster and more files zipped. This is where things get interesting.
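The aggregation described above is simple enough to write down. This is only a sketch: the function names are made up for illustration, the two memory sub-tests are passed in generically rather than named, and the numbers are placeholders rather than measurements from this article.

```python
from statistics import mean
from typing import Sequence


def memory_score(subtest_a_mb_s: float, subtest_b_mb_s: float) -> float:
    """Single memory figure: the sum of the two memory sub-test results."""
    return subtest_a_mb_s + subtest_b_mb_s


def sevenzip_score(runs: Sequence[float]) -> float:
    """Single 7-zip figure: the average over the benchmark runs."""
    return mean(runs)


# Placeholder values for illustration only.
print(memory_score(1000.0, 1500.0))
print(sevenzip_score([2000.0, 2100.0, 2050.0]))
```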

Looking first at the memory bandwidth scores, the 4B is way out ahead, and the old Pi Zero is bringing up the rear, but the 3B+ and the Zero 2 are basically neck and neck. What’s interesting, however, is the power used in the memory test. The Zero 2 W scores significantly better than the 3B+ and the 4B. It’s simply more efficient, although if you divide through to get memory bandwidth per watt of power, the old Pi Zero stands out.

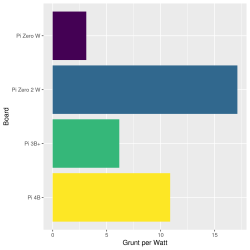

Turn then to the 7-zip test, a proxy for general purpose computing. Here again, the four-core Pis all dramatically outperform the pokey Pi Zero. The Pi 4 is the fastest by far, and with proper cooling it can be pushed to ridiculous performance. But as any of you who’ve worked with Raspberry Pis and batteries know, the larger form-factor Raspberry Pi computers consume a lot more power to get the job done.

But look at the gap between the Pi Zero 2’s performance and the Pi 3B+. They’re very close! And look at the same gap in terms of power used — it’s huge. This right here is the Pi Zero 2’s greatest selling point. Almost 3B+ computational performance while using only marginally more power than the old Pi Zero. If you divide these two results to get a measure of zipped files per watt, which I’m calling computational “grunt” per watt, the Zero 2 is far ahead.
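The “grunt per watt” figure is the benchmark score divided by the average power drawn while the benchmark ran. A rough sketch, again assuming a nominal 5 V supply; the board names and numbers below are placeholders, not the results plotted in this article:

```python
SUPPLY_VOLTS = 5.0  # assumption: nominal USB supply voltage


def grunt_per_watt(score: float, current_amps: float,
                   supply_volts: float = SUPPLY_VOLTS) -> float:
    """Benchmark score divided by average power during the benchmark."""
    if current_amps <= 0:
        raise ValueError("current must be positive")
    return score / (supply_volts * current_amps)


# Placeholder (score, average amps) pairs for illustration only.
boards = {"board A": (1000.0, 0.25), "board B": (1200.0, 0.50)}
ranked = sorted(boards, key=lambda b: grunt_per_watt(*boards[b]), reverse=True)
for name in ranked:
    print(name, round(grunt_per_watt(*boards[name]), 1))
```

In this made-up case board B has the higher raw score, but board A comes out ahead once power is taken into account, which is the same kind of reversal described above.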

If you’re looking for a replacement for a slow Raspberry Pi Zero in some portable project, it really looks like the Pi Zero 2 fits the bill perfectly.

Idle Current and Zombie Current

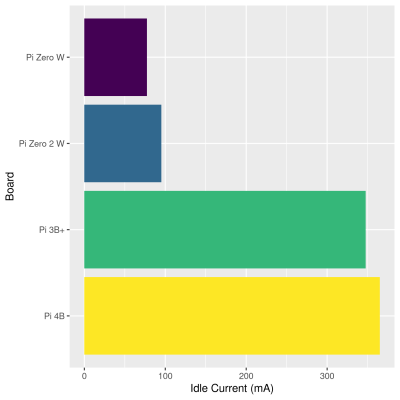

Some projects only need to do a little bit of work, and then can shut down or slow down during times of inactivity to use less total power over the course of a day. With an eye toward power saving, I had a look at how all of the boards performed when they weren’t doing anything, and here one of the answers was very surprising.

Unless you’re crunching serious numbers or running a busy web server on your Raspberry Pi, chances are that it will be sitting idle most of the time, and that its idle current draw will actually dominate the total power consumption. Here, we can see that the Pi Zero 2 has a lot more in common with the old Pi Zero than with the other two boards. Doing nothing more than keeping WiFi running, the Zeros use less than a third of the power consumed by their bigger siblings. That’s a big deal.
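To see why idle draw tends to dominate, it helps to weight each state by the fraction of time spent in it. A minimal sketch; the currents and the 5% duty cycle are hypothetical and only there to show the arithmetic:

```python
def average_current_ma(active_ma: float, idle_ma: float,
                       active_fraction: float) -> float:
    """Time-weighted average current for a board that is busy part of the time."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * active_ma + (1.0 - active_fraction) * idle_ma


# Hypothetical board: 500 mA when busy, 100 mA idle, busy 5% of the time.
active_ma, idle_ma, busy = 500.0, 100.0, 0.05
avg = average_current_ma(active_ma, idle_ma, busy)
idle_share = (1.0 - busy) * idle_ma / avg
print(f"average {avg:.0f} mA, of which {idle_share:.0%} is idle draw")
```

Even with a busy current five times the idle current, most of the energy in this example goes to idling.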

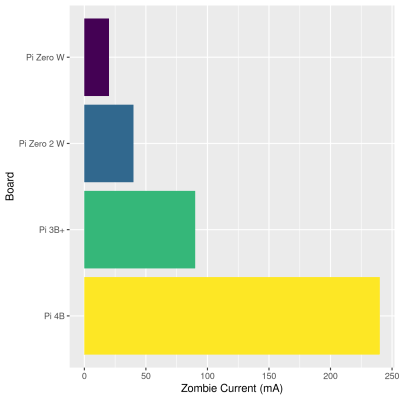

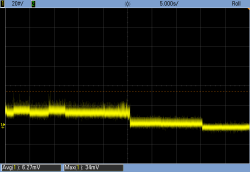

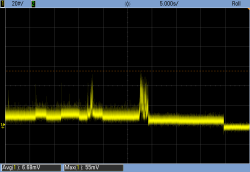

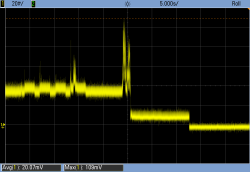

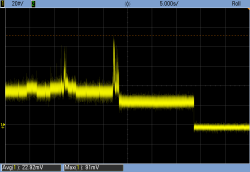

I also wanted to investigate what would happen if you could turn WiFi off, or shut the system down entirely, analogous to power-saving tricks that we use with smaller microcontrollers all the time. To test this, I ran a routine from an idle state that shut the WiFi off, waited 10 seconds, and then shut the system down. I was surprised by two things. One, the power consumed by WiFi in standby isn’t really that significant — you can see it activating periodically during the idle phase.

Second, the current draw of a shut-down system varied dramatically across the boards. I’m calling this current “zombie current” because this is the current drawn by the board when the CPU brain is shut off entirely. To be absolutely certain that I was measuring zombie current correctly, I unplugged the boards about ten seconds after shutdown. These are the traces that you see here, plotted for each system. There are four phases: idle, idle with no WiFi, shut down / zombie, and finally physically pulling the plug.

The Pi 4 draws around 240 mA when it is shut down, or 1.2 W! The Pi 3 draws around 90 mA, or 0.45 W. For comparison, the Pi Zero 2’s idle current is similar to the Pi 3’s zombie current. The Pi Zero 2 has a much-closer-to-negligible 45 mA zombie draw, and the original Pi Zero pulls even less.

The point here is that while it’s not surprising that the power required to idle increases with the more powerful CPUs, the sheer size of the variation in both idle and zombie current really dictates which boards to use in a battery-powered project. Watch out!
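To put the zombie currents in battery terms, here is a back-of-the-envelope sketch. The currents are the approximate figures quoted above; the 5 V supply and the 2500 mAh pack are assumptions, and converter losses are ignored.

```python
ZOMBIE_MA = {"Pi 4": 240.0, "Pi 3": 90.0, "Pi Zero 2": 45.0}  # approximate, from the text
SUPPLY_VOLTS = 5.0      # assumption: nominal USB supply voltage
BATTERY_MAH = 2500.0    # hypothetical pack capacity, rated at the 5 V output


def watts(current_ma: float, volts: float = SUPPLY_VOLTS) -> float:
    """Power in watts for a current given in milliamps."""
    return volts * current_ma / 1000.0


def hours_until_flat(current_ma: float, capacity_mah: float = BATTERY_MAH) -> float:
    """Idealized runtime: capacity divided by a constant current draw."""
    return capacity_mah / current_ma


for board, ma in ZOMBIE_MA.items():
    print(f"{board}: {watts(ma):.2f} W shut down, "
          f"about {hours_until_flat(ma):.0f} h on a {BATTERY_MAH:.0f} mAh pack")
```

Under these assumptions, a shut-down Pi 4 left plugged into the pack would drain it in well under a day without doing any work at all.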

Size and Power Isn’t Everything

In that respect, with the processing power of the Pi 3B, significantly better power management all around, and coming in at half the price, the Raspberry Pi Zero 2 W is incredibly attractive for anything that needs to sip the juice but also needs to pack some punch. The old Pi Zero shined in small, headless projects, and it was the only real choice for battery-driven projects. The Pi Zero 2 definitely looks like a worthy successor, adding a lot more CPU power for not all that much electrical power.

Still, I don’t think that the Pi Zero 2 will replace the 3B+, its closest competitor, for the simple reason that the Pi 3 has more memory and much more versatile connectivity straight out of the box. If your project involves more than a few USB devices, or wired Ethernet, or “normal” HDMI connections, adding all of these extra parts can make a Zero-based setup almost as bulky as a B. And when it comes down to pure grunt, power-budget be damned, the Pi 4 is clearly still the winner.

But by combining four cores tightly with on-chip memory, the Raspberry Pi Zero 2 W is definitely the most energy-efficient Pi.

1st

Nice. Wish I could get one from Microcenter

For real. I’m chomping at the bit waiting for the microcenter 15 minutes away to finally stock them, and I know I’m not the only one waiting.

I wish I was only 15 mins away. Lucky

About thirty minutes away from me, if you don’t count all the road construction by the one near me.

7 hours 10 minutes away from me

You’re lucky. For me it’s 8 hours by plane.

To be fair, here in Europe, we have several shops which still have stock.

Microcenter is the best. It’s the only place around here {just narrowed down geographic location} to really brick and mortar shop computer stuff. Radio shack, Compusa, and Circuitcity are all gone. However there is/was one small unadvertised electronic (b2b focused shop that allowed consumer purchases) building in the industrial spot of town. They have/had bin(s) after bin(s) of parts [have to see if it still exist]

Micro Center says they are coming tomorrow (November 2). You will be able to buy ONE at the $15 price. Additional units will cost more: $25 for units 2-5, $40 for units 6 and up.

They rarely have new Pi models on release day; it probably takes them a bit of time to move them through their system, plus they want to have enough on hand to give people a decent chance of getting one that first day.

It’s a customs thing. They need the compliance docs, and those only get released publicly on release day, so it then takes a few days to get from the warehouse to the suppliers.

Pre-ordered from Sparkfun.com, got shipped this week and should be on my doorstep tomorrow.

Looked at the Micro Center web site today. The store near me (Cambridge MA) is listed as sold out. Every store except Columbus OH appears to be; that’s the one near their headquarters. Either the other stores sold through their first shipment quickly or they haven’t received them yet.

ASL – Arithmetic Shift Left, and about 254 of his relatives. My 1980 version of the rPi was a ZX80, Times Sinclair. Hard-wired in a keyboard, 16K memory, and an interface board with bi-directional bus driver, 8-bit A/D. Picture this, a 8 bit O-scope on a 12” B&W TV. We had to do synthetic programming to get past the native Basic interpreter. Long term storage was via a cassette tape player and audio cables.

Nice work. :) This is an excellent bit of kit. :)

Not sure why people think 512 megs is not enough. I have a Zero 1 running an HMI on my desktop (with a 7″ display). It displays images from a weather website, fetches data from some remote devices using MQTT and displays that. And displays my calendar events for today. Also displays some air quality data from sensors attached to its GPIO. Currently using 88 MB of RAM.

And in my day, 48K was a lot of memory…

I have programmed, and run apps, in 4K.

4K? Pure luxury!

When I was a kid if we want to play a video game we had to write it ourselves, and it had to fit in under 1KB memory…

+1

ZX81 :)

you had memory? luxury! in my day we had to bang bits down a nickel wire and wait for them to come back again…

Digi-Comp-1! THREE bits! And I *appreciated* it! Its design was a lot like relay logic.

A few years later (when I was 16 Years old), I discovered a PDP-8/L that only saw occasional use to perform experimental data reduction. It had 2 D/A converters and 2 A/D converters. Hooked to a TEK 545 scope with defocused beam, the little -8 could produce credible 5×8 characters (dot-matrix style). With that and a box of buttons, we used it to support the grad students’ reaction-time test experiments.

That was luxury.

My Z2W will be arriving from Adafruit in a few days.

48KB still is a lot of memory. Programmers today are spoiled but sometimes there are massive amounts of sensor data that needs to be cached. Stuff like Python is just wastefulness built on top of wastefulness but it does enable you to complete projects with haste.

I beg to differ. Serious users with serious PCs based on Z80 CPU had the full 64K very often.

Even in the late 70s. With less, CP/M was barely usable. MP/M and CP/M 3 supported bank-switching, even.

Even that modest C64 had 64KB installed and people made use of the very last byte of its memory.

For proper GEOS use, memory expansion was required, as well (REUs, GeoRAM).

But of course, 48KB are ok for a standard microcontroller project.

In past days a PC-XT had some 128-640KB memory.

The RPi Pico has 264KB memory, dual core, 32bit, and nearly 30 times higher clock-speed, so in theory it should (let’s ignore the graphics for a moment, and just connect a terminal) be capable of doing quite some computing, and not just blink an LED.

So what has gone wrong?

I would rephrase that as “what has changed”. Anyway, the answers:

1. We are no longer hand-crafting our code in assembler to minimize memory and computer time usage. We’re trading off speed and size to get shorter development time and code that is easier to understand and maintain. We’re also avoiding programming techniques like spaghetti code that can squeeze a bit more out of limited program space but lead to difficult to maintain code. Computing power has gotten much cheaper so that is usually a good trade.

2. We’re asking our processors to do more. Applications like software defined radio (SDR) eliminate analog components at the expense of doing a lot of computing. SDR can be less expensive to build and perform better than analog radio designs here and now, but it requires amounts of computing power that would have been prohibitively costly in the past.

3. Graphic user interfaces. They use lots of system resources, both for code to handle the interface and for the visual assets to draw the GUI. Even microcontrollers are often called on to create visually rich interfaces on a graphics LCD or OLED rather than simple text on a character LCD.

“1. We are no longer hand-crafting our code in assembler to minimize memory and computer time usage….”

No, but I am not talking about programming the Apollo AGC, but a “plain” PC, with an (not the best, but..) operating system, and the possibility to run program in Basic, Pascal etc.

And all that was absolutely possible with a PC-XT, even running spreadsheets and text editors.

“2. Applications like software defined radio (SDR)….”

This is totally irrelevant in the context.

“3. Graphic user interfaces.”

I said: “lets ignore the graphics for a moment, and just connect a terminal”.

But besides this, there should be no problem in handling graphics if we just do have a little bit more memory,

VGA with 256 colours requires 300KB, XGA requires 768KB.

So a Pico with 1MB should be safe sailing, and could even utilize that one processor could do all “the stuff”, while the other did handle graphics.

“1. We are no longer hand-crafting our code in assembler to minimize memory and computer time usage…”

A PC-XT did have an operating system, and was capable of running languages as Basic, Pascal, etc, and utilizing text editors and spreadsheets – even on systems with only 256KB it was not necessary to hand code in assembler.

“2. We’re asking our processors to do more. Applications like software defined radio (SDR)…”

I can not see the relevance of SDR in this context.

“3. Graphic user interfaces.”

As I said: “lets ignore the graphics for a moment, and just connect a terminal”, but even if we speak about 256 colour VGA (300KB) or XGA(768KB) it could easily fit if we had 1MB to play with.

And as the Pico has two cores, one could deal with graphics only.
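The framebuffer figures quoted in this exchange are easy to check. A quick sketch, assuming VGA means 640×480 and XGA means 1024×768, both at 8 bits per pixel with a single buffer:

```python
def framebuffer_kib(width: int, height: int, bits_per_pixel: int = 8) -> float:
    """Size of one uncompressed framebuffer in KiB."""
    return width * height * bits_per_pixel / 8 / 1024


print(framebuffer_kib(640, 480))    # 300.0
print(framebuffer_kib(1024, 768))   # 768.0
```

With a hypothetical 1 MB (1024 KiB) of RAM, an XGA buffer would leave 256 KiB for everything else.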

To quote Eben Upton: You can do “pretty much anything else that you want to do, as long as it isn’t running that one, horrible, memory-hungry, processor-hungry application.” (The web browser.)

Preach it, brother!

Hi, Richard. Can you send me your design? Those are all the sensors I need every day!

I would like to know more about this, are the details somewhere?

Otherwise, what screen are you using? Total power consumption? Software involved? Thanks!

I just popped into one of my PI Zeros that is just operating a relay and monitoring a redis.io server… It is using a total of 31MB. Yep swimming in RAM. Way overkill for what I am using it for, but hey it is a $10 part … For that price, we can use them for anything we like from tiny projects to quite a bit larger projects.

Your RPi, running your software and OS only uses 31 MB? Do tell which operating system your program runs under…

PI OS server.

pi@rpi0w25:~ $ free -h

total used free shared buff/cache available

Mem: 432M 31M 285M 5.7M 115M 345M

Swap: 99M 0B 99M

Linux rpi0w25 4.19.66+ #1253 Thu Aug 15 11:37:30 BST 2019 armv6l GNU/Linux

DietPi v7.7.3:

# free -h

total used free shared buff/cache available

Mem: 475Mi 51Mi 343Mi 1.0Mi 80Mi 373Mi

Swap: 1.5Gi 0B 1.5Gi

My DietPi base system on a Pi Zero 2W shows less used RAM:

total used free shared buff/cache available

Mem: 427Mi 25Mi 326Mi 1.0Mi 75Mi 350Mi

“Not sure why people think 512 megs is not enough.”

Possible explanation: Because of Linux (Raspberry Pi OS/Raspbian). Like any *nix, it’s a memory hog.

Everything is a file, also, you know. So any of these tens of thousands of files used as config files, devices,

processes, links, temporary files etc. require an allocation unit (512 Byte to 4KB) for a text file, even if it is empty.

Thus, it’s better to have a RAM Disk to unload this mess onto than to wear out an SD card, whenever possible. :)

I’ve never found Linux to be a memory hog vs any os with equivalent functionality. Nor have I observed undue SD wear, for any reasonably correct installation.

Try using a pure-CLI environment sometime. If that’s not possible, try using a setup with just Xorg, no desktop environment or dbus. Dbus itself isn’t bad, but most of the really low-efficiency software assumes dbus will always be available.

When I’m doing deeply-embedded stuff with linux, I don’t find that it is a memory hog, or that it wears out sdcards or flash storage. In fact, most of the time, my systems go days between io-write events, unless they are acting as data-collectors. Most of these systems use a busybox memory-ring implementation of syslog, but I’ve also built images that use sysvinit and rsyslog. One such system had been running (on the same SD card) since 2016, with greater than 99.99% uptime and minimal SD wear. It has since been taken offline because the industrial monitoring system it managed was no longer needed.

Even for normal console-mode or xorg+xterm usage, there’s just not that much write load, as confirmed by iotop, atop, iostat and friends.

Start a browser (or app based on electron or similar), and all that changes. It’s not the *unix* that’s being the hog, it’s all the piggy apps. And my experience is similar on Windows, Windows IoT, etc. Embedded and non-graphical configurations generally have a low (or nearly zero) io-write load unless the software is actively storing things; desktop has a greater ambient write load; start a browser and open a few tabs, and all bets are off.

Ok, let me put it this way:

To my understanding, unixoide systems do have the strange conception that “free memory” equals “wasted memory”.

These systems will allocate all resources for their own first,

before they (somewhat hesitantly) give them back to applications needing them.

And then there’s memory fragmentation, among other things.

Since *nix systems are heavily based on C/C++ code, or rather, being compiled by C++ compilers, they do suffer from the whole allocation issues (malloc etc).

Some examples :

https://savvinov.com/2019/10/14/memory-fragmentation-the-silent-performance-killer/

https://stackoverflow.com/questions/37186421/memory-fragmentation

That being said, I do value Linux as a set of drivers.

Many frameworks, I assume, such as Android, do use Linux as foundation because of this.

Just because your work consumes 88 MB of RAM doesn’t mean everyone else’s does. A lot of ROS stuff requires vastly more memory than that, which is trivial on a cheap desktop / laptop system with 4 – 8 gig of ram, but requires a lot of thought and sacrifice on a system with 512 MB.

The low memory is the reason why I run DietPi on my Pi Zero systems. It uses less memory than Raspian OS Lite, i.e. it runs the applications better/quicker due to more RAM for caching available (which can be seen very good in htop).

See also https://dietpi.com/blog/?p=888 for details.

Can U upload a video on YouTube and “slowly” show what devices feed what data to your 0W2? Also a “slow” view of the 0W2 setup/wiring, and the software required? I would like to give my wife one display like that. I’m not tech but can follow any video with a clear view of the wiring, software required/source websites, etc. (Handicapped – it’s the only way for me to grasp how to do tech things. Very capable, as I automated 7 jobs on a large server writing code I learned from videos!) Just need a good video source for a chance to do it.

Bless you!

RPI is like apple. When they release something people start drooling. Unlike apple, RPI cannot keep anything in stock. So it’s really pointless to use them from a manufacturing standpoint.

They can’t keep them in stock retail, but I’d imagine if you were to approach them and hand them a check to produce YOU some quantities of Pi then they’d be much more capable to satisfy your order.

All Raspberry Pi products have had limited availability at launch. It’s not just at retail; distributor inventory is also limited or nonexistent. That’s mostly OK because the early buyers are presumably mostly buying for personal hacking and for prototyping rather than volume production. Eventually they catch up and the boards become readily available; at that point it’s easy to get enough to design the Pi into a production device, something that may make sense if you’re designing something that is made in the hundreds or thousands. If you’re making something in millions you’ll want a more custom solution, because now you’re at the point where spending additional development time to get unit cost down makes sense.

The situation is a bit different than with Apple products because there are fewer volume buyers for those; most of the demand is for personal use. Availability can be an issue for corporate IT departments; they might have to wait a few months before rolling out a new Mac company-wide. But usually they only want a couple for the IT department to test at launch, with the volume order coming later.

To be like Apple they would have to lock you into an app store with terrible anti-coder, anti-hacker, anti-maker, anti-tech app policies such as no interpreters, emulators or ways around their jail. Just a tool to keep the masses tech-illiterate eager spenders of cash.

And since when was being a component for manufacturing part of the purpose of a Raspberry Pi? They were originally intended to teach kids to code and sort of turned into a maker toy. They are to other computers what Arduino is to other micro controller platforms.

So basically the exact opposite of the evil IQ reducer from Cupertino in every way that matters.

Hilarious, just open a terminal window and MacOS is just another unix workstation, no different from the platforms from Oracle IBM HP etc that run the internet.

People forget that free software could not have been developed without these closed source unix workstations. Gcc, emacs, the entire free software stack was developed on Solaris and HPUX.

Full and certified POSIX API is available. Full development environment is available for free. Golly they don’t just blindly run executables you’ve downloaded, good on them.

Meanwhile raspberry pi has no hardware documentation, no schematic, no source code, limited availability.

> Gcc, emacs, the entire free software stack was developed on Solaris and HPUX.

GCC and Emacs were originally developed under 4.2BSD on a VAX. The Sun and HP machines came along later and their main attraction was that their hardware was faster, not that their software added anything. 4.2BSD itself was almost entirely free software. The proprietary bits were later removed, resulting in FreeBSD, OpenBSD, etc. Anyway there were no serious dependencies on proprietary software in those GNU programs. In principle it could have gotten started on, say, ITS or Lisp machines, but VAXen were more readily available.

Wow, you’re secretly a glass half full kind of guy, huh? I suppose SOME closed source system must have existed in a state so miserable as to make coding open source alternatives look preferable. It didn’t have to be UNIX, but it was. I bet if it hadn’t happened oh so long ago with UNIX it probably would have happened under Apple or the now-Apple-copycat: Microsoft.

Pi hardware is indeed closed, with only limited schematics. Enough for 99.9999% of users. But the software is 99% open, so I have no idea at all why you think there is no source code. We are also actively reducing the reliance on the firmware blob (the 1% closed source code). We also have a fairly comprehensive datasheet on the HW; some bits are not able to be published, sadly, but it covers a lot.

The future is mobile devices and mobile/desktop convergence. It doesn’t matter what you can do in OSX. It matters what you can do in iOS.

In iOS you can’t run anything that doesn’t come from Apple’s store and Apple’s store policy is not to allow any sort of compiler, interpreter or emulator so that pretty much just means dumb consumer mode with no opportunity to make, create or hack on anything.

And no, limiting one to only run their approved code on the hardware you have purchased is NOT good. Offering a regulated store, even making the user change a setting or OK a popup that informs them they are putting their safety in their own hands when they go outside the store is good. Forever locking them out of their own hardware is bad.

Also, no, you cannot (in any practical sense) get a free development environment for iOS that runs in OSX either. Sure, you can get XCode for free but you can’t get anything you write in it to run even on your own physical device that you purchased without buying into their $100/year dev program racket.

Who wants to write iOS code just to run it on a desktop in a simulator?

“since when was being a component for manufacturing part of the purpose of a Raspberry Pi? ”

Since it says so on their web site:

“Our network of trusted, hand-picked Approved Design Partners can help you design Raspberry Pi computing solutions into your product. “

They do make components that are intended to be used that way. The Compute Modules and the RP2040 microcontroller chips are examples. That doesn’t mean that everything the Raspberry Pi Foundation makes is automatically “a component for manufacturing”.

“And since when was being a component for manufacturing part of the purpose of a Raspberry Pi?

They were originally intended to teach kids to code…”

Ohh the old we-are-so-good-we-are-only-doing-it-for-the-kids story again.

But in reality, professional customers primarily, secondarily hackers, are those who makes it all possible from a financial viewpoint.

And maybe the really big customers do have a chance with the Foundation, but the smaller professional customers, who maybe are using a few hundred units per year, are treated like stepchildren.

So we very much appreciate your money, but besides that you can go to hell.

And remember, a lot of the software success is due to the fact that lots of supporters are writing OS stuff.

From a manufacturing standpoint, you should probably just be buying the SOC and putting it on your PCB directly. Maybe use something like the RPI compute module if you’re doing a really small production run and don’t have the resources to properly integrate the SOC. If you’re relying on what is basically a consumer-oriented PC for your product, something is off.

As for the fanbase, it’s largely deserved. Their products are pretty easy to use and have good documentation. The 4B also happens to be best-in-class in terms of performance vs price. As someone else mentioned, they do absolutely nothing to “lock you in”, save for the proprietary Broadcom chip quirks resulting from cost-cutting measures. Plain Debian runs fine on Raspberry Pi with a slightly modified kernel. RPI users generally have no problem at all going to other boards, and you can find other boards almost everywhere you can buy a pi.

Depends on production volume. Many products are only made in the hundreds or thousands; in that case, designing in a production SBC like a Raspberry Pi is often a better option than designing an SoC directly into your product. The savings in engineering time more than compensate for the possibly higher unit cost — and often the unit cost isn’t even higher because the SBC maker can get better pricing on the SoC than you can.

Once you get up into making something in hundreds of thousands or millions, it’s time to look at designing in an SoC directly. Or if you are designing for an ultra-compact space or for long term battery operation, you may need to do that.

You should probably also do the math for the SoC with some sort of multiplier so you can stock extras. Depending on the product it might be vital to buy and stock thousands of extra SBCs. If you’re building a 100k$ industrial machine that uses a pi compute inside it or a 3B or whatever, you better be able to replace that board if the one in the device fails!

The sad thing is I’ve already seen this happen with non-raspi products. The manufacturer uses some off the shelf SBC and then the company making the SBC goes bust or moves on to a new and incompatible design. And suddenly owners of the 100k$ machine are running to ebay to snatch up used boards for backup (or totally redesigning the machine and a new board and controller for it. That was a friend’s CNC machine).

It was designed with filling an education gap in mind. That is why they don’t do all these things some people complain about at every new board premiere.

It would be cool if iPhone was as easy and intuitive as RPi with raspbian.

“So it’s really pointless to use them from a manufacturing standpoint.”

The 1st RPi Zero was produced at a loss but in absolutely insufficient quantities just so that they could write $5 on the advertising and take away buyers from the competition, while limiting the damage by producing very few boards; the number of people who wanted to buy one and couldn’t without paying 3x-4x for it, bundled with unnecessary stuff, had been high for years. That was the reason I stopped thinking about the RPi for anything but media playing.

But the real reason the RPi shouldn’t be considered for manufacturing is that there’s no source for their CPUs. Once you test your code on a Raspberry you probably want to move it onto a dedicated board with the form factor, devices, I/Os and ports you need, so it’s perfectly normal to buy the processor and build your own project around it.

Well, you can’t simply because Broadcom won’t sell you them alone, no matter how many you’re willing to order, while other CPUs are not only readily available but also more open and better documented.

That makes the RPi an excellent product for teaching about embedded electronics and Linux (and a very good one for making nice media players) but I wouldn’t consider it for anything where a line production is involved.

Oh, and I know there are many “fans” over here ready to jump at the neck of anyone who criticizes the RPi. Whether their mission is genuine or not, I hope to see valid counter criticism.

Nope, there’s a healthy number of us who dislike the business practices of the RPi Foundation. Even if we like to use their products for some things, they do some sketchy advertising and pricing at the very least. I’ve complained about their dishonest price advertising on just about every product release article I’ve come across. I’m not going to bother with it anymore simply because I don’t think they’re even going to release this thing in Japan. It’ll probably require parallel importing, so will of course cost more than anything they advertise.

Don’t live in the wrong place (though I’d argue that, from what I know of Japan and the nearby Chinese market, you shouldn’t be short of much, much cheaper options – so you are probably in the right place to use something else!). I’ve never paid more than advertised, nor have a great many others – I don’t get pissy when something I want coming in from Asia ends up rather more expensive than I’d like thanks to import shipping and duties; it’s not the seller’s fault that my government decided it needed a heap of money out of me too for the privilege of buying something I couldn’t get here…

And yet RPI sells millions of devices into manufacturing, so lots and lots of people do not find it pointless at all. Do not conflate initial launch volumes with the volumes available on long term products. We’ve sold 7M devices this year, in the midst of a chip supply crisis, and it’s not all to makers….

Raspberry pi is like the old Yogi Berra joke: “millions of people have bought them but they are unavailable” it’s like saying nobody goes to baseball games because they sold all the tickets and you can’t get one.

I think this is a result of the internet. It seems like everyone has one because every news site or post about projects using them essentially advertises them, hypes them, and makes them seem ubiquitous. The truth is that they’re not quite as plentiful at launch as we are led to believe. They are somewhat unobtainable until the hype dies down and the manufacturing can catch up to the demand. During those first months, my guess is that most of the sales are to scalpers or resellers who bundle them and make them further unobtainable. The number of people who get them to actually use personally is probably rather modest, but not unheard of.

200,000 Zero 2’s available at launch (worldwide), and don’t forget the chip supply crisis everyone is going through. We actively try and stop scalping, but it’s pretty difficult. Some resellers are limiting to 1 per customer, which does help get them into the hands of more people.

Nice Work!

Should have mine Friday. 512M is really overkill. As above I remember the days with ‘a lot’ less memory to work with. And a lot less horse power. And look what you can do with the Arduino with a lot less cpu/memory resources…. The Zero is not a desktop machine. Let the PI4 handle those chores. Mine all run headless. SSH is the user ‘interface’.

[quote]pointless to use them from a manufacturing standpoint.[/quote] On the other hand, since they are ‘sold out’ most of the time, it means the world is consuming all that can be produced which is a good thing. Not saying that we’d like to see them always ‘in stock’ of course, but …

It is interesting that the Raspberry pi developers had to sign non disclosure agreements in order to get linux running on the platform, but the developers who got Linux running on the Mac did not sign any non disclosure agreements.

That is somewhat interesting, but without looking up the facts, I’d wager that’s because the Linux devs for the Mac reverse engineered it, and the RPi devs got the data from Broadcom. I think it should be possible to reverse engineer the Pis without signing NDAs, but since the work would have little immediate payoff, finding people skilled and motivated enough to do so would be the harder task.

Why is that interesting? Presumably you are talking about the closed source firmware from Broadcom requiring an NDA? Also note that at the time of the first Pi launch, most of the people who had worked on the Pi were actually working for Broadcom anyway. Not now of course, that was 10 years ago.

There are some brave people reverse engineering and producing their own firmware, but TBH, given the official firmware just works and the vast majority of people have no interest other than “It works”, it’s a lot of work for little benefit. And we are actively moving away from firmware anyway (via KMS, libcamera, V4L2 etc) so the platform is getting more and more open source.

You say the closest competitor is the 3 B+, what about the 3a+? The 3a+ is like a zero 2W on bigger footprint and with 1.4GHz. I use it for my 3D printer with octoprint and Klipper software.

Agree, it would have been interesting to add the 3a+ to the mix – it is the red-haired step-child of the RPi family, often overlooked.

The most interesting aspect of the Pi Zero 2 W is its form-factor along with its increased performance over the Pi Zero W – a drop-in processing boost for Pi Zero W applications.

I agree that it would be an interesting comparison. That would tell us something about how much power is used by the additional components on the 3B+. If there is much difference I would expect the Ethernet MAC and PHY to be the main contributors, though the additional 512 MB of RAM will also be a factor. The extra USB ports shouldn’t matter much unless something is plugged into them.

Nice, but I can’t convert those benchmarks into practical use metrics.

Can it run Kodi, maybe not at 4K but maybe 1080p, while powered from a standard 5V, 500mA USB port?

How about RetroArch, maybe up to PSX?

Since the much, much slower original Pi/Zero can do Kodi alright at 1080p (with a few caveats), this will be able to as well.

As for what you can emulate on it… Can’t really help you there… Though the much slower original Zero has been used for such things a lot, so expect at least good performance on anything the Zero struggles with.

Don’t know about Kodi per se, but they recommend a 2.5 amp 5v supply for the zero v2, vs. a 1.5 amp supply for the v1. That made the v2 a lot less interesting for me. Besides being more power hungry it will probably want active cooling, especially in a tiny enclosure. It’s more media-playerization of everything. Nothing against Kodi but the bigger boards seem fine for that.

Ok, thanks guys. I was looking for something I could power straight from the TV USB port without needing yet another PSU/spare socket, which would also mean that I wouldn’t have to worry about turning off/unplugging the Zero when I switch off the TV. I guess I’ll have to wait for even more power efficient chips before that happens then!

It might work on that TV just fine. Looking at the article, it seems that under stress it’s about there – technically still under the limit, so it will probably just work. But not all USB ports will actually meet spec… Still, I’d say if that is your goal it’s well worth a try – Pis are pretty good at dealing with a slightly inadequate supply now, and by the graph above a 400mA draw seems to be about as high as it will actually go…

>closer-to-negligible 45 mA zombie draw

That’s terrible for battery powered applications, unless you mean a car battery. You have to charge it every two days just to stay idle.
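To put a rough number on the “every two days” claim, here is a back-of-the-envelope sketch. The 45 mA figure is the zombie draw quoted from the article; the 2000 mAh capacity is a hypothetical battery picked for illustration. It also assumes the capacity is quoted at the supply voltage and that conversion is lossless, so a real pack behind a boost converter will do somewhat worse.

```python
def runtime_hours(capacity_mah: float, draw_ma: float) -> float:
    """Ideal runtime in hours: capacity divided by constant current draw.

    Ignores regulator losses, self-discharge and voltage sag.
    """
    if draw_ma <= 0:
        raise ValueError("draw_ma must be positive")
    return capacity_mah / draw_ma


ZOMBIE_DRAW_MA = 45.0          # quoted in the article for the halted board
ASSUMED_CAPACITY_MAH = 2000.0  # hypothetical battery, not from the article

hours = runtime_hours(ASSUMED_CAPACITY_MAH, ZOMBIE_DRAW_MA)
print(f"{hours:.1f} h, about {hours / 24:.1f} days")  # 44.4 h, about 1.9 days
```

So a pack in that size class does indeed go flat in roughly two days without the board doing any work at all.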

If you want it to run ‘forever’ (over two days) add a small solar panel to your design.

If your design requires a quad-core CPU running at GHz speeds (not MHz), the power consumption is modest – if your needs are much lesser you can find more suitable architectures elsewhere.

I suspect most RPi applications rely more on the fact the board runs ‘standard’ linux, includes a wide-array of I/O devices (wifi, bluetooth, ethernet, usb, camera, display, and GPIO pins) and the designers just work around the boards power consumption issues as a cost of using a part with all that potential.

That’ll work well for devices that are designed to be used indoors, or spend most of their time in a pocket etc.

45 mA is a big ask for a small solar panel as well. A palm-sized 1 Watt solar panel gives about 150 mA in full sunlight, about 15 mA average around the clock, and 1.5 mA or less under room lighting.
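Taking the panel figures in this comment at face value, a quick sketch shows how many palm-sized panels it would take just to cover the 45 mA zombie draw in each lighting condition. It assumes panel current and load current are at the same voltage and that storage is lossless, which flatters the panels.

```python
import math

LOAD_MA = 45.0  # zombie draw quoted from the article

# Output of one palm-sized 1 W panel, as stated in the comment above.
PANEL_OUTPUT_MA = {
    "full sunlight": 150.0,
    "round-the-clock average": 15.0,
    "room lighting": 1.5,
}


def panels_needed(load_ma: float, panel_ma: float) -> int:
    """Smallest whole number of panels whose combined current covers the load."""
    return math.ceil(load_ma / panel_ma)


for condition, panel_ma in PANEL_OUTPUT_MA.items():
    print(f"{condition}: {panels_needed(LOAD_MA, panel_ma)} panel(s)")
```

On the round-the-clock average that works out to three panels before the board has even booted, and thirty under room lighting.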

For sure! I do most of my projects on microcontrollers, so I’m looking at anything that isn’t microamps and going “that’s too high for standby”. And this isn’t even standby. This is “you need to physically unplug the thing” to get around it.

But what is up with that power draw on a shutdown Pi 4? What the heck is going on there?

Thanks for the article. I would be really interested to know where the 3A+ stacks up among the various Pi versions. I guess it would be somewhere in between the Zero 2 and the 3B+, but it would be good to know exactly where.

Sorry about that — I just don’t have a 3A+ in my stash.

The Pi 3 is actually my Octoprint server. I had to pull it out of service in the name of science. (Old SD card in, it’s already back to work.) The old Pi Zero is from a motion-detection camera setup that we had on our hedgehog house, but they didn’t move back in this year, so that was easier.

thanks for the current draw figures! this has been my biggest real surprise / disappointment with my pi 4b, i really did not expect it to be drawing 350mA idle, especially given how many purported battery-powered projects use them. it seems like the zero 2W’s 100mA idle is closer to the level of performance where battery powered at least becomes plausible…i still wouldn’t call it good but maybe “great for the price”.

that zombie draw, though…jeesh. the only realistic way to put *any* of these devices on battery power in a practical scenario (like a laptop) is if you have a separate real embedded processor (PIC or STM32 or whatever) that is in charge of cutting the power and managing wake-up events. or a hardware switch! drawing 45mA, i’d expect it to keep one core clocking at 100MHz, or at least suspend-to-ram. even on the zero 2W, every portable project is gonna be beating their heads against that wall, wishing they had an intermediate suspend state between “idle” and “physically switched off”

For low idle power you need the ability to power down peripherals that are not in use. Raspberry Pi OS doesn’t have that; I don’t know whether the SoC supports it. Another thing you need to be able to do is to shut down one or all of the processor cores and have them wake up on events; again, not something that RPOS does.

So what you’re saying is, this is a typical Linux HAL power management issue where they started writing the drivers and half-way through went, “OOhhh shiny new project! Let someone else finish this…”

heh if only! :) the system management firmware that runs on the proprietary core is all closed source so no one else can finish the work, and they do intermittently update it so that any reverse-engineering work gets punished with the next update.

No. It’s more like “the ability to minimize standby power is not important for our primary intended use case so it’s not a development priority for us”. The Pi is not marketed as a board for battery powered devices; idle power of 1.5W isn’t a big deal for something that is plugged into wall power.

Unless you want to get Energy Star certified. That’s a serious zombie load.

You can power down much of the soc manually. I disable USB on demand and it really reduced power consumption in my use case… See https://alantechreview.blogspot.com/search/label/nas?m=1

Not much out there offers Pi4 computing power with lower idle power draw (a bit of declocking also helps some with the idle, though not a huge amount). So lots of battery powered projects use them because they need more horsepower than the easily sourced micros with great sleep etc. Plus it only draws power while it’s on, and boots stupidly fast – so in most of these battery powered projects there isn’t a need for lower idle either – it’s off or running hard…

Be nice if the Pi foundation did put more work in there, but it’s not like it’s what a Pi is for…

as i’ve said before, cellphones and even some run-of-the-mill x86 laptops do much better at idle power draw, while generally having better performance. that’s why the pi’s shortcoming is surprising. but i absolutely agree that for the price, it’s surprisingly usable, and there are many use cases where it doesn’t matter.

but even plugged into the wall, it’s disheartening that it idles >40C. i guess it doesn’t matter, it’s just a bummer.

If low power/sleep were easy/possible to achieve, we would have done it years ago. But it’s not. The SoC cannot really do sleep modes.

This, though. My laptop sleeps for weeks in suspend mode, and that’s keeping the RAM all powered so that it’s instant on. I’m pretty sure it’s not drawing 2W.

When it’s off, it’s off.

A bare chip of anywhere near the Pi4’s performance is going to be hot at idle without cooling… Not even the best of the x86s drop below a few watts while idling – bigger performance numbers always mean more idle draw to some extent, as even if you can properly shut down heaps of cores you still have to have some of them running, and they can’t run massively under spec to run cooler; it’s just not possible to run stably while de-clocked and undervolted that much – that is still significant heat output with no radiator etc. Even phones, which are designs that have power efficiency as, what, the number 1 or maybe 2 priority, tend to have a large metal thermal mass/radiator of some sort, helping keep thermals controlled (even if it’s just the back metal can of the screen etc)…

For reference I have a Pi4 (that I’m typing this on) – it’s got a nice lump of Al heatsink (chunk of old PC CPU cooler), and before it was put in the restrictive airflow box it ran down to damn nearly room temperature at idle. Even in the box with pretty poor airflow it stays below 40 – the highest I’ve ever seen maxing the poor thing out is 45 or so, and that is overclocked about as hard as this Pi will take. I use it as my only running computer much of the time, letting me leave my rather old and so very power hungry, but still potent enough, workstation off heaps – it’s a massive power saving even cranked up, and so far it handles just about everything but gaming flawlessly – even relatively complex CAD, though it’s sometimes noticeably slower as projects get more complex in modelling, so I will move over to the workstation for that sometimes. Most CAD it will just do effortlessly.

I replaced the Rpi Zero W in my Prusa I3MK3S with the 2W and it is really night and day running octopi. I no longer have time to make coffee while rebooting the Pi.

What performance are you getting on boot times between both of them?

I love the picture of it leaning against a rock. It makes me think of the classic “rock or something” in the US Army M.R.E. instructions =:-)

Is it possible to use the normal Linux suspend/sleep function?

I guess the Raspberry Pi Pico doesn’t count as “most efficient pi”? I think that would silence a lot of the critics in these comments that can’t seem to offer any alternatives, only hollow words. I have never had an issue purchasing any Raspberry Pi product, no issues ordering the Zero 2 W either. I am not sure why others here are challenged in this area.

Adafruit has had pi zero 2 in and out of stock over the past few days. I think they have a lot in the shop, and are releasing small quantities of them for sale a few times per day. I’m not that excited by the zero v2. There aren’t that many applications that want more cpu than the zero v1 has, but don’t want essentially bottomless amounts of cpu (video encoding, web browser etc). The zero v2 seems like a stopgap. What would be more interesting would be a much lower powered board the size of a Pi Pico, It would have a single core SOC with maybe 64MB of ram, some analog i/o like a Pico has, plus Pico-like PIO, maybe on some small (Pico-like) coprocessor cores. Ideally it could all be RISC-V but I guess RPI Foundation is deeply enmeshed with ARM so it is ok.

Ahhhh … if only the title of this article had added the words “… In The Entire Multiverse!”, I could have closed my eyes for a moment and pretended that Brian Benchoff had returned to write for Hackaday!

:-)

If it can work with the HQ camera it will be a winner for me.

I’m a tad disappointed that there’s still not a really low-power sleep/suspend/idle in any of the Pi’s, given the processors are from the mobile world I’d hope they could be made to run at a fraction of the ~500mW even the Zero seems to use.

A compute module based on the zero would also be good – CM4 footprint + eMMC, but minimal hardware/power consumption for applications where you just need a little bit of linux ;)

The processors are not from the mobile world. Broadcom’s primary business for their SoCs is for routers and similar embedded devices, not for mobile; they are found inside quite a few WiFi routers. I’ve never heard of their SoCs being used in a phone or tablet; that would be Qualcomm, which is a different company. Because of that background, it’s possible that Broadcom’s ARM SoCs don’t implement the kind of low-power standby features that you would find in a mobile SoC; their applications don’t call for them.

Why did the Pi Foundation end up with a Broadcom SoC in the original Raspberry Pi? Because the company agreed to sell the chips to them at a reduced price to support the foundation’s mission. That’s also why they refused to sell the same SoC that is used in the Pi to anybody else; they didn’t want the availability of those specially priced chips to anger their primary customers. (The ones they sell for Raspberry Pi are stripped down from their main product line in some ways, notably by containing only one Ethernet MAC; the ones for routers have two or more.)

Broadcom also makes WiFi and Bluetooth interfaces; those do get used in mobile devices. (I know some laptops use them.) The company is notoriously bad about cooperating with the open source community for those, so Linux users tend to prefer alternatives. They have other business lines as well, such as cable modems.

Broadcom router parts typically have an Ethernet switch and at least the 2.4GHz built into the SoC. These are certainly not for routers, as the RPi SoCs have very weak connectivity and not even an Ethernet PHY.

Actually, the Zero makes less sense than the 3B+ and now the 4.

The whole point of the RPi is to be a minimalist machine that runs full Linux and is usable for the tasks you expect a computer to do.

For this it needs a decent number of interfaces and bandwidth. Couple of USB2 ports, couple of USB3s, Ethernet etc.

The Zero mostly has a few I/O pins and a couple of interfaces on them (LCD LVDS, USART, SPI, I2C), and that’s it.

A simple, cheap microcontroller can do much better than this.

Horses for courses. A popular hack in one of my communities is using the Pi as a video camera, telemetry logger, and transmitter for FPV/autonomous flying. (https://github.com/OpenHD/Open.HD)

The Pi Zero was what we flew b/c of its low power draw, but it’s basically pegged at CPU 100% the whole time, which led some people to use the Pi 3, but it’s both bigger and a power hog. For this use case, the Pi Zero 2 is ideal.

I’m sure there are tons of other similar communities who are stoked about this. Tiny portable game emulators that needed just a little more grunt? Studio-in-a-box type portable music applications? Etc.

Exactly, while I don’t see this Pi as being of interest to me, it’s got a great little niche: low power draw, high efficiency calculations. It’s going to be great for lots of people who need more than the ATMega (etc) but less than the big Pi4 powerhouses – in most ways it looks to be a straight up improvement on the old Zero, and you have to look hard to find a space where the downsides show up…

It’s still a full Linux computer, just a small one – so it can do lots of things the ‘cheap microcontroller’ can’t do, and you can always pair a Zero with a micro if you really want more of x that a micro does better than a Linux box…

Actually, the RPi was never intended to be a “computer replacement”. It kind of ended up accidentally being capable of that, too.

IMHO, saying “the whole point of RPi is….” kind of misses the point of the RPi ecosystem. It’s not about being a baby desktop (although it can do that). It’s about being able to put some computing power into a small space/weight/power budget.

There are plenty of small-form-factor desktop-replacement systems out there. Very few of them offer the IO options that the RPi family offers.

The fact that it can run a general-purpose operating system merely means that in many cases you can make use of existing software or components, rather than being required to solve the whole problem from scratch.

I’ve regularly used these for monitoring door-access switches, tank liquid levels, etc, in non-hazardous environments. They’re much cheaper and easier to work with than most of the industrial controllers, and they have enough leftover power to do various “fun” stuff as well. For example, it only took about ten minutes and a couple of relays at one location to let it automatically activate the lighting in the equipment room.

Hi Elliot,

Good article!

I designed a commercial product around a Pi 4 and spent a good while optimizing for zombie power consumption. It’s worth noting that the zombie power of the Pi 4 can be reduced much further – under 1 mA IIRC – if WAKE_ON_GPIO=0 and POWER_OFF_ON_HALT=1 in the bootloader configuration, documented here: https://www.raspberrypi.com/documentation/computers/raspberry-pi.html#configuration-properties

(Make sure the bootloader and VL805 EEPROM firmwares are up to date.)
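For anyone who wants to script the change described above, here is a minimal sketch that rewrites a bootloader config text so that the two settings from this comment are present exactly once. The helper name `set_bootconf` and the sample config are made up for illustration; only the `WAKE_ON_GPIO` and `POWER_OFF_ON_HALT` keys and their values come from the comment. As far as I know, on a real Pi 4 the config is normally edited through the `rpi-eeprom-config` tool rather than as a loose file, so treat this as string handling only.

```python
from typing import Mapping


def set_bootconf(text: str, updates: Mapping[str, str]) -> str:
    """Return config text with every KEY=value in `updates` set exactly once.

    Existing assignments are rewritten in place, repeated assignments of the
    same key are dropped, and missing keys are appended at the end (which
    places them in the last section if the file uses [section] headers).
    Comment lines and unrelated settings are left untouched.
    """
    seen = set()
    out = []
    for line in text.splitlines():
        key, sep, _ = line.partition("=")
        key = key.strip()
        if sep and key in updates:
            if key not in seen:
                out.append(f"{key}={updates[key]}")
                seen.add(key)
        else:
            out.append(line)
    for key, value in updates.items():
        if key not in seen:
            out.append(f"{key}={value}")
    return "\n".join(out) + "\n"


# Illustrative config text, not copied from a real board.
sample = "[all]\nBOOT_UART=0\nWAKE_ON_GPIO=1\n"
low_power = {"WAKE_ON_GPIO": "0", "POWER_OFF_ON_HALT": "1"}
print(set_bootconf(sample, low_power), end="")
```

The function only returns the new text; writing it back to the EEPROM and checking the resulting halt current is left to the official tooling and a multimeter.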

I’m still dreaming of the e-ink ereader I could make out of one of these, so they’d have the grunt to display large images in epubs. if I could only find a screen…

Is it seriously so much to ask for a switching power supply and a board with sleep mode? It’s like we can have anything we want but the ability to suspend to ram

I bought the 2 W as a tool to learn Linux while running it with a desktop GUI. It’s too slow for any of that. Opening a browser window can take 30 seconds, as does a terminal window. It’s now in my junk box. My “Chip” single board processor actually ran as a decent desktop, although all support is now gone. The 2 W is probably great for an embedded application.

If opening a terminal is that slow you probably have a crap SD card. Even the first generation of Pis should be way faster than that; even loading a full fat modern browser shouldn’t take that long. Actually loading a web page usefully on the first generation of Pis, while possible, won’t give a good experience for most sites – the web is just too full of huge pages now. But the programs should load much, much faster than that.

Unfortunately SD card performance is often rather awful. Try booting off a slow spinning-rust hard drive via a USB caddy and it will often still be better than bad SD cards, and not much worse than the best SD cards I’ve used in real world use… If you really want to stick with SD cards, check out the Pi forum: years ago there was (so I assume it’s still there) an ever growing thread of good and bad SD cards for performance. Personally I’ve never had a bad Sandisk SD card, but every other brand I’ve tried, despite being the same class of SD card, has been woefully bad… Worth noting though that they were all cheaper end, small capacity ones – bought not really concerned for performance but just to have storage to boot the Pis off, mostly for early Pis before network boot was well documented or USB direct boot was possible.

Also possible you have some error in your boot configuration, so worth checking that out for errors – perhaps an ‘overclock’ that actually makes the pi slower or memory split between GPU and CPU setting incorrect for the use you are putting it to.

Yes, I fully agree and can confirm that: Sandisk and Samsung EVO SD cards never made trouble.

Cheap cards from China often fail after a couple of write accesses.

The Pi Zero 2 has a fairly small amount of RAM, which should be used efficiently. For this reason DietPi could be an option for handling the limited RAM better than Raspberry Pi OS does.

See here to learn about DietPi on the Pi Zero 2: https://dietpi.com/blog/?p=1058.

What is the boot time performance between RPi Zero W vs Zero 2 W?

I have not found a single comparison of boot time for the various RPi models