Our own [Dave Rowntree] started running into bottlenecks when doing paid work involving simulations of undisclosed kind, and resolved to get a separate computer for that. Looking for budget-friendly high-performance computers is a disappointing task nowadays, thus, it was time for a ten-year-old HP Proliant 380-g6 to come out of Dave’s storage rack. This Proliant server is a piece of impressive hardware designed to run 24/7, with a dual CPU option, eighteen RAM slots, and hardware RAID for HDDs; old enough that replacement and upgrade parts are cheap, but new enough that it’s a suitable workhorse for [Dave]’s needs!

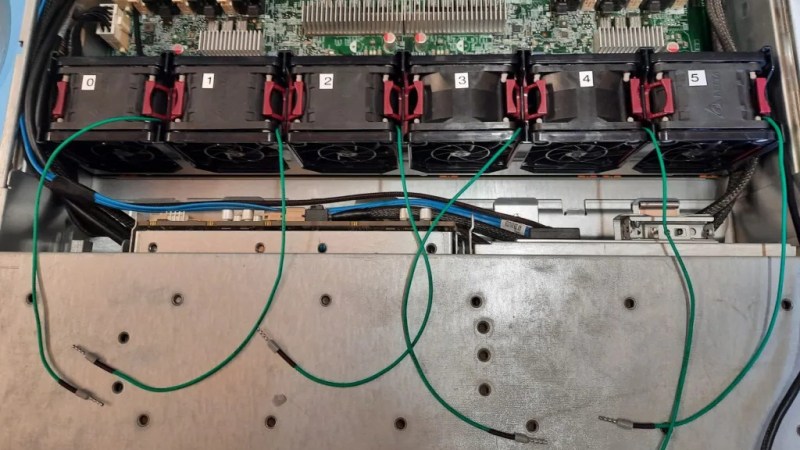

After justifying some peculiar choices like using dual low-power GPUs, only populating twelve out of eighteen RAM slots, and picking Windows over Linux, [Dave] describes some hardware mods needed to make this server serve well. First, a proprietary hardware RAID controller backup battery had to be replaced with a regular NiMH battery pack. A bigger problem was that the server was unusually loud. Turns out, the dual GPUs confused the board management controller too much. Someone wrote a modded firmware to fix this issue, but that firmware had a brick risk [Dave] didn’t want to take. End result? [Dave] designed and modded an Arduino-powered PWM controller into the server, complete with watchdog functionality – to keep the overheating scenario risks low. Explanations and code for all of that can be found in the blog post, well worth a read for the insights alone.
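To give a feel for what a fan controller with watchdog functionality has to do, here is a minimal sketch of the logic in Python. It is not [Dave]'s Arduino code, which lives in his blog post. The temperature thresholds, minimum duty cycle, timeout, and every name below are hypothetical, picked purely for illustration. The idea is simply that the duty cycle follows temperature, and if temperature updates stop arriving, the fans fall back to full speed.

```python
import time

# All constants here are assumptions for illustration, not values from the blog post.
FAILSAFE_DUTY = 100        # percent; used whenever the watchdog has tripped
MIN_DUTY = 20              # percent; hypothetical floor so the fans never stall
WATCHDOG_TIMEOUT_S = 5.0   # hypothetical: seconds without an update before failsafe


def duty_for_temperature(temp_c, low_c=40.0, high_c=75.0):
    """Linearly map a temperature in Celsius to a PWM duty cycle in percent."""
    if temp_c <= low_c:
        return MIN_DUTY
    if temp_c >= high_c:
        return 100
    span = (temp_c - low_c) / (high_c - low_c)
    return round(MIN_DUTY + span * (100 - MIN_DUTY))


class FanWatchdog:
    """Holds the last commanded duty cycle, reverting to full speed on stale data."""

    def __init__(self, timeout_s=WATCHDOG_TIMEOUT_S, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock          # injectable so the logic can be tested
        self.last_update = None     # no reading yet, so failsafe applies
        self.duty = FAILSAFE_DUTY

    def report_temperature(self, temp_c):
        """Record a fresh reading and restart the watchdog timer."""
        self.duty = duty_for_temperature(temp_c)
        self.last_update = self.clock()

    def current_duty(self):
        """Return the duty cycle to apply right now."""
        stale = (
            self.last_update is None
            or self.clock() - self.last_update > self.timeout_s
        )
        return FAILSAFE_DUTY if stale else self.duty
```

Failing towards full speed is the design choice that matters here: a hung sensor loop then costs you noise rather than cooked hardware.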

If you need a piece of powerful hardware next to your desk and got graced with a used server, this write-up will teach you about the kinds of problems to look out for. We don’t often cover server hacks – the typical servers we see in hacker online spaces are full of Raspberry Pi boards, and it’s refreshing to see actual server hardware get a new lease on life. This server won’t ever need a KVM crash-cart, but if you decide to run yours headless, might as well build a crash-cart out of a dead laptop while you’re at it. And if you decide that running an old server would cost more money in electricity bills than buying new hardware, fair – but don’t forget to repurpose its PSUs before recycling the rest!

At some point in my work life I had the task to set up a new Domain Controller for the small company I worked for.

We also opted for a refurbished HP Proliant server.

It worked fine and was silent. But as soon as we inserted a generic SSD, all of the mainboard fans turned on at high speed.

10 or 12 turbines at 12,000 rpm make quite a noise.

The solution was to buy an Intel NVMe riser card and drop the generic SSD.

This is simply a way to force the people to buy the “certified” premium products.

It’s funny, I’ve added a ton of new hardware to my Proliant ML350 G6 and it hasn’t had any issues like that. The only thing it didn’t like was the Duracell BIOS battery; after I swapped it for an Energizer one it’s been smooth sailing since I put it together back in August. Funny part was both batteries were fresh and brand new in their packs.

There is definitely some of that going on. There’s also a sort of “Hardware-Human Feedback” that some engineers try and do. I used to work w/ Dell and IBM (before it was Lenovo) servers, and while LEDs were nice there were other times/conditions where the machine would intentionally/unnecessarily spin its fans on high in order to draw attention to itself.

It’s an interesting idea of, “If the server has an error, how do we notify?” LEDs are passive, web portals require the admin to check them, or set up outbound SMTP/SMS/etc. notification, but, “Hey, let’s make the fans spin up real loud” is interesting, and (slightly) less annoying than firing off a piezo-electric buzzer.

That sounds like the downside of HP’s (much vaunted, by themselves, a few years back, not sure if it has been replaced by something else in newer marketing materials) “3d Sea of Sensors” technology.

The glorious theory was that the server would have 11 zillion thermal sensors in it to enable granular intelligent fan control and power savings and enhanced reliability and stuff.

In practice, it meant that if any part of the system that included a sensor was replaced with a non-blessed part it would go into full my-nuclear-reactor-is-melting-down mode and run all the fans at speeds just shy of putting it under FAA jurisdiction.

I was never quite clear on why it needed special HDDs, when temperature is a value that basically all SMART-capable drives report; but I assume that someone in the C suite needed a yacht.

Of course, this is the same HPE that won’t give you BIOS updates if you don’t have a support contract, so it’s not a huge surprise. I have my differences with Dell, and Supermicro is perpetually a bit rough around the edges; but at least they don’t pull that stunt.

Quote [fuzzyfuzzyfungus] : “and run all the fans at speeds just shy of putting it under FAA jurisdiction”

ILS non-compliance?

I love the “colorful language.”

Locking hardware to another.

https://youtu.be/olQ0yaB9LSk

I did the same thing this past August after my motherboard had a few blown caps. I had a HP Proliant ML350 G6 in my closet. I put in dual Xeon X5675’s and 3 8gig sticks per CPU for 48 gigs in triple channel. I swapped over my 1660Ti, sound card and both my hard drives. I’ve recently added a 2.5″ 1tb SSD and a 500gig NVMe drive using a PCIe adapter card. Windows 10 had no issues with installing or getting drivers for the hardware. This thing is an absolute beast. I do mainly 1080p/1440p gaming and it gets the job done. The temps are great and even under load it really doesn’t get loud. I’ve been designing as well as ordering parts for a custom water loop for the CPUs and GPU. I’ll be building a custom basement/stand similar to a CaseLabs SMA88 to house the dual rads, pump, reservoir, and auxiliary FSP Hydro-x PSU. This thing will give me great performance for quite some time and then I’ll pass it down to my son when it comes time to replace it.

I worked for a small business and we often bought used servers one generation back for about 1/10 of their new acquisition cost, and that was even after leaving room for the reseller to make profit. Typically the servers we got were about six months past the end of their manufacturer’s warranty. At that price point it was easy to have a few extra servers in cold reserve in case a server died.

Of course we didn’t rely on used equipment for everything. We used new high performance servers for running a cluster of VMware and an iSCSI storage system which provided the core of critical business functions.

Something to keep in mind is that servers may not have power saving capability as good as consumer grade equipment, the assumption being that servers are supposed to be running hard all the time anyway.

Make sure the equipment you’re looking at meets FCC class B RF suppression. It isn’t so common these days, but data center equipment may only comply with class A, the more lax standard intended for business environments.

Most rack servers don’t try to do much to reduce audible noise. Anyone who’s been in a data center can attest to that. If you can, check the acoustic noise level of server you’re thinking of buying. Those tiny fans sized to fit in a 1U high rack case can sound like 12 little dental drills whining away because they run at high RPM to move a large air volume. If you can find a used small business tower server, your ears may thank you. Those servers have space for larger low speed fans and they have to be quiet enough not to drive people out of the office.

Not all server power supplies are created equal. Some cheap supplies have power factors as low as 0.5 while green supplies can have power factors close to 0.9. The theoretical ideal power factor is 1.0. Power factor is an indication of how much current flowing in the AC input line is actually being used to deliver actual power to the device. Residential customers are typically not billed more for having poor power factor, but it is kinder to the planet to have a better power factor. Note that bad power factor does cause more current to flow and you are more likely to trip a circuit breaker because the breaker will react to the total current even if not all of the current is delivering actual consumed power. You can check the server’s specifications to find power supply input ratings.
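To put rough numbers on the breaker point, here is a small sketch using the standard relation that RMS line current equals real power divided by (RMS voltage × power factor). The 600 W load, 120 V line, and 15 A breaker are made-up example figures, not measurements from any particular server.

```python
def line_current_amps(real_power_w, line_voltage_v, power_factor):
    """RMS line current drawn for a given real power, voltage, and power factor."""
    if not 0 < power_factor <= 1:
        raise ValueError("power factor must be in the range (0, 1]")
    return real_power_w / (line_voltage_v * power_factor)


def breaker_fraction(current_a, breaker_rating_a):
    """Fraction of the breaker rating that this current uses up."""
    return current_a / breaker_rating_a


if __name__ == "__main__":
    # Hypothetical example: the same 600 W of real power on a 120 V, 15 A circuit.
    for pf in (0.5, 0.9, 1.0):
        amps = line_current_amps(600, 120, pf)
        share = breaker_fraction(amps, 15)
        print(f"PF {pf:.1f}: {amps:.2f} A, {share:.0%} of the breaker rating")
```

With these example figures, the supply at a power factor of 0.5 draws 10 A for the same 600 W that needs only about 5.6 A at 0.9, which is exactly why the poor supply gets much closer to tripping the breaker.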

Mine is an ML330 G6. It isn’t silent by any means, but I enjoy the rush of quietly spinning fans. Like most HP servers, the initial spin-up sounds like a (small) jet taking off.

Refurbished used parts are, generally, dirt cheap, and running Win 7 on it is good enough for my needs. iLO means I never need to touch the power switch in person (lazy, or what?) and it is tolerant of non-HP add-ins.

As Bill says, rack servers are, by their nature, noisy. Tower servers (usually intended for small businesses) are typically less so. Likewise, small business servers usually have a better power factor.

Aside from the power supply considerations you mention; the really critical thing in domesticating servers is how touchy they are about their fans.

1U for anything of nontrivial power is just a no-go for operation near humans; but some systems are fairly easygoing about being modified to use bigger fans and more airflow, so long as fans are still present and no thermal sensors trip. Others are some combination of unbelievably obnoxious about proprietary pinouts and (worse) have very specific ideas about what the correlation between their PWM input and the fan’s tach output ought to be; which is great for diagnosing fan failures; but means that switching in a fan that doesn’t spin at between 10 and 15 thousand RPM requires very careful lying to the fan controller.
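As a back-of-the-envelope illustration of the mismatch involved, the sketch below compares the tach signal a controller might expect with what a slow replacement fan produces. It assumes two tach pulses per revolution, which is common for PC fans but worth checking for any given model. The 12,000 and 1,500 RPM figures are hypothetical, and how you would actually generate the faked signal is not covered here.

```python
PULSES_PER_REV = 2  # assumption: typical for PC fans, verify against the fan's datasheet


def tach_hz(rpm, pulses_per_rev=PULSES_PER_REV):
    """Tach pulse frequency in Hz for a fan spinning at the given RPM."""
    return rpm * pulses_per_rev / 60.0


def tach_multiplier(expected_rpm, actual_rpm):
    """Factor by which the real tach frequency would need to be scaled up."""
    if actual_rpm <= 0:
        raise ValueError("actual_rpm must be positive")
    return expected_rpm / actual_rpm


if __name__ == "__main__":
    expected = 12000   # hypothetical speed the controller expects at full duty
    actual = 1500      # hypothetical large, quiet replacement fan
    print(f"controller expects about {tach_hz(expected):.0f} Hz")
    print(f"replacement fan produces about {tach_hz(actual):.0f} Hz")
    print(f"signal would need scaling by {tach_multiplier(expected, actual):.1f}x")
```

Keep in mind that faking the tach also throws away the fan failure detection it was there to provide, so a stalled fan would go unnoticed unless you monitor it some other way.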

I have a 15 year old dual Xeon 128GB “desktop server” from Dell with a RAID that is extremely reliable. One of the differences between “a fast computer working as a server” and an actual server is, IMO, ECC RAM. No serious server should be without it. And they are just built better, with all ball bearing fans, overkill airflow, better power supplies, etc. But I wonder what the long term effects are on my hearing!

One of the first things I ever do with a 2nd user (i.e. used) computer is to take out the fans, open the end of the bearing and put in a drop or two of sewing machine oil (no waxing or varnishing) – and once closed back up, the fans are usually good for another 100,000 hours of service.

Even on relatively quiet fans, the relubrication can make a difference. Ideally, you hear the rush of air rather than the whirr of the fan blades.

ECC RAM is a must have for *anything* mission critical, non-ECC RAM is fine for most other things.

What few people realise is that a dedicated RAID controller should handle striping and mirroring without putting any additional load on the processor – and a goodly amount of RAID controller cache helps that right along.

I found that adding a separate graphics card (and not a high-end one, either) made an appreciable difference in system speed, just by the mainboard being able to pass off most of the graphics functions to what is essentially a separate CPU.

Their ability to dump the framebuffer into a remote management interface at the same time they paint it on your video-out is appreciated; but the ASpeed GPUs that you normally find in servers make Intel Integrated stuff from years back look screaming fast.

You wouldn’t really want to waste money on more power for something that is basically intended only for initial OS install and the occasional crash-carting scenario; but if you actually want to use the server as a workstation I don’t think that there’s a PCIe compatible GPU you can buy that would fail to be an upgrade.

I only put it in so that I could get a remote desktop connection that wasn’t just a mess of low-res jaggies. Much nicer for doing remote admin than a CLI – especially given my tendency toward sausage fingers.

It actually sped up the system even running in headless server mode (i.e. not logged on) – I’m sure there’s a lesson right there.

Dual GPUs do not “confuse” the firmware at all. iLO has full management of fans via PWM and controls the speeds based on input from their “sea of sensors.” If you are adding hardware components that are not “approved,” the machine will run the fans excessively. I have had multiple HP servers and the firmware fix has never caused an issue on any of them, although the fans did still run slightly faster. In my ML350P, I currently have an RX580 and a Mellanox 10 Gig card and with the modified firmware fan speeds have increased from 11% to 18% at average load.

“If you are adding hardware components that are not “approved,” the machine will run the fans excessively.” yeah, that’s what I mean by “confuse”, it isn’t programmed to know any better than spinning fans at 100%. Gotta keep my articles short and to the point!

I’m not having that issue at all with my Proliant ML350 G6. I’ve added a ton of new hardware, as the only original parts are the motherboard and the dual PSUs.

Most HP servers will monitor current load on the PSU, and will preemptively adjust fan speeds to account for the additional load. Sometimes, a device will report its load as being the maximum load it can apply rather than what it is actually drawing. Result: fans at 100%.

I’ve managed to get my hands on a base model HP z440, upgraded the CPU to a Xeon E5-2630 for $40usd, 8gb ECC chips cost about $35usd each and it can take a total of up to 128gb. All for an absolute steal – The real bonus, being a workstation, I get all the nice desktop comforts (low noise, small size) with some really nice server comforts (24/7 runtime validation, ECC etc). And being older hardware, circa 2016, it’s all just out of warranty.