[SpookyGhost] has a big home network and has taken cable management and server organization to the extreme. He has written about individual components before, but this blog post brings it all together and reviews the entire system. The networking gear lives in a closet, mounted in a 25U, 19-inch rack. From top to bottom, here is a brief list of the gear:

- Keystone patch panels

- pfSense Firewall / Router

- Two Cisco Ethernet switches

- Redundant internet connections

- Shelf of numerous servers

- RAID-Z2 media storage, twelve 8 TB SCSI drives

- NAS RAID, six 4 TB SAS drives and two 800 GB SSDs

- Video Management System, 48 TB storage

- UPS and power distribution units

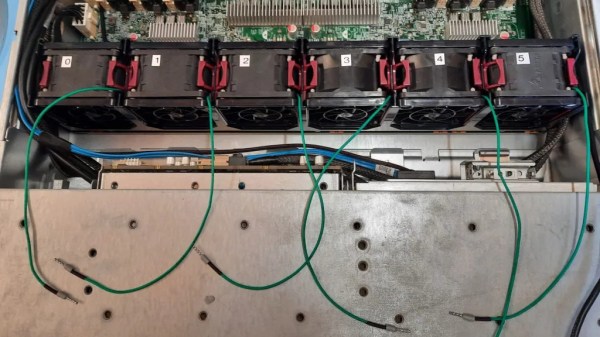
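For a sense of scale, here is a rough capacity estimate for the RAID-Z2 media pool. It is a back-of-the-envelope sketch that assumes all twelve drives sit in a single RAID-Z2 vdev, which the post does not actually say. RAID-Z2 spends two drives' worth of space on parity. Real ZFS usable space will come in somewhat lower once metadata and allocation overhead are counted, and it shrinks again when an OS reports it in TiB rather than TB.

```python
# Rough usable-capacity estimate for a RAID-Z2 pool.
# Assumption: all drives form ONE RAID-Z2 vdev; real ZFS overhead
# (metadata, allocation padding, reserved slop space) is ignored.

PARITY_DRIVES_RAIDZ2 = 2  # RAID-Z2 tolerates two simultaneous drive failures


def raidz2_usable_tb(drive_count: int, drive_tb: float) -> float:
    """Approximate usable capacity in decimal TB for a single RAID-Z2 vdev."""
    if drive_count <= PARITY_DRIVES_RAIDZ2:
        raise ValueError("RAID-Z2 needs more drives than parity drives")
    return (drive_count - PARITY_DRIVES_RAIDZ2) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


raw_tb = 12 * 8
usable_tb = raidz2_usable_tb(12, 8)
print(f"raw: {raw_tb} TB, usable: {usable_tb} TB (~{tb_to_tib(usable_tb):.1f} TiB)")
# prints: raw: 96 TB, usable: 80 TB (~72.8 TiB)
```

Under these assumptions, roughly a sixth of the raw space goes to parity. Splitting the same twelve drives into two six-drive RAID-Z2 vdevs would give up a further two drives' worth of capacity.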

Most of the Ethernet runs use 10GBASE-T over Cat6 cabling and connectors, with some interconnects using fiber-optic cable and LC connectors. Unsurprisingly, as the setup kept growing, [SpookyGhost] had to pipe air conditioning into the closet.

This is a serious installation, but there are plenty of good ideas for folks with less ambitious networking goals and/or requirements. We liked the swappable Keystone jacks in the patch panels, and the cable pass-through panel with a dense curtain of rubber fringe to keep things looking tidy. If you have any ideas to share on network equipment and cable management, let us know in the comments.