You’ve probably heard of the infamous rule 34, but we’d like to propose a new rule — call it rule 35: Anything that can be used for nefarious purposes will be, even if you can’t think of how at the moment. Case in point: apparently there has been an uptick in people using AirTags to do bad things. People have used them to stalk people or to tag cars so they can be found later and stolen. According to [Fabian Bräunlein], Apple’s responses to this don’t consider cases where clones or modified AirTags are in play. To prove the point, he built a clone that bypasses the current protection features and used it to track a willing experimental subject for 5 days with no notifications.

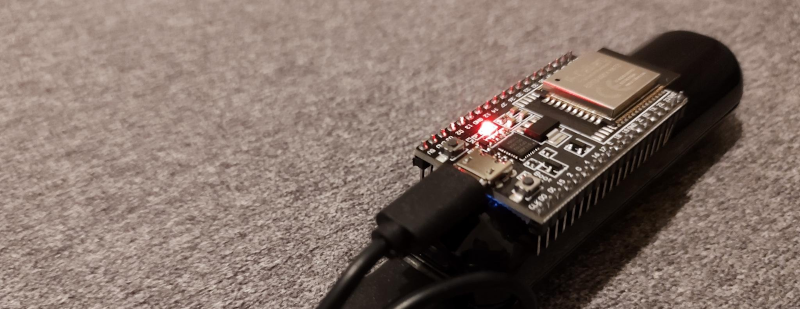

According to the post, Apple says that AirTags have serial numbers and beep when they have not been around their host Apple device for a certain period. [Fabian] points out that clone tags don’t have serial numbers and may also not have speakers. There is apparently a thriving market, too, for genuine tags that have been modified to remove their speakers. [Fabian’s] clone uses an ESP32 with no speaker and no serial number.

The other protection, according to Apple, is that if they note an AirTag moving with you over some period of time without the owner, you get a notification. In other words, if your iPhone sees your own tag repeatedly, that’s fine. It also doesn’t mind seeing someone else’s tags if they are near you. But if your phone sees a tag many times and the owner isn’t around, you get a notification. That way, you can help identify random tags, but you’ll know if someone is trying to track you. [Fabian] gets around that by cycling between 2,000 pre-loaded public keys so that the tracked person’s device doesn’t realize that it is seeing the same tag over and over. Even Apple’s Android app that scans for trackers is vulnerable to this strategy.
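To see why rotating keys defeats a "same tag seen repeatedly" check, here is a minimal sketch. It is not Apple's detection logic or [Fabian]'s firmware. The function names, the identifier strings, and the threshold of three sightings are all hypothetical; only the pool of 2,000 keys comes from the post.

```python
from collections import Counter

ALERT_THRESHOLD = 3  # hypothetical: sightings of one identifier before alerting


def naive_detector(sightings, threshold=ALERT_THRESHOLD):
    """Return the identifiers seen at least `threshold` times."""
    counts = Counter(sightings)
    return {ident for ident, n in counts.items() if n >= threshold}


def fixed_tag(num_broadcasts):
    """A tag that always advertises the same identifier."""
    return ["tag-0"] * num_broadcasts


def rotating_tag(num_broadcasts, num_keys=2000):
    """A tag that cycles through a pool of pre-loaded identifiers."""
    return [f"key-{i % num_keys}" for i in range(num_broadcasts)]


flagged_fixed = naive_detector(fixed_tag(100))
flagged_rotating = naive_detector(rotating_tag(100))

print(flagged_fixed)     # {'tag-0'}
print(flagged_rotating)  # set()
```

With 100 broadcasts, the fixed tag crosses the threshold while each rotating identifier is seen only once, so nothing is flagged. A real detector would presumably also look at time windows and movement, which this sketch ignores.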

Even for folks who aren’t particularly privacy minded, it’s pretty clear a worldwide network of mass-market devices that allow almost anyone to be tracked is a problem. But what’s the solution? Even the better strategies employed by AirGuard won’t catch everything, as [Fabian] explains.

This isn’t the first time we’ve had a look at privacy concerns around AirTags. Of course, it is always possible to build a tracker. But it is hard to get the worldwide network of Bluetooth listeners that Apple has.

If you try to mention the rules, at least check if the number is occupied or not.

35) The exception to rule #34 is the citation of rule #34.

Yes, that’s the important take-away from this article.

This cloning project would be under rule 62: It has been cracked and pirated. No exceptions.

We love this new rule! But as Colton mentioned, the slot 35 is taken – https://en.wikipedia.org/wiki/Rule_34#Corollaries – what could we call this new rule instead?

Rule 62:

https://hackaday.com/2022/02/22/no-privacy-cloning-the-airtag/#comment-6430543

I have found one exception to rule 34 and it is NOT rule 35. The Jetsons. I’m sure there’s animated stuff but I only ever classify that as animation whereas there is zero live action Jetsons available.

There’s no way The Jetsons is an exception. Rule 34 doesn’t say anything about what form the porn has to take. It’s irrelevant if it’s live-action, animated, fanfic or someone’s dodgy DeviantArt drawing. Any of it counts.

Obviously the solution is not have a worldwide network of mass-market devices.

Hey Apple, 1984 called, they want their advert back

In fact that horse left the stable a long time ago.

Funny how those that complain about evil eventually turn into exactly the thing they complained about.

In all walks of life, throughout history.

I think it’s just basal human nature.

The old “just because you can, doesn’t mean you should”

If it’s possible to build a tracker, is it possible to build a detector for that tracker? Is it possible to build that detector with a data logger? Is it possible to build that data logger with clear findings to provide to LE and court? It seems to be so easy to target/destroy, but there seems to be no way to free oneself.

Well, a tracker has to emit *something*, sometime, or you’ll never know anything about what it’s tracking. What the tracker is emitting, and when, will depend on the sophistication of the tracker. A very simple “tracker” could just emit a constant RF frequency, requiring the user to listen for the signal and do radio direction finding. A more sophisticated tracker could, for instance, store its location every few seconds and use open Wi-Fi networks to send the accumulated data over the internet when it had the chance. A tracker could also have a SIM card, or multiple SIM cards, and use the cell network.

All of these methods involve radiating RF energy, which can in principle be detected, but detecting it *and* recognizing it as a tracker is going to be non-trivial, especially if you don’t know ahead of time what to look for. If you’re checking for cell network and Wi-Fi transmissions but the tracker uses LoRa, you’ll miss it.

As for using tracker data to provide an alibi in court, 1) anyone with the skills to do that would have the skills to fake it, and 2) having an alibi conveniently set up beforehand is awfully suspicious.

Not everything has to emit RF energy to be detected; RFID/NFC tags work by loading down an externally applied field, not by generating their own.

They still emit RF energy once powered by the detector

Apple tags have a CR2032 Battery that apparently lasts a year. They’re not a simple RFID tag as such

So I put a tracker on my bicycle, and, if the thief is an iPhone user, Apple helpfully lets them know I have a tracker on board! And it bleeps so they can find it. Nice.

removing the coil that does the beeps is easily done in under 10 minutes

Which still does nothing to remove the notification that the apple user gets about a device following them.

Does it really matter by that point? From a legal perspective you only need to show that it was taken, not where it was taken to. The police will give you a stolen property report, they won’t go get the thing. Insurance just wants that report, they won’t go get it either. All that info you have already before the tag starts beeping.

When it comes to theft, knowing where it is at and not notifying the thief only seems helpful if you plan to go all vigilante justice on them.

It certainly sucks that this is how things are, but I find it hard to fault apple’s tags for the poor state of the legal system.

Would still be good if these could be used in kid’s clothing and such so you could track down your lost little one. If a kidnapper gets a notification, oops. Pretty sure the police go after abducted people.

Yeah but I’m pretty sure abduction prevention is out of scope as a use case as being directly opposed to the anti-domestic-abuse design goals.

Why?

It’s a very strong “think of the children” knee-jerk reaction, and that’s typically why we can’t have nice things.

Another problem is that there are situations where having a stealth tag, or something like it, is useful. I had someone breaking into my home repeatedly, and it would have been nice to tag both valuables AND some juicy things that I know they would steal AGAIN. So yeah, having one that does not beep and tell the thieves that the thing is tagged, or send them notices that there is a tracking device near them, would be helpful, and I think legal on my own devices. Yes, I see how this could also be used for nefarious purposes, but when can we use them for legal purposes?

if someone *repeatedly* broke into my home to steal my stuff, I’m making sure their next try will cause physical pain and I don’t care about the “you can’t do that” crowd.

Most of the things I came up with would be a felony. If you have suggestions I would LOVE to hear them. I would not mind a misdemeanor as long as it would not mess with my job — and I would bother to ask security services about the consequences up front like I asked them if I started carrying pepper spray if I can leave it in the car…

Watch Home Alone? :3

Mantras and boobytraps are almost always very illegal.

Raccoon deterrents are much less-so.

Mantras? Not following…

This specific situation of having an already deployed worldwide non-opt-in mesh network capable of tracking devices small enough to hide is only practicably doable today by one company.

I don’t think Google could have done it with Android, as they lack the control. Microsoft couldn’t have done it with primarily stationary computing devices (and again, lack of control). Wearables companies aren’t ubiquitous enough (and battery power is too precious). Any utility company is too localised. We don’t have the tech yet to practically do it over very long distances via satellite constellations (although the likes of Swarm are trying to solve that).

So of course that one company that could do it, decided to do it without really thinking through the potential consequences. Or they did think it through, but didn’t care enough to stop. And typically, the potential harm is most likely to affect people who aren’t like them.

And although Apple was that company, sadly I think that type of thinking is a systemic issue in the tech industry.

It’s not just the tech industry. Heck, it’s not just industry. “They didn’t think it through” is a systemic issue with all human activity.

+42

This guy knows where his towel is!

and HATES certain types of poetry.

This 👆

That’s absolutely the truest truth I’ve heard today, so thank you for that.

It’s not limited to one company. Tile was doing it, before Apple completely ripped off their idea.

They also had the functionality of any phone with the Tile app being able to detect any Tile and report its location. Apple just had the power to install their app on all their phones. Google location services are on all Android phones. Most Android phones log the Wi-Fi SSIDs visible at their location. Logging BLE MAC addresses would provide the same function as AirTags.

Let’s make All Detector. A full-spectrum AirTag and Tile notification app. Don’t sell it to customers like a normal app, sell it to the companies as bloatware.

I think Apple should roll back on this one. If people are tracking cars to steal them, sure, an old Android with a £5 SIM card will do it over 3G/4G/5G. Or you can buy purpose-built GPS trackers. Sure, the AirTag is smaller and cheaper, but these aren’t really enabling anything that’s not already possible.

I guess for stalking people the size of the AirTags is good, but there’s always been other ways to track people. Not least a newspaper and binoculars.

It’s not the tool that’s the problem here.

…surely you are an Apple Fan Boi, not a Crusader For Privacy™️ / aka basement dwelling Android Fan Boi, there’s only two kinds of people in the world, right?

This is the tech equivalent of the “guns don’t kill people, people kill people” argument. As in that patently fallacious argument, the enabling technology plays a significant role.

The enabling component is also why you can’t own and/or freely operate a fully functional main battle tank or attack helicopter or nuclear weapon. Pointing out that you should be able to have them because you’d just stab people anyway is not a valid argument.

People do bad things, but we can limit their effectiveness in that effort by making the doing of bad things more difficult, more risky, and/or more painful.

And it seems you have gone down the road of “hacking tools can be used for bad things too therefore they should be banned” because that’s exactly what we have heard for most of our lifetimes at this point. There is no nuance you can add that is going to make your point more reasonable here.

STOP. Hold it right there. Put down the media talking point and think for yourself. My dad was on a shooting team in high school (Indiana, late 50’s) – Never had a shooting at school. Technology of ANY kind can be abused or used for ill purpose, that does not mean that the technology should be banned – just that the penalty for being “That Damn Dumb” is not high enough. Our actions (or inactions) are personal choices that we make in the pursuit of an outcome. Sometimes those outcomes are good (stopping a woman or police officer from being assaulted etc.) and sometimes they are bad (robbing a bank, stealing things, hurting folks) – the technology does not make ANY of those choices, the PERSON makes the choice.

That’s the “guns don’t kill people people kill people” argument with more words and anecdote frosting. Human choice does not absolve technology providers from all moral obligation. Gun manufacturers should take at least some steps to ensure responsible use. Apple shouldn’t provide a product that can enable stalking. Poison manufacturers should not sell their products in colorful candy shapes.

The most irresponsible part of this whole thing is that Apple gave iPhone users an app to see if they’re being tracked, but did no such similar thing for, e.g. Android users.

Thanks to the Seemoo lab for Air Guard, then.

This is definitely “guns don’t kill people” though. In exactly the same way that easy access to firearms increases the number of shootings, easy access to a tracking network will increase the number of technologically enabled stalkings.

Whether we think that tradeoff is worth it, as a society, is exactly the point under debate right now.

That’s just dumb and misleading. The sole purpose of guns is to kill things; any other use is secondary. The purpose of AirTags is to track your own stuff if you lose it or misplace it. It’s a whole lot more like a knife for food that can also be used to stab people, except the knife has no pointy end and you have to sharpen one in yourself or pay someone to do it.

A phone or live GPS tracker will give someone days’ worth of tracking before the battery needs charging. An AirTag lasts a year. It’s also smaller. It also works without a clear view of the sky, like in a parking building, or attached under a vehicle.

Is this attack only possible because Apple left open the possibility of using OpenHaystack? If Apple simply removed any tag that is not officially registered with Apple, would the cost of generating keys immediately get expensive?

Such is the world we live in..

It’s an amazing, and sad, hack. Kudos for making a database of AirTag IDs and getting the ESP32 to iterate through them. It does limit the precision of the device, though: because it can emit only one ID every 30s, any given tracking ID and its position will be captured once every 17 minutes.

um, hours !

The receiving side knows all 2000 private keys, so it can also roll through the corresponding beacons. It should be able to get every ID across. So the usual airtag “a few minutes” of delay only applies.
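The arithmetic behind the "hours" correction above can be checked quickly. This is only a sketch: the 2,000 keys come from the article and the 30 seconds per ID from the comment above, and the constant names are made up for illustration.

```python
NUM_KEYS = 2000        # size of the pre-loaded key pool, per the article
SECONDS_PER_KEY = 30   # one ID broadcast every 30 s, per the comment above

# Time for the clone to cycle through the whole pool once
cycle_seconds = NUM_KEYS * SECONDS_PER_KEY
cycle_minutes = cycle_seconds / 60
cycle_hours = cycle_seconds / 3600

print(cycle_seconds)          # 60000
print(cycle_minutes)          # 1000.0
print(round(cycle_hours, 1))  # 16.7
```

So any single ID comes around roughly every 17 hours, not every 17 minutes. As the reply above notes, that only limits you if you watch a single ID; a receiver that knows the whole pool can look up reports for whichever ID was being broadcast at the time.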

Am I the only one who doesn’t care about privacy?! If you have your cell phone, you’re trackable. If I want to track someone, I will find a device to do it.

Truth simple illuminating truth, so thanks for that.

It isn’t a binary thing. It is at least slightly harder to get access to phone location records than AirTag-based stuff. And having a convenient mass-market device makes it easier for people.

How easy is it to detect AirTags with, for example, a laptop’s Bluetooth connection? I don’t have any kind of smartphone, let alone an iPhone. AirTags just use BLE, right? So I should be able to spot them using any BLE-enabled device? What about SDR?

I guess what I’m looking for is some way of logging the airtags around me and, ideally, notifying me of any that seem to hang around me for “too long”. Surely someone has looked into this, but I’m struggling to find anything useful with the usual search terms.
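As a rough idea of what the "hanging around too long" part might look like, here is a minimal sketch that works on an already-collected log of sightings. Collecting that log would need a BLE scanning library, which is not shown here. The function name, the thresholds, and the sample addresses are all hypothetical, and a tag that rotates its identifiers the way the clone in the article does would slip past a check this simple.

```python
from collections import defaultdict


def lingering_devices(sightings, min_span_s=1800, min_count=5):
    """Return addresses seen at least `min_count` times over `min_span_s` seconds.

    `sightings` is an iterable of (timestamp_seconds, address) pairs.
    Both thresholds are arbitrary illustrative defaults.
    """
    first, last = {}, {}
    count = defaultdict(int)
    for ts, addr in sightings:
        first[addr] = min(ts, first.get(addr, ts))
        last[addr] = max(ts, last.get(addr, ts))
        count[addr] += 1
    return sorted(
        addr
        for addr in count
        if count[addr] >= min_count and last[addr] - first[addr] >= min_span_s
    )


# Made-up log: "AA" shows up every 10 minutes, "BB" only briefly.
log = [
    (0, "AA"), (600, "AA"), (1200, "AA"), (1800, "AA"), (2400, "AA"),
    (0, "BB"), (60, "BB"),
]
suspects = lingering_devices(log)
print(suspects)  # ['AA']
```

A more useful version would probably also record where each sighting happened, since a device that stays near you while you move is more suspicious than a neighbour's tag that is always at home.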