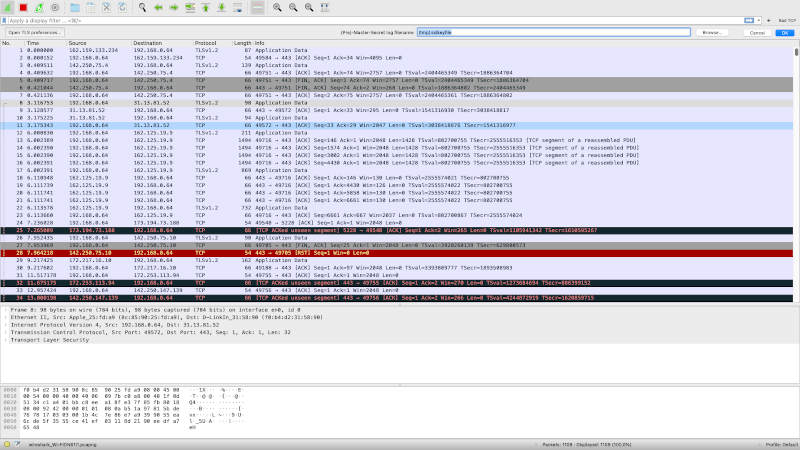

If you’ve done any network programming or hacking, you’ve probably used Wireshark. If you haven’t, then you certainly should. Wireshark lets you capture and analyze data flowing over a network; think of it as an oscilloscope for network traffic. However, by design, HTTPS traffic doesn’t give up its contents. Sure, you can see the packets, but you can’t read them. After all, one of the purposes of HTTPS is to prevent people snooping on your traffic from reading your data. But what if you are debugging your own code? You know what is supposed to be in the packet, but things aren’t working for some reason. Can you decrypt your own HTTPS traffic? The answer is yes, and [rl1987] shows you how.

Don’t worry, though. This doesn’t let you snoop on anyone’s information. You need to share a key between the target browser or application and Wireshark. The method depends on the target application, such as a browser, writing out information about its keys. Chrome, Firefox, and other software that uses NSS/OpenSSL libraries will recognize an SSLKEYLOGFILE environment variable that will cause them to write the correct output to a file you specify.
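If the code you are debugging is your own Python program, a rough sketch of the same idea might look like the following. It assumes Python 3.8 or later built against an OpenSSL with key logging support; the helper name `make_logging_context` and the log file location are made up for illustration.

```python
import os
import ssl
import tempfile


def make_logging_context(keylog_path):
    """Return a default client TLS context that appends session secrets to keylog_path."""
    context = ssl.create_default_context()
    # keylog_filename is available on Python 3.8+; secrets are appended
    # in the NSS key log format that Wireshark can read.
    context.keylog_filename = keylog_path
    return context


# Hypothetical location; any writable path should do.
keylog_path = os.path.join(tempfile.gettempdir(), "tls-keys.log")
context = make_logging_context(keylog_path)
```

The context can then be handed to a client call such as `urllib.request.urlopen(url, context=context)`, so that connections made through it leave their secrets in the log file.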

How you set this depends on your operating system, and the bulk of the post describes how to get the environment variable set on different operating systems. Wireshark understands the file created, so if you point it to the same file, you are in business.
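One way to sidestep the per-OS differences is to set the variable only for the process you launch. The sketch below is one possible approach, not the method from the linked post; the helper names are invented, and the command you pass in depends on what is installed on your machine.

```python
import os
import subprocess


def env_with_keylog(keylog_path, base_env=None):
    """Return a copy of the environment with SSLKEYLOGFILE pointing at keylog_path."""
    env = dict(os.environ if base_env is None else base_env)
    env["SSLKEYLOGFILE"] = keylog_path
    return env


def launch_with_keylog(command, keylog_path):
    """Start command (a list of strings) with key logging requested via the environment."""
    return subprocess.Popen(command, env=env_with_keylog(keylog_path))
```

For example, `launch_with_keylog(["firefox"], "/tmp/tls-keys.log")` would start a browser (assuming a `firefox` binary is on the path), after which the same file can be given to Wireshark in the TLS protocol preferences as the "(Pre)-Master-Secret log filename".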

Of course, this also lets you creep on data the browser and plugins are sending, which could be a good thing if you want to know what Google, Apple, or whoever is sending back to their home base using encrypted traffic.

Wireshark and helpers can do lots of things, even Bluetooth. If you just need to replay network data and not necessarily analyze it, you can do that, too.

99% of the time we don’t need the protection of HTTPS. I’ve started to think HTTPS mostly works against us; we cannot see what kind of information apps are sending. And yes, part of it is “metrics” about us.

Remember back in the day when an app (well, a program back then) that phoned home was considered evil, got quickly uninstalled, and got a bad reputation? Today, if a program doesn’t phone home, it is probably considered suspicious.

Some sort of anti-privacy propaganda?

I disagree. Without HTTPS your network can trivially alter and monitor all your traffic, which poses obvious security and privacy problems. Some ISPs did (do) things like injecting ads or dubious scripts into pages. There’s not much of a reason to not subject some traffic to HTTPS, especially since HTTPS pages embedding non-HTTPS content opens some attack vectors.

You described the 1% or less that I’m talking about. There are other means to prevent those too, rather than encrypting all traffic and introducing many more problems with data leakage.

You make a few valid arguments. I remember when websites were unencrypted but wanted to sell you something. They’d use a third party payment processor who used encryption. I’m all for encrypted connections, but name calling and insults are unnecessary.

True but much traffic is itself trivial. Is it worth releasing more CO2 and becoming more reliant on centralized certificate authorities just to protect every inane bit of meme traffic?

Agreed. The vast majority of what is now forced to be HTTPS would be better off just signed once by the originator and sent in the open – and then could also be cached at the edge.

https://wp.josh.com/2016/04/01/googles-war-on-http/

It’s generally not possible to sort out data that should not be shared, especially when you have to make those decisions for other people. The only way is to encrypt everything. And if that is your attitude then you should be advocating for real energy savings instead of concern trolling.

Seriously, you couldn’t be more wrong if you tried. TLS is an essential thing in the modern internet; it protects you from thousands of attacks. Even DNS should use TLS so the ISP can’t track what websites you are going to.

Found the spy.

HTTPS is a protection, but also a convenient way to obfuscate data/metrics or any litigious traffic. One, as an end user, security researcher… should be able to decode ANY traffic at will, in order to be able to check what is sent out to the Internet. Would we trust and run an encrypted application binary if we had no means to check it with an antivirus or study it with a disassembler/debugger? Obviously not! But we have to trust in/outbound data traffic of any application or connected device.

Sometimes, and with some skill, you can get around encryption with HTTPS proxies, but some devices/applications/servers can’t be configured to use proxies, or use certificate pinning to prevent that.

>One, as an end user, security researcher… should be able to decode ANY traffic at will,

You shouldn’t consider yourself a security researcher if you are not able to set up TLS decryption at some layer. Of course it requires additional effort, but thanks to that the user is usually aware of deep packet inspection (not a big deal for a pentester).

> But we have to trust in/outbound data traffic of any application or connected device.

Of course we don’t have to.

And the move promoted by big tech to put everything on HTTPS, not just important pages, was a move in favor of users, not governments. With the architecture of current browsers and applications (where threats also exist within our “secure” network segments, such as the LAN and corporate environments behind a VPN), we cannot risk having a single connection that is not end-to-end encrypted. Even if a particular connection doesn’t carry anything important, the ability to inject arbitrary traffic still poses a risk to the browser and thus to the user.

One rough way is to block all traffic to these “metric” services. Oh boy, how many connections apps make. Apps that I wouldn’t have believed make such calls.

Even in unencrypted traffic, data can be hidden and obfuscated.

A random end-user will not be able to detect this.

If you want, you can investigate up to a certain point what is in the encrypted data.

However, by already using certain software, you have at least some trust in that software. If you really want to see what data is transferred, you should also examine the software binaries that handled that data to verify what they do exactly.

Maybe a simple unencrypted “418 I’m a teapot” HTTP error code will trigger a corruption of a file on the hard disk? How do you know it is not doing that? Did you verify the source code or the compiled binary?

Of course, you can still set up the HTTPS client/server in such a way that it cannot be decrypted (using a client certificate, ephemeral keys, TLS 1.3, etc.).

Although it is important to perform validation of network traffic, it is also important to be confident in the security of end-to-end encryption.

As HTTPS solves many more problems than it creates, “everything HTTPS” is much better than “no HTTPS” at all, or even worse: “only HTTPS for private data” as suggested.

Using HTTPS offers you, and others, much more protection than only the confidentiality of data. In most cases encryption isn’t even the most important reason for using HTTPS!

You probably always want some assurance you are talking to the intended server, even if you don’t intend to keep things secret.

There are some good arguments against the movement towards HTTPS everywhere.

For example there is the environmental cost of all this encryption and decryption. It does use electricity. Does one really need to generate more CO2 just to encrypt, decrypt and ensure the authenticity of yet another cat picture?

HTTPS is centralizing the Internet.

The Internet was supposed to be an interconnected mesh network where any computer connected to any point in the mesh can share data. Any computer can be a server. But the web is now the only protocol that people really use. Popular web browsers are defaulting more and more to https. I guess I haven’t tried in other browsers lately, but I have noticed that in Firefox if I type the URL of a certain site without the scheme it attempts to load it via https. The site is only available via http, so it fails. Not that long ago http was the default. After https became the default, if the user didn’t type the scheme it would still fall back to http. It is only recently that an http site simply wouldn’t work if the user doesn’t type the scheme. Is this just the latest step towards not accepting http at all?

Do most users bother to type the scheme? Do most even know what it is? I don’t think they do. So someone who wants an audience among the masses now MUST use https. Even if they aren’t taking any data from the user and their content isn’t really anything that needs protection.

Again, is it worth raising the planet’s temperature just so a “man in the middle” can’t alter a picture when you are surfing catmemes.com, not logged in (or anything else that is equally inconsequential, like probably 99% of all Internet traffic)?

Another thing that used to work, a longer time ago, was self-signed certificates. Sure, if you have never connected to the site before, a self-signed certificate could be served by a man in the middle. But once you have the correct certificate cached that’s no longer an issue. So they aren’t worthless. It used to be that a self-signed certificate would result in the user getting a dialog with an easy-to-click accept button. Then they hid the button behind additional clicks. For years it seemed that all the major browsers had buttons to accept a self-signed certificate, but even if you clicked it they would still refuse to display the page. They disabled the feature without removing the UI! More recently browsers have removed even the button. They don’t even pretend to allow self-signed certificates.

I remember running a page with an admin area that only I logged into on my own home server. My first time loading it on my laptop (and caching the self-signed certificate) was at home plugged into my own LAN. After that I could be pretty sure that everything was safe as I logged in from various more public places using that laptop. This should still be possible!

All this change means that if you want to plug a device somewhere into the internet mesh and share some sort of content, you can’t just do it. You must go to a central authority or one of their representatives to get a certificate. And that certificate must point back to a domain name, so you have to go to another authority to get one of those first.

This goes very much against the original design of the Internet.

As an interconnected mesh of equals the Internet was supposed to survive cataclysms such as natural disasters or nuclear war. Now we are dependent on certificate authorities. If they go down so does our communication.

The Internet was supposed to be resistant to censorship. But as a centralized point of failure a certificate authority could be pressured to take someone down. To be fair, there is so much harmful bullshit on the Internet today (lookin at you Trumpers and Anti-Vaxers) that I’m no longer always sure this is an entirely bad thing. But what if the wrong people get control of the ‘ban hammer’?

So was this change necessary? Most Internet users are pretty conditioned to click through warning boxes. They don’t want a professional-level understanding of what those boxes mean; they just want their content. I would suggest a different system. Every browser would have a little picture of a traffic light in a common place, probably next to the URL bar. HTTP gets a red light, HTTPS with a certificate signed by a trusted authority gets green, and self-signed (even IP-based, no domain necessary) gets yellow. People are told green is good for personal info, monetary transactions, etc. Red is not. If they ask what yellow is, it’s “amateur” security. Good for basic logins at amateur-owned sites, not for important info and/or financial transactions.

I would only include “are you sure” dialogs if the user is submitting a form with a password field and/or a form where some label says something about a credit card and the light is red, or just the credit card situation and the light is yellow. I would never actually prevent the user from clicking through or ignore the fact that they did and refuse to continue anyway. Safe or not, that’s their business.
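The proposed rules are simple enough to write down. Here is a rough sketch of them as described in this comment; the function names and the `"ca-signed"` / `"self-signed"` labels are invented for illustration and do not correspond to any real browser API.

```python
def light_color(scheme, certificate=None):
    """Map a connection to the proposed light: 'red', 'yellow', or 'green'."""
    if scheme != "https":
        return "red"
    # Anything that is not signed by a trusted authority is treated as yellow.
    return "green" if certificate == "ca-signed" else "yellow"


def needs_confirmation(color, has_password_field, mentions_credit_card):
    """Apply the proposed 'are you sure' rule for a form submission."""
    if color == "red":
        return has_password_field or mentions_credit_card
    if color == "yellow":
        return mentions_credit_card
    return False
```

Note that the result only decides whether to ask; under the proposal the user can always click through.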

So why is it the way it is?

Probably because forcing SSL with centralized certificates on the world makes a lot of money.

This comment is a perfect example of wasted electricity, nobody will read it

I read it.

You certainly don’t need to run HTTPS if it’s your own server for your own use. Yes, you’ll get warnings, and these days with Chrome you won’t even get the option to ignore the dragons and proceed (there you can type the falsehood “thisisunsafe” in the browser window and it will let you proceed to this ‘unsafe’ site, serving ‘unsafe’ cat pictures).

If you’re expecting a wider audience then yes, it’s an annoyance, but a free (dynamic if needed) DNS service and Let’s Encrypt will see you serving up green padlocks to your users.

What you talkin bout Willis, I am running Selenium tests all day on the latest Chrome with http and there is no warning at all. If there were a warning I would have to add code to dismiss it, but I haven’t.

In my humble opinion, a true hacker is not your Average Joe when it comes to being curious. And, driven by curiosity, one reads entire books, full letters, thousand-page schematics, just saying. But sadly, the Average Joe has become more and more intolerant of reading long text, being used to SMS before, and tweets and short posts now. To me, being a punctilious person, if someone writes a long meaningful text with some thought density in it, then it must not be considered just a waste of electricity, and I perceive saying so as rude, failing to consider the commitment of mental resources and the use of the writer’s precious time.

Sure, synthesis is an appreciable gift, no doubt about it, but dismissing a post or an email just because of its length is impoverishing for those who do not read, frustrating if not irritating for those who write, and finally is a symptom of belonging to a society to which, frankly, I do not feel I belong.

Part of this “the only protocol used is the web” is that browsers now block ports other than 80 and 443.

So those special services you run on non-standard ports for security in the “wrong range because we are the browser police” need to be moved or you have to work around your browser.

Which is ridiculous. In playing to the dumbed-down people on the internet to “protect them” we just keep narrowing the ability for the rest of us to use it how we want to.

Anyone active on the internet in the 90’s knows well: once the corporations took it over and it became mass market, it was fucked.

Are you sure it is the browser blocking those other ports? My colleagues and I create web services that use all sorts of other ports and test them on a daily basis, even across different IPs (not the usual 127.0.0.1:xyz), and never had a problem. On the other hand, we DID have problems with some ISPs that arbitrarily (and most likely outside of the contract or exploiting some obscure part of it) put in firewall rules blocking OUTBOUND traffic outside the common port ranges…

I worked as a senior software engineer at Mozilla for several years, working on Firefox. Even without being on the security team, few opportunities give you such a wide overview of web security. And I saw a lot of shit. So I’ll say this: HTTPS protects against a lot more than what most people realise.

- Governments censoring, altering websites, and collecting data to use against citizens, whether it be anyone they can, people against the current government or its policies, or some minority or vulnerable group (e.g., ethnic minorities, religious minorities, LGBTQ people).
- ISPs censoring and altering information on websites that they disagreed with for personal/commercial/political reasons, acting like their own little authoritarian regimes.
- Antivirus & security software (free & paid) data mining all your network traffic to sell your data (& actively sabotaging security measures to do so).
- Banks having such weak security measures that everything may as well be in cleartext (& also sabotaging security features in browsers).
- Public WiFi access points (free & paid) being used to monitor traffic to sell your data, or manipulating what you see; and not just the odd small-time rogues, but also local governments and major companies like airports.
- MITM (man-in-the-middle) attacks replacing downloads with malware/spyware, or just very subtly altering downloads, like changing some URL where data is reported.
- Modems/routers hacked (or set up by the ISP or manufacturer) to monitor, modify, or selectively block your web traffic.
- Financial transactions redirected, or just monitored for various purposes.
- Software updates: even if these are protected in other ways against manipulation, just being able to monitor them enables numerous avenues of abuse.
- More subtle things like steering people towards alternate news sources to manipulate their politics or economics, or selectively blocking certain pages that contain certain words/phrases.
- Even people in your own household using the ability to maliciously monitor or manipulate your web traffic, for example an LGBTQ teen who isn’t out to their bigoted parents, or someone trapped in an abusive relationship trying to access online resources for help.

Some people may think some of this is hyperbole or doomsaying, but I’ve seen instances of every one of these things.

HTTPS isn’t a magical fix for all of these, but it is an important tool in helping protect against it. And it isn’t just your own use of HTTPS, it’s everyone else’s use of HTTPS that protects you too (and in turn, your use of HTTPS protects other people).

Some of it happens even when HTTPS is used and should protect against it. Sometimes it’s because HTTPS traffic is rare and that rarity can be exploited; making HTTPS ubiquitous helps solve that. And newer features have been helping. HTTPS isn’t this one single simple thing; it’s a complex system that needs to keep evolving. And yes, one of those new features helping prevent some of the bad stuff like the above is DoH (DNS-over-HTTPS) and DoT (DNS-over-TLS). I know some people are against DoH/DoT, but it’s similarly hard to overstate the importance of that and how vulnerable everyone is without it.

And yet… it’s still important that the web is an open platform, that you have control over your experience, that you have control over your own data, and you’re able to have a good user experience that works for you personally. Tools like HTTPS (& DoH) aren’t mutually exclusive to that; they’re complementary & help protect it.

At the same time, it’s important that other tools like Wireshark exist to help create things, to tinker, to debug code, to research security issues, and sometimes to forcibly take back control.

Thanks so much for such an interesting post based on in-depth knowledge – a true unicorn in the comments section!

+1 !

You cannot possibly be more wrong than this. Reality is, HTTPS is NOT even enough. We also need encrypted SNI and signed downloads.

Ever considered you might already be downloading from a hacked server? How do you know the data you received is from the actual source without a signature?

Supply chain attacks and MITM attacks are real. Of course signatures alone are useless too. And a signature only works if a developer has his signing certs password protected and stored on a dedicated HSM. Not on his desktop.

Never tell anyone crypto is useless or bad or not required. It’s as if people learned zilch from Snowden’s and Assange’s demise.

That’s ridiculous. Without https anybody in the middle (like your ISP, or even the NSA) could see everything you’re doing online. Nothing would be private. An attacker could intercept your connection and steal your login details. That’s way more than the 1% you mentioned.

If I have understood correctly this allows decrypting the payload after a secure connection handshake has taken place but doesn’t allow the TLS handshake to be understood.

If the random numbers used by the TLS handshake can be logged somewhere, it is also possible to decode the handshake and work out the private key used by the session. I have used this in embedded systems to decode the Wireshark traffic (although not in Wireshark itself but by playing back Wireshark recordings through another program, noting that the handshake must be in the recording for it to be possible).

In fact, if malware can hijack the random number generator so that its values are known at each step (or freeze it so that it simply returns the same random number all the time), any HTTPS session can be intercepted by watching the handshake, reverse engineering it (knowing the random number sequence used) to calculate the keys, and then the session becomes effectively plain text to the observer.

I assume that hacking the random number generator is probably impossible in modern computers since it will be protected adequately but in embedded systems it can often be done easily and I sometimes do it to analyse TLS operations for development purposes.

Since the HTTPS content still looks to be garbage, others may assume that it is safe and the data carried protected, but this assumption would not necessarily be correct in such a case.
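The link between handshake randoms and session secrets is also visible in the key log file itself: in the NSS key log format, each line carries a label, the client random from the handshake, and a secret, which is why the handshake has to be in the capture for the lookup to work. A minimal parsing sketch follows; the function name `parse_keylog` and the sample values are made up, and malformed lines are simply skipped.

```python
def parse_keylog(text):
    """Parse NSS key log text into a list of (label, client_random, secret) tuples."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        # Blank lines and '#' comments carry no secrets.
        if not line or line.startswith("#"):
            continue
        parts = line.split()
        if len(parts) != 3:
            continue  # not a "label random secret" line
        label, client_random, secret = parts
        entries.append((label, bytes.fromhex(client_random), bytes.fromhex(secret)))
    return entries


# Made-up sample values, not real secrets.
sample = "# comment\nCLIENT_RANDOM " + "ab" * 32 + " " + "cd" * 48 + "\n"
entries = parse_keylog(sample)
```

For a TLS 1.2 session the label is `CLIENT_RANDOM` and the secret is the 48-byte master secret; TLS 1.3 sessions use several other labels for their traffic secrets.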

If it is simple HTTPS, use an intercepting proxy (OWASP ZAP, Burp Suite). Much, much simpler.

For other traffic: I like socat to terminate TLS there.

Wireshark and decrypting TLS is always the last option – and it only works up to SSL3.0 and only on specific ciphers :-(

This is a nice addition to regular https interception done with a custom CA, as ZAP, Burp, and mitmproxy do. Some sneaky sites can change their behavior if they see a cert from a CA they don’t like. More so for the apps. It would be really interesting to extract session keys for Android and iPhone apps though.

I can understand the need for encryption when dealing with banks and online purchases. After all, it seems like every day I get a spam email telling me there’s been suspicious activity on my PayPal account and I need to verify who I am yadda yadda ad nauseam. So, for that kind of stuff, encryption can be a good thing. I do agree you don’t need encryption on a cat pictures site, or YouTube or Google unless you’re entering your login information. On the other hand, with the speeds people are surfing at these days does it really matter? I remember first surfing the internet using a 2400 baud modem. Most internet shenanigans are opportunistic, but it’s my opinion and my opinion only that cat pictures etc. don’t really need it, but anything that affects your livelihood does.

With healthcare etc. do you really want your medical information transferred unencrypted? Stuff like that shouldn’t be sent over the internet, but it is.

There are just some things that need to be private. (just my $6.47)

Cat pictures or whatever it is you are looking at will generate a profile of you that hackers can use to exploit your tendencies. Cat pictures can be intercepted in transit and used to deliver malware payloads. Do you lock your front door? Why? Nobody is trying to break into your house but you do it anyway.

>Cat pictures or whatever it is you are looking at will generate a profile of you that hackers can use to exploit your tendencies.

If you are vulnerable to malicious cat picture profiling, then you are vulnerable regardless of whether they are delivered via HTTP or HTTPS. Your threat model seems to assume that the attacks come from men in the middle, but in practice the attacker is almost always at the server. If anything, having an HTTPS connection gives the user a false sense of security that they are somehow protected by the lock icon in the address bar.

>Do you lock your front door?

Perhaps a better analogy is “You should never let in anyone wearing street clothes who knocks on your door since they could be attackers, but if the person is wearing a uniform and shows you an official looking ID card with their photo on it then you should trust them and let them in.”

By your very own argument locking your front door is giving a false sense of security and you shouldn’t do it, because there are other ways to break into your house besides the front door.

What about debugging Android apps on my own phone?

I have a couple of apps that I want to automate/script. The apps themselves don’t provide a mechanism for automation. I want to learn how they work on the wire so that I can write my own script to emulate the app and do my repetitive task.

How do I use Wireshark for this use case?

Run an Android emulator on your computer and point Wireshark at the emulator. I’m guessing you will find more than you are looking for.

I think some people have forgotten the problems that TLS solved. The worst was when big name ISPs, like AT&T Wireless, began injecting HTML and JS into ordinary web pages, and were analyzing customer behavior. (Many still do customer analysis via DNS queries, which mostly remain unencrypted. To see a list of who is currently abusing DNS to continue to analyze their clients’ traffic, look at the list of ISPs who complained about or demonized DNSSEC.)

TLS also validates the site is presenting a valid certificate for the domain name. Without it, you have no way of knowing if you’re connected to the real hackaday.com or a fake site that’s been delivered via DNS poisoning or other Man-In-The-Middle attack.

Because TLS works so well, most people are unaware of the amount of malicious network traffic there really is, and what the capabilities of the attackers really are.

Can I say that the only really secure https is the one forbidden in China?

Everything else is easily readable by states…

Wow, so apparently big brother listening in is A-OK if it saves you $0.001 per month on your electric bill. So this is what you think your privacy is worth.

The pot of water gets hotter and hotter and the frogs still think they are on spring break.

If you lived on the other side of a shared 250Kbs/60Kbs downlink with 500ms latency and paid the equivalent of $10 per MB, I think you would probably see this issue differently. Most of the people on earth have different lives and different connectivity than you, which is an important thing to remember when you start making decisions about what things they should think are important.

Plz demonstrate how much SSL slows down your connection and increases data costs. If anything encryption is easier with lower data rates.

Also your implication that poor folks have less to lose is pretty bad, their security is just as important as yours.

>Plz demonstrate how much SSL slows down your connection and increases data costs.

I just pulled up the simplest page I can think of (pure text, no images, no JS or CSS includes) using the latest version of Chrome and recorded the results using Wireshark…

HTTP: Total packets: 11, Total bytes: 6202

HTTPS: Total packets: 25, Total bytes: 10632

Again, if you were on a high latency metered connection then HTTPS would have taken more than twice as long at nearly double the cost. And please remember that HTTPS SSL negotiation does not just add extra packets – it adds extra full round trips. On a geostationary uplink, each round trip takes more than a FULL SECOND!

I would not assume that all “poor folks” would be happy with this tradeoff to prevent a potential interloper from seeing the contents of this page.

NOTES:

Test page at http://josh.com/me.html

Chrome Version 98.0.4758.102

Images at https://photos.app.goo.gl/4indgYwrUmMJhAAP6
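The figures quoted in this comment can be turned into ratios with a few lines of arithmetic. This is only a sketch using the two packet and byte totals reported above; the `overhead` helper is invented for illustration, and nothing here was re-measured.

```python
def overhead(http, https):
    """Return (packet_ratio, byte_ratio, extra_bytes) of HTTPS relative to HTTP."""
    packet_ratio = https["packets"] / http["packets"]
    byte_ratio = https["bytes"] / http["bytes"]
    extra_bytes = https["bytes"] - http["bytes"]
    return packet_ratio, byte_ratio, extra_bytes


# Totals quoted in the comment above.
http = {"packets": 11, "bytes": 6202}
https = {"packets": 25, "bytes": 10632}

packet_ratio, byte_ratio, extra_bytes = overhead(http, https)
print(f"packets: {packet_ratio:.2f}x, bytes: {byte_ratio:.2f}x, extra bytes: {extra_bytes}")
```

For this one small page that works out to roughly 2.3 times the packets and 1.7 times the bytes, or 4430 extra bytes.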

The encryption that requires more processing power is only used when setting up the connection; then it switches to a symmetric key for the rest of the communication, so the energy consumed is almost the same.

HTTPS requires more energy and bandwidth because of (1) the extra steps needed in setup, (2) the extra computation for each byte transferred, and (3) because each byte must be transferred redundantly for every endpoint since caching is blocked. Out of these, #3 is likely the biggest source of waste.

It’s noise compared to all the other energy your computer uses. If you really cared about your energy bill you would be taking actual steps to reduce it instead of pointless concern trolling over microscopic energy use. For instance you can replace your Intel box with a Mac and save enormous energy.

The increased energy usage is most important on the server side, as anyone who runs production web servers knows well. You typically need twice as much compute to serve the same number of users over HTTPS as over HTTP.

And this ignores the massive savings you get by being able to cache non-HTTPS static content at the edge. The most efficient fetch on the internet is the one that never needs to touch the server.

https://www.keycdn.com/blog/https-performance-overhead

“There is also an encryption process between the browser and the server in which they exchange information using a process known as asymmetric encryption. However tests between encrypted and unencrypted connections show a difference of only 5 ms, and a peak increase in CPU usage of only 2%. In January 2010, Gmail switched to using HTTPS for everything by default. They didn’t deploy any additional machines or special hardware and on their frontend machines, SSL/TLS accounted for less than 1% of the CPU load.”

Several problems here. The biggest is this is apples to oranges – they used HTTP/2 on the encrypted test and HTTP/1 on the unencrypted test, and then picked a page with dozens of includes to juice up the benefits of HTTP/2 for multi-connection pages. If you run fair tests with the same protocol (either HTTP/1 or HTTP/2) on both encrypted and unencrypted pages, the unencrypted wins.

Saying something like “unencrypted connections show a difference of only 5 ms” is meaningless without specifying the latency of the link. Since the encrypted connection requires an extra handshake (as acknowledged in the article), the amount of extra time needed for an encrypted connection depends on the round trip latency of the link. Sure, if the link latency is 5ms (i.e. local fiber in a major US city), then an extra round trip only takes an extra 5ms… but if your round trip latency is 1000ms (the minimum on a geosync sat link), then the encrypted connection will take at least an extra second to complete compared to the unencrypted one.
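The latency argument boils down to one multiplication. As a deliberately simplified sketch, the function below ignores bandwidth, CPU time, and packet loss and only counts extra round trips; the function name is invented, and the default of one extra round trip is an assumption that matches the comment’s example (a full TLS 1.2 handshake typically needs two).

```python
def extra_handshake_delay_ms(rtt_ms, extra_round_trips=1):
    """Estimate added connection setup time from extra handshake round trips."""
    return rtt_ms * extra_round_trips


# The two link latencies used in the comment above, in milliseconds.
for rtt_ms in (5, 1000):
    print(rtt_ms, extra_handshake_delay_ms(rtt_ms))
```

So the same handshake that costs 5 ms on local fiber costs a full second on a 1000 ms link, and twice that if two extra round trips are needed.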

As to how much extra load switching to HTTPS adds to a Gmail server, this figure is not generally applicable since it depends on the hardware Google themselves are running the Gmail servers on (which likely includes specialized accelerators designed for this) and how much load is due to other code running on those servers. (Also, this Google figure may be biased, since Google themselves are the main political driver of the shift to HTTPS.) In real life, switching a normal server from HTTP to HTTPS increases the load several fold. Here is a major national newspaper reporting that their CPU load increased 4x when they made the switch…

https://www.claudiokuenzler.com/blog/687/encrypted-http-connection-https-use-four-times-more-cpu-resources-load

Too right – I have used loads of servers around the world too even on the wired internet, where the round trip latency approaches or exceeds 1s. Not to mention other wireless protocols. Not everyone is on 1Gbps fibre.