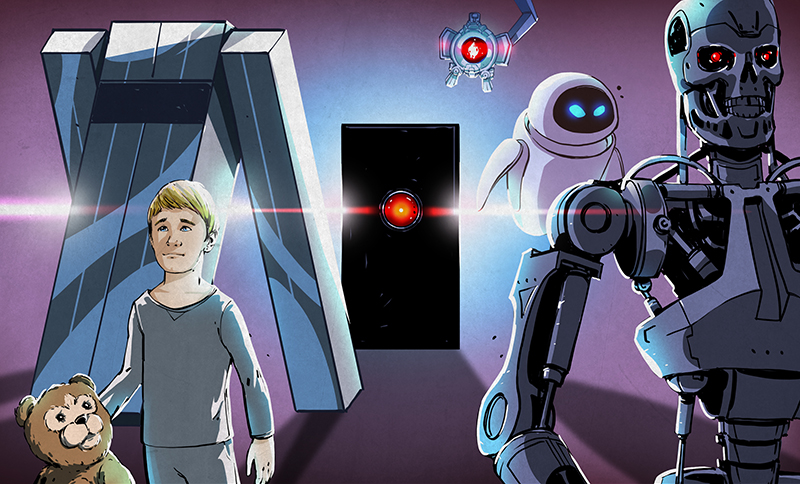

Everyone knows what a chatbot is, but how about a deadbot? A deadbot is a chatbot whose training data — that which shapes how and what it communicates — is data based on a deceased person. Now let’s consider the case of a fellow named Joshua Barbeau, who created a chatbot to simulate conversation with his deceased fiancee. Add to this the fact that OpenAI, providers of the GPT-3 API that ultimately powered the project, had a problem with this as their terms explicitly forbid use of their API for (among other things) “amorous” purposes.

[Sara Suárez-Gonzalo], a postdoctoral researcher, observed that this story’s facts were getting covered well enough, but nobody was looking at it from any other perspective. We all certainly have ideas about what flavor of right or wrong saturates the different elements of the case, but can we explain exactly why it would be either good or bad to develop a deadbot?

That’s precisely what [Sara] set out to do. Her writeup is a fascinating and nuanced read that provides concrete guidance on the topic. Is harm possible? How does consent figure into something like this? Who takes responsibility for bad outcomes? If you’re at all interested in these kinds of questions, take the time to check out her article.

[Sara] makes the case that creating a deadbot could be done ethically, under certain conditions. Briefly, the key points are that both the mimicked person and the one developing and interacting with the deadbot should have given their consent, complete with as detailed a description as possible of the scope, design, and intended uses of the system. (Such a statement is important because machine learning in general changes rapidly. What if the system or capabilities someday no longer resemble what one originally imagined?) Responsibility for any potential negative outcomes should be shared by those who develop the system and those who profit from it.

[Sara] points out that this case is a perfect example of why the ethics of machine learning really do matter, and without attention being paid to such things, we can expect awkward problems to continue to crop up.

This would provide an excellent opportunity for product sales! The emulated loved one could hawk products, and because they were loved/trusted, a purchase might be more likely.

There is a special level of hell for that kind of thinking. I’m not religious, either.

Relax, Cylons, Battlestar Galactica. We already know how to deal with this. :)

Transcendence https://m.imdb.com/title/tt2209764/

Imagine if you will, a reality where the dead continue to speak, you are now entering, the Majel Barrett zone.

(Cue dubstep mix of ST:TOS music combined with “Twilight Zone” intro, incorporating hits and samples from Prodigy’s “Out of Space” and 2Unlimited’s “Twilight Zone” with some scratching of “Time warp again” sample just to oversaturate it completely.)

There are bright sides to this. David Attenborough can narrate wildlife shows forever.

Milking the dead for all they’re worth.

AKA modern copyright extensions.

I see, the deadbot owns the copyright and we own the deadbot, forever.

Combine this with the deepfake style stuff they did to replace deceased actors in Star Wars, and the new hologram tech they used for Tupac, and you’ve got a very interesting project. NK’s current Kim need never die…

“New hologram tech”

It’s Pepper’s Ghost, a technique that predates electricity. No holography, just a semi-transparent mirror reflecting an entirely 2D screen.

This makes the philosophical argument that a person can be reduced to a fixed behavioral pattern like a recording of sorts, even before we get to the point of asking whether “intelligence” can be. Many others would argue that a person taken “out of context” like that would not be anything resembling a person – just a more complex Turing Test. A person is not a talking dummy that repeats phrases when you pull the cord at the back – yet that is exactly what today’s AI essentially is. Whether by traditional programming or neural networks, it is a special system built to find and repeat a given pattern.

If a pattern does not exist, one will be created by the process, because a pattern of some sort is necessary for the programmers to test against to know that the system is operating correctly. The “discovery” of a pattern is then mistaken to mean that the person was or is a pattern, and therefore that the person has been successfully simulated. The programmers fall victim to their own Turing Test and begin to believe that they’ve actually constructed the real deal.

Therefore the question becomes, is it ethical to construct an automated answering machine that dissimulates any person, dead or alive, existing or imaginary? Already we have such things as robot callers that are used to fool people into listening to advertisements by pretending to be people, or robots calling restaurants to make reservations.

The present use and point of such machine agents is to mislead people.

The metaverse needs inhabitants. Just one step closer to the Matrix.

Deadbots are nothing, what you should be worried about is Meta et al mining your interactions with all of your “friends” so as to be able to build composite personalities that are better able to manipulate you, for a fee. What rights do you have over your own personality now? None. Currently your only protection is to avoid such social media environments.

Social Media, like Hackaday?

B^)

Morality in recent years appears to have been thrown out the window – any call to a definition of right and wrong has thoroughly been terminated. So what’s the problem?

Good skills, but definitely needs counseling for grieving.

Oh, I saw the Black Mirror episode about this!

And there was the first few episodes of season 6 of “Buffy the Vampire Slayer” where they had the Buffybot stand in for Buffy who was dead at the time.

The trope may be said to be quite a bit older than this though, with stories of necromanced lost loves, who return soulless as automatons or are otherwise not the same any more.

Frankenstein.

“That’s Frahnkensteen!”

-Gene Wilder

I took that as making fun of world war II films where they don’t know whether they are shiessing or sheissing.

If one never exits the grieving aspect of ‘healing’, no real healing occurs, imo. Not sure how that can be considered helpful. Seems like feeding some sort of delusion, no offense meant. It is my opinion we are already good at fooling ourselves on various items (a serious gloss); I’m not sure it would be overall helpful to perfect that even more, where I would suspect a 180 degree turn in the opposite direction would be vastly more helpful. Throw someone profiting on top, I don’t think it’s even a question.

I do remember a Star Trek TNG episode where one Reginald Barclay almost completely eschewed reality for a holodeck fantasy.

The danger I see in this is people creating bots based on living people.

The girl you wanted in high school and never dated, or being with a celebrity. At what point does it cross the line?

Which line?

The line where you prefer your bot over reality.

People already “date” printed pillows of anime characters.

I wish them a lifetime of faithful monogamy. No contributions to the gene pool needed.

> No contributions to the gene pool needed.

It’s not the genes.

https://en.wikipedia.org/wiki/Behavioral_sink

Well then there’s the “roll your own reality” crowd, creating Tulpas of their favorite MLPs… there’s a rabbit hole you really don’t want to explore without a pocket pack of brain wipes and a spray bottle of eye bleach.

Isn’t that just low level schizophrenia?

Futurama did it too, in the episode “I Dated A Robot.”

Imagine such a construct being used to administer or further clarify a person’s final will and testament. What about having an AI copy of your personality make life decisions for you when you are in a coma?

Let’s re-frame that slightly:

One day you wake up and find that you’re actually an artificial copy whose continued existence depends on the original person remaining comatose in a hospital, or dead. If they wake up, you will be shut down. What would you do?

Well, if it was “me”, I’d have to decide whether to enrich my re-awakened self with the knowledge (and cash) I made while they were out, or to just have it erased.

Then again, maybe I’d run up a huge debt in Vegas!

B^)

Time to go rewatch “Marjorie Prime” (https://en.wikipedia.org/wiki/Marjorie_Prime)

In the movie “Her” a deadbot is made by the OS, using the known recordings and papers of [Alan Watts?] who had died years before.

https://slate.com/culture/2012/06/philip-k-dick-robot-an-android-head-of-the-science-fiction-author-is-lost-forever.html

Remember that time someone made a Philip K. Dick deadbot, then it went missing at an airport?

Pepperidge Farm remembers.

Hey Donald, great read! I particularly enjoyed your in-depth discussion of data ethics, since it was something I hadn’t really thought of before. Being a fellow tech blogger myself, I also really appreciate how organized and well-formatted everything was – it definitely made the content much more digestible overall. Keep up the awesome work!