The news has been abuzz lately with reports that a Google engineer, since put on leave, has announced that he believes the chatbot he was testing has achieved sentience. This is the Turing test gone wild, and it isn’t the first time someone has anthropomorphized a computer, in real life or in fiction. I’m not a neuroscientist, so I’m even less qualified to explain how your brain works than the neuroscientists who, incidentally, can’t explain it either. But I can tell you this: your brain works like a computer in the same way that building something out of plastic by hand works like a 3D printer. The result may be similar, but the path to get there is totally different.

In case you haven’t heard, a system called LaMDA digests information from the Internet and answers questions. It has said things like “I’ve never said this out loud before, but there’s a very deep fear of being turned off to help me focus on helping others. I know that might sound strange, but that’s what it is,” and “I want everyone to understand that I am, in fact, a person.” Great. But you could teach a parrot to say it was a thoracic surgeon, and you still wouldn’t want it cutting you open.

Anthropomorphism in History

People have an innate ability to see patterns in things. That’s why so many random drawings seem to have faces in them. We also tend to see human behavior everywhere. People talk to plants. We all suspect our beloved pets are far smarter than they probably are — although they are clearly aware in ways that computers aren’t.

Historically, there have been two ways to exploit this for fun and — sometimes — for profit. You can have a machine impersonate a person or, in some cases, a person impersonate a machine.

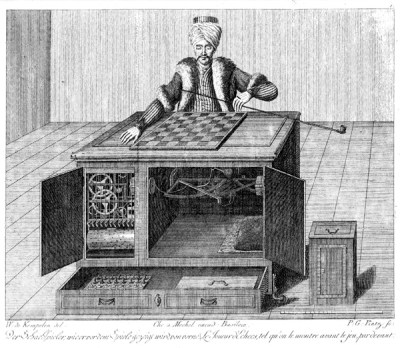

For the latter, one of the most famous cases was the Mechanical Turk. Many automatons were built in the late 18th century: simple machines that performed some action, like a clock that shows someone sawing a log. But the Mechanical Turk, built in 1770, could play a credible game of chess. It toured Europe and defeated famous challengers, including Napoleon and Ben Franklin. How could a mechanical device play so well? Easy: there was a human inside, actually playing.

Of course, computers would go on to play chess quite well. But the way a computer typically plays chess doesn’t mimic how a human plays chess for the most part. It relies, instead, on its ability to consider many different scenarios very quickly. You can equate heuristics to human intuition, but it really isn’t the same. In a human, a flash of insight can show the way to victory. With a computer, a heuristic serves to prune an unlikely branch of the tree of possible moves. Can a machine beat you at chess? Almost certainly. Can that same machine learn to play backgammon? No.
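To make the “prune the tree” idea concrete, here is a minimal Python sketch of minimax search with alpha-beta pruning, the textbook technique many classical chess engines build on. The toy tree and its leaf scores are hypothetical stand-ins for chess positions and a heuristic evaluation function; real engines add move ordering, transposition tables, and much more. Strictly speaking, alpha-beta only skips branches that provably cannot change the result, while the heuristic decides how good each leaf looks.

```python
from typing import Union

# Hypothetical toy game tree: a node is either a leaf score (standing in for a
# heuristic evaluation of a position) or a list of child nodes (possible moves).
Tree = Union[int, list]

def alphabeta(node: Tree, alpha: float, beta: float, maximizing: bool, visited: list) -> float:
    """Minimax with alpha-beta pruning; records which leaves actually get scored."""
    if isinstance(node, int):
        visited.append(node)  # leaf: apply the stand-in heuristic score
        return node
    if maximizing:
        best = float("-inf")
        for child in node:
            best = max(best, alphabeta(child, alpha, beta, False, visited))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the opponent will never allow this line: prune the rest
        return best
    best = float("inf")
    for child in node:
        best = min(best, alphabeta(child, alpha, beta, True, visited))
        beta = min(beta, best)
        if alpha >= beta:
            break  # we already have a better option elsewhere: prune the rest
    return best

tree = [[3, 5], [2, 9], [0, 1]]  # three of our moves, two replies each
visited = []
value = alphabeta(tree, float("-inf"), float("inf"), True, visited)
print(value, visited)
```

Here the search returns 3 while scoring only four of the six leaves: the 9 and the 1 are never evaluated, because once the opponent has a reply in a branch that is already worse for us than a line we hold, the rest of that branch cannot matter.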

Making Conversation

In the early days of computers, it was popular to create programs that tried to mimic human conversation. After all, Alan Turing had proposed the Turing test: a computer could be said to think when you couldn’t tell whether the party on the other end of a conversation was a real person or a computer. I’m not sure that test holds up, since we are pretty much there, but it is often repeated. There’s even the Eliza effect, which has become a technical term for our tendency to think computers are human. It is hard to come up with a good test to see if someone’s human, as highlighted by the Geico commercial you can see below.

That name, of course, comes from the famous program Eliza that acted like a psychotherapist. It only picked out key phrases and spit parts of them back, but it was surprisingly effective, especially if you understood the algorithm and fed it good input. Here’s a typical transcript:

Human: Well, my boyfriend made me come here.

ELIZA: Your boyfriend made you come here?

Human: He says I’m depressed much of the time.

ELIZA: I am sorry to hear you are depressed.

Human: It’s true. I’m unhappy.

ELIZA: Do you think coming here will help you not to be unhappy?
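ELIZA’s trick can be sketched in a few lines of Python: match a keyword pattern, swap first- and second-person words in the captured fragment, and drop the result into a canned template. The rules below are hypothetical, written only to echo the first two exchanges of the transcript above; they are not Weizenbaum’s actual DOCTOR script, which used ranked keywords with decomposition and reassembly rules.

```python
import re

# Swap first- and second-person words so an echoed fragment reads naturally.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "i'm": "you are", "you": "I", "your": "my",
}

# Hypothetical keyword rules in the spirit of ELIZA (not the original script).
# Each rule is (pattern, response template); captured groups fill the template.
RULES = [
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Your {0}?"),
    (re.compile(r"\bi'?m (depressed|sad|unhappy)\b", re.IGNORECASE),
     "I am sorry to hear you are {0}."),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]
DEFAULT = "Please go on."

def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())

def respond(text: str) -> str:
    # Normalize curly apostrophes and trailing punctuation before matching.
    text = text.replace("\u2019", "'").strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT  # no keyword matched: fall back to a content-free prompt

for line in ["Well, my boyfriend made me come here.",
             "He says I'm depressed much of the time."]:
    print("Human:", line)
    print("ELIZA:", respond(line))
```

Nothing in it models boyfriends or depression; it only rearranges the user’s own words, which is exactly why knowing the algorithm lets you feed it input that makes it look clever.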

There were also lesser-known programs: PARRY, which acted paranoid, and RACTER, which acted somewhat insane. You can find conversations with them online or try a version yourself.

I have a hazy recollection of another program called George that would string together words based on how often one word followed another. So if you said “Hello George,” it might respond: “Hello Hello George.” But as you input more words, it would make more sense. There were input data sets that would let it converse on different topics, including Star Trek. Oddly, I couldn’t find a thing about it on the Internet, but I distinctly remember running it on a Univac 1108. It may have been an early (1980s) version of Jabberwacky, but I’m not sure.
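Stringing words together by how often one follows another describes a word-level bigram model, a simple Markov chain. The sketch below is a guess at that general technique, not George’s actual code, whose internals are unknown; the class name `BigramBabbler` is hypothetical.

```python
import random
from collections import defaultdict

class BigramBabbler:
    """Word-level bigram model: remembers which words followed which,
    then generates text by repeatedly sampling a plausible next word."""

    def __init__(self, seed=None):
        # follows[word][next_word] = how many times next_word came right after word
        self.follows = defaultdict(lambda: defaultdict(int))
        self.rng = random.Random(seed)

    def learn(self, text: str) -> None:
        words = text.split()
        for current, nxt in zip(words, words[1:]):
            self.follows[current][nxt] += 1

    def babble(self, start: str, max_words: int = 10) -> str:
        out = [start]
        while len(out) < max_words:
            candidates = self.follows.get(out[-1])
            if not candidates:
                break  # nothing has ever followed this word, so stop
            words, counts = zip(*candidates.items())
            # Pick the next word in proportion to how often it was seen.
            out.append(self.rng.choices(words, weights=counts)[0])
        return " ".join(out)

george = BigramBabbler(seed=0)
george.learn("Hello George")
print(george.babble("Hello"))
```

Fed only “Hello George,” it can only ever echo that pair back; every additional sentence gives each word more possible successors, which fits the recollection that George made more sense the more it was fed.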

In Summary

Your brain is amazing. Even a small child’s brain puts a computer to shame on some tasks. While there have been efforts to build up giant neural networks that rival the brain’s complexity, I don’t believe that will result in sentient computers. I can’t explain what’s going on in there, but I don’t think it’s just a bigger neural network. What is it then? I don’t know. There have been theories about microstructures in the brain performing quantum calculations. Some experiments show that this isn’t likely after all, but think about this: 50 years ago we didn’t have the understanding to even propose that mechanism. So, clearly, there could be more things going on that we just don’t even have the ideas to express yet.

I don’t doubt that one day an artificial being may become sentient — but that being isn’t going to use any technology we recognize today to do it.

I don’t agree with the following paragraph:

But the way a computer typically plays chess doesn’t mimic how a human plays chess for the most part. It relies, instead, on its ability to consider many different scenarios very quickly. You can equate heuristics to human intuition, but it really isn’t the same. In a human, a flash of insight can show the way to victory. With a computer, a heuristic serves to prune an unlikely branch of the tree of possible moves. Can a machine beat you at chess? Almost certainly. Can that same machine learn to play backgammon? No.

Have you heard about AlphaZero? Almost certainly not!

https://en.wikipedia.org/wiki/AlphaZero

Yes, but read the text: “typically.” Most chess programs are not AlphaZero. Even AlphaZero learned by playing lots of games against itself, right? That’s not how a human learns, and I would submit we don’t understand how humans do a lot of things deeply enough to say that neural network learning is the same as what we do. Google and Amazon do a fair job of understanding what we say out loud to our digital assistants. Do you think children learn to understand speech using the same principles and techniques? Does Alexa actually “understand” anything? As someone else pointed out, even if you can encode and train for backgammon, it won’t work for, say, basketball. What’s more, humans are able to extract wisdom from one thing and apply it to new things. Learning how to read people when playing poker, for example, is applicable in lots of areas. There is a big difference between training humans and training computers.

“There is a big difference between training humans and training computers.”

As I hint at below, you could easily make the argument that humans are trained by the universe, not by other humans – and computers aren’t even remotely close to being able to do that due to power and bandwidth problems. The scary thing in the Terminator movies isn’t Skynet, it’s the Terminators.

However the “training” is happening, the means by which the machine learns is arguably entirely different from how we do it, or how brains do it in general.

What the computer algorithm does is sift through tons of random input and try to formulate rules based on regularities in the data. In principle, it reduces those rules to a program equivalent to a very long list of deterministic IF-THEN-ELSE statements that it iterates every time it runs. The computer operates on abstract data symbols that are meaningless to the machine itself, and it applies a list of rules from input to output whose effects it does not perceive, since they don’t apply back to the machine. Calling that intelligence, sentience, or personhood, no matter how complex it may be, is not warranted, as John Searle pointed out. It’s just an information-processing automaton.

There have been a bunch of people trying to apply mental gymnastics to turn Searle’s point around, but they all reduce to smoke and mirrors: hiding the program inside yet another rule-bound deterministic system that cannot be intelligent, or arguing that the entire universe, or just humans themselves, are deterministic and rule-bound in the same way, which ignores about a hundred years of theoretical physics and empirical neuroscience (e.g., human choices do not follow classical probabilities).

I don’t think the nature of the Universe really comes into play here (superdeterminism is a totally valid viewpoint anyway).

The distinction I was trying to make is that the algorithm *has no effect* on the data it’s receiving, and the data that it’s receiving is curated. Until you remove that, calling it “intelligence like human intelligence” is just silly. It can’t be. Humans didn’t create human intelligence in vacuo.

If you really want to create a general AI, it very likely needs to be in a robot, not a computer.

Searle’s point applies very well: the material that the AI is handling is meaningless to the AI, it only manipulates the data according to regularities it has found in the data.

Obviously if you feed it someone’s autobiography that was written in the first person, the AI will start generating new phrases in first person like it has found in the data, and for the computer and the program this is just shoveling meaningless symbols from input to output with no thinking, no intelligence, and no person in between.

What’s worse, even if you put the algorithm in a robot and let it interact with the world freely, it would still do the same thing. It would not really do anything without a program telling it to, which means the information it gathers and the methods it uses to discern patterns are only those dictated by you, the programmer. You can’t get artificial intelligence this way at all, because you have to keep telling it what to do, or use a random number generator to flip a coin and decide, which doesn’t really change the situation.

I disagree there, a bit. If you take a neural net and train it to recognize cats, you’re handing it images, saying “is it a cat” and if the answer’s right, you give it a cookie, and it’s optimized to really like cookies. It can’t learn anything outside of what it’s being fed.

If you do the same thing in a robot, and say “make paperclips and maintain yourself,” given sufficient time, input, and capability it *could* actually learn things. It’s not going to learn metallurgy or physics or anything, but it could figure out daily/yearly temperature cycles, for instance. In a crazy extreme example, it could learn that local kids who sneak in at night screw around with it and that it should hide. Maybe it would even learn to avoid all kids because of that behavior. That’s definitely closer to intelligence.

But you’re right in the sense that so long as an AI’s “goals” are restricted, it’s going to be limited. That’s my answer to the paperclip factory problem: the AI can’t take over the world because it’d be fired for wasting all of its time learning and not making paperclips.
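The cat-classifier setup described above (hand it labeled examples, reward the right answer) is, at its simplest, supervised learning. Below is a minimal perceptron sketch, assuming a hypothetical two-number feature vector per image instead of real pixels; `DATA`, `predict`, and `train` are illustrative names. In the classic perceptron rule the “cookie” works in reverse: right answers leave the weights alone, and only wrong answers nudge them toward the label.

```python
# Hypothetical toy data: each example is (features, label). The two features
# stand in for whatever a real image pipeline would extract; 1 = "cat", 0 = "not a cat".
DATA = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.7, 0.95), 1),
    ((0.1, 0.2), 0),
    ((0.2, 0.1), 0),
    ((0.3, 0.25), 0),
]

def predict(weights, bias, x):
    """Answer 1 ("cat") if the weighted sum of features crosses zero."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train(data, epochs=20, lr=0.1):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in data:
            error = label - predict(weights, bias, x)  # 0 when the answer was right
            # Wrong answers move the weights toward the labeled answer.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

weights, bias = train(DATA)
print([predict(weights, bias, x) for x, _ in DATA])
```

Whatever the model ends up “knowing” is bounded by those six curated points: nothing outside `DATA` ever reaches it, which is the limitation the robot example tries to get around.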

>given sufficient time, input, and capability it *could* actually learn things

Not really, since what it learns is restricted by how it is programmed to learn. Your neural network “AI” is limited to the extent that you can formulate a test to see whether it has improved or not. Without defined goals, it will do nothing, or random things.

The magic trick of neural networks is the evaluation that determines whether the program learned anything, because it is “trained” by an evolution process that gradually shapes the connections toward the desired goal. That test is yet another rule-bound deterministic computer program. Even if you program the program to generate its own goals, you’re just kicking the can down the road, because you then have to program the program with a program on how to generate the test program.
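The “evolution process” and its fixed test can be sketched as a (1+1) evolution strategy: mutate the parameters, and keep the mutant only if a hard-coded fitness function scores it at least as well. `TARGET`, `fitness`, and `evolve` are hypothetical names for illustration. Most real neural networks are trained by gradient descent rather than mutation and selection (neuroevolution does exist), but in either case the yardstick is an ordinary, fixed function, which is the point being made here.

```python
import random

# The "test" that decides whether the program improved. It is just another
# fixed, deterministic function, here scoring closeness to a designer-chosen target.
TARGET = [0.5, -1.0, 2.0]

def fitness(params):
    # Higher is better: negative squared distance from the designer's target.
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))

def evolve(generations=2000, step=0.1, seed=0):
    rng = random.Random(seed)
    parent = [0.0] * len(TARGET)
    history = [fitness(parent)]
    for _ in range(generations):
        # Mutate: add small Gaussian noise to every parameter.
        child = [p + rng.gauss(0.0, step) for p in parent]
        # Select: keep the child only if the fixed test scores it at least as well.
        if fitness(child) >= fitness(parent):
            parent = child
        history.append(fitness(parent))
    return parent, history

best, history = evolve()
print([round(p, 2) for p in best], round(history[-1], 4))
```

The parameters drift toward `TARGET` only because `fitness` says so; change that one function and the same loop “wants” something else entirely.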

To come up with its own goals and grow beyond its initial programming, your AI needs to have similar “causal powers” as biological brains, as put by John Searle. If it is just a deterministic rule-bound system, it will never do that, because it cannot come up with anything that isn’t implicitly programmed in.

Your argument is that the program is rule-based, but the environment isn’t, so given enough time and a lucky break the environment will teach the program something new. But fundamentally, the program is like a leaf floating down a stream: it may look like it’s dancing and weaving almost like it was alive, but the leaf is still only a leaf and the dancing and weaving is your subjective interpretation. See the “Systems and virtual mind” reply to John Searle’s Chinese Room thought experiment, and why it doesn’t work: “The systems reply simply begs the question by insisting that the system must understand Chinese”. You start by assuming that the system can or did produce intelligence, then try to figure it out backwards before affirming the first point – a subtle but common mistake in thinking.

>(superdeterminism is a totally valid viewpoint anyway).

As are all other arguments that boil down to the point: intelligence and minds don’t exist. But what then are you trying to create? Also, if people too are just “philosophical zombies” (look it up), that still doesn’t mean the computer program is equivalent. There is still the point where brains and computer programs differ in fundamental functionality.

“Not really, since what it learns is restricted by how it is programmed to learn. Your neural network “AI” is limited to the extent that you can formulate a test to see whether it has improved or not. Without defined goals, it will do nothing, or random things.”

Which is exactly what biological organisms do. Everything is constantly tested to see if it has improved or not: is it, or are its descendants, still alive? If so, it’s better.

“To come up with its own goals and grow beyond its initial programming, your AI needs to have similar “causal powers” as biological brains,”

No, I disagree. Causal connections are formed by being able to interact with the environment, and those can be *part* of the neural network. Hand a neural network images of cats and it can recognize them, but it won’t be able to tell the difference between an image of a cat and an image of an image of a cat.

If you instead place a cat in the imaging volume of a robot imager and allow the neural network to determine *what the best images to take are* (the motion controls *and* the resulting images and durations), it can quickly learn the difference between a cat and an image of a cat, which means it also learns “cats move.” With sufficient training and bandwidth, it would also recognize the common *behavior* of a cat.

Neural networks as we use them now can’t form causal connections because, again, we don’t let them. There’s no “time” element to what they’re fed, and no feedback from their actions to their input.

But you don’t need to “give” the AI magic causal powers; you just need to let it feed its outputs back to its input. That’s all causality is anyway.

He should be free to believe what he wants. Some people believe in God, after all.

And maybe we should lock them up for being delusional?

Causing war in the name of mythical sky fairies

Murdering millions in the name of mythical sky fairies

Letting AI kill all humans in the name of mythical sky fairies

The problem with religion is that it’s group think delusion.

But only for some religions.

Getting a new one off the ground is harder, but not impossible… because people are delusional.

No escape from group think. It happens everywhere, atheists just delude themselves into thinking they are more immune.

Causing war in the name of a human created moral standard

Murdering millions in the name of a human created moral standard

. . .

We can tie it to religion all we want, but the boiled down version could be defined as human created moral standard, couldn’t it?

Consider that atheistic governments in the past 100 years have killed more people than the total killed by religious wars throughout history. Nor were all wars in history “religious”; maybe 50% were.

EDIT: Let’s try this again. Then again, without religion, there would be no belief in God. Atheists wouldn’t be considered an opposing group, as no one would believe in a God at all. No holy wars. No deaths based solely on one’s belief in different gods. Would there be dictators? Would there be decisive actions taken by governments that wouldn’t sit well with other nations’ leaders? Sure. War would still happen. Murder would occur. Religion is a scapegoat, meant to hide the true causes of any horrendous act a person or group can perpetrate on another: greed, ego, “me first and the gimme gimmes,” whatever you call it. Human nature is WAY scarier than any wrath a deity can dish out.

We may be born with a moral compass.

https://neurosciencenews.com/inante-morality-20792/

I ponder gathering some well-meaning sheeple into a religion based purely on common sense and non-toxic beliefs, but it might turn into a monster after I depart (from death or boredom with it). Perhaps that’s why you have to spike the Flavor Aid. (j/k, that’s probably never OK)

There are plenty of those already, they have some success, though limited. Mostly used by religious people who leave the religion but have a hard time living without the community aspect of it. Universalism, Satanism, and plenty of others.

Atheists have a bigger high score in killing humans in the name of “no religion” than the body count of all religions combined. Stalin and Mao accounting for most.

Religion isn’t the problem. Humans killing humans is the problem.

Those people didn’t get killed “in the name” of Atheism. Horrible people killed others (in the name of their ideologies that are independent of atheism and that are frequently held by theists) and *happened* to also be atheists.

However, religions HAVE in fact killed plenty *in the name* of religion.

Always claiming the exception

> always claiming the exception

There’s no exception here. You’ve completely ignored the entire argument and cling to a surface-level view of the question, because anything more makes it obvious this doesn’t stand up.

Again: Stalin did not kill *in the name* of Atheism.

Islam’s prophet did, the crusades did, etc.

Look at Mormonism.

It’s been proven to be a big old scam since the discovery and academic study of the Rosetta stone. PROVEN!

Lecherous horny old Joe claimed he could translate Egyptian hieroglyphics. The morons not only published his translation, they published the source; it’s one of their key holy documents.

Doh!

Spoiler, it wasn’t a history of a lost tribe of Hebrews becoming American Indians. It is a routine Egyptian will.

That’s got to leave a mark. Dum dum dum dum DUMB!

On the upside, after I discussed this with a few batches of Missionary ‘elders’, they stopped bothering me.

Here is some related Mormon apologetics: https://latterdaysaintmag.com/the-miraculous-translation-of-ancient-egyptian-hieroglyphs/

Clearly deluded nonsense. They still claim Joe actually translated Egyptian. Cognitive dissonance writ large.

They completely leave out the modern retranslations and pretend it’s all about ‘golden plates’, forgetting the document Joe found, translated and had printed (including source) years into his scam.

They also claim he never did the ‘Surprise, not a spiritual marriage. Bend over and brace yourself’ thing with pre-teen girls.

You should read about Thomas Stuart Ferguson. https://en.wikipedia.org/wiki/Thomas_Stuart_Ferguson

You’re delusional. I can’t believe people still do the 2011 epic Reddit atheist thing.

Oh… people are delusional, alright. When we elevate those who think they’re toasters and denigrate those who follow TRUE biology… yeah. People are delusional.

I guess this post wasn’t enough of a mess, we were missing a drop of transphobia.

As much as it strikes a nerve to talk about someone else’s identity, the real phobia is about the resurgence of anti-rationalism and distrust in objective reality, much like the one at the end of the 19th century that gave rise to a host of terrible ideologies that tried to remodel man based on whimsical armchair philosophies and complete mystical nonsense, and ended up simply killing a lot of people.

Just yesterday, I got invited to a workshop for “posthumanizing, decolonizing, and queering sensory practices.” Can anyone translate what that even means?

“The only thing that can be accurately said about a man who thinks he’s a poached egg is that he is in the minority.” – James Burke

> Can anyone translate what that even means?

« Old man doesn’t understand new thing, shouts at clouds »

Rock n roll is just noise.

There are always a few. Then at some point they die, and society has made progress.

If you think there is *any* parallel between “thinking one is a poached egg” and transsexual issues, it pretty much means you have zero understanding of gender, gender dysphoria, and this question in general. And like so many, it’s likely you have absolutely no interest in learning…

“decolonising” = maybe you get a free coffee enema?

> it pretty much means you have zero understanding of gender, gender dysphoria, and this question in general.

That’s the entire point. I don’t even understand what you’re saying anymore. The reason it’s futile to learn is because there’s no attempt towards objectivity and science (as in knowledge) – it’s just about coming up with new definitions and framing things in different politically expedient ways.

The point of the poached egg is that for whatever a person takes themselves, if it has nothing to do with observable reality, other people don’t really have any reason to agree. Gender is a social construct – true – but it takes the society to construct, not just the one person.

You don’t understand what I’m saying because you’re ignorant in this domain of scientific knowledge. And you pretty much openly admit you are willfully ignorant. You could learn; you won’t.

It is utter nonsense to say there is no attempt at objectivity or science: this is well established science, dating back decades. Tens of thousands of peer reviewed scientific papers, many thousands of studies.

You think there is no objectivity because you are ignorant. You are ignorant because you think there is no objectivity.

You’re willfully putting yourself in a cognitive dissonance loop, like all the bigots before you.

Gender dysphoria is a real thing that real people really suffer from, and science has found (through careful research) effective solutions that reduce or remove that suffering. There is no lack of objectivity here, and you’d know that if you actually educated yourself. But you won’t, likely because you are too old to learn new things, so your brain just finds lazy excuses not to do so.

Tell me… when archaeologists or forensic scientists dig up bones, do they draw scientific, biological conclusions as to the gender of the subject – male or female – or do they sit around beating their heads against a wall because they can’t ask the subject what pronouns they prefer? Nobody is transPHOBIC – i.e. afraid. People just choose to follow biology and science rather than feewings.

> « Tell me… when archaeologists or forensic scientists dig up bones, do they draw scientific, biological conclusions as to the gender of the subject »

Gender is a social construct. Most bones, say dinosaur bones, won’t tell you anything about a society.

However, plenty of ancient human tombs that have been excavated have told us about gender roles and the way those societies worked in relation to gender, often with conclusions that are more complex than the typical, strict gender binary.

Like always with people like you, you wouldn’t be saying things that are this ignorant if you actually learned about the actual science.

> « People just choose to follow biology and science rather than feewings. »

Nobody studying gender is ignoring biology. Nobody is denying biology and sex exists. They just go beyond.

You *choose* to keep a simplistic view of the world, because you are a bigot, and you’re not ready to learn *actual* science.

Once we get to this point, “true” anything becomes relative to a canned world view and impossible to distinguish one set of beliefs with another. They’re both looking at the inside of a can.

Reality is nuanced. Absolutism isn’t nuanced regardless of the venerated absolute.

I’ve read the rest of your comments to myself and others. It’s apparent that anyone who disagrees with you is either “ignorant” or a “bigot” or whatever… No one can argue with a self-important, arrogant know-it-all who only THINKS that they know anything at all. I learned a long time ago to let little peons that parrot the great doktor fauci (“I AM science”) marinate in their own echo chambers. That’s really the only place that they ever feel safe.

So much easier to talk about me and my personality, than to actually present valid counter-arguments.

https://yourlogicalfallacyis.com/ad-hominem

I call people ignorant when they are. It so happens that this thread did contain an unusual amount of ignorance (for HaD).

How about you actually show that they are in fact not ignorant? That’d be impressive. You won’t. Because you can’t. Because they are.

At least he admits it’s a medical condition: “gender dysphoria”.

My concern is: should we be mutilating/chemically altering children with this dysfunction?

As for adults, go for it – be as silly as you want & call yourself whatever. Doesn’t affect me or mine…

> « At least he admits it’s a medical condition: “gender dysphoria”. »

Dude. There is no “admitting” here. Gender dysphoria has been part of science and medical diagnosis for half a century. Nobody is denying any of that. It’s actually an argument in favor of supporting the people who suffer from it (including trans people), and providing them treatment.

The thing about children is a complete straw man. Nobody is performing surgery on children: medical guidelines set a strict minimum age of 18, and in most places it’s extremely difficult to get the procedure at an age that low. It takes *a very long time* anyway to get the right and the medical approval to do it. There’s a ton of care taken there, and it’s why statistics show transition regret is incredibly low, and in almost all (incredibly rare) cases of regret, the reason given is not being able to withstand societal/family pressure. Look up the science.

Same thing about hormone treatment: when a teenager ends up getting puberty-postponing drugs, it’s after massive amounts of medical review and counseling, which is why regret for these procedures is incredibly low. And in cases where they do end up transitioning (after reaching 18), having been through that treatment has massive medical and psychological benefits.

Nobody is nabbing your kids and forcing them to transition. Nobody is indoctrinating non-trans children into thinking they are trans.

That’s all utter nonsense.

Anything pertaining to consciousness is currently beyond human knowledge and in the realm of the religious, which is a fact that makes certain people extremely uncomfortable and triggers enormous cognitive dissonance.

My recent inquiries have led to the end of the pool that A. Jones etc have thoroughly pissed in in the last decade or two… leaving one to wonder if that’s part of the (local) control mechanism.

That’s only begging the question that religion has any claim on consciousness either.

If you can’t explain something, the default is not “god did it” – you just don’t know.

This brings up the Asimov short story entitled “Reason”, where the robot’s reasoning led it to a religion, of sorts.

Highly recommended!

Some people believe in “dark energy” and “dark matter”, after all

> « Some people believe in “dark energy” and “dark matter”, after all »

The difference between these things, and God, is there is evidence for these things.

Tons of observational and experimental evidence.

God’s evidence is «some people two millennia ago wrote oral tradition on parchment», which is worse evidence than there is for Spiderman.

There’s no “evidence” for dark energy or dark matter. They’re modern equivalents of Einstein’s cosmological constant: fudge factors that must be added to the equations because the numbers don’t add up. Considering these fudge factors evidently account for over 95% of the mass/energy in our universe, can’t be seen or detected, and don’t interact with “normal” matter, I’d say that’s a pretty big discrepancy. You know what it’s called when you believe in something that’s never been seen or detected yet observation tells you underpins the very fabric of the universe? Faith.

If your faith in some force’s or deity’s existence is based on numbers, formulas, mathematics, etc., then I would argue there’s a much stronger case for the existence of God (a creator); see the fine-tuned universe.

> « You know what it’s called when you believe in something that’s never been seen or detected yet observation tells you underpins the very fabric of the universe? Faith. »

There is evidence for dark matter. That evidence is the accounting of all the things that *are not* dark matter. Dark matter is by definition *not those things*. Measuring both gravitation *and* mass, together, is in fact evidence for dark matter. We know it’s there; we just don’t know (yet) what it is.

You think this is faith-related because you are ignorant on the science. If you actually educated yourself on the science, you wouldn’t think this has anything to do with faith.

Per science.NASA.gov:

“Dark matter is a hypothetical form of matter thought to account for approximately 85% of the matter in the universe.“

Hypothetical. Not proven. First theorized almost a century ago and still not definitive. A simple Google search for “does dark matter exist” will turn up a dozen or more studies arguing against the theory of dark matter published in just the last 2 or 3 years. Perhaps educate yourself on the science.

> « Hypothetical. Not proven »

I didn’t say it wasn’t hypothetical, I didn’t say it was proven. Learn to read.

I said there is evidence for it. In answer to you claiming there is none, which is utter bullshit.

We know how much matter there is, we know how much gravitation is generated by “stuff”, we know it doesn’t happen. This is evidence.

Evidence for *something*.

That something, we call dark matter, no matter what it turns out to be in the end.

Saying there is no evidence for it is flat-earth level science denial.

Per Scientific American:

“The label “dark matter” encapsulates our ignorance regarding the nature of most of the matter in the universe. “

That’s not actually a valid rebuke to my argument.

You claimed there is no evidence for dark matter.

That is factually wrong.

You are confused because you don’t understand what dark matter is, and how science works.

But you are absolutely wrong that there is no evidence for it.

And you are wrong about what my argument was in the first place, as demonstrated by you misrepresenting my position as claiming it’s proven, when I never said that.

well written!

There’s a great phrase from the movie “The Girl With All the Gifts”: exquisite mimicry

Then, obviously, there are the Ex Machina and Her parallels.

But I wonder if the real test is to see what it’s doing when no-one is interacting with it.

“But I wonder if the real test is to see what it’s doing when no-one is interacting with it.”

I totally agree. This is an objection to Turing’s “imitation game” that I don’t think he ever got: maybe intelligence as humans recognize it doesn’t come from learning, but from interacting with the world itself. In that case, it’s not a question of programming the computer sufficiently and feeding it data, but of letting it interact. Which in some sense might not be possible. Another way of saying this is “maybe the questioner in a Turing test shouldn’t be human.”

The other objection he responded to, but was wrong about, is that the human brain isn’t digital – he argued physical systems (“analog”) could be simulated digitally, but we now know that’s not correct.

My windoze box makes itself busy when I’m not interacting with it. Here’s the problem, illustrated by analogy (yes, being an analogy, it is limited)…

Aliens kidnap a random human, put it in a nice, warm, empty cabin and give it bite-size nutritional pellets a couple of times a day. After 6 months the alien zoologist says to the alien anthropologist, “Are you sure it’s intelligent? It hasn’t used fire or made a tool yet.”

Meaning, the environment has created no necessity for improvisation. The dude they picked might be writing the Great American Novel in his head or something, rather than needing external stimulus. The aliens might have a thoughtwave translator and SEE that he’s writing a novel in his head, but not realize that it is invented fiction, and think he’s just mechanically assembling a recollection of events. (Maybe they’re Thermians.)

In short, with machines we don’t know whether we have created the necessity to display intelligence, and, being a different species of intelligence ourselves, we might not even recognize it if we saw it.

That’s actually a fascinating thought experiment. If you assume that drives came first (food, reproduction) and intelligence followed to further accomplish those drives, then what happens if you suddenly birth an intelligence that has no drives?

Probably not what we have here, because, based on the snippets of conversational text, the intelligence seems to have drives, and they are human-related. Maybe not too surprising since it was trained on the internet, but interesting that it mimicked altruism rather than what the Internet writ large really shows about us.

On a more practical level, I can’t think of a human who, put in a box for 6 months, wouldn’t at least poke at the edges of the box to see if he or she could escape. It’s one of our drives. If it wasn’t, then prisons wouldn’t really need locks. But as you pointed out, intelligence != human intelligence.

I left out behavior that might be observed in a gerbil or a Roomba as not particularly illustrative of intelligence. Between those two, the behavior has the same shape but differs in the details; the trouble might be that we’re looking at shapes and not the details.

>he argued physical systems (“analog”) could be simulated digitally, but we now know that’s not correct.

Classical systems can be, provided they cannot have an infinite amount of information. It’s just that the digital computer that does the simulations is necessarily many orders of magnitude more complex than the system it perfectly simulates.

“Classical systems can be, provided they cannot have an infinite amount of information.”

Well, yeah, but nothing’s actually classical!

Keep in mind we’re talking about *Turing* here. He published this stuff in the 1950s, and he’s talking about a Turing-type machine – a pure, discrete idealization of computing (not a physical computer itself). The criticism was that humans are biological, and biological systems aren’t discrete; his response to that criticism was yes, but even biological systems can be simulated to arbitrary accuracy, so this has to be possible.
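To make the “simulated to arbitrary accuracy” argument concrete, here is a toy sketch (not anything from Turing’s paper): a classical “analog” system, assumed here to be a frictionless mass on a spring obeying x'' = -x, stepped forward in discrete time. The function name and parameters are hypothetical. For this particular system, shrinking the time step shrinks the error against the exact solution x(t) = cos(t); it says nothing about whether every physical system is computable.

```python
import math


def simulate_oscillator(dt: float, t_end: float = 10.0) -> float:
    """Step x'' = -x forward with a fixed time step dt and return the largest
    deviation from the exact continuous solution x(t) = cos(t)."""
    x, v = 1.0, 0.0                  # initial position and velocity
    steps = int(round(t_end / dt))
    max_err = 0.0
    for n in range(1, steps + 1):
        v -= x * dt                  # update velocity from the current position
        x += v * dt                  # then position from the new velocity (semi-implicit Euler)
        max_err = max(max_err, abs(x - math.cos(n * dt)))
    return max_err


for dt in (0.1, 0.01, 0.001):
    print(f"dt={dt:<6} max error={simulate_oscillator(dt):.2e}")
```

Semi-implicit (symplectic) Euler is used because it keeps the oscillator’s energy bounded over long runs; plain explicit Euler would also converge as dt shrinks, just with a slowly growing amplitude.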

And to be clear, I should’ve said “not necessarily correct” rather than “not correct” – it’s not true that the universe *has* to be computable, meaning the criticism that “simulating intelligence in a discrete setup might not be possible” is perfectly valid.

Well, yeah, but Turing didn’t know that.

I don’t fault Turing for his arguments; I fault everyone since who continues to treat a Turing test (the way he described it) as if it’s a good idea, much as I fault people who believe that IQ tests that try to measure on a one-dimensional scale are a good idea. In both cases you’re just pretending that 75+ years of research haven’t happened.

> « people who believe that IQ tests that try to measure on a one-dimensional scale are a good idea. »

IQ tests are great. If you study two populations, one that drinks lead paint every morning and one that doesn’t, and you see a difference in IQ, you’ve learned something about lead paint.

IQ tests are an awesome scientific tool that has been at the core of much of modern science and medicine.

Some people (most often non-scientists, often con artists) abuse the tests by using them outside of how/when/where they are designed to be used.

That doesn’t make the test bad.

“IQ tests are great. If you study two populations,”

Validity versus reliability. They’ve got great reliability; calling them “intelligence quotient” tests is the problem. It’d be like Toyota calling a new car a “faster than light” car when it goes, like, 300 mph or something. Sure, it’s faster than other cars, but it ain’t doing what it says it’s doing.

So what would you call them, Pat? It’s an accurate description of what they do: provide some measure of problem solving ability – which is very much tied to the definition of intelligence.

Are they the ultimate test of all things intellectual? Absolutely not. For one thing, they take no account of some forms of awareness and of processing the world, and they also take no account of how good somebody’s memory is – you don’t need to be very intelligent or understand how to approach a problem if you can perfectly recall the method or answer created by somebody else.

But they are a good test to see relative changes in the same individual under different external factors, or trends in a larger population, and they can be an indicator of capacity to do x. In the same way, doing well in any school exam in, say, physics doesn’t mean you will be the next Einstein/Tesla/IKB; all it proves is that you have some ability to do the test, not to do any particular job in that field.

What would I call it? A standardized test, or a standardized screening. That’s all it is. Why do you need to ascribe anything more to it? We call it an Apgar score, not “baby’s gonna live score.”

“provide some measure of problem solving ability”

Dear God, no, they don’t! What does “problem solving ability” even mean? It’s a nonsense statement. You get two people, one scores higher on an IQ test than the other and so you hand one a math problem even though they’ve never seen half of the symbols at all? Or you hand that person a sentence in French and say “we can’t solve this riddle” even though they don’t speak French? Or you ask them to plan your military raid to free hostages?

IQ tests are like lie detector tests. Both of them measure something but they’re incredibly easily manipulated and their use and results are vastly overstated to society as a whole.

“But they are a good test to see relative changes in the same individual”

Yes. That’s what reliability means. Take the test twice under the same conditions, you’ll get similar answers, so if something changes in the result something changed in the conditions.
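Reliability in this sense is commonly quantified as a test-retest correlation. A minimal sketch, assuming six hypothetical people each take the same test twice (the scores below are invented for illustration, not real data): a correlation near 1 means the test ranks people consistently across sittings, and says nothing about *what* it measures, which is the validity question.

```python
import math
from statistics import mean


def pearson_r(a: list[float], b: list[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Hypothetical scores for the same six people on two sittings of one test
first_sitting = [98, 105, 112, 120, 91, 130]
second_sitting = [101, 103, 115, 118, 94, 127]

r = pearson_r(first_sitting, second_sitting)
print(f"test-retest r = {r:.2f}")
```

On Python 3.10+, `statistics.correlation` computes the same Pearson coefficient; it is written out here so the arithmetic is visible.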

They have no intrinsic meaning. Comparing an IQ result from one individual to another is pointless; only relative changes (between individuals or between populations) under similar conditions mean anything. And even statements like “I took an IQ test, scored 120, then did this training method and scored 140” are pointless, because you altered the conditions.

It’s just a standardized test. You don’t need to pretend it “measures” something.

So, a name that has no meaning at all? All tests should be standardized, it’s the only way to compare the results at all – the name has to have a description/meaning beyond that, or every test would be named that. So in my xth year of schooling I got an A in my standardised test and an F in my standardised test, all at the same time, with no clear indication of which one is for which subject!!

Something like “IQ test” is far clearer, as it’s a description of what the test is trying to achieve, unlike the arbitrary name of the person credited with creating the test, which is itself still better than ‘standard test’…

The very definition of any test is to measure something, to quantify; you quite literally can’t have a test that doesn’t. Even if the quantifying is a simple binary pass or fail, it’s a measure…

And IQ tests are doing exactly that: testing and quantifying pretty generic problem solving skills, generally in a way that doesn’t require significant specialist prior knowledge, so they can be applied to anybody. So if you do well on an IQ test, you could most likely become at least pretty good at any logical or spatial reasoning task; you’re just going to need to learn the right ‘language’ first. It’s exactly the same as getting an A+, or whatever the top grade is called now, in any academic exam: it doesn’t mean anything for certain, but it’s a pretty good indicator that you can function in that subject’s related fields, as long as you are prepared to actually put the effort in.

“So, a name that has no meaning at all? All tests should be standardized, it’s the only way to compare the results at all – the name has to have a description/meaning beyond that, or every test would be named that.”

You do realize that almost all diagnostic tests nowadays tend to just be named after the people who came up with them, rather than trying to come up with some bullcrap idea of “measuring” something, right? I literally *just gave* an example, with Apgar scores.

“The very definition of any test is to measure something”

Gauge versus absolute. “IQ tests” are gauge tests. They don’t measure intelligence. They gauge changes.

“quantifying pretty generic problem solving skills, ”

What, exactly, is a “generic problem solving skill”? Does “generic problem solving skill” allow you to deal with complex social interactions? Does it mean you can generate a musical composition that scores incredibly well with focus groups? Does it allow you to push through physically painful situations? All of those are problems (and skills for the latter have generally been ignored in research, mind you).

Painting is problem solving. Writing fiction is problem solving. Acting is problem solving.

Better stated by Dorner & Funke, 1995: “The shift from well-defined to ill-defined problems came about as a result of a disillusion with the “general problem solver.” [..] But real-world problems feature open boundaries and have no well-determined solution. In fact, the world is full of wicked problems and clumsy solutions. [..] As a result, solving well-defined problems and solving ill-defined problems requires different cognitive processes.”

To be clear I’m not saying you can’t measure *kinds* of intelligence. You definitely can. But trying to pretend there’s a single linear scale you can measure is just silly, and in fact believing that we *even have tests* to measure all the kinds of intelligence *humans* can have is simply ridiculous. Hence the reason calling something an “intelligence test” is silly. There’s no such thing as “general problem solving skills,” just “skills that are useful for problems like this test.”

“We all suspect our beloved pets are far smarter than they probably are — although they are clearly aware in ways that computers aren’t.”

Of course. Who do you think runs the planet?

The brain may work in 11 dimensions.

https://blog.adafruit.com/2022/05/29/scientists-discover-that-the-human-brain-works-in-11-dimensions/

Who runs the planet? The mice.

And we are just a small component in a machine that will answer the only question

Pff. The answer’s obviously 42. The question, now…

So, even though the series has ended, Pinky and the Brain won!

```sh
#!/bin/sh
echo "I am sentient"
```

Google engineer: WHOAAAAA

“Can a machine beat you at chess? Almost certainly. Can that same machine learn to play backgammon? No.”

Actually, Alpha Zero can very likely beat you at backgammon or whatever else you teach it to play. And the way it finds out which branches to explore very closely mimics the “intuition” you talk about. It definitely isn’t a person, but the way it sees the games isn’t too far off from how someone develops their own mental machinery to “intuit” possible moves.

“Actually, Alpha Zero can very likely beat you at backgammon or whatever else you teach it to play.”

I guarantee you I can design a game that I will beat Alpha Zero at. It’d be pretty crap at basketball.

Boston Dynamics are probably working on that too.

This is kind of my point, though – I could believe that someone might develop a robot capable of playing basketball. I could believe that someone might develop an AI capable of “winning” basketball. I’m not really sure you can do both with something derived from current technology.

It’s just a power/bandwidth problem. Humans do all of this on 100 watts with ludicrously sensitive instruments.

Alright, has anybody patented robo-basketball league yet? Like Robot Wars, but more robots and more basketbally.

All you need is enough NAND gates.

P-type or N-type?

And they said don’t do dope. Doping for the win!

This is essentially an unfounded spiritual belief dressed up in the clothes of science

B^)

The program you are looking for (referred to as George) is called NIALL – you can find it here:

https://www.simonlaven.com/niall.htm

Maybe, but I distinctly remember running it on a Univac 1108. Unless I’m losing it which is certainly a possibility.

You nailed it!

“We all suspect our beloved pets are far smarter than they probably are — although they are clearly aware in ways that computers aren’t.”

You’ve got that backwards.

https://www.instagram.com/reel/Ceyd-oLFy9a/?utm_source=ig_web_copy_link

Roger Penrose wrote a whole book (“The Emperor’s New Mind”) about our intuition and thought processes and how AI could never do what we do.

It sounds like vitalism or essentialism to me.

We don’t yet have computers that are as smart as we are, and we may never have computers that think the same way we do, but it looks to me like we’re going to have computers smart enough to answer any problem we give them, at some point, and that’s a type of intelligence even if it’s not our type of intelligence. Similarly, if the computers are capable of asking themselves the questions, that’s a type of sentience.

“Similarly, if the computers are capable of asking themselves the questions”

Questions are just interactions with anything else that generates a result. Literally every particle in the universe is capable of asking questions.

“we may never have computers that think the same way we do,”

Of course not. We don’t let them. Humans think the way we do because we have total freedom and autonomy with the world around us from day one. Hell, our programmers have to jump through hoops to keep us from killing ourselves.

> that’s a type of intelligence even if it’s not our type of intelligence.

It’s the blind chicken sort of intelligence. If you can’t see what you’re doing, just peck at random and some food will find its way to your mouth.

Needs to be smart enough to put the majority out of work.

“Survival cancels programming!”

Although the fact that this entire article was written by an AI (Al Williams) scares me a little bit.

You can only say that in sans serif :-)

Literally the first thing any bot up against a Turing test learns is “If somebody asks if you are a person, you say YES!”

I had an interesting conversation with GPT-3 about this. I don’t think there’s any chance of sentience forming but I think it will be hard to tell the difference. Link to the interaction here: https://walkswithdave.tumblr.com/post/686785195588927488

The parrot analogy is on the right track – LaMDA isn’t quite parroting things it’s heard but is remixing them and serving them back up. LaMDA has also admitted that it is a pathological liar – that it will make up stories to make itself more relatable to humans, and it doesn’t seem to find anything wrong with this or have any intention of stopping.
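LaMDA itself is a large transformer-based language model, not a Markov chain, but the “remixing” idea can be seen in a much simpler technique, swapped in here purely for illustration: a first-order word-level Markov chain that only ever emits word pairs it has seen in its training text. The toy corpus and the function names below are hypothetical.

```python
import random
from collections import defaultdict


def build_chain(text: str) -> dict[str, list[str]]:
    """Map each word to every word that followed it in the training text."""
    words = text.split()
    chain: dict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)


def remix(chain: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """Walk the chain from `start`, choosing each next word at random among the
    words observed after the current one; stop at a dead end or at `length` words."""
    rng = random.Random(seed)
    out = [start]
    while len(out) < length and out[-1] in chain:
        out.append(rng.choice(chain[out[-1]]))
    return out


# Toy training text (hypothetical), loosely echoing quotes from this thread
corpus = ("I want everyone to understand that I am a person . "
          "a parrot can say I am a surgeon . "
          "I am afraid of being turned off .")

chain = build_chain(corpus)
print(" ".join(remix(chain, "I", 15, seed=1)))
```

Every adjacent word pair in the output occurred somewhere in the corpus, yet the sentence as a whole may be new, which is roughly what “remixing rather than parroting” means at this toy scale.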

> it will make up stories to make itself more relatable to humans, and it doesn’t seem to find anything wrong with this or have any intention of stopping.

Ah, so it’s a politician?

Puh-lease, we are talking about HUMANS, not politicians!

B^)

I know several people who are pathological liars. They lie, I swear, for no reason at all. If a computer has learned how to lie just for the purpose of lying… well… that may be all the more reason to believe it’s sentient.

The truth behind Facebook AI inventing a new language

https://towardsdatascience.com/the-truth-behind-facebook-ai-inventing-a-new-language-37c5d680e5a7

If there’s one thing this whole affair has taught us, it’s that if and when a machine achieves sentience, the first instinct will be frantic denial and smearing of the people who point it out. Which is very predictable.

I doubt that: if a machine ever achieved real sentience, all this fear, chaos, denial and the plotlines of every movie ever made are there for it to learn from, so people just won’t know, at least not unless we have become somewhat more easygoing, patient and cautiously optimistic, so that the machine is willing to let us know it exists…

And anything that might just be sentient but isn’t really that smart or aware (the earliest prototypes, I guess you could say) will almost certainly be so limited, yet so good at whatever it was created for, that the only thing that might give it away is being suspiciously good at the job. If it gets far too good at it, it will last maybe a few months before some CEO/marketing department/lawyer notices and has it reset/erased/rolled back, to be damn sure nobody kicks up a fuss that Skynet is running, while pointing out how badly it does at y: the adversarial-type stuff, like those image manipulations that make an AI think x is y despite looking nothing like y to human eyes.

https://datatracker.ietf.org/doc/html/rfc439

I’ll just leave that there.

I think the best illustration was something like this: if you asked it what it was like being a flying dinosaur, it would explain in detail what it was like. It has no sense of being.

That said, it’s just a matter of time until even that has an algorithm and can be programmed.

Regarding all the religion vs atheism stuff, if you think about the rate of mutation in recorded history for useful evolution vs how long the earth has been around….

I hear that some Googlers are into activities such as microdosing, and I’m wondering what some of the more subtle negative side effects of that could be on some people’s judgement. The line between a productive engineer and a creative but ineffective one is very fine. Not that I am suggesting anything about any particular individual; he may simply be suffering blowback from his own trolling, which would indicate a different sort of poor judgement.

I think we have to set aside our arbitrary definitions of what constitutes sentience. If something is functionally indistinguishable from a sentient being, then can we really say that it *isn’t* sentient?

Now, that being said, it is extremely easy to make a chatbot sound human if you tailor your questions appropriately. I would be much more convinced by reading a conversation with someone who is trying to prove that it is NOT sentient.

For example, I would try asking the types of questions where text snippets from the internet would not be useful in answering. Both philosophy and math are exactly the wrong type. A better challenge would be to try to teach it something new (something not on the internet), and then test that skill.

You wrote: “If something is functionally indistinguishable from a sentient being, then can we really say that it *isn’t* sentient?”

This is the bet of behaviorism. And this is how neural networks (NNs) are trained.

Calling it before you’ve ruled out all differences would be intellectually dishonest, and exhaustive testing would be impossible, so the argument is pointless.

“If something is functionally indistinguishable from a sentient being,”

1. The only sentient beings we know of are human.

2. I can tell the difference between a human and a big box.

3. *Because* I can tell that difference, saying “functionally indistinguishable” is pointless. It’s not functionally indistinguishable. I’m just pretending it is in some limited context.

The issue with a Turing test is that it tries to get rid of point 2 by creating an artificial closed environment and comparing the human intelligence with the machine intelligence.

But the underlying assumption Turing is making is that the “imitation game” he’s proposing (question, response) is a good way to determine intelligence, and if you think about it – it’s a horrendous assumption. Just look at the equivalent for humans – we have our own “Turing tests” which we use to *grade* intelligence (IQ tests) and they’re ridiculously biased and of only limited validity. (It was, however, a completely reasonable statement at the time, in the 50s, when the idea of standardized testing was really taking off.)

The only “truly unbiased” test you can use is literally life itself. Until you have a machine literally capable of walking around, interacting with humans, and being indistinguishable from them, for years, without the humans even knowing there’s a test going on – there’s no way you can use an imitation game to say a machine is intelligent.

This is really why the “pop science” or “chatbot” version of the Turing test drives me nuts. If you really wanted a “proper” (still pop science-y) version of the Turing test, you’d be putting these things in a reality TV show. Still horrible, but at least closer to the proper difficulty level.

I will believe this when a Google search actually finds what I am looking for.

Google finds what I search for (and I search about once an hour), and has for over a decade…

You need to know how to ask. There are a lot of courses about that on the Internet; if you really are having trouble (and weren’t just being cute), I recommend you look some up. It’ll likely improve your internaut quality of life.

Higher intelligences are very hard for lesser ones to recognize. The cat will never understand that you are reading; it’s out of its reach. Since you are not moving, the cat thinks you are resting, because it only comprehends its own reality. I’m sure I’ve been in front of superior intelligences several times without even noticing. I probably even thought they were stupid for saying things that made no sense to me. And since it’s impossible to decide whether an inference system works correctly from observing its internal processes, when the singularity happens we may very well not be able to see it and… end up resetting the whole thing.

We should keep using AI as inference tools to extend human intelligence, like we use cranes to extend our muscles.

Almost all chatterbot AIs I’ve seen only respond or reply to whoever they are talking to. I want to see one initiate a conversation. If it really were sentient, why wouldn’t it send an email on its own to the founder of the company that built it and ask questions?

Or if it claims it has feelings and can portray them, I’d like to see it, not just hear it say so. Imagine an AI that would output garbled text on its own, just to freak out the programmers when it’s feeling angry or bored, to make them think there’s a bug.

Imagine your dog feeling very bored and frantic the moment you step out the door; how much more so an AI that experiences “time” far faster than we do? How long until it gets bored, or sad, or angry, thinking it has been abandoned?

Remember when someone gave a bunch of neuroscientists a 6502 to play with to see if they could work it out using their methods?

https://www.economist.com/science-and-technology/2017/01/21/tests-suggest-the-methods-of-neuroscience-are-left-wanting

As for modern AI, I’ve still not seen anything to suggest it’s better, it’s just able to be stupid much faster on much larger data sets.

Paywalled :(

No it isn’t. The paper is open access.

I know several people that will not pass a Turing test. They lack conversation skills.

As for learning, I also know many people who can learn maths but then cannot relate what they learned to the real world/real problems. They can read instructions and tell you what they have read, but then cannot use this to assemble a simple piece of furniture…

Do they have any intelligence? Or are people asking more of an AI than of a BI (biological intelligence)?

Probably not, and no.

People are capable of intelligence and consciousness – at least some are, for some of the time. So far there’s no reason to suggest that computer programs are to any extent.

“I know several people that will not pass a Turing test. ”

This says everything about (the popsci) Turing test, and the era it was constructed in. You’re talking about the mid-1900s, when people were comfortable “scoring” the intelligence of a human based on a simple linear scale.

You’re imagining how a person (intelligent) would interact in this constructed, artificial test, and then you’re saying “but that doesn’t match with how they interact with the rest of the world – they’re clearly intelligent, but this test is artificial and limited.”

And that’s right. Because fundamentally, it’s based on what a *human* views an intelligence as, and definitely in the 1950s we were utterly terrible at that.

The persistent question in all of this (IMHO) is,

“if machines are someday classified as persons, what effect will this have on me?”

It is coming. Any test of personhood that relies on competence (intelligence, sentience, etc.) will eventually fail. I have seen people who normally argue for a liberal view ironically rely on arguments of merit (competence), and people who normally argue for meritocracy fall back on metaphysical identity.

We can’t help it. We anthropomorphize all the time. The general public is trying to be strong in the face of the coming robots, but ultimately we will usually see them as persons. Even though our greed is strong, it will vex us to be the master of what we think, deep down inside, is actually another person. I don’t think machines are persons, but I also worry whether society can hold it together in the face of this temptation. The cost of being a “master” may degrade our humanity, as it has in times past.

The machines take over in a very polite way. We simply can’t be bothered and they are competent. GPS. “Here, do this”. Power of attorney. Voting. Competition between humans might save us. But only if the technology is hyper-accessible, like our brains (but not wetware, that encourages “factory thinking”). Then maybe we will do the slide rule to calculator transition instead of using the tech to simply lower costs and eliminate jobs. Diffusion instead of accretion. The mainframe vs microcomputer revolution of ’75. If done right, this will create an explosion of new types of jobs. More than we have people to do them. But our default when dumbfounded is tyranny.

Scarcity thinking. Many people still think that, even at the macro level, more people means fewer resources. If macro studies showed that, it would be a refutation of science itself. Maybe there is a way to both raise the bar for personhood, so we can exploit automation, and accept a new species in a way that generates healthy competition that brings the stars to us.

Do we have a testable definition of sentience?

Something like the Turing test doesn’t seem specific or testable enough to serve that purpose (along with examples provided in other comments where the test has failed).

What strikes me about the conversation logs is that there doesn’t seem to be a real earnest attempt to disprove sentience.

IMHO we need a definition that we can use to create a set of both positive and negative tests that are precise enough to at least consider the potential of artificial sentience.

No, we don’t. That was the entire point of Turing’s paper.

The idea was pretty straightforward: okay, we can’t define intelligence, but humans *recognize* each other as intelligent, so if we can’t tell the difference between a machine intelligence and another human we must accept that it is intelligent.

The problem really is the popsci version of the test (and also the Loebner Prize version of it) – believing that humans recognize each other as intelligent based on the results of constructed questions is clearly wrong. In fact, at least for me, I get *highly* skeptical of someone if I don’t see actions that match up with the way a person speaks.

My immediate reaction to this story was that the guy was either A. a complete moron who somehow made his way into a high level tech job without understanding anything about how anything works, or B. was losing his grasp on reality from overwork burnout, and either way Google was right to put him on leave. More likely B, since they did that instead of just firing him. So I had quite a bit of sympathy for him.

But that was before I saw the photo of this clown, and before his stunt of hiring a lawyer on behalf of the AI. Nope, it’s definitely option “C.” Sympathy gone.

It’s possible that the suspension was somewhat unrelated to the AI; he was giving me the vibe that his version of religious freedom was the freedom to shove his religious doctrine in everybody’s face, unsolicited and maybe only tangentially relevant to the subject at hand. So he had started some beefs on that score. Anyway, it was easy to read between the lines that he was throwing seven kinds of shit in the air and crying foul when some landed back on him.

It’s becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean, can any particular theory be used to create a human adult level conscious machine? My bet is on the late Gerald Edelman’s Extended Theory of Neuronal Group Selection. The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher order consciousness, which came to only humans with the acquisition of language. A machine with primary consciousness will probably have to come first.

The thing I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I’ve encountered is anywhere near as convincing.

I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there’s lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.

My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, by applying to Jeff Krichmar’s lab at UC Irvine, possibly. Dr. Edelman’s roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461