Modularity is a fun topic for us. There’s something satisfying about seeing a complex system split into parts, and those parts made replaceable. After all, we often want to swap out parts of our devices, whether for repair or upgrades, and sometimes it’s just fun to scour eBay for laptop parts, equipping your ThinkPad with the combination that suits you best. Having always been fascinated by modularity, I believe hackers deserve to know what’s been happening on the CPU module front over the past decade.

We’ve gotten used to swapping components in desktop PCs, given their unparalleled modularity, and it’s big news when someone tries to split a traditionally monolithic device like a phone or a laptop into modules. Sometimes, the CPU itself is put into a module. From the grandiose idea of Project Ara, to Intel’s Compute Card, to Framework’s standardized laptop motherboards, companies have been trying to capitalize on what CPU module standardization can bring them.

There are some hobbyist-driven and hobbyist-friendly modular standards, too: the kind you can already use to tame a powerful, layout-demanding CPU and RAM combo and place it on your simple self-designed board. I’d like to tell you about a few notable modular CPU concepts: their ideas, complexities, constraints and stories. As you work on that one ambitious project of yours (you know the one), you will likely benefit a lot from such a standard. Or perhaps you’ll find it necessary to design the next standard for others to use; after all, we all know there can never be too many standards!

How Is Modularity Still Alive?

We like repairability and upgradeability. Sadly, many companies making consumer gadgets don’t appreciate these as much as we do: once-omnipresent aspects of modularity, like swappable CPUs or even RAM in laptops, are becoming less prevalent with time. Simply put, having us buy new devices is more profitable than letting us upgrade and repair our old ones. Still, there are reasons why modularity lives on; in some important ways, modular products are simpler to design. For instance, you don’t have to lay out your own board with high-speed CPU and RAM interconnects, and can instead focus on the part that connects your IO of choice, which greatly simplifies the design.

The PC/104 standard is an apt demonstration that modular computing can be commercially viable: it has been a staple of industrial computing, in large part because it’s easy to get a replacement motherboard if your old one fails. Industrial customers are willing to pay a premium for some degree of modularity, since it means they can get equipment fixed quickly instead of losing large amounts of money while their production line sits idle. And when your equipment needs an upgrade because system requirements keep rising, as they always do, there’s hardly ever a shortage of PC/104 boards with more processing power.

Customers don’t have enough sway to make modularity ever-present in consumer products. It makes sense from where we stand nowadays, but it’s sad, and it doesn’t have to be this way. It also doesn’t help when companies like Google and Intel start attractive modular projects with their own purposes in mind, then botch a myriad of important aspects and ultimately shelve everything. On one hand, bungling products this way is a known M.O. of large companies; on the other hand, it’s frustrating to get our hopes up, only to watch them end in a low-effort flop. If you’re not aware of how bad it can get, here’s an example.

The Corporate Way To Get It Wrong

Intel is a giant company manufacturing CPUs, chipsets and all sorts of things that a hobbyist can only dream of one day tinkering with. They have both an inordinate amount of resources and a customer base for modular solutions, and every few years they try to get a new modular, somewhat hobbyist-accessible embedded idea off the ground. So far, these ideas have failed, in large part due to Intel’s own fickle decisions, as many of us somberly familiar with Intel’s Edison and Galileo product lines can attest.

For instance, in 2017, Intel unveiled the Compute Card concept: a card with CPU, RAM and storage that you could put in your pocket and plug into anything. Two years later, they shelved the concept. In particular, a politely scathing blog post from NexDock, a company that was developing a Compute Card dock at the time, sheds some light on how rude Intel’s behaviour was. It’s borderline amusing to read about “overly complicated encryption and authentication requirements of Intel Compute Cards”, and not at all surprising to read about insufficient support from Intel.

Even though Intel touted NexDock’s efforts as an achievement, that wasn’t enough to warrant proper collaboration and transparency, and NexDock ended up spending tons of money and time on something Intel never took seriously. Intel’s latest effort at CPU+RAM modules is called Intel Compute Element. This one is expressly not for you: it’s for building custom NUCs and other systems with requirements-tailored hardware, as reflected by the modules’ price tag. It looks nice in a “what if” way, but by now, we’ve learned not to expect much.

With Enough Resources, We Can Get There Ourselves

Intel presented their Compute Card concept in 2017. In 2016, a surprisingly similar but open-source and hobbyist-friendly project was taking shape. The EOMA68 project invited us to contribute to a future where CPU cards existed: the CPU, RAM and storage on a small, low-power card that could plug into a laptop-shaped housing, a game console or a small NUC-like desktop box, or even be used standalone with an HDMI monitor and a powered USB hub. If you’re going somewhere, you can physically take your system out of your desktop enclosure, plug it into a laptop housing, and plug it back in when you return. It shared a lot of the same goals and the same form factor as Intel’s Compute Card, but none of the corporate backing, which made it a daring goal to set, even with an A20 CPU instead of an x86 system.

Designed by an ambitious engineer set on getting things done, the EOMA68 project aimed never to compromise on compatibility while keeping the cards accessible for small-scale design and production, ensuring that after the first compute cards became outdated, building backwards-compatible ones would remain realistic. In a witty move, PCMCIA connectors and housings were used for IO connections, being cheap and still widely available. Care was taken to design a pinout that could stay compatible with a few different CPU generations going forward, aiming for upgradability without losing features, and the crowdfunding drive reached its goal seemingly effortlessly, showing that people believe in what this project stands for.

Getting a batch of EOMA68 cards built proved to be a struggle, however. Manufacturing was an uphill battle, with connectors going out of stock one after another and replacements causing low-yield issues. Time is a cruel mistress and has only stacked extra problems on top of every delay; the project’s last update was reassuring, but has not yet borne fruit. Still, it’s a journey that someone among us had to embark on: even unreleased, this small project achieved things that Intel couldn’t. On top of that, the author has kept a rich research database, and the development process has been discussed openly on a mailing list, both invaluable resources for anyone looking into modular computing.

CM4 Form-Factor No Longer Just Theirs

You’re no doubt familiar with Raspberry Pi Compute Modules, but perhaps not with all the pin-compatible alternatives. When the Pi 4 came out, one of the questions was how the next Compute Module would look, given the newly added PCIe interface. Most expected a new generation of the SODIMM-mountable module, and what we got was far from that. Once the laments about fine-pitch connectors without alignment pins died down, the promise of PCIe proved too much to pass up, and hackers have come out with a wide array of carrier boards and Compute Module-based hacks. There are almost too many to cover, but we sure try!

Of course, there’s nothing exclusive about the pinout + connector + footprint combination of such a SoM (System-On-Module), and the aforementioned myriad of carrier boards is tempting for any designer wise enough to avoid creating a whole new ecosystem. As a result, there are multiple CM4-compatible boards built around different SoCs: Pine64 SOQuartz, Banana Pi BPI-CM4 and Radxa CM3, to name a few. Each has some benefits over the CM4 (my personal favourites are the eDP-compatible DisplayPort on the SOQuartz and the SATA ports on the CM3), but there’s more to it. Hobbyists are also jumping on the CM4-compatible train with projects like the ULX4M FPGA boards, and there’s even a RISC-V CM4-compatible module from Antmicro in the works.
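To make the “pinout + connector + footprint” idea concrete, here is a minimal Python sketch, assuming a deliberately simplified model: a module is described only by its footprint family and the set of interfaces it routes to its connectors, and a carrier board by the footprint it accepts and the interfaces it actually uses. The classes, the `fits` and `unused_extras` helpers, the example carriers and the interface sets are all hypothetical illustrations loosely keyed to the features mentioned above, not datasheet values or any vendor’s tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    """A compute module, described only by what it exposes on its connectors (hypothetical model)."""
    name: str
    footprint: str          # connector + pinout family the module claims to follow
    interfaces: frozenset   # interfaces routed to those connectors


@dataclass(frozen=True)
class Carrier:
    """A carrier board: the footprint it accepts and the interfaces it actually uses."""
    name: str
    footprint: str
    required: frozenset


def fits(module: Module, carrier: Carrier) -> bool:
    """True if the footprints match and the module provides every interface the carrier uses."""
    return module.footprint == carrier.footprint and carrier.required <= module.interfaces


def unused_extras(module: Module, carrier: Carrier) -> frozenset:
    """Interfaces the module offers that this carrier leaves unconnected."""
    return module.interfaces - carrier.required


# Hypothetical, simplified interface sets (not datasheet values).
CM4 = Module("Raspberry Pi CM4", "CM4", frozenset({"PCIe", "USB", "HDMI"}))
SOQUARTZ = Module("Pine64 SOQuartz", "CM4", frozenset({"PCIe", "USB", "HDMI", "eDP"}))
RADXA_CM3 = Module("Radxa CM3", "CM4", frozenset({"PCIe", "USB", "HDMI", "SATA"}))

# Hypothetical carrier boards for illustration.
generic_io = Carrier("generic IO board", "CM4", frozenset({"PCIe", "USB", "HDMI"}))
sata_nas = Carrier("SATA NAS board", "CM4", frozenset({"USB", "SATA"}))

if __name__ == "__main__":
    for carrier in (generic_io, sata_nas):
        for module in (CM4, SOQUARTZ, RADXA_CM3):
            print(f"{module.name} on {carrier.name}: "
                  f"fits={fits(module, carrier)}, "
                  f"unused={sorted(unused_extras(module, carrier))}")
```

In this model, any module that matches the footprint and covers the carrier’s needs is a drop-in replacement; an extra like the SOQuartz’s eDP simply goes unused unless a carrier routes it, and a carrier that depends on such an extra narrows the set of modules that fit.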

These modules are not exactly a computer card that you could swap between your tablet and your desktop PC on a daily basis, but they do provide unexpected and pleasant upgradability if you happen to have a device built around a CM4 carrier board. In times of CM4 module shortages, this is a godsend, too. What’s more, players like Turing Pi and MNT Reform have created adapters for their own ecosystems.

Reform The Laptop, CPU Module Ecosystem Comes Free

If you’ve followed projects like Novena, you’ll know that NXP’s i.MX series processors are among the most openness-friendly ARM CPUs available. Six years after the Novena, the MNT Reform laptop wisely picked an i.MX8 CPU. However, the team didn’t want to develop a complex multi-layer baseboard, and went for a DDR-form-factor i.MX8M-hosting SoM from Boundary Devices, making the Reform’s mainboard that much cheaper and simpler to design. What makes the Boundary Devices SoM unique is that it’s the most open i.MX8M module out there, a fitting choice for a laptop that strives to be as open as possible.

To reiterate, there’s nothing exclusive about a pinout + connector + footprint combination, and a DDR-form-factor module is just a PCB with a set of gold-plated pads along its edge. You can see where this is going, right? MNT Reform’s team developed some custom SoMs and adapters compatible with the i.MX8M module’s surface-level connectivity. To date, there’s an adapter for the Pi CM4 (and, consequently, for the three CM4-compatible boards mentioned above), an NXP LS1028A board with half as many CPU cores but twice as much RAM, and even a Xilinx Kintex-7 FPGA-hosting board, with talk of a soft-core RISC-V CPU à la Precursor.

What’s more, MNT has recently announced the Pocket Reform, a 7″ laptop-shaped companion device. Not to miss such a wonderful opportunity, the MNT Pocket Reform uses the same module form factor as its bigger sibling. With that, the MNT lineup has become an ecosystem with swappable CPU cards. We didn’t quite expect this to happen, but it’s the kind of pleasant surprise we don’t get every day. If you’re working on a yet-unrivaled cyberdeck, you too should consider putting a DDR socket on a PCB and benefiting from everything the MNT ecosystem has to offer.

More To Come, Already Plenty

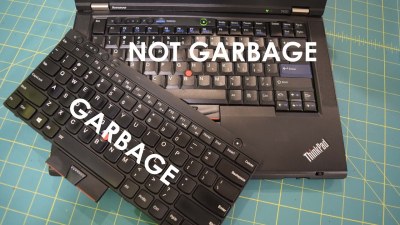

With each project daring to create a standard, or better yet, adhere to an existing one, modular computing becomes more and more of a reality in hacker projects. The CM4 and MNT CPU module standards in particular are both accessible and hacker-friendly. If you wanted to develop a custom, highly featureful wearable, or a motherboard swap for that old ThinkPad you grew up with, there’s no better time to start than now. Just as I2C devices have slowly been coalescing around JST-SH connectors, perhaps soon we’ll be building powerful and sleek computers into whatever we want, on a whim, and our parts cabinets will get a new drawer labeled “CPU modules”.

Headline image: “Back-side of an Intel Celeron LGA775 CPU” by Uwe Hermann

Chiplets as a form of modularity.

Chiplets are most often modular to a lesser extent than soldered-in DRAM.

Mainly due to the higher pin density, and the fact that replacement chips are often hard to acquire (unless salvaged from another unit).

But yes, from a manufacturing standpoint, a multi chip design does give the chip vendor the ability to somewhat increase yields and also make more product variants.

But this is largely irrelevant as far as end system modularity is concerned.

Maybe irrelevant now, but not so in future.

Multi chip packaging (chiplets if one asks AMD to name it) isn’t giving the end user any extra modularity. This won’t change in the future.

Whether the multi-chip package is modular further down the chain is, however, a separate topic from multi-chip packaging itself.

It is important to understand the very stark difference between “modularity in manufacturing” and “modularity in the end product.” Modularity in manufacturing doesn’t inherently make the product more modular to the end user.

Simple. Create a CPU standard that’s followed just like WiFi and USB are. They’re prevalent, so surely a common pinout is possible.

Then, with modern lower-TDP ARM processors like the ones Apple is making with the M1, it would be possible to have a CPU in almost an SD-card-type format.

So much is possible with technology, but companies just don’t do it, all in the name of lock-in and profits. If Apple could get away with having their own special WiFi that only worked with their devices, they would. Heck, I’m sure they tried.

If you know how to get a company to do something that is counter to “profits” we’d all love to know. Closest I know is regulation, and the promise of even greater profits (standardization).

The last time that happened was with CPUs prior to Slot 1 (Intel) and Slot A (AMD). For the slot generation, the two companies both used exactly the same connector, but in opposite orientation. One *could* jack a Slot 1 CPU into a Slot A board and vice versa, but after applying power, it’s likely the whole thing would have to go into the trash.

The last pre-slot was Socket 7, culminating in Super 7. Intel, AMD, Cyrix, WinChip and IIRC others made CPUs for that socket generation. There had also been more than two CPU manufacturers for previous x86 sockets.

The main goal of Intel and AMD switching to a slot really wasn’t about speed, signal integrity etc.; it was about erasing all the other companies from the PC CPU business. Shortly after all the other companies had quit making PC CPUs, Intel and AMD went back to sockets, since socketed CPUs were far less bulky and less costly to manufacture than slot cartridges.

I wouldn’t use WiFi and USB as examples. If you have done any interoperability testing, you know that it is a nightmare.

And no, it is not something from the past. My new Dell laptop only tolerates low-speed USB devices on the laptop’s own port, not on its docking station or a USB 3 hub.

Hey, uh… the picture with the CM3, SOQuartz, and CM4 is one of mine, I believe; I’m okay with the use but a little credit may be nice, at least.

Love your videos! No-one does a better job of convincing me to never compile my own kernel.

The moral of the story is: do not depend on Intel.

The moral of the story is to stop pooh-poohing other companies. Get your own vision off the ground and win the hearts and minds of the customers. It’s easy to criticize others, much harder to make something better and viable yourself.

I’ve tried to get my own vision off the ground using Intel components and relying on Intel documentation and support. When a company won’t give me timely access to necessary documentation, or EOLs a fairly recently introduced SoC that I spent months helping to design into a product just before going into production, I’d say I have every right to be critical.

You should absolutely criticize others! “Making things” and “criticizing others’ things” aren’t mutually exclusive; I do both!

:) Good one

Thanks Jeff! And sorry. I’ll get that straightened out.

Hey! My bad! I originally wrote a sentence referring to your blog post piece I took this image from, then later, I edited the sentence out for brevity, but forgot to add a reference to the blog post under the picture. Will be better about that in the future!

Thanks :)

I always assume the best intent, just wanted to make sure the picture had the attribution.

That Lenovo really hits home: my X230 has the X220 keyboard and a ThinkMods NVMe-to-ExpressCard adapter. I’ve also got the internal Bluetooth-to-USB card and 2133 MHz DDR3 (I don’t know what deal Lenovo worked out with Intel, but it runs at 2133, as proven by iGPU performance and benchmarks).

Ironically this isn’t that new, given the existence of MMC-1 and MMC-2 CPU modules back in the late 90s. Granted it was an Intel thing, so maybe doomed to die out.

https://en.wikipedia.org/wiki/MMC-1

https://en.wikipedia.org/wiki/MMC-2

Those instantly came to mind for me also. I can’t see this scheme lasting more than a couple of CPU generations either, so I’m gonna figure it’s equally pointless. Even with machines with low-profile sockets, going from the bottom of the range to maybe a next-generation top-of-the-range part (Sandy to Ivy, for example) is rarely worth it until the CPUs are so out of date they’re at couch-change pricing. Even then, it’s maybe a 50% speed bump on mundane tasks. Hardware-review hyperbole notwithstanding (when they say a 1% benchmark difference means “Totally destroyed competitor”), 50% doesn’t really feel like much. YMMV if you need heavy math that is independent of bus and RAM speed and efficiently threaded for as many cores as you can throw at it. But if that’s the case and it’s mission-critical, you bought a new machine last year.

I had a 300 MHz Pentium II Toshiba Tecra 800 laptop that used an MMC CPU. There were one or two faster CPUs optional for that model, but circa 1999-2001-ish all MMC CPUs were completely unobtainable. What really helped massively speed it up was upgrading the 4200 RPM 20 gig hard drive to a 120 gig 5400 RPM drive. Boot time with Windows 98 (and maximum RAM for the T800) dropped from over 5 minutes to a bit less than 2 minutes.

I had a similar increase on some ex-lease P4s when WD Black 1TB 10000 RPM drives were cheap (a big step up from the refurbished 5400 RPM drives they came with).

Surprised Raspberry Pi didn’t go for the Qseven compute module spec: there’s a nice mix of PC/104 and other mini baseboards to choose from, and it uses an MXM connector.

Intel has failed quite badly even in the traditional desktop PC modularity. No compatibility between motherboards and CPUs of different generations.

In laptops, Intel used exactly the same socket for four incompatible generations of CPU, with two variants of each generation, M and P. All of the CPUs will *physically* interchange but at best an incorrect CPU just won’t boot. At worst, something fries.

Of course in each generation, the two fastest (M and P variant) CPUs command an excessive price bump, or at least sellers expect one.

I upgraded several laptops from that era with CPUs one step back from the fastest. $10-15 for a CPU VS $50-100 or more. Fargin idjits were going to sit on those for a long time, expecting to get more for a CPU than any of the laptops it could work in were worth.

Yup, that’s the way to do it. Top speed grade is never cost efficient. Though once in a while you’ll get local deals off classified sites, if they see lower speed grades selling for $15 and only tag on another $5.

What I’d like to see is a breakout board for a Pi Compute Module or something similar that fits in the case of a Netgear GS108 gigabit ethernet switch. There’s a power input and Kensington lock hole on one side and on the other side are 8 Ethernet ports and a rectangular power LED. On the bottom are 4 rubber feet and a pair of 3 direction keyhole slots for mounting.

Thus placement of the power jack and LED are a given, leaving a big slot open for I/O, conveniently sized for Ethernet ports, pairs of USB ports, a video connector etc.

The top of the case comes off with two screws, and the PCB mounts with four screws. The case size is 1-1/8″ × 4″ × 6-1/4″. The space for I/O is 5″ long by the height of one PCB-mounted Ethernet jack. There are vent slots on the ends.

Don’t repaint it and it would be a stealthy computer, practically theft proof because who is going to steal a little, old, gigabit switch?

I happen to have one that suddenly refused* to allow any data through it and they’re inexpensive on eBay, cheaper than a generic steel project box of that size.

*Of course it died precisely at the moment I had to print an important document that was needed *right now*. Printing, it’s *always* printing causing problems.

The Printer That Worked The First Time… and other fairy tales

hey it sounds like you’re in a perfect position to start designing it! ;-P

I think the ship has sailed on EOMA-68 given that the main developer is off doing other things, the other sponsor of the project on Crowd Supply doesn’t respond to mails about it, and Crowd Supply don’t seem to be able to nudge it forward. I’d like to think something will happen and at least something will get sent out to backers but I’m not holding my breath.

Every time there has been any standardization in the PC industry, it has always led to limitations that make the next CPU incompatible. Even standardized buses like ISA, EISA, and PCI become limiting factors. Each new generation of CPU requires a new motherboard specification to cover the cooling, interface, and power needs of the new processor. If you want plug-and-play compatibility, you are just asking for limitations in CPU design to maintain backward compatibility. Given how few people actually upgrade their own PCs, it probably does not make much business sense for a CPU manufacturer to limit themselves that way. And if you consider that the CPU and memory make up the lion’s share of the cost of a new PC, it does not make financial sense to make an upgradable system where you throw out and replace the most expensive components.

The people most likely to upgrade their PCs are the same power users that want the best, fastest components and want to wring every last bit of performance out of their systems. They will not like the limitations imposed by modularity. The vast majority of users only want a system that surfs the web and runs their business app well enough. Power users won’t want the performance hamstrung and the second group doesn’t care to run the fastest hardware.

For new stuff, yeah-ish (AM4 is pretty old now), but for older stuff, I don’t know if you have noticed the volume of 8088, 8086 and 80286 boards being produced at the moment, and what’s noticeable is the CPU on a _card_ instead of a _socket_. When people and designers start realising that they can do the same thing with PCI and combo PCI/ISA boards, hardware like the CM4 will have more uses in the future (and not just for retro stuff).

Another interesting modular system is Sparkfun’s MicroMod (https://www.sparkfun.com/micromod) range of processor cards and carrier boards, based around the M.2 standard. It’s aimed at microcontrollers rather than the CPUs you would put in a desktop or laptop but some of those are comparable to CPUs in some retro systems.

Pity that some of the most interesting compute modules (e.g., Xilinx Kria) use excessively expensive / complicated connectors…

I was thinking, just today, how it is such a shame that, when I next upgrade my otherwise-perfectly-good Late-2013 15″ MacBook Pro merely to get a decent, modern processor that doesn’t throw the internal fan into overdrive when I run Google Docs, I have to “put out to pasture” a perfectly good, well… everything else that makes up this once-above-average laptop. Modular CPU+DRAM cards would be most welcome here, but of course Apple would never go for it, since they are all about the total experience. Or would they?