A footnote in the week’s technology news came from Linus Torvalds, as he floated the idea of abandoning support for the Intel 80486 architecture in a Linux kernel mailing list post. That an old and little-used architecture might be abandoned should come as no surprise; it’s a decade since the same fate was meted out to Linux’s first platform, the 80386. The 486 line may be long-dead on the desktop, but since these chips are not entirely gone from the embedded space and remain a favourite among the retrocomputer crowd, it’s worth taking a minute to examine what consequences, if any, there might be from this move.

Is A 486 Even Still A Thing?

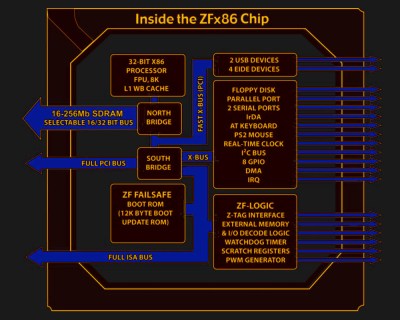

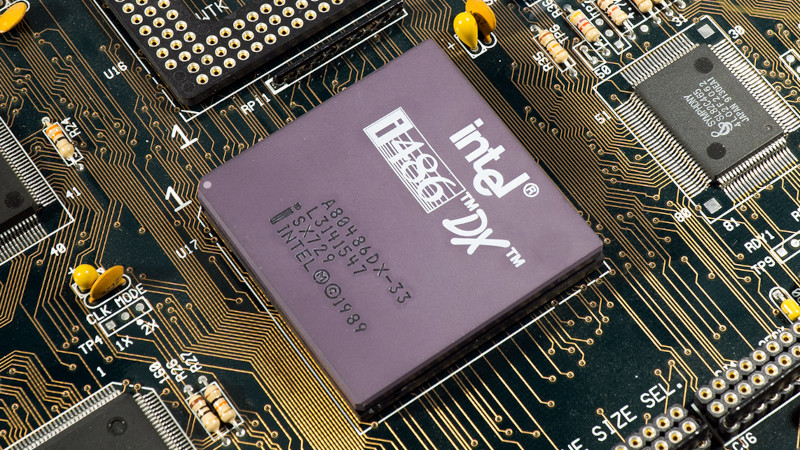

The Intel 80486 was released in 1989, and was substantially an improved version of their previous 80386 line of 32-bit microprocessors with an on-chip cache, more efficient pipelining, and a built-in mathematical co-processor. It had a 32-bit address space, though in practice the RAM and motherboard constraints of the 1990s meant that a typical 486 system would have RAM in megabyte quantities. There was a range of versions in clock speeds from 16 MHz to 100 MHz over its lifetime, and a low-end “SX” range with the co-processor disabled. It would have been the object of desire as a processor on which to run Windows 3.1 and it remained a competent platform for Windows 95, but by the end of the ’90s its days on the desktop were over. Intel continued the line as an embedded processor range into the 2000s, finally pulling the plug in 2007. The 486 story was by no means over though, as a range of competitors had produced their own take on the 486 throughout its active lifetime. The non-Intel 486 chips have outlived the originals, and even today in 2022 there is more than one company making 486-compatible devices. RDC produce a range of RISC SoCs that run 486 code, and according to the ZF Micro Solutions website they still boast of an SoC that is a descendant of the Cyrix 486 range. There is some confusion online as to whether DM&P’s Vortex86 line are also 486 derivatives, however we understand them to be descendants of Rise Technology’s Pentium clone.

Just Where Can A 486 Cling On?

It’s likely that few engineers would choose these parts for a new x86 embedded design in 2022, not least because there is a far greater selection of devices on the market with Pentium-class or newer cores. We’ve encountered them on modules in industrial applications, and we’re guessing that they’re still in production because somewhere there are long-lasting machine product lines still using them. We’re quite enamoured with the RDC part as a complete 486 PC on a chip with only 1 W power consumption as our ’90s selves would have drooled at the idea of a handheld 486 PC with long battery life, but here in 2022 should we need a quick fix of portable 486 gaming there are plenty of ARM boards that will run a software emulator quickly enough to show no difference. So will the relatively small community of 486 users miss the support for their platform in forthcoming Linux kernels? To answer that question it’s worth thinking about the kind of software these machines are likely to run.

If there’s one thing that operators of industrial machines value, it’s consistency. For them the machine is an appliance rather than a computer, it must do its job continuously and faultlessly. To do that it needs a rock-solid and stable software base rather than one which changes by the minute, so is an outdated industrial controller going to need access to the latest and greatest Linux distro? We think probably not, indeed we suspect it’s probably a lot more likely that an outdated x86-based industrial controller would be using a DOS flavour. Meanwhile the retrocomputing crowd are also more likely to be running a DOS or Windows version, so it’s difficult to imagine many of them squeezing the latest and greatest into a 486DX with 16 megabytes of memory.

Memory Lane Is Not So Rosy When You Remember How Little RAM You Had

The first ever Linux that I tried was a Slackware version on a 486DX-33 some time in 1994. At the time that would have been an extremely adventurous choice for an everyday operating system, and while it was great as an experiment, I kept using AmigaOS, DOS, and Windows for my everyday drivers. It would be around a decade later with a shiny new Core Duo laptop that I’d move to permanently dual booting and eventually switching away from Windows entirely rather than only using Linux on servers, and since then several generations of PC have continued the trend. If you used a 486 for real back in the day then it’s tempting to think of it as still somehow a contender, but thinking how many successive platforms have passed my desk in the intervening years drives home just how old this venerable platform really is. We’ve taken a look at the state of the 486 world in the paragraphs above, and we’re guessing that the support for 486 processors will be missed by very few people indeed. How about you, do you run a 486 for anything? Let us know in the comments.

Header image: Oligopolism, CC0.

The only reason to support 486 is because of old timer engineers afraid to move with the times. Stability is just an excuse, there are many things out there that are just as stable.

Indeed, there are plenty of stable devices out there that are newer and faster and cheaper, until you consider the cost of recertification on things like….say… a radar.

And that recertification is going to happen with the original kernel, so dropping support in new kernels is not an issue.

you wouldn’t just update the linux on one of those either ….

The specific reason that the Vortex86 chip is so hard to categorize is that it lacks an i586-set instruction called ‘cmov’. Technically, ‘cmov’ support is optional in i586 CPUs — that’s what the spec says, to the extent there IS a proper spec — however, in real life, as it works out, support is expected because there’s exactly one chip, or series thereof, in all existence that’s an i586 CPU (or based on it) but that lacks ‘cmov’ support — the Vortex86 line.

Since support for ‘cmov’ is what, in practical terms, differentiates i486 and i586 CPUs, and code targeted for them… for all intents and purposes, a Vortex86 chip of any kind is effectively an unusually fast i486 target.

As one of two asides — I’ve not heard of that RISC thing mentioned in the article (although it reminds me a bit of the Transmeta Crusoe) or of the ZF Micro chips. I’d be interested in a [HaD] article covering them… maybe along with other such early x86 SoC attempts, such as the DM&P (and ALI rebrand) M6117C, which, combined with a dedicated chipset, represented essentially a nearly complete 386 motherboard across two chips, running at 40MHz.

As the second aside… I’ve often felt that the 386SX-25 was my personal ‘sweet spot’ for a DOS nostalgia box. I’m… pretty sure I’d not want to run Linux on that, though, for the most part. I think I’d want something a little beefier for that, maybe a low-end Super Socket 7 board. But, then, I’m really not familiar with Linux in that era.

Is there any way to make a Vortex86 throw an illegal instruction exception and emulate it in Ring 0 software? Old VAXes did that (few had actual hardware for the 128-bit, 33 decimal digit “H Floating” type) and IBM Mainframes still do even in the new ones.

H Floating was slow on a MicroVAX, but it cured/concealed a lot of round off errors. :-)

A 386 will run openBSD faster than an original MicroVAX, or at least it feels like it to me. A SparcStation 5 will mop the floor with it, though. :-)

That’s funny.

I don’t remember entering this as a reply to another comment.

In fact, I remember specifically that my phone thought I wanted to reply to a comment, I tapped [Cancel] without typing anything, and replied separately.

This has happened to my comments multiple times before. [HaD], I think you have a WordPress bug to report.

I thought CMOVcc was an i686+ instruction, introduced with the Pentium Pro?

@eriklscott, on x86, illegal instruction was introduced in 386? or 286? So it should…

Even earlier than that, on 80186.

Not just to re-certify – how about re-engineering the whole software stack? Chances are there’s only a binary blob left of the original software and the machine needs to keep running.

Keep the old software and don’t put it on a network. You can’t expect indefinite support for hardware introduced >30 years ago. Or if the hardware is that critical then it is worth it to maintain software as well.

Infrastructure normally requires 10-20 years of support from when a device is delivered to the customer. Which means if the device was produced 10 years after the hardware was introduced then the expected support will reach 30 years.

The customers wouldn’t expect rolling latest/greatest software updates because of customer acceptance, certifications etc. But they would expect constant access to security fixes. So basically LTS – longtime stable.

There are many things that are expected to run for 50-60 years with minimal interruption, such as power stations.

You can maintain the software source code indefinitely. But not the programmer. There’s no way to make backups of the programmer. The programmer is stored on a medium that ages and deteriorates, and nothing can stop that. All you can do, is to exercise the programmer as much as possible so that you can document the inputs and corresponding outputs. That way, you can build up a meta form of backup. But any algorithms inside the programmer that incorporate ‘entropy’, e.g. complex state machines, will be very, very hard to backup. Also, there is a fair amount of randomness inside the programmer, which will be impossible to backup, but is often crucial for the correct working of the program.

All in all, the lifetime of software mostly depends on the longevity of the programmers.

:)

And oftentimes it depends on hardware as well. Can’t run a tool chain for VAX/VMS on MacOS. Although I give you that some very smart programmers created emulators for old systems and saved the day there.

It’s pretty unlikely that they upgrade to new major kernel series then either.

Or an F16, or an aircraft carrier. or some ATMs, a train line in Europe, the bot which builds macs…

Yah but maybe people don’t want to move to the next oldest, i.e. most proven stable, platform every 3 years for the same reason they froze things on an old platform so they didn’t have to keep moving to the newest platform every three years.

That’s not always true. Many times there are regulatory hurdles to overcome (Medical Devices, Military, Aviation, etc). Re-certification can be very expensive. Of course that also means that they are probably running an old kernel and don’t want to update that for the same reasons so it makes this issue somewhat moot.

“Afraid”, probably.

“Don’t have the budget”, definitely.

Once a business has spent a few million dollars shepherding a medical device through all the approvals (FDA, FCC, UL, CE, often DoD, Canadian something-something, Euro blah-blah-blah ad infinitum) they’re almost always loath to do it again if they can possibly avoid it. Even if it means paying dearly for custom reproduction chips from Rochester Electronics. (Rochester buys old designs and their masks – they’ll make you a new production 8080 but if you have to ask how expensive…)

New designs also strongly imply new lawsuits.

The last 737 pilot’s great grandparents haven’t been born yet, and the planes are still in new production. The whole point of the Max’s screwed up throttle-by-wire was so the pilots wouldn’t have to be trained on a new aircraft, just a new version.

Legitimate question – do any parts interchange between a 737 Max and a ’37-100? -200? Besides jellybean stuff like screws?

Their lack of main landing gear doors? That’s an odd bit about the 737 series. Boeing figured out that the weight of main gear doors and associated equipment caused more of a fuel use penalty than leaving the wheels open to the wind, with only rubber flaps around the edges of the wheel wells to partially smooth the airflow over the retracted wheels.

As for the 737 MAX, what sort of nincompoopery designed the plane with dual angle of attack sensors then wrote the software to only use one of them VS monitoring both simultaneously plus integration with other sensors to sanity check things should one sensor’s reading be wildly off from what would be expected at any point of a flight?

They didn’t design it with two, they designed it with up to three and made both of the redundant sensors “optional.” Airline figures one must be good enough and saves some money.

Why not reform the regulatory agencies and standards groups? Why should progress be hindered by unnecessary expenses? And to what end?

Human safety is unnecessary expenses? The expensive certifications aren’t for releasing a new coffee machine or dish washer. But medical equipment, critical process control systems, power grid, fire alarms, airplane systems, …

Would you want to live in a world where a hobbyist is allowed to deliver hardware to control a nuclear power plant with no form of certification of delivered system?

What’s the old saying? Regulations are written in blood.

They exist because people died. They exist to prevent more people from dying.

The last thing we need is Dunning-Kruger dipshits like you “reforming” them.

It’s worth the cost if it saves even one life, right?

No, sorry, that’s not right. It’s sarcastic. The reality is that a life has a value in the cost benefit analysis. Even if that sounds cold and heartless. There are some things that the rest of us shouldn’t have to give up or be forced to suffer through because one person died once.

And a reader of a site like this should be especially sensitive to that. Since a large number of regulations are of the sort that intend to prevent people like us from doing things ourselves “for our own good.”

Some equipment has a 20-30 year lifespan, e.g. Aerospace. When upgrading software, minor tests are required. When upgrading or changing hardware in any way, e.g. changing or removing a resistor, a major test is required. That’ll be e.g. sending an Airbus A320 on an 18 hr flight with full flight crew and 7-9 engineers in the cabin, plus a full ground crew.. kinda expensive…

So, upgrading to a newer processor just because… isn’t the preferred option.

Entertainment systems on commercial flights sometimes run on NT 4.0

Valid points up and down this chain. Now how many are NOT running Linux where this whole article will not apply?

No, you just cannot buy maturity. Look at the S/360->System Z line, it’s mature. It’s stable. Banks use it because of this.

Designing around a well-known, matured CPU can be because it needs an error-less solution, and if you look at nowadays software, hardware, it’s a mess, with a lot of bugs and security holes. And in some fields, you cannot allow any errors.

In addition, a lot of 486’s do not require fans. Fans can fail easily…

Yes, fans in vehicles mean there must be a service program where technicians handle sand and dust every x months and then obligatory fan replacements etc. Without fans, there are normally not any openings in the case so nowhere for dust to get inside.

This is a bigger deal than people understand. It seems like that anybody who is not tied to the x86/win upgrade mill are the strange ones! And there’s those that probably want everyone to rewrite their code for ARM now too?? I’ll tell you this though – it’s why risc v will take over like wildfire!

I’ve been entertaining the idea of getting MMU-less Linux built for older systems, I think this SHOULD work, but I could be mistaken. There are a lot of other challenges for MMU-less Linux but, it would be nice to be able to have modern Linux in some form on the platform for me, as a hobbyist.

But haven’t many distributions moved on? I know Slackware proudly announced at some point that they’d continue to run on the 486, unlike other distributions, but I can’t remember if that changed since then.

The only time I used a 486 was in late 2000, for just long enough to realize a 240mb hard drive and 8 megs of RAM was lacking for Linux at that point. So I bought a used 586.

late 2000s? It was mid 90s for me, with 2x 40GB HDDs. I was lucky enough to use Pentium and 1.2GB HDD in late 90s :D

No, late 2000. In June 2001 I bought the used 586 and have used Linux on the desktop ever since.

You had 40GB HDDs in the late 90s?

I wondered that too. For a project I was on in 1999, we used 6GB drives, as this was about the largest you could get before the price-per-bit curve inflected up unacceptably.

“The only time I used a 486 was in late 2000, for just long enough to realize a 240mb hard drive and 8 megs [..]”

Boy, and I thought I was poor.. I’ve seen 286es with higher end specs than that at the time. My respect for getting along with so little memory! 😎

I had a 286 with 12 megs RAM. 512K in DIP chips on the motherboard with the rest on three Micron full length 16 bit ISA boards. One was a different design, which I used to backfill the main RAM to 640K. The remainder of it and the other two boards were split 50/50 between XMS and EMS. The boards had EEPROM chips to hold the settings.

I also had a Pro Audio Spectrum 16 (a Reel Magic video+sound+SCSI card minus all the video and Trantor SCSI parts) and a Soundblaster Pro in it.

All 8 slots were full. 3 RAM cards, 2 sound cards, video card, multi I/O card, and I forget what I had in the 8th slot. It was before I had internet so it wasn’t a modem.

Getting it all configured and every game I had setup so all of it would automatically use the RAM type and sound card it needed was quite the task. Then there was also having the OEM only Windows 3.11 (not Windows for Workgroups 3.11) working on it. I didn’t need any boot menu to select different configurations depending on what I wanted to do. It all worked together, If I wanted to play any game I could simply quit Windows and run the game.

Yes, the old flight sim games that needed DOS…Falcon 3.0, Jet fighter 2 (carrier landings!) and Tornado with its camera view of its 20mm cannon shells and realistic ground search radar. Super fast learning curve, terrible graphics and fun for hours. They all needed upper memory manager installed and half the fun of the game was getting it to run on your pieced together rig with all the DIP switched modem and graphics cards.

Many embedded devices for infrastructure don’t use any traditional Linux distributions, because normal distributions are designed for servers and workstations with a user that performs the update and that can handle any update issues.

That isn’t that good for 100k devices that should be updated over-the-air and where a technician might need to drive 200 km single direction if something went wrong.

So it’s often custom builds with custom packaging with imaging.

It’s not like support for the 486 is gone. You can always install one of the many previous releases and have it work just fine on your 486.

Maybe? One of the comments Linus actually points out is that it’s entirely possible that certain kernel configurations won’t actually work under 486 right now, because the testing coverage is poor. Specifically there appears to be a Kconfig that includes a rdtsc instruction unconditionally in 486, which would just fail since 486’s don’t have rdtsc.
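As a rough illustration of the point about missing instructions, here is a minimal Python sketch that parses `/proc/cpuinfo`-style text on an x86 Linux system and reports which of a few feature flags are absent. The helper names and the sample excerpts are hypothetical and made up for illustration; the flag names `fpu`, `tsc` and `cmov` are the ones Linux uses for the FPU, the `rdtsc` time stamp counter and `cmov` respectively. It only shows the idea of checking for a feature before relying on it, and is not how the kernel itself does so.

```python
# Sketch only: look for x86 feature flags in /proc/cpuinfo-style text.
# Function names and sample data are hypothetical, for illustration.

def parse_cpu_flags(cpuinfo_text):
    """Return the set of feature flags from the first 'flags' line found."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def missing_features(cpuinfo_text, required=("fpu", "tsc", "cmov")):
    """List the required flags the CPU does not advertise, in order."""
    flags = parse_cpu_flags(cpuinfo_text)
    return [name for name in required if name not in flags]


# Made-up excerpts, not captured from real hardware.
SAMPLE_486 = "model name\t: 486 DX/2\nflags\t\t: fpu vme\n"
SAMPLE_NEWER = "model name\t: example\nflags\t\t: fpu vme de pse tsc msr cx8 cmov\n"

if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            text = f.read()
    except OSError:
        # Fall back to the made-up 486 excerpt when the file is unavailable.
        text = SAMPLE_486
    print(missing_features(text))
```

On non-x86 systems the file has no `flags` line, so every requested feature would be reported as missing; the sketch assumes an x86 machine.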

Yeah I agree with Linus – if you’re going to use a museum computer, might as well run a museum kernel on it. It will still do great things, but you know, 486-level great things.

486 cores as such aren’t museum pieces, actually. They have a clean CISC design and are not nearly as much affected by recent cache issues/hacker attacks. Oh, and I never agree with that dude. I find him highly unsympathetic also, but that’s another story.

That’s how I see it too.

I still remember though saying “This (486x 100 is so fast, it’ll be the last CPU I need” . How wrong I was….

Linux… today it’s all about business…

Who needs linux anyway when there’s RTEMS?

Or Minix 3? It’s time for the year of the Minix desktop, isn’t it? ;)

All ur desktop are belong to Minix3

Of course you can run old computers, and an old version of Linux. If they aren’t connected to the internet, not much can happen. Those are the things you need to run old things, to keep that old peripheral running.

When I started running Linux in 2001, I used 7.0, because it was in Slackware Linux for Dummies. Back then it was fine, I was using dialup, and nothing needing security. But with time, more websites required security. I started banking online. Various sites in time wouldn’t let me log in until I upgraded the browser.

I would never use old software now. But then I can’t see the appeal of running old computers today. I’ve done it, don’t want to go back.

This isn’t about end users running old hardware. It’s about infrastructure – magic boxes in air conditioners, medical equipment, digital signs, fire alarms etc. So infrastructure that may be installed and running for very, very, very long times. 20 years or even more.

Often very low power because the equipment might be powered by a small 7Ah lead-acid battery or may be constantly powered in vehicles. No fans allowed. No openings for air to pass through. Sometimes with quite wicked certifications costing $xxx,xxx++ and with recertification needs (and new $xxx,xxx) the same moment someone decides “museum piece – let’s replace”.

And some of which have over-the-air update functionality. Some having ether etc updates. Some having some low-bandwidth radio functionality making it take hours to retrieve updates. Some requiring a technician to drive across the country to plug in some signed USB thumb drive to update.

Bottom line is that there are huge differences in usage cases and longevity needs for embedded devices in infrastructure and end-user builds.

I recently tried to find old Linux distros’ installation media to revive some historic piece of kit. I learned that old versions of current distros are hard to come by.

Keeping an archive of outdated distros could be a solution to keep legacy hardware in service.

People don’t keep their old copies around?

I think the problem is servers/archives for the extra packages, rather than the ISO file of the Linux installation.

That’s the idiotic design fault of Linux, I think, by the way.

After a while, you need to download all the extra packages due to dependencies.

So you end up needing a whole mirror copy of distribution’s server.

Back in the 90s/early 2000s, this was not yet an issue.

Linux distros like SuSe Linux 6.x had approximately 10 CDs in the box, with the complete set of files that existed at the time.

So you’re partly right.

Unfortunately, that’s no longer the case for slightly newer releases that still work on the vintage PCs. Retro distros from the late 2000s onwards don’t bundle everything in a single 4,7GB DVD ISO or 10x 650MB ISOs. They rather point to some obscure server location.

That’s another design fault that I recognized a few years ago. On *nix, you’re screwed without an internet connection. The system isn’t fully functional without it, it’s not independently working.

Windows 98SE, XP or Mac OS 9.. They all had an on-line help that worked offline, completely without external help.

And more recent distributions won’t be used on old hardware.

If something was missing, you find the source and compile it. It’s easier if it comes with the distribution, but I’ve added things by compiling source. People used to be more self-reliant.

I have never needed an internet connection to install Linux. Your world view is limited.

Try setting up a 32 bit Open Media Vault server. The OMV people quit doing ISOs of the 32bit version a while ago so if you’re wanting to utilize something like an older thin client to DLNA serve videos then an online install is the only way it can be done.

One can get an ISO of the 64 bit versions but it’s easier to just do it online and get the current updates to start with.

“I have never needed an internet connection to install Linux. Your world view is limited.”

.. and a mature person wouldn’t judge another person so quickly, I think. Or go down to a personal level. Especially if it’s a stranger.

But even if such a person would, it would add “I think” or “in my opinion”. Without it, the previously made statement is presented as a true fact, but without a proof.

The affected person then can consult a lawyer and sue the other person because of defamation.

I won’t do that, but other people perhaps aren’t as kind and relaxed as I am.

Anyway, I just want you to know that, so you won’t get into trouble because of such a thing. 🙂

PS: Also, you forgot to say in which context your statement was meant to be (“I think your world view is limited in this context” would be the correct way of making such a statement, for example. Otherwise, my complete world view would be wrong.)

It’s not opinion, it’s my experience, thus fact. Yes, I’ve never tried the distributions aimed at refugees from Windows, but that’s no different from you making declarations about Linux based on what you experienced, or more likely read.

You know all these factoids, but not an overview to stitch them together. That’s lack of worldview.

Quite often old distribution disks can be found. What becomes extremely difficult is when a specific version of a bugfix to that old distro is needed … and that bugfix breaks something else in the distro which also has to be updated ….

The days of dependency hell seem to be mostly behind us, for which I am grateful.

“The days of dependency hell seem to be mostly behind us, for which I am grateful.”

Python 2 / 3.. 🥲

Both Debian and Slackware have archives of older versions. https://mirrors.slackware.com/slackware/ and https://cdimage.debian.org/mirror/cdimage/archive/ is a place to start.

I hacked LinuxAP (pre-openwrt days), i still have 6 usr2450 with AMD SC400 SoC (i486) with 1mb flash and 4mb ram. If anyone wants one, let me know.

I don’t care, personally, but.. The graybeards and gurus are forgetting something vital here:

Both 80386 and 80486 support plain i386 instructions, the very foundation of the IA-32 or x86-32 platform!

It’s not some old i686 generation, but the very heart of the 32-Bit code basis that reliably works everywhere, no matter what.

It makes more sense to drop i586/i686, thus, as those equally outdated CPU generations could use i386 Linux, if needed.

Some Texas Instruments, Cyrix, VIA, Nexgen or AMD K5/K6 spin-off do not or do not fully support i586 instructions, also, as used by the original Intel Pentium/i586.

This really could be a problem for the embedded sector, in which super fast 80486 cores are still being used and freshly manufactured.

So there’s still a theoretical need for plain i386 support.

Router hardware is also affected, maybe. I remember that some popular 90s models use the TI/Cyrix 486-SXL or 486-DLC series CPUs.

So why all the work done since the 386? Might as well have stopped CPU development.

Life goes on. I won’t use hardware older than whatever I’m using.

Linus already removed 386 support. As I said, many distributions stopped supporting 486s years back. That wouldn’t have happened if there was real need.

This isn’t an arbitrary decision. Life has moved on, and supporting the 486 is causing trouble.

Don’t confuse Linux, which I suspect you don’t use, with the existence of 486s still being used. This decision won’t mean 486s can’t be used. It just means new releases of Linux won’t run on them.

“Don’t confuse Linux, which I suspect you don’t use, with the existence of 486s still being used. This decision won’t mean 486s can’t be used. It just means new releases of Linux won’t run on them.”

Please go on. You can happily continue to suspect as you wish, but I’m using Linux quite regularly because of my Raspberry Pi 4. 😘

And a) embedded CPUs with 486 style cores or without FPU are still being made (486 systems exist as both 486SX and 486DX, so FPU support couldn’t be taken for granted, code had to check for FPU)

b) the 386/486 are very similar in terms of instruction set c) 386 systems can easily be retrofitted by 486DLC chips (386 pin compatible)

Some embedded CPUs without 386/486 cores:

https://en.wikipedia.org/wiki/Vortex86

https://cpushack.com/ULi.html

Are space hardened processors still 486?

NASA is moving to radiation hardened RISC-V processors.

Or rad hard PPC. No more RH1750 or COSMAC. ESA use rad hard SPARC (LEON series chips) these days. USOS flight computers (multiplexer/demultiplexers or MDMs) fly 386 CPUs with extensive 1553 bus I/O in a distributed architecture.

Speaking of the COSMAC CDP1802.. The amateur radio satellites used ordinary, non-space-hardened versions. In space. Because the space-hardened version either wasn’t around at the time or was too expensive. I know, price wasn’t a problem for a single chip in theory, since in those days so-called “engineering samples” could be gotten through “connections”, hi. Just like the HF transistor for OSCAR-1. But anyway, the COSMAC was so well made it lasted without hardening. What failed rather quickly were DRAMs. Some got corrupted while flying through the South Atlantic Anomaly..

That’s not what the guy from AMSAT said when he spoke at our ham club in the late seventies. He said the 1802 was chosen for two reasons. It was space hardened, and it was so easy to load the RAM remotely (for the same reason the Cosmac Elf saw a lot of builders).

Perhaps the 1802 wasn’t yet certified, but by the way the 1802 was constructed, it was safe.

That speech was the first time I’d heard of “space hardened”.

Currently using a Texas Microsystems 486 installed in a PCI/ISA backplane to handle serial console output from some network devices and UPS. Runs a slightly dated copy of Debian.

At the time I installed it was a perfect fit. Fanless, with eight real serial ports and four 10/100 network interfaces and the capacity to double that if needed.

It may never be replaced so long as serial consoles stay a thing. Aside from the reboot time being less than optimal I really don’t have any complaints.

The 486 is still alive and well in some military hardware. So are the 386 and 286 processors. And this hardware is still being refurbished, certified and sent back out to the field today.

But why would the military still use these processors? Because they perform their functions very well and ‘upgrading to something better’ would require a tremendous amount of re-design and re-qualification, and the module would not work any better than the current hardware.

And the classics react //predictably//.

That’s a feature all modern processors have lost.

No one really knows the internal state of a modern CPU to a given time beforehand, also thanks to caching algorithms, pipelining, scalability etc.

To find out, the actual software must be run in an experiment. And put into a specific situation. However, since not every scenario can be figured out beforehand, it’s a lot of work to even try to.

Out of order execution capability requires very skillful programming to use and have it work predictably. So why bother with it at all in a fully deterministic use case like a multi-axis CNC machine? Operations must happen in a specific order every time, all the time, so fancy optimization tricks like jumbling up and buffering the order commands are processed does nothing beneficial.

If an old x86 CPU can handle a task like calculating artillery trajectories fast enough for a fire mission, then there’s no need to use faster hardware when the humans receiving the attack order and directing the artillery where to shoot aren’t any faster than they were in the early 1990’s.

Don’t worry, the military will retain people to keep those old gadgets alive with old kernels

Windows 11 isn’t “supported” on my 5th generation i7 laptop.

I could no longer run Autodesk Inventor on my 1st generation i3 laptop. Plus, a 720p display doesn’t work well when installing code that puts up splash screens larger than the screen. I cannot run Inventor under Linux either.

I don’t have my Tandy 1000 anymore. I believe that was a 386 with a math coprocessor. I ran Fedora Core 4 on it for 10 years before having to upgrade its OS because Firefox would no longer run. I believe Fedora 10 was too slow on it. And browsing was starting to be a pain as the amount of graphics per page kept increasing, like ads and video ads are increasing today. And don’t forget updates. With ads, video advertisements, and updates, 100Mbps internet is unusable.

I think I have an old AMD Athlon Motherboard that used to run Win XP on it. Refurbished Dell Desktops with i5 processors are relatively inexpensive so I have no need for linux on a 486 anymore.

Don’t think Windows 11.

I think you should instead consider “how long to support Windows 7 or XP with security fixes”.

Embedded devices sent out 10 years ago will not be updated with the latest Linux because no one wants to take the cost of testing and recertification of that product.

But there would be a need to know what the latest supported Linux version is, and how long there will be security fixes and maybe driver updates (such as support for larger memory cards or updated Bluetooth standards) for infrastructure devices that are expected to be running for maybe 20 years from the date the customer bought and installed them.

This article reminded me of the early i-mate JAM hardware, formerly called the Pocket PC, from when I saw a prototype of the Intel processor embedded in an SoC-based embedded system.

I still have a 486 PC on my bench in the garage but have not turned it on in 5 or more years. It has 4 5″ floppies and other old stuff to be able to recover old stuff I may have or find. Too lazy to get rid of it.

I dunno, this subject irks me. I thought I remembered where I was when the 386 was dropped, I coulda sworn it was only a couple years ago.

I’m certainly no expert, but as I recall, Linux had basically one requirement: an MMU, which was basically all that prevented it from running on all processors. And, for that, there was uClinux.

It’s one thing if distros and package-managers don’t want to support hardware that’s not up to the task… I mean, that’s no different than a windowed program not running on a serial dumb-terminal. But the kernel itself? Maybe this is indicative of a bigger issue?

How much kernel code is written in assembly, or accessing CPU registers, etc., directly? I thought one of the key things that made linux great was its inherent portability to many architectures.
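One rough way to get a feel for that question is to count lines by file type in a checked-out source tree. The sketch below is only an approximation under stated assumptions: it treats `.S` and `.s` files as assembly and `.c` and `.h` files as C, the function name `count_lines_by_language` is invented for this example, and it cannot see inline assembly embedded in C files, so it will undercount code that touches CPU registers directly.

```python
import os
import sys
from collections import Counter

# Assumed mapping from file extension to language; inline assembly
# inside C files is counted as C and therefore missed.
LANG_BY_EXT = {".S": "assembly", ".s": "assembly", ".c": "C", ".h": "C"}


def count_lines_by_language(root):
    """Walk a source tree and total the line counts per language."""
    totals = Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            lang = LANG_BY_EXT.get(os.path.splitext(name)[1])
            if lang is None:
                continue  # not a file type we are counting
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as handle:
                    totals[lang] += sum(1 for _ in handle)
            except OSError:
                continue  # unreadable file or broken symlink: skip it
    return totals


if __name__ == "__main__":
    # Pass the path of a source tree as the first argument.
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    totals = count_lines_by_language(root)
    grand_total = sum(totals.values()) or 1
    for lang, lines in totals.most_common():
        print(f"{lang}: {lines} lines ({100 * lines / grand_total:.1f}%)")
```

Pointing it at a single architecture directory rather than the whole tree would give a more relevant figure, since that is where most architecture-specific code would be expected to live.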

That said, I guess the question of “why would you want kernel 20.5 on a 486?” is valid-enough. Though, the answer would’ve made sense, back when I started using linux: Because, back in the 2.x-era, it seemed like linux’s goal was less about features and more about compatibility, bug-fixes, and improvements… So 20.5, from *that* perspective, would’ve been an extremely stable, extremely secure upgrade to 2.x. Instead, though, it seems 3.x and 5.x aren’t even the same OS. Are the drivers even compatible? Frankly it seems each new version is now more like the difference between Win95 and WinNT than it does the difference between WinXP and Win11.

And moves like dropping the 386, then the 486, just seem to confirm that.

It seems too scattered, now. Whip up a 3.x driver to put this router on the market, and who’s going to backport the 5.x security-fixes to that hacked 3.x? For how long? eWaste straight off the shelf.

That, it seems to me, is what linux is becoming. And these sorts of decisions seem to confirm it. Might as well be WinCE. (Or dare I compare it to a Gigantic CP/M, with an equally gigantic effort necessary to port it, and the next version, to new hardware?).

I guess it is what it is, but it’s certainly not what attracted me.

I wonder how much space there is for the 486 in previous kernels. How much effort does it take to support it? As a home appliance tech, I can see how supporting older equipment can take up costly resources. Think parts, system schematics, training, etc. For instance, I had a customer with an old wall oven built in the late ’90s. The Electronic Range Control finally kicked the bucket but the rest of the unit was fine. It’s a custom-fit control module so you can’t substitute it with a modern module. Stocking parts for every stove ever made by GE Appliances is not really practical or cost-effective. Furthermore, not many customers have this unit anymore. The typical thought is, “It’s time to just buy a new oven”. However, if this was a unit in a long-lived business, buying a new one may not be a cost-effective solution. I guess this situation is similar to supporting hardware in the Linux kernel too.

Don’t worry. If you still want to run a modern OS that isn’t painfully slow on old hardware like the i80486, there’s always NetBSD :)

I started my own Linux distribution recently, basically to learn how to boot from an old 3Com bootware boot ROM, but then it started to grow :). The main question that arose: am I really able to netboot a current userspace (BusyBox in this case) with a current kernel on a 486 machine with at least 8 MB RAM?

Answer is: Yes, for now.

https://github.com/marmolak/gray486linux

I got a 1994 Micropolis 2GB SCSI disk and a NEC SCSI CD-ROM. The Adaptec SCSI card had no driver for my CD-ROM. I tested Linux and it had a driver for it :)

I remember that for a while, long after everyone else had moved on, NASA was still using 486s because the larger transistors were less susceptible to cosmic rays.

Has that changed?

486-class machines were a breakthrough in multimedia performance. They were the first architectures that could run Windows and X11 and still be somewhat usable. Later 486 processors (DX4-100 and 5×86-133) could play back full 128 kbps stereo MP3 files. They were the first architecture that supported PCI. With the right motherboard, you could install a PCI USB 2.0 or PCI SATA card and get access to today’s modern storage. Not bad for a processor that was made when you could still buy a new Commodore 64.