[Thomas Bitmatta] and two other champion drone pilots visited the Robotics and Perception Group at the University of Zurich. The human pilots accepted the challenge to race drones against artificial intelligence “pilots” developed by the UZH research group.

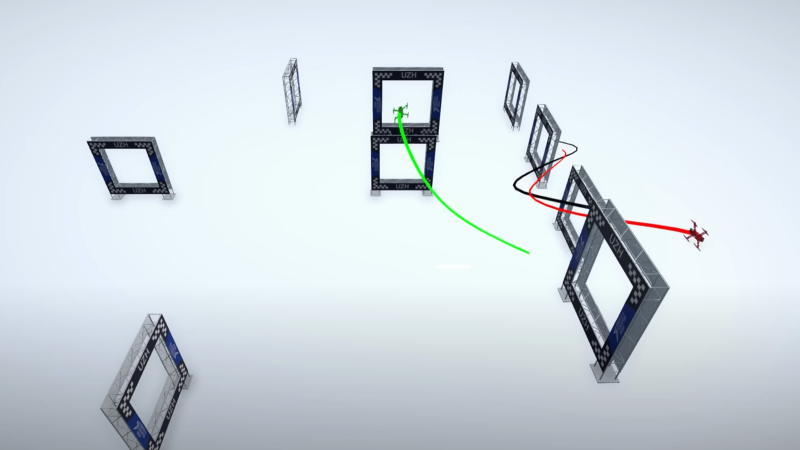

The human pilots took on two different types of AI challengers. The first type relies on 36 tracking cameras positioned above the flight arena, each capturing video at 400 frames per second. The AI-piloted drone is fitted with at least four tracking markers that can be identified in the captured video frames. The video is fed into a computer vision and navigation system, which analyzes it to compute flight commands. Those commands are then transmitted to the drone over the same wireless control channel that a human pilot’s remote controller would use.

The second type of AI pilot utilizes an onboard camera and autonomous machine vision processing. The “vision drone” is designed to leverage visual perception from the camera with little or no assistance from external computational power.

Ultimately, the human pilots were victorious over both types of AI pilots. The AI systems do not (yet) robustly accommodate unexpected deviations from optimal conditions. Small variations in operating conditions often lead to mistakes and fatal crashes for the AI pilots.

Both AI pilot systems use some of the latest research in machine learning and neural networks to learn how to fly a given track. The systems train for a track using a combination of simulated environments and real-world flights. In their final hours together, the university research team invited the human pilots to set up a new course for a final race. In less than two hours, the AI system learned to fly the new course. In the resulting real-world flight, the AI drone’s performance was quite impressive and showed great promise for the future of autonomous flight. We’re betting on the bots before long.

The “search and rescue” rationale is obviously total BS. They obviously wanted to beat the world’s best humans at yet another task but fell short of their goal. I’m not especially rooting for their success either, as I’d rather not live in a world with Slaughterbots flying around.

You already do.

Yeah, racing quads trying to fly as quickly as possible seem like a strange approach for searching an unknown environment, especially since losing a drone is a bigger problem in that case. Something larger, with more loiter time and less kinetic energy, would probably be a better fit.

Of course, xkcd hits the nail on the head here:

https://xkcd.com/2128/

+1

“search and rescue” is a euphemism to make your project sound altruistic and helpful, but still attract the attention/funding of big defence firms, who know that they can repurpose the tech to “search and destroy.” Not saying that’s the case here, but it’s a pretty common theme in engineering tech demos.

Came here to post https://xkcd.com/2128/

But at the same time, as a UZH alumnus, I have seen this research group make impressive strides with SAR robots.

Does anyone know what camera and connected hardware are used for applications like this? They must have very low latency.

Is there any opensource version of something like this?

One mode did onboard processing, so no transmission latency. The other was many cameras around the space, 3rd-person style, tracking the drones via markers.

So none of what human drone pilots think of when they say “latency” which is basically all transmission and (these days) encoding/decoding latency.

Is there a link to the system they used for the self-guided drone?

I don’t know if it is exactly the same, but that group did release a paper describing an open source quadrotor design recently: https://rpg.ifi.uzh.ch/docs/ScienceRobotics22_Foehn.pdf

Thanks, that looks like something closely related. I had a look through all their papers but they have a lot and it’s not easy to search.
