While JavaScript might not be the ideal language to write a production compiler, you might enjoy the “Create Your Own Compiler” tutorial that does an annotated walkthrough of “The Super Tiny Compiler” and teaches you the basics of writing a compiler from scratch.

The super tiny compiler itself is about 200 lines of code. The source code is well, over 1,000 but that’s because of the literate programming comments. The fancy title comments are about half as large as the actual compiler.

The compiler’s goal is to take Lisp-style functions and convert them to equivalent C-style function calls. For example: (add 5 (subtract 3 1) would become add(5,subtract(3,1)).

Of course, there are several shortcut methods you could use to do this pretty easily, but the compiler uses a structure like most full-blown modern compilers. There is a parser, an abstract representation phase, and code generation.

Even if you don’t like the slide-show approach, the literate commented JavaScript is easy to read and very instructive. If you don’t know JavaScript it should still be fairly easy to work it out if you know any common programming language.

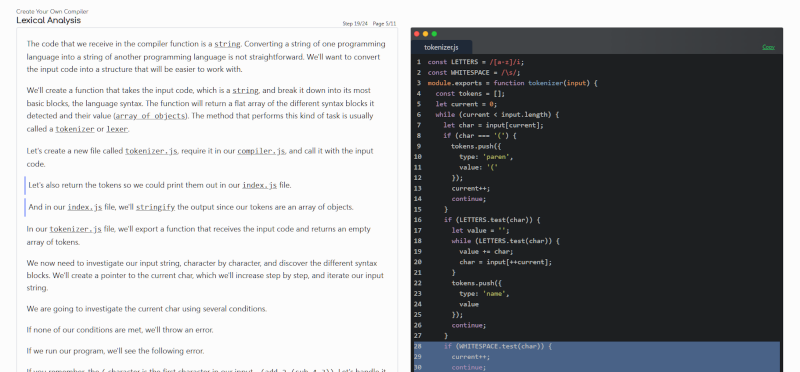

At the bottom left of the page are two buttons: Verbose and Internals. You can press these at any time. Text due to the verbose button will have a blue line next to it and text about internals will have a red line. This allows you to tune the experience depending on how much detail you’d like to read.

This isn’t the first compiler we’ve seen chopped up for exposition. If you are interested in what existing compilers generate for source input, we were always impressed with Compiler Explorer.

Reminds me of From Nand to Tetris, which includes a section on building a compiler. In assembly in that case, I think.

Isn’t that a Transpiler instead of a Compiler?

Or are transpilers technically compilers?

No, they are different. On of the key difference is that transpilers avoid transforming the code where compilers specifically transform it.

Actually, no… but just barely. One of the things a compiler does that a transpiler does not is transform the code which this program does therefore making it a compiler.

Citation needed: you need to prove that a transpiler does not transform code.

If you compile to a similar level of abstraction, it’s a transpiler. Ie new javascript to old JavaScript.

All transpilers are compilers. Transpilers are just a more specific type. But it’s still correct to call it a compiler.

In this case, since we’re just adding lisp style functions to JS, “transpiler” is more precise, but compiler is still technically correct.

Transpiler translate code to the same level of abstraction, while compiler translate code to a lower level of abstraction. A decompiler translate code to a higher level of abstraction. The hard part is how do we measure the level of abstraction.

Nand to Tetris has an assignment for compiler (source to VM to assembly) and an assembler.

one of the biggest influences on me as a compiler writer is a class i took from Dan Friedman where he taught the “baskin robins compiler”, a 33-pass compiler. i’m surprised to find that even though he wrote several books, he doesn’t seem to have written a book based on that lesson.

the idea is that each pass should do only one simple thing. if you are using a pattern-matching language like ML, then it is often very easy to specify the passes. for the class, we used scheme with a couple macros that provided a pattern matching idiom. each pass produces a new intermediate language with different invariants, and obviously (because it’s Dan Friedman), it makes a new copy with each pass instead of side-effecting.

the first passes are obvious, character stream -> token stream -> tree. then there were passes to rename variables to disambiguate scope (like if you have ‘x’ in two different scopes, it gives two different names), to convert looping/conditional statements into branches and labels (flattening control flow), to convert nested expressions into sequential assignments to temporary variables (flattening expressions), and so on. the point is that each pass is so simple that problems which ordinarily seem like a lot of intricate book-keeping become quite obvious when that book-keeping is the only thing going on. the variable-renaming pass, for example, keeps track of scope information, but then that scope information is gone, not used for any other passes.

after a dozen or so very simple passes, it is basically a register-transfer language (or you could do SSA) and then you allocate registers and then convert to assembler mnemonics.

in practice, my compilers don’t have that many passes, but it’s a great introduction…and when you’re working on another compiler and dealing with awkward overlapping book-keeping problems, it is really helpful to remember the baskin robins compiler had these separated into different passes. the problem you face isn’t hard, it’s just become obfuscated by cramming too much work into one pass.

So now I’m wondering if it would be possible to generically transforms two passes into a single pass, and then apply that transformation to the 33-pass compiler repeatedly until the result is a one-pass compiler.

hahaha sick!

a lot of the passes can be trivially composed in that way (and probably should be), but i think it’s impossible to “generally” do it. especially because some of the passes aren’t just the straightforward depth-first tree-visit that most of them are. for example, register allocation has a pass over the intermediate language generating conflict sets, then multiple passes over the conflict sets to determine the assignment, then a second pass over the intermediate language to substitute in the new assignments. so conceptually i’d describe it as one pass but even in that pass it has two different visits that can’t be composed together.

but if you intentionally limited yourself to versions of those passes that *can* be composed, then it’s entirely possible! and i think it’s been done…i can’t remember the name of it but i know there’s a very-light-weight C compiler out there that i think goes to some effort to be one-pass. and i know there’s a register allocation algorithm out there that isn’t as good but does work on just a single visit.

of course, that also depends on your language. i’m not 100% sure but i think C++ is intrinsically multi-pass, for example, because you are allowed to have some level of forward references. if for no other reason than you have to cache the templates and play them back when they’re instantiated. maybe the look-ahead could all be “dumb” like that.

If you write it with that in mind, yes.

As described, each pass is essentially a function of the type ILn -> ILn’ (a function from one intermediate language to the next. That is, you’d have a series of functions like:

tokenizer :: Text -> [Tokens]

parser :: [Tokens] -> ParseTree

varRename :: ParseTree -> UniqueVarParseTree

unlooper :: UniqueVarParseTree -> LoopFreeTree

expFlattener :: LoopFreeTree -> ExpFlatTree

…

objectFile :: AssemblerCode -> ObjectFile

and then you can do

compiler :: Text -> ObjectFile

compiler = objectFile

. …

. expFlattener

. unlooper

. varRename

. parser

. tokenizer

to glue together all 33 passes.

The minimum pass is two, because you cannot determine the offset of jump locations in the one pass for selection (if, switch, etc.) and loop (for, while) statements. If you use unoptimized functional recursion, this is not a problem, but welcome to overflowing your stack.

I remember seeing a program in my commodore 64 magazine. You had to type a program compiler and a checksum before you can actually type the chess program in.

Porting Intel MCS BASIC-52 64-bit to portable Implementations using gcc c 1 x86 2 ARM 3 RISC-V platforms better idea?

since a lot of language compilers are using LLVM, we can declare all of them transpilers.

It’s actually a question of the level of abstraction. Compile to lower level, trans[com]pile to equal level, decompile to higher level.

Will there be a tutorial created similarly in the C or C++ language?