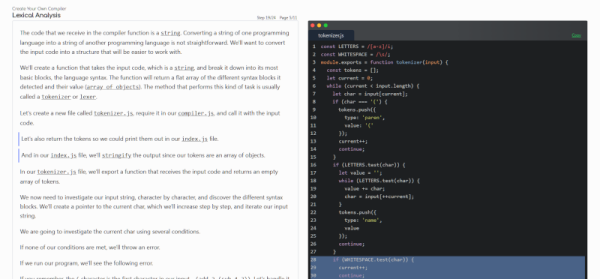

I think most of us who make or build things have a thing we are known for making. Whether it’s football robots, radios, guitars, cameras, or inflatable textile sculptures, we all have the thing we do. For me that’s been various things over the years and has recently been camera hacking, but there’s another thing I do that’s not so obvious. For the last twenty years, I’ve been interested in computational language analysis. There’s so much that a large body of text can reveal without a single piece of AI being involved, and in pursuing that I’ve created for myself a succession of corpus analysis engines. This month I’ve finally been allowed to try one of them with a corpus of Hackaday articles, and while it’s been a significant amount of work getting everything shipshape, I can now analyse our world over the last couple of decades.

The Burning Question You All Want Answered

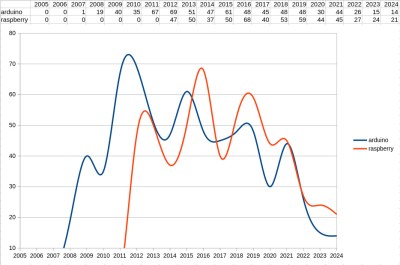

A corpus engine is not clever in its own right; it simply gives you straightforward statistics in return for the queries you give it. But the thing that keeps me coming back for more is that those answers can sometimes surprise you. In short, it’s a machine for telling you things you didn’t know. To start off, it’s time to settle a Hackaday trope of many years’ standing. Do we write too much about Arduino projects? Into the engine goes “arduino”, and for comparison also “raspberry”, for the Raspberry Pi.

What comes out is a potted history of experimenters’ development boards, with the graph showing the launch date and subsequent popularity of each. We’re guessing that the Hackaday Arduino trope has its origins in 2011 when the Italian board peaked, while we see a succession of peaks following the launch of the Pi in 2012. I think we are seeing renewals of interest after the launches of the Pi 3 and the Pi 4. Perhaps the most interesting part of the graph comes on the right as we see both boards tail off after 2020, and if I had to hazard a guess as to why, I would cite the rise of the many cheap dev boards from China.
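For anyone wondering what a query like this boils down to, here is a minimal Python sketch of the general idea. It is not the engine used here: the `yearly_frequency` name, the `(year, text)` input format, the simple tokeniser, and the per-million-words normalisation are all assumptions made for illustration.

```python
import re
from collections import Counter, defaultdict


def yearly_frequency(articles, terms):
    """Return {term: {year: occurrences per million words}}.

    `articles` is an iterable of (year, text) pairs (a hypothetical format).
    """
    wanted = {t.lower() for t in terms}
    totals = Counter()            # total words seen per year
    hits = defaultdict(Counter)   # hits[term][year] = raw count

    for year, text in articles:
        # Crude tokeniser: lowercase runs of letters, digits and apostrophes.
        words = re.findall(r"[a-z0-9']+", text.lower())
        totals[year] += len(words)
        for word in words:
            if word in wanted:
                hits[word][year] += 1

    # Normalise so that years with more articles don't dominate the graph.
    return {
        term: {
            year: 1_000_000 * hits[term][year] / totals[year]
            for year in sorted(totals)
            if totals[year]
        }
        for term in sorted(wanted)
    }


# Toy corpus, invented purely to exercise the function.
sample = [
    (2011, "An Arduino blinks an LED while another Arduino logs data"),
    (2013, "A Raspberry Pi and an Arduino share a serial link"),
]
result = yearly_frequency(sample, ["arduino", "raspberry"])
```

Plotting each term’s per-year values against the year would give a graph of the same general kind as the one above. Normalising by the total word count is one design choice among several; raw counts or per-article counts would be just as easy to produce.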