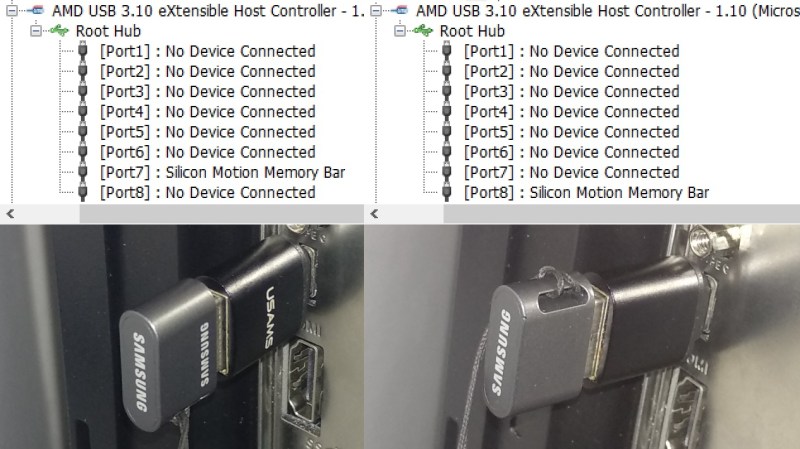

[RichardG] has noticed a weird discrepancy – his Ryzen mainboard ought to have had fourteen USB3 ports, but somehow, only exposed thirteen of them. Unlike other mainboards in this lineup, it also happens to have a USB-C port among these thirteen ports. These two things wouldn’t be related in any way, would they? Turns out, they are, and [RichardG] shows us a dirty USB-C trick that manufacturers pull on us for an unknown reason.

On a USB-C port using USB3, the USB3 TX and RX signals have to be routed to two different pin groups, depending on the plugged-in cable orientation. In a proper design, you would have a multiplexer chip detecting cable orientation and routing the signals to one pin group or the other. Turns out, quite a few manufacturers are choosing instead to wire two separate USB3 ports to the USB-C connector, one per pin group, so which port you get depends on which way the cable is plugged in.
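To make the difference between the two wirings concrete, here is a simplified Python model. It assumes a host that sees the device's Rd pull-down on exactly one CC pin. The design labels ("mux", "two-ports") and port names are hypothetical, and real orientation detection measures CC voltages inside a port controller, so treat this as a sketch of the logic rather than of any real chip.

```python
from enum import Enum


class Orientation(Enum):
    """Which receptacle CC pin the plug's single CC wire landed on."""
    NORMAL = 1   # device's Rd pull-down seen on CC1
    FLIPPED = 2  # device's Rd pull-down seen on CC2


def detect_orientation(rd_on_cc1: bool, rd_on_cc2: bool) -> Orientation:
    """Host pulls both CC pins up (Rp); an attached device pulls exactly one down (Rd)."""
    if rd_on_cc1 and not rd_on_cc2:
        return Orientation.NORMAL
    if rd_on_cc2 and not rd_on_cc1:
        return Orientation.FLIPPED
    raise ValueError("no device attached, or not a simple USB-C sink")


def superspeed_pair_set(orientation: Orientation) -> str:
    """The device's SuperSpeed pairs land on pair set 1 or pair set 2 of the receptacle."""
    return "TX1/RX1" if orientation is Orientation.NORMAL else "TX2/RX2"


def root_port_for(design: str, orientation: Orientation) -> str:
    """Return the (hypothetical) USB3 root-hub port that ends up seeing the SuperSpeed link."""
    pair_set = superspeed_pair_set(orientation)
    if design == "mux":
        # A mux switches whichever pair set is in use onto a single controller port.
        return "port 1"
    if design == "two-ports":
        # No mux: pair set 1 is hard-wired to one root port, pair set 2 to another.
        return {"TX1/RX1": "port 1", "TX2/RX2": "port 2"}[pair_set]
    raise ValueError(f"unknown design: {design}")


if __name__ == "__main__":
    for rd1, rd2 in [(True, False), (False, True)]:
        o = detect_orientation(rd1, rd2)
        print(o.name, "mux ->", root_port_for("mux", o), "| two-ports ->", root_port_for("two-ports", o))
```

With the mux, flipping the plug leaves the device on the same root port; with the two-port wiring, the device moves to a different port, which is exactly the telltale sign discussed next.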

In the extensive writeup on this problem, [RichardG] explains how the USB-C port ought to be wired, how it’s wired instead, what the telltale signs of such a trick are, and how to check whether a USB-C port on your PC is miswired in the same way. He also ponders whether this is compliant with the USB-C specification, but can’t quite find an answer. A surprising number of products and adapters do this exact thing, too, all of them desktop PC accessories – perhaps you bought a device with such a USB-C port and don’t know it.
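The writeup’s own checking method isn’t reproduced here. As an assumption, on Linux a similar check can be sketched against sysfs: plug a USB 3 device into the Type-C port, note which SuperSpeed device entry appears, flip the plug and look again. On a properly muxed port the root-hub port stays the same; on a port wired to two separate root ports it changes. The helper names are hypothetical; the `speed` attribute under `/sys/bus/usb/devices/` is reported in Mbit/s.

```python
import os


def root_port(sysfs_name: str) -> tuple[int, int]:
    """Split a Linux USB sysfs device name such as '2-3' or '2-3.1' into (bus, root-hub port)."""
    bus, _, path = sysfs_name.partition("-")
    return int(bus), int(path.split(".")[0])


def superspeed_devices(root: str = "/sys/bus/usb/devices") -> dict[str, int]:
    """Map device names to link speed (Mbit/s) for every device linked at 5000 Mbit/s or faster."""
    found = {}
    for name in os.listdir(root):
        # Skip root hubs ('usb2') and interfaces ('2-3:1.0'); keep devices ('2-3', '2-3.1').
        if "-" not in name or ":" in name:
            continue
        try:
            with open(os.path.join(root, name, "speed")) as f:
                speed = float(f.read().strip())  # low speed is reported as '1.5'
        except (OSError, ValueError):
            continue
        if speed >= 5000:
            found[name] = int(speed)
    return found


def looks_like_two_ports(name_before_flip: str, name_after_flip: str) -> bool:
    """True if flipping the plug moved the device to a different root-hub port."""
    return root_port(name_before_flip) != root_port(name_after_flip)
```

Usage: call `superspeed_devices()` with the device plugged in one way, flip the plug, call it again, and pass the new entry and the old one to `looks_like_two_ports()`. A device sitting behind an external hub will report the hub’s root port, which is still what matters here.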

In conclusion, he debates making an adapter to break the stolen USB3 port out. This wouldn’t be the first time we’ve been cheated when it comes to USB ports – USB2 devices with blue connectors come to mind.

That’s what you get for motherboards designed by Sauron

One port to rule them all, one port to bind them, one port to fake you out and on the board hide them

youtu.be/w_Yh2n8ZoHM?t=110

One Onion Ring to appetize them all

One Onion Ring to main dish them all

One Onion Ring to garnish them all

And in the plat du jour bind ’em all

(he’s dangerous)

One Onion Ring to grease them all

One Onion Ring to gormandize them all

One Onion Ring to fatten them and bind ’em there

In the land where the obese lie

We will find a way

To destroy the onion ring

Dip it in the Bourguignonne

An offer to the picnic gods

Yes we will find the way

Happy New Year

‘Me be luving Onion Rings !

Thanks ‘mate !

Nice

No idea what you’re talking about; I use the terms interchangeably, no preference for one or the other. Idk if you have heard some university student say something or whatever, but that’s a pretty low bar for jumping to conclusions.

Mainboard is cooler anyway. Motherboard would only make sense if it gave birth to a childboard.

Well the PCI(x) extension cards are child/daughterboards aren’t they?

Still, Comedicles’ complaint is confusingly comedic?!

You have daughterboards connected to the motherboard.

Can’t tell if trolling or legitimately an upset septuagenarian. Irrelevant, either way. The terms “motherboard” and “main board” communicate the same thing.

Either way, not someone who is actually familiar with historical usage, certainly not in any hands-on way.

Anyone who commonly worked with technical equipment would realize that ‘motherboard’ is the neologism.

Both are common nomenclature. What a dumb thing to be upset about.

So a hub that knows about this could actually utilize both USB 3 lanes for additional bandwidth. Sounds well engineered.

I thought about designing such a hub and even laid down a schematic, but I figured it would come out to >= the cost of a standard USB 3 hub, and it wouldn’t really help bandwidth-saturated applications such as VR where people use multi-controller add-in cards anyway, not to mention the “duck test” argument I made in the post.

It’s worth mentioning there are a bunch of USB 2+3 hubs out there now, which only have a USB 2 hub controller and pass the USB 3 data pairs directly to one of the ports. It wouldn’t surprise me if such a hub showed up doing just what you proposed, passing the USB-C pairs to two ports.

You’re showing your (lack of) age, you whippersnapper.

The term ‘mainboard’ dates no earlier than the end of WW2, and was mostly used as a technical term (anyone who did maintenance or repairs on circuitry would be aware of it, but consumers weren’t very exposed to it). Whereas the term ‘motherboard’ emerged as a mostly-microcomputer-related consumer-facing marketing term, first gaining substantial popularity around 1973.

In many pre-digital printed circuits, there might be one or more “main boards”, combined with controls, indicators, and other peripheral circuitry (often still fabricated by point-to-point chassis wiring). In milspec equipment, mainboards were usually field-replaceable depot-serviceable assemblies, while the rest of the circuitry might be field-serviceable or might not be depot-serviceable.

As a term, mainboards were common well before printed-circuit digital systems emerged, and most of the earliest printed-circuit computers had ‘mainboards’. The term ‘motherboard’ was largely originated by early solid-state computer makers attempting to differentiate between ‘digital-domain’ and ‘analog-domain’ printed circuit boards, as people weren’t commonly aware of the differences. I’ve personally serviced equipment that had both mainboards and motherboards, with the mainboards being things like power and analog signal-conditioning circuitry, and the motherboards holding all of the digital-domain circuitry.

The term rapidly became popularized as jargon as a marketing differentiator.

But, if you want to make it all about culture wars, there’s no need to worry about all of that.

Surely motherboards made sense with the introduction of ISA slots for daughterboards? I doubt that was the first usage but there’s a logic to it. Why not parent and childboards though?

Technically there are three USB ports there: both USB 3 ports and a USB 2.0 port.

https://electronics.stackexchange.com/questions/297031/usb-3-x-ss-enumeration/297373#297373

https://hackaday.com/2022/03/07/a-chip-to-address-the-fundamental-usb-3-0-deficiency/

Asus, no less, although it’s one of their cheaper boards.

This is totally normal; not really “dirty” or improper IMO. A lot of controller chips have an integrated mux and can be configured as, e.g., 2x Type-A or 1x Type-C. The root hub in this case is acting as “the functional equivalent of a switch”, so I don’t think it violates the spec either.

It’s a hack, I like it. Not sure why I need 14 USB ports on a midrange motherboard; there are only so many phones and RGB lights to charge.

Keyboard, mouse, 2 HDDs, audio, external optical drive, video game controller, VR rig, camera, 2 flash sticks… I guess I could get close to 14, maybe add a 2.5GbE adapter.

keyboard, mouse, film scanner, 3 printers, USB microscope, and a few more ports for Arduino, EPROM programmer, etc.

14 is probably a bit more than what I need and I use more than an average Joe

That’s a lot of home-run cables and connectors on PC. Time for Thunderbolt or some other daisy chain high-speed external bus technology.

Which one of these needs USB 3 speeds?

The pages are really flying out of those 3 printers, USB 3 might honestly be a bottleneck

My motherboard has 14 USB ports (13 Type-A, one Type-C). I have maxed out those ports more than once, often enough that I have a 7-port USB 3 expansion card installed in my first PCIe slot for external storage in case I need more than 14 ports again. I won’t bore you with details about why I’m using all those ports at once; it’s enough to say it’s video-recording related. It’s surprising how much recording-related equipment one can find themselves using after a while.

That’s all to say it’s definitely not hard to max out those ports if you have the right kind of use case.

I think part of that is a lack of imagination; you can very quickly get into huge numbers consumed by devices you don’t use often. And since all the ports on the back are hard to get at, lots of folks plug everything in in advance, even the stuff they almost never use, as you just can’t get back there to swap devices… Also, I’d not be shocked to find 1/4 of the ports can’t be used either – there always seems to be some cable or device too bulky to leave clearance for the neighboring port…

Author here. My setup is rather odd; the rear of my PC is more accessible than the front, and at any given time I can have a keyboard, mouse, analog capture device (blocks another port due to how big it is), HDMI capture device, EEPROM programmer, logic analyzer, Arduino, UART, free space (SDR / controller / flash drive / whatever), and finally USB-C charging my phone. In order to fit everything, I loaded the front panel with ports – 2 from the case, 2 from a hotswap cage, 8 from a “dashboard” hub thingy – in part driven by an add-in card with two USB 3 headers (those exist but watch for the models with 1 vs. 4 buck converters).

Forgot to mention, the add-in card has two headers only (no external ports), and I also got it in an attempt to sidestep the infamous AMD USB issues, which are afflicting my B450 motherboard mostly on USB 3 hard drives.

Ha, ha! (said one septuagenarian to another.)

> a dirty USB-C trick that manufacturers pull on us for an unknown reason

> in a proper design, you would have a multiplexer chip

The reason is right there in the text – manufacturers don’t want to use an extra chip if they don’t have to

Total cost for the extra chip and accommodations on the motherboard… about US $0.20.

This is cheapness just for the sake of being cheap and punishing the customer for not buying a more expensive motherboard.

Actually it’s more like a 10 to 13€ chip on 1000-unit reels according to Mouser, plus whatever margin they must add to that. People will switch to other brands over a smaller price difference than that.

Chances are that specific kind of chip was hard to get during the shortage.

The world of connectors was already too complex for me when a DB-25 connector was either a serial or parallel port. The COM was usually male and the LPT female, but I’ve seen both the other way around.

USB 1/A/B solved these problems, with 2 being faster and mini- and micro-B smaller. But I recently came across a micro-A connector (on a phone charging cable for an e-bike; we first thought this was a ploy to make us buy the very expensive connector from the e-bike factory, but they had included two cables. And a micro-B connector by design fits into a micro-A socket, so a micro-B-to-micro-B cable would be just a quick soldering job away, if needed).

With USB-C, a world of complexity is forced upon both engineers and the end consumer, who cannot know at a glance what to expect from his host-cable-device-combination, even if it “fits”, all for the slight bonus of being able to plug in both ways. And my phone and cable have now degraded to the point that data transfer only works when the USB-A-to-C cable is plugged in one way around.

For the most tech-savvy this might be a big bonus, but for the simple user expecting complex combinations to “just work” this might be a big source of frustration.

>With USB-C a world of complexity is forced upon both engineers and the end-consumer, who can not know at a glance what to expect from his host-cable-device-combination,

Very much my thinking as well; they had every opportunity in the world to create a sane and simple spec while fixing the one real issue of the older USB ports – which way around does it go? Dump the reversible USB-C plug shape for a cable that clearly has a handedness, so you can feel and see the correct orientation trivially. Want more power and more data speed? By all means add some more data pairs, but don’t do the daft negotiated huge range of voltages – it’s a failure point and extra complexity you can eliminate entirely by simply running some of the very many extra conductors they added with USB-C at a fixed higher voltage…

USBC solved a bunch more than just port orientation.

It did it by increasing compatibility limitations.

Honestly, I can live with that, knowing that I can buy a bunch of good cables and they’ll work for everything, and not have to go hunting for different cables “where’s my printer cable” “where’s my mobile charger” “where’s my more different mobile charger” “where’s the monitor cable” “where’s the cable for my old monitor”

Nope, in future, every high quality USBC cable will work with everything.

Sure, I’ll also buy/receive shitty cables that can only charge slowly or whatever.

And sure, I’ll have to check if the USBC device supports one speed over another, but remember, those speed features exist, and aren’t universally supported, so why would we require a unique cable for that?

No. Keep your physical cable proliferation to yourself.

Give me a million different soft-standards, as long as we can get rid of the million different “hardware” standards.

I’ve bought more than a few ‘high quality’ price-tag cables that are supposed to do everything; many of them either did but broke after practically 5 seconds of gentle use, or flat out didn’t work right from the off, because despite claiming to do everything they only implemented the USB2 features or something similarly stupid… I’ve almost certainly spent more on USB-C cables alone in the 12-odd months I’ve needed to use USB-C than I have in my entire life on other USB cables and hubs, as soon as you add in a USB-C dongle or two… And it’s not just the cables, it’s the devices as well, having wildly different capabilities with all those optional alt modes while still being able to just call themselves USB-C devices…

Personally I would far rather have a dedicated HDMI or DisplayPort connector so you know you can actually get a display signal from somewhere, with cables so common they’re probably already attached to the display. And know that you don’t have to buy heaps of expensive dock/dongle things to break out the tiny number of USB-C ports the devices give you, just to have an external display and the odd USB device, like perhaps a keyboard, at the same time… All the alt modes and optional modes are just a mess…

All your ‘Where is’ questions were already a solved problem if you wanted it to be enough anyway – USB 1,2 and even just about 3 spec are ubiquitous enough and you could get simple adaptors if you didn’t go through the effort to source every device using the same connector.

The EU forcing everyone towards USB-C MAY mean in the future device makers actually use the same standard connector for more than a few years on all their devices. But I’d not count on it sticking, even if you live in the EU, as the laws don’t apply to every possible electronic device anyway and are quite likely to get amended at some point. If they don’t, I’d say it’s more likely to cause trouble than help in the long run too – as tech moves on and the EU is still stuck with USB-C, or, to stay legal in the EU, USB-C gets ever more piled up with optional alt modes while still needing backwards compatibility with the minimum valid specs for the latest features, etc. It’s just going to become an even bigger pile of shit to wade through on the device and cable specs trying to figure out compatibility…

Computer monitors (CGA, EGA, early VGA) used to use DE9s too (female at the host), just like RS-232 today (which is male at the host).

So, what’s the deal with the 19-pin header to Type-E adapters for connecting one front-panel USB-C port? Do they multiplex both USB 3.0 ports in the 19-pin header, or ?????

Good news is that some time in 2022 the peripheral industry *finally* got around to making a PCIe x1 card with a Type-E connector, after years of making PCIe x1 cards with a single external Type-C port. Despite the two being identical aside from the connector type and external vs. internal location, the industry as a whole simply refused to put a Type-E connector on an x1-length card; x2 was the shortest available.

Of course to get full USB C speed out of it, the x1 Type E card has to be in a PCIe 3.0 or later slot, but even on PCIe 2.0 it’s still faster than USB 3.0.
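The line-coding arithmetic behind these PCIe-versus-USB comparisons can be sketched as follows. The figures use the published transfer rates and encodings (8b/10b for PCIe 1.0/2.0 and 5 Gbit/s USB, 128b/130b for PCIe 3.0, 128b/132b for 10 Gbit/s USB lanes). They are ceilings after encoding only; packet and protocol overhead on both PCIe and USB lowers real throughput further, so on their own they can’t settle which link is faster in practice.

```python
# (transfer rate per lane in GT/s or Gbit/s, payload bits, encoded bits, lanes)
LINKS = {
    "PCIe 1.0 x1": (2.5, 8, 10, 1),                  # 8b/10b
    "PCIe 2.0 x1": (5.0, 8, 10, 1),                  # 8b/10b
    "PCIe 3.0 x1": (8.0, 128, 130, 1),               # 128b/130b
    "USB 5 Gbit/s (Gen 1x1)": (5.0, 8, 10, 1),       # 8b/10b
    "USB 10 Gbit/s (Gen 2x1)": (10.0, 128, 132, 1),  # 128b/132b
    "USB 20 Gbit/s (Gen 2x2)": (10.0, 128, 132, 2),  # two Gen 2 lanes
}


def post_encoding_gbps(rate: float, payload: int, encoded: int, lanes: int) -> float:
    """Bit rate left after line coding, in Gbit/s, before any protocol overhead."""
    return rate * payload / encoded * lanes


if __name__ == "__main__":
    for name, params in LINKS.items():
        print(f"{name:26s} {post_encoding_gbps(*params):6.2f} Gbit/s")
```

By this arithmetic, PCIe 2.0 x1 and a 5 Gbit/s USB lane land on the same 4 Gbit/s ceiling, so any difference between them comes down to overhead, while a PCIe 3.0 x1 link (about 7.88 Gbit/s) sits a little below a single 10 Gbit/s USB lane (about 9.70 Gbit/s). The PCIe 1.0 x1 ExpressCard case tops out at 2 Gbit/s.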

If I knew how to do the design, and had the $$, I’d design a single port USB-C ExpressCard and have a batch manufactured. I don’t care that it’s only PCIe 1.0 x1, some USB C devices just don’t work right connected with an adapter cable to a USB 3.x port.

Is that Uwe Sieber’s USBTreeView[1] I see in the picture up there? :-)

Awesome program, which I can only recommend: restart ports & devices, safely remove devices, and all the USB device (enumeration) information you could wish for.

[1] https://www.uwe-sieber.de/usbtreeview_e.html

It’s HWiNFO, but USBTreeView has become my tool of choice since writing this post.

Maybe we can finally figure out the AMD USB connect/disconnect problem, perhaps by hooking it up to event logging.

I feel like it’s an intermittent signal integrity issue of some kind with external factors in play, from how AMD is struggling to solve it, and how people seem to have remedied it by changing the PCIe link speed (to Gen3) and C-state settings.

As I mentioned in another comment, the issue is even present on my B450 motherboard, messing with USB 3 devices. I have a PCIe Gen4 GPU and CPU arbitrarily limited to Gen3 by the motherboard, and haven’t tried messing with the aforementioned settings.

What’s to figure out? All the Ryzen chipsets were designed by ASMedia, who are notorious for their atrocious USB host controller implementations.

It’s cheaper and provides a better user experience. If your USB device stops working (because you blew a port on the internal hub), you might just think “I’ll try flipping the cable over and see if that works.” and in this case it will. Additionally they can sell you defective boards and you likely won’t notice. You will just try flipping the connector over and go on with your life never knowing the hw is defective.

This is by design. It’s a USB 3.2 10 Gbit/s port. It consists of two 5 Gbit/s channels. A compatible device can make use of both simultaneously.

Not true? Check the explanation at https://www.cuidevices.com/blog/usb-type-c-and-3-2-clarified – 10 Gbit/s is a higher rate over a single pair, and you can have 20 Gbit/s over two pairs.

x2 operation (using both lanes simultaneously at 5 or 10 Gbit each) requires the USB host controller to explicitly support it. Newer platforms from both AMD and Intel can combine two single-lane ports into a single dual-lane port for x2 mode, but that’s not relevant to this previous-gen AMD system which cannot combine the lanes.
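The naming in this sub-thread can be pinned down with a small model: USB 3.2 Gen 1 lanes signal at 5 Gbit/s and Gen 2 lanes at 10 Gbit/s, and the x2 modes bond two lanes on one port. The port names and the `x2_capable_ports` set below are hypothetical, and the model assumes, as the comment above says, that bonding only happens when both pair sets of the receptacle reach the same x2-capable controller port.

```python
# Per-lane signaling rate in Gbit/s for each USB 3.2 generation.
LANE_RATE_GBPS = {1: 5, 2: 10}


def usb32_rate(gen: int, lanes: int) -> int:
    """Raw signaling rate of a USB 3.2 Gen {gen}x{lanes} link, in Gbit/s."""
    if gen not in LANE_RATE_GBPS or lanes not in (1, 2):
        raise ValueError(f"no such mode: Gen {gen}x{lanes}")
    return LANE_RATE_GBPS[gen] * lanes


def best_link(gen: int, pair_set_ports: tuple[str, str], x2_capable_ports: set[str]) -> str:
    """Best mode an x2-capable device can reach through one Type-C receptacle.

    pair_set_ports names the (hypothetical) root port wired to pair set 1 and to pair set 2.
    """
    port1, port2 = pair_set_ports
    # Both lanes must terminate on the same port, and that port must support x2.
    lanes = 2 if port1 == port2 and port1 in x2_capable_ports else 1
    return f"USB 3.2 Gen {gen}x{lanes} ({usb32_rate(gen, lanes)} Gbit/s)"


if __name__ == "__main__":
    # Split wiring as in the article: each pair set goes to its own root port.
    print(best_link(1, ("port 13", "port 14"), set()))  # USB 3.2 Gen 1x1 (5 Gbit/s)
```

Under these assumptions, the “two 5 Gbit/s channels” reading would correspond to USB 3.2 Gen 1x2, which the split wiring cannot reach because its two pair sets end on different root ports.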