If you know anything about the design of a CPU, you’ll probably be able to identify that a critical component of all CPUs is the Arithmetic Logic Unit, or ALU. This is a collection of gates that can perform a selection of binary operations and which, depending on the capabilities of the computer, can be a complex component. It’s a surprise then to find that a working CPU can be made with just a single NOR gate, which is what is at the heart of [Dennis Kuschel]’s My4th single board discrete logic computer. It’s the latest in a series of machines from him using the NOR ALU technique, and it replaces hardware complexity with extra software to perform complex operations.
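To get a feel for what "replacing hardware with software" can mean, here is a minimal Python sketch of an 8-bit addition in which every logic decision goes through a single `nor()` function, one bit at a time. It is an illustration only and not the My4th microcode: the helper names (`not_`, `or_`, `and_`, `xor_`, `add8`) are invented for this example, and the shifts and masks are just plumbing to feed bits to the gate.

```python
def nor(a, b):
    """The only 'hardware' primitive: a 1-bit NOR gate."""
    return 1 - (a | b)

# Everything below is "software": other gates expressed as NOR sequences.
def not_(a):
    return nor(a, a)

def or_(a, b):
    return not_(nor(a, b))

def and_(a, b):
    return nor(not_(a), not_(b))

def xor_(a, b):
    # (a OR b) AND NOT (a AND b), rewritten as a single outer NOR
    return nor(and_(a, b), nor(a, b))

def add8(x, y):
    """Add two 8-bit values bit by bit; returns (sum mod 256, carry out)."""
    carry = 0
    result = 0
    for i in range(8):
        a = (x >> i) & 1          # plumbing: pick out bit i of each operand
        b = (y >> i) & 1
        half = xor_(a, b)
        s = xor_(half, carry)     # sum bit of a full adder
        carry = or_(and_(a, b), and_(carry, half))
        result |= s << i
    return result, carry

print(add8(17, 25))    # (42, 0)
print(add8(200, 100))  # (44, 1)
```

Each 8-bit add costs dozens of trips through the gate, which gives a rough idea of why a machine like this trades speed for simplicity.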

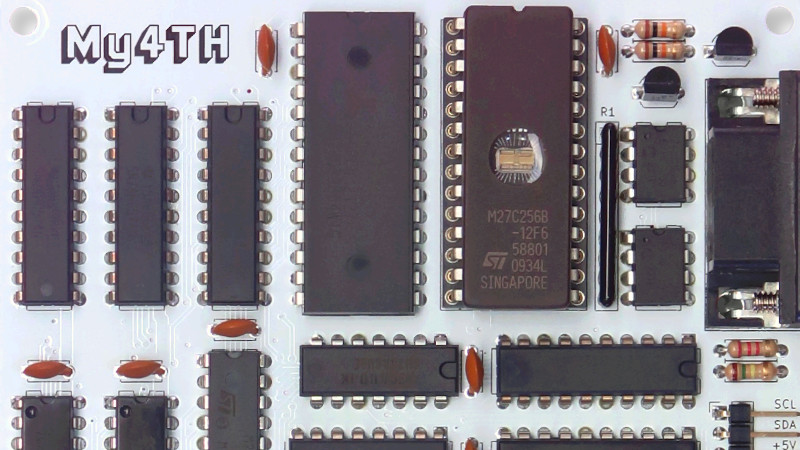

Aside from a refreshingly simple and understandable circuit, it has 32k of RAM and a 32k EPROM, of which about 9k is microcode and the rest program. It’s called My4th because it has a Forth interpreter on board, and it has I2C and digital I/O as well as a serial port for its console.

This will never be a fast computer, but the fact that it computes at all is its charm. In 2023 there are very few machines about that can be understood in their entirety, so this one is rather special even if it’s not the first 1-bit ALU we’ve seen.

Thanks [Ken Boak] for the tip.

“It’s a surprise then to find that a working CPU can be made with just a single NOR gate”

Typo. s/CPU/AlU/

Hmm. Helps I don’t typo it as well! Oh well. You get the idea.

he to the and ts to its.

Nice! The lack of blinky leds seems weird though :)

http://mynor.org/my4th

If you scroll down, you can see the flashing LED …

http://mynor.org/my4th

In the HOW DOES IT WORK, there is a link “The MyNOR ADD instruction” to explain how the ADD works.

Well, as we can see hardware has been replaced by software.

Interesting to see how it actually works.

But to understand 9k of microcode will be quite a task.

Great work though.

Just press your capslock button repeatedly. Or, to see two, capslock and numlock.

Guessing “the” rather than “he”, glad to see the charm of this site is still present after so many years. (:

Ultimate RISC machine?

The ULTIMATE RISC title was taken many years ago

https://homepage.cs.uiowa.edu/~jones/arch/risc/

There it was 1 instruction – not one NOR gate …

We implemented it in a CPLD more than 25 years ago

and I could convince Steave Teal to bring it to life again in FPGA recently.

And now running Forth as well
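For anyone wondering how a machine with one instruction can compute anything, here is a small Python sketch of a move-only machine, in which the single instruction copies a word from one address to another and arithmetic happens as a side effect of writing to special addresses. This is only a toy under assumed conventions: the `ACC` and `ADD` addresses, the 16-bit word size, and the `MoveMachine` class are all invented for illustration and are not taken from the linked design.

```python
ACC = 0x100  # hypothetical address: write loads the accumulator, read returns it
ADD = 0x101  # hypothetical address: write adds the value to the accumulator

class MoveMachine:
    """Toy machine whose only instruction is 'move src -> dst'."""

    def __init__(self, size=256):
        self.ram = [0] * size
        self.acc = 0

    def read(self, addr):
        if addr == ACC:
            return self.acc
        return self.ram[addr]

    def write(self, addr, value):
        if addr == ACC:
            self.acc = value & 0xFFFF
        elif addr == ADD:
            self.acc = (self.acc + value) & 0xFFFF
        else:
            self.ram[addr] = value

    def run(self, program):
        # Every program step is the same instruction: a memory-to-memory move.
        for src, dst in program:
            self.write(dst, self.read(src))

m = MoveMachine()
m.ram[0], m.ram[1] = 2, 3
m.run([(0, ACC), (1, ADD), (ACC, 2)])  # ram[2] = ram[0] + ram[1]
print(m.ram[2])  # 5
```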

One bit processors can actually be useful.

Many, many years ago I worked for Martin Marietta on an infrared video project that used a chipset they were developing called the grid array parallel processor – GAPP.

It consisted of an array of small 1-bit processors, and the idea was that you could load a block of pixels and work on all of them in parallel.

This being the 90’s and chip technology being what it was, 1 bit machines were all we could build.

Still, there were a *lot* of them, so once you got the data on the grid, the actual processing was really fast.

Unfortunately, the overhead of breaking the pixel data into 1 bit streams to load up the array, and then unloading the array back out to get normal video again was just unmanageable.
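A rough Python sketch of the idea, for the curious: the pixels are split into bit planes, the add runs one bit position at a time across every pixel at once, and the planes are then turned back into ordinary pixel values. This is not the GAPP instruction set. The function names are made up, and a Python integer stands in for the grid, with bit i of each plane playing the role of processor i. The two conversion functions are the load and unload overhead described above.

```python
def to_bit_planes(pixels, width=8):
    """Split pixels into `width` planes; bit i of plane k is bit k of pixel i."""
    planes = []
    for k in range(width):
        plane = 0
        for i, p in enumerate(pixels):
            plane |= ((p >> k) & 1) << i
        planes.append(plane)
    return planes

def from_bit_planes(planes, count):
    """Reassemble `count` ordinary pixel values from the bit planes."""
    pixels = []
    for i in range(count):
        value = 0
        for k, plane in enumerate(planes):
            value |= ((plane >> i) & 1) << k
        pixels.append(value)
    return pixels

def add_planes(a_planes, b_planes):
    """Bit-serial add: one step per bit position, all pixels in parallel."""
    carry = 0
    out = []
    for a, b in zip(a_planes, b_planes):
        out.append(a ^ b ^ carry)                 # sum bit for every pixel
        carry = (a & b) | (carry & (a ^ b))       # carry bit for every pixel
    return out  # final carry is dropped, so results wrap modulo 2**width

a = [1, 2, 255, 100]
b = [1, 3, 1, 100]
total = add_planes(to_bit_planes(a), to_bit_planes(b))
print(from_bit_planes(total, len(a)))  # [2, 5, 0, 200]
```

The add itself takes eight steps no matter how many pixels there are, while the two conversions touch every bit of every pixel.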

That is extremely similar to how the Connection Machine CM2 worked – it had 16,384 one-bit processors. From an information theoretic standpoint, the fewer bits wide your machine is the more efficiently it uses power and space – smaller width ALUs increase the odds of a bit changing state on the next instruction and hence conveying more information. Thinking Machines’ compilers did the work to hide the 1-bit nature of the machine and made it look like a “more” conventional machine. More like a conventional machine with a very, very wide vector unit anyway.

I wonder how often these days the top 32 bits of a 64-bit operand are 0x00000000 or 0xFFFFFFFF? I bet a few folks at AMD and Intel could answer that off the top of their heads. :-)

So often that we made the default data width for x86-64 instructions 32 bits, and made acting on the lower 32 bits of a register automatically zero the upper 32 bits. We also encouraged C compiler vendors to keep the ‘int’ data type as 32 bits when targeting our 64-bit instruction set.
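As a small illustration of those two points, here is a Python model of a 64-bit register. The function names are invented for this sketch. `write32` mimics a 32-bit write clearing the upper half, `write16` is included for contrast because 16-bit writes on x86-64 leave the rest of the register alone, and `upper_half_trivial` is the all-zeros or all-ones check asked about above.

```python
MASK64 = 0xFFFFFFFFFFFFFFFF

def write32(old, value):
    """Model of a 32-bit register write: the upper 32 bits become zero."""
    return value & 0xFFFFFFFF

def write16(old, value):
    """Model of a 16-bit register write: bits 16..63 of the old value survive."""
    return (old & MASK64 & ~0xFFFF) | (value & 0xFFFF)

def upper_half_trivial(x):
    """True if the top 32 bits of a 64-bit value are all zeros or all ones."""
    top = (x >> 32) & 0xFFFFFFFF
    return top in (0x00000000, 0xFFFFFFFF)

reg = 0xFFFFFFFFFFFFFFFF
print(hex(write32(reg, 0x12345678)))  # 0x12345678
print(hex(write16(reg, 0x1234)))      # 0xffffffffffff1234
print(upper_half_trivial(5), upper_half_trivial(1 << 40))  # True False
```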

Interesting! Since video output is essentially a serial process, I am wondering why this was so difficult.

I am so old that I remember ENIAC used decimal ring counters.

Both NAND and NOR gates are “Universal” logic gates. Charles Sanders Peirce (during 1880–1881) showed that NOR gates alone (or alternatively NAND gates alone) can be used to reproduce the functions of all the other logic gates…[1]

1. Universal Logic Gates

https://en.wikipedia.org/wiki/Logic_gate#Universal_logic_gates
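The universality claim is easy to check by brute force for two inputs. In this Python sketch each Boolean function of (a, b) is stored as a 4-bit truth table, and NOR is applied repeatedly to everything found so far, starting from just the two inputs, until nothing new turns up. The encoding and the `nor_closure` name are choices made for this example.

```python
A = 0b1100  # truth table of input a over the rows (a,b) = 11, 10, 01, 00
B = 0b1010  # truth table of input b over the same rows

def nor_tt(x, y):
    """NOR applied row by row to two 4-bit truth tables."""
    return ~(x | y) & 0xF

def nor_closure():
    """All functions reachable from a and b using nothing but NOR."""
    found = {A, B}
    while True:
        new = {nor_tt(x, y) for x in found for y in found} - found
        if not new:
            return found
        found |= new

reachable = nor_closure()
print(len(reachable))  # 16, i.e. every possible two-input function
```

AND (`0b1000`), OR (`0b1110`), XOR (`0b0110`) and both constants all appear in the result.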

Something I very much like about this site is the variety of intelligent comments the subject elicits.

One of the best and most complete Forths.