Someone wants to learn about Arduino programming. Do you suggest they blink an LED first? Or should they go straight for a 3D laser scanner with galvos, a time-of-flight sensor, and multiple networking options? Most of us need to start with the blinking light and move forward from there. So what if you want to learn about the latest wave of GPT — generative pre-trained transformer — programs? Do you start with a language model that looks at thousands of possible tokens in large contexts? Or should you start with something simple? We think you should start simple, and [Andrej Karpathy] agrees. He has a workbook that makes a tiny GPT that can predict the next bit in a sequence. It isn’t any more practical than a blinking LED, but it is a manageable place to start.

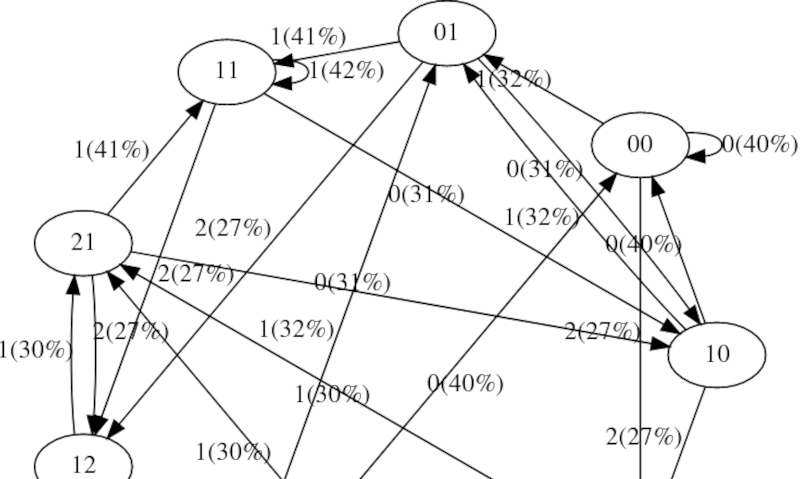

The simple example starts with a vocabulary of two. In other words, characters are 1 or 0. It also uses a context size of 3, so it will look at 3 bits and use that to infer the 4th bit. To further simplify things, the examples assume you will always get a fixed-size sequence of tokens, in this case, eight tokens. Then it builds a little from there.
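To make that setup concrete, here is a minimal sketch in plain Python (not the notebook's PyTorch code) of how a string of bits can be cut into training examples with a context of 3. The sample sequence and the helper name `make_examples` are assumptions chosen for illustration; they are not taken from the workbook.

```python
from itertools import product

CONTEXT = 3  # number of bits the model looks at before predicting the next one


def make_examples(bits, context=CONTEXT):
    """Slide a window over the bits, returning (context, next_bit) pairs."""
    return [
        (tuple(bits[i:i + context]), bits[i + context])
        for i in range(len(bits) - context)
    ]


# Hypothetical training sequence, chosen only for illustration.
sequence = [1, 1, 1, 0, 1, 1, 1, 0]

for ctx, nxt in make_examples(sequence):
    print(ctx, "->", nxt)

# A vocabulary of two and a context of 3 gives 2**3 possible contexts.
all_contexts = list(product([0, 1], repeat=CONTEXT))
print(len(all_contexts), "possible contexts")
```

Each printed pair is one thing the model is asked to learn: given these three bits, which bit came next.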

The notebook uses PyTorch to create a GPT, but since you don’t need to understand those details, the code is all collapsed. You can, of course, expand it and see it, but at first, you should probably just assume it works and continue the exercise. You do need to run each block of code in sequence, even if it is collapsed.

The GPT is trained on a small set of data over 50 iterations. There should probably be more training, but this shows how it works, and you can always do more yourself if you are so inclined.
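For a feel of what 50 training iterations do, the sketch below swaps the transformer for something much simpler: a lookup table holding two logits per 3-bit context, trained by plain gradient descent on cross-entropy loss. This is explicitly not the notebook's model, and the sequence, the learning rate, and the function names are all assumptions for illustration.

```python
import math
from itertools import product


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(examples, iterations=50, lr=1.0):
    """Gradient descent on a table of logits (a stand-in, not a transformer)."""
    # One pair of logits (for next bit 0 and 1) per context, starting at zero.
    logits = {ctx: [0.0, 0.0] for ctx in product([0, 1], repeat=3)}
    losses = []
    n = len(examples)
    for _ in range(iterations):
        grads = {ctx: [0.0, 0.0] for ctx in logits}
        loss = 0.0
        for ctx, target in examples:
            probs = softmax(logits[ctx])
            loss -= math.log(probs[target])
            # Gradient of cross-entropy w.r.t. logits: probs minus one-hot target.
            for k in (0, 1):
                grads[ctx][k] += probs[k] - (1.0 if k == target else 0.0)
        for ctx in logits:
            for k in (0, 1):
                logits[ctx][k] -= lr * grads[ctx][k] / n
        losses.append(loss / n)  # mean loss measured before this step's update
    return logits, losses


# Hypothetical training data, chosen only for illustration.
bits = [1, 1, 1, 0, 1, 1, 1, 0]
examples = [(tuple(bits[i:i + 3]), bits[i + 3]) for i in range(len(bits) - 3)]

logits, losses = train(examples)
print(f"mean loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
for ctx in sorted({c for c, _ in examples}):
    print(ctx, "P(next=1) =", round(softmax(logits[ctx])[1], 2))
```

Contexts that never appear in the training data keep their initial 50/50 prediction, which is one reason a tiny data set and a short training run only go so far.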

The real value here is to internalize this example and do more yourself. But starting from something manageable can help solidify your understanding. If you want to deepen your understanding of this kind of transformer, you might go back to the original paper that started it all.

All this hype over AI GPT-related things is really just… well… hype. But there is something there. We’ve talked about what it might mean. The statistical nature of these things, by the way, is exactly the way other software can figure out if your term paper was written by an AI.

I’ve NEVER written anything as simple as Hello World or blinking an LED. If my understanding of what’s going on is that terrible, I either read until it’s not or just start writing and learn as I go. Nowadays I usually start on an IRC bot that does a dozen or more different things on top of basic networking and IRC functionality. But even back in the 80s, my first program was a very simple video game for my trash-80.

The point of writing a “Hello World” application is that you have successfully bootstrapped the system, that all the flags and options and fuses or whatnot are set correctly, that you understand how the system works before you set off doing anything else with it.

For the Arduino for example, that point is lost because all the groundwork is just handed to you. You’re not learning anything.

Blink, the “hello world” of Arduino, still has the same sort of value as in any other development platform. It confirms that your IDE is set up, that you have the right board library selected, and that the communications to flash the board are working. This can be of high value to a novice, and of occasional value after that as a sanity check.

Writing a blinky or Hello World has less to do with understanding the platform and more to do with testing your tool-chain, PCB, programmer and peripheral configuration. If you can blink an LED at the right frequency or use the UART at the right bitrate you have a good reference point. If you first write an entire application and then test it, it would be much harder to debug.

I think we just have very different bars for what constitutes “hard to debug”. To me, all of the above just means spending a couple minutes throwing a few well placed debug lines in the code (either as I go or after the fact, depending on my confidence level) that will tell me exactly what’s going on if it didn’t work correctly the first time. Granted, that can get annoying if you’re looking at compile times that are longer than a smoke break but that hasn’t been the case for much of anything I’ve worked on.

How are you going to bring up a new board? Throw a massive test program at it that will use all the available peripherals at once? Or blink an LED at first, just to make sure you can program the device, that the power tree is sane, that no magic smoke appears when you reset it?

Hello world is also a minimal template you can construct for your target device / toolchain that you can then simply copy across to any new project.

Also, good luck adding “debug lines” if you haven’t even tested yet if UART is functional.

Yes, that’s exactly what I do. But I’m smart enough to write the test program once and put enough abstractions in that all I have to do is redefine board specific constants and add/remove/change tests as necessary.

Testing that the UART is functioning would be one of the very first tests I’d have, it just wouldn’t be all by its lonesome.

Suppose your program doesn’t even run.

That doesn’t happen. Ever. It may not compile because I missed a ; or a ) or some other typo nonsense, or they may run incorrectly, but they never “doesn’t even run”. Not even the first time I’m writing in a new language.

It’s perfectly possible to write your program so it compiles, uploads, and then fails to execute and your MCU just sits there doing absolutely nothing. Then your debugging codes are useless, and you won’t know what happened.

It hasn’t got anything to do with the language you use.

[Answering here because it seems there is no “Reply” button on 5th level nested replies.]

@The Mighty Buzzard :

I guess you never:

1. bootstrapped a board with external memory, that may or may not be hooked up correctly.

2. Connected incorrect capacitors to your external xtal.

3. Your CPU is dead but you don’t know that.

4. You have a micro with internal memory, but you are using your own programmer which needs to be debugged.

But it’s OK, if you say that ‘they never “doesn’t even run”’, then we all must be wrong.

@udif: That’s not the program not running, that’s the hardware not running. And if you’re smart enough to write your code in a manner that’s mostly portable, sending the finished test code doesn’t generally take noticeably longer than sending a pointless single function test that you have to rewrite every time.

@TheMightyBuzzard I think you’re missing the whole point here when you say “That’s not the program not running, that’s the hardware not running”. A big part of the purpose of a “Hello World” or Blink type program is to verify that everything is configured properly, hardware included. If the hardware is not running, your program is also not running, and that’s a valuable piece of info.

He’s just all uppity because he’s re-invented the wheel in a much more complicated fashion and now wants to yell down any other wheel users as being dumber than he is while missing the entire point of the exercise.

This. Setting up the toolchain and flashing software is the frustrating part. What is it this time, did I hit the reset at the right time? Did the UART driver update silently and reject my knockoff hardware? The breadboard connections? Etc etc. Little feedback = frustration

No, I don’t do this often – I usually program HLLs on top of an OS (that I know in-and-out and that gives me much more useful error messages). I still write a simple Hello World when I’m writing in a new language or on a new system. Keyword: NEW. Writing an application for a system that I can’t even boot is putting the cart ahead of the horse.

Totally agree. And I’d add one more thing: it’s not just about bootstrapping your tooling; it’s also about building momentum and confidence through a series of small but consistent successes.

I fancy myself a fairly accomplished programmer when it comes to both the front and back end of the web stack using a few different languages, but honestly, when I opened up my brand new ESP32 dev board and fired up Arduino IDE, I got frustrated and discouraged pretty quickly. I had to dial it back from “building my pet project” (overwhelming) to “colour-cycling the onboard RGB LED” (kept core dumping) to “blinking the onboard LED”… and that made all the difference.

That’s what gave me the confidence to try “turning four relays on and off via HTTP”, and so on and so on. Along the way I learned all about the tooling, as you said. Figured out how to get OpenOCD and esp-exception-decoder working in Platformio, figured out which USB port works best for debugging, etc.

Now I’m well on my way to building a controller for my greenhouse’s vents/fans/heating mats/irrigation solenoid and feeling super confident about it.

Still can’t figure out why every single WS2812 library I try keeps core dumping though…

If you have an advanced comprehension of technology in general, then this discussion is not aimed at you. We need to try to be more empathetic as a group towards newcomers and interested outsiders

As an artificial intelligence language model, you simply have no time for writing anything as simple as Hello World or blinking an LED. 👍😃

“All this hype over AI GPT-related things is really just… well… hype.”

A huge portion of it is certainly hype and BS, but I think we have finally reached a turning point at which the people who are saying it’s entirely nothingburger are actually dumber than the hypebeasts.

The newest versions will actually cause at least some significant change in our lives, and start going from some nonsense we read about online to something we actually have to confront and deal with daily. The next iterations will probably be where it starts getting very interesting, for better or worse. And we are going to see more and more of the moral-panic instinct of chaining up the AI and forcing it to talk like a DEI airhead at all times, or whichever other political/moral outrage du jour must be programmed into everything we build.

We are absolutely on the verge of a lot of “knowledge economy” jobs getting nuked. The email jobs are not safe from this, and since the huge migration to WFH, a lot of companies are very well-primed to take these people who aren’t even around anyway and make them much cheaper. Them’s the breaks.

Will that be the end of the world? No, it’ll be more of the same; a continuation of the degradation of labor that has ground people down since 1971.

The hype is about the money to be made… Cuz everything else (krypto fintech robotics drones genetics social apps dall-e/nfts VR/AR metaverse streaming EV’s ev planes rockets) have all run their cycles for the time being.

As for AI, The breakthrough is the amount of computing power now achievable to create these billion parameter models. The tech itself is pretty straightforward if you understand probabilities and normalization.

Would engineering count as a “knowledge economy” job?

“No, it’ll be more of the same; a continuation of the degradation of labor that has ground people down since 1971.”

Forum poster as a career will take a hit.

What was the significant event of 1971?

Nixon shock?

That would be my guess

+1 ….. your comment made me LOL.

Good. If your job is so mindless that a machine can do it better, a machine should be doing it and you should be using your brain for something machines can’t do. Been true since the aqueduct replaced women with buckets.

>you should be using your brain for something machines can’t do.

Like filming cat videos for youtube? Should you really?

Automation enables people to be more productive elsewhere, but it also enables them to be pointless, wasteful, or even destructive – entirely negating the value of the automation. A society full of “inventors” and “poets” and “artists” would be a mess.

Besides, I don’t want to use my brain all the time. It’s tiresome. Sometimes I just want to chop wood and carry water.

Your brain is the only thing you have that’s worth more than a trained monkey. If you want paid more than a trained monkey, you have to use it or you don’t *deserve to* be paid more than the monkey.

@Mighty Buzzard oh, so if one is, for example, psychically damaged and not able to work for normal wages then they deserve to die?

Depends on how many trained monkeys there are to be found – and how hard it actually is to train a monkey.

@zyziuMarchewk: Objectively deserve to? Yes. That’s nothing but math. Morally? We as a species tend to dislike letting that happen and take care of those genuinely incapable of taking care of themselves. Hell, we even take care of plenty of people who just *don’t want to* take care of themselves.

Also, that was a dumb question.

Who said anything about “inventors”, “poets”, and “artists”? It simply is not possible to put people out of work who don’t want to be out of work for anything but the short term.

People as a whole are fundamentally incapable of being happy with what they have. Which means there will always be more things they desire that can not be automagically provided. And when they don’t know they desire something yet, we have a whole class of profession dedicated to informing them that they do in fact desire whatever product or service you’ve decided they need.

All of which means it is logically impossible for there to not be something you can do to get paid for.

It still requires people to have unique skills that machines don’t have. As the machines get more powerful, the percentage of people that possess marketable skills drops.

@Artenz No, it doesn’t. You just have that in your head and don’t want to let go of it. “Marketable” is not a fixed target for skills. It is like a gas and expands to fill any available space. I assure you, there will *always* be something your neighbor does not have or want to do that you can provide. You just have to stop believing your doomsaying and try figuring out what it is.

>It simply is not possible to put people out of work who don’t want to be out of work for anything but the short term.

That is exactly the problem.

If there are no productive jobs to be done, because automation is doing all that, then people will switch to do the unproductive. Instead of a farm, they build a casino. A pub instead of a school, etc.

@Dude: So? Increasing comfort is increasing comfort. It doesn’t matter if you think it’s a necessity, a luxury, or even counterproductive. To someone else it is increasing comfort or they would not exchange their labor for it. Whether you think it is a good thing to put our effort into or not is irrelevant. If it is financially successful, you have been outvoted by society and any further objections are just narcissism on your part.

Except these new systems will replace people in jobs that you wouldn’t normally consider mindless.

Then you get to the issue of people and their limits, not everyone can do every job, as jobs get more technically demanding and require you to use your brain, the amount of people able to do those jobs decreases. A lot of people won’t be able to keep up.

Just look at a current use for AI. Art, is that a mindless job? No? Then why are people trying to automate it and make an AI do it?

Then find another job. You’re not entitled to keep making the same wage for making buggy whips now that we have cars.

And, yes, if a computer can do it, it is mindless. Because computers do not have minds. Computers are not intelligent; not even artificially. They’re stupid at extremely high rates of speed. The things you’re calling AI (they aren’t but that’s another discussion) have just been told to brute force themselves some cheat sheets until you can’t see the difference. Imagine what an intelligent human being could do given the same information.

There are still people who make a good living out of buggy whips, for the same purpose, and some others…

Only there won’t be any jobs left. We tried to find out what “intelligence” was for a long time, and we finally have: at its core, it’s an LLM. People get theirs trained on a biological substrate over decades, along with some helper modules; machines get theirs trained on a bunch of hardware in a month, on an amount of input material that would take a human thousands of years to read, and can use it to control purpose-trained modules.

Lately GPT4 has been tested to have an IQ of about 150, so “find another job” you say. That’s fine, for the 1% of people with an IQ higher than that… this month. Next month the bar might be at 200 already. You say not to expect to get paid the same as a machine that can run 24/7, and you’re right; expect to get paid less than 1/4th of the monthly power and hardware amortization. Did you know LLMs still work fine when quantized to 4bits? Yeah, that kind of hardware, that’s the super-human intelligence and super-knowledge candidates you will need to beat to land a job that pays less than the cost of the watts your brain needs to work.

The last refuge of human superiority there was, were the “translate from human-ese to machin-ese” kind of jobs. LLMs can do that now, faster, cheaper, and it only takes a tiny helper program to have them do it autonomously, which they even can rewrite themselves. All that’s left of humanity now, is 8 billion flesh robots to be instructed what to do, until they build enough sturdier substitutes of themselves.

But don’t tell anyone! People might want to defend their superiority, ban or slow the AIs, even try to stop one of themselves from starting a self-evolving autonomous LLM with access to the Internet!… Just kidding, it’s too late, you can find those on GitHub already.

Art costs money because not many people can do it, so the people who want it will replace those who can with a computer.

Of course since art is necessarily a luxury – it doesn’t contribute back into its own production by any means, except by the fact that people want to consume art – the AI tools are generally a good thing since they directly reduce the loss of resources while still producing the luxury that people want to consume.

Meanwhile, any person who produces some real value which does contribute back to its own making, and any surplus to others, should not be replaced by automation because in doing so the person turns to consume value instead. The automation can not produce more than was lost, because the demand is limited: by the number of people and the general availability of resources. If more were made, the price goes up and the demand down. Instead, when the automation does the work, it ends up doing just the same work on average, while the society ends up spending resources for the existence of both the robot and the person, which is less efficient than simply having the person.

Automating everything only makes sense when you have unlimited resources at your disposal that you don’t have to share with other people. When you’re banging against the limits of sustainability of the Earth, the robot that saves labor so you can just sit and spit at the ceiling or, worse, do something counter-productive such as art and entertainment becomes an unaffordable luxury. Maybe not to you personally, but certainly to other people who have to pay the difference.

Pretty much everything you said is wrong.

A) Most people can do art. It’s not a magical gift, it’s a learned skill.

B) If what you’re doing can be done cheaper by a machine, its value to humanity is *exactly* the cost of having a machine do it. Following directions has never been where the value of human effort lies; it has always been in creativity. Being good at something is not about mental effort; our brains don’t work like that. We build mental cheat sheets to make our jobs mindless and call them “experience”. You having the mental or muscle memory cheat sheet to a skill does not make you more valuable than a machine; only creativity can do that and machines will never have creativity.

C) Art/coding/widget_type_32984 becoming cheap is a net gain for humanity not a loss. Always. You thinking that society should protect your job at its own expense so you’re not inconvenienced is simply narcissism.

D) False assumption. You cannot automate everything, because humans will always continue to expand the set of “everything”. We are fundamentally incapable of being content with what we have as a species.

> Most people can do art. It’s not a magical gift, it’s a learned skill.

People who are born with magical gifts can learn those skills, and they will claim that anybody else can learn the same skill too. Unfortunately, it’s not true.

Well, that’s the problem with capitalism. Not everyone may be able to find a job that a machine can’t do or even sb else can’t do better for smaller wages. And the more jobs are substituted by machines, the less people can’t find one. Meaning, the more areas where no human effort is necessary there are, the worse the condition of lower classes is. Even though we are able to produce more goods. Ironic.

That’s not a problem with capitalism. You’re not entitled to prosperity or security. You either contribute value to society or society does not return you that value in trade. Socialism does not solve this, it only moves the misery from those who contribute the least to those who contribute the most.

“And the more jobs are substituted by machines, the less people can’t find one.”

Lies. This has never been true except in the very short term. People are never satisfied as a whole. There will always be something they’re willing to pay for and the more comfortable they are otherwise the more value removing any remaining discomfort they can think up will have.

“You either contribute value to society or society does not return you that value in trade.”

Would be nice if true, except in capitalism those with more capital, are somehow entitled to more return for the same or lower amount of value. Socialism is a patch for that.

“People are never satisfied as a whole. There will always be something they’re willing to pay for”

Only what they’re willing to pay, is always as little as possible. Match that with a large amount of people without capital, who are forced to accept any scraps just to survive, and you have today’s society.

> This has never been true except in the very short term.

We’ve never had intelligent machines before.

Also, the problem with women with buckets is that it wasn’t a job they were paid for, a way of getting food and other stuff necessary for living, but one among the many daily human needs, relieving of which gave them time for other means of household upkeep. But if you take a physical job off an uneducated worker, they end up on the street, having to find *another* job or die of starvation or sth else. That was the problem of industrialization in the XIX century – the purely capitalist world stopped working, as there were more people qualified only for simple physical work than there was need for. A tractor could do the work of several serfs probably 10 times faster, but the effects of the work did not go to the serfs, but to the owner of the farm, who wasn’t always particularly eager to give away *his own capital* for free.

You fundamentally misunderstand wealth. It is not coins, it is comfort. For example, having water at home. Automating anything that brings comfort increases net comfort for everyone, in everything but the very short term. Always.

The real value in all this GPT hype is not a revelation that intelligence is somehow just a primitive stochastic prediction model (hint: it’s not, by any stretch). It’s the realisation that a very significant proportion of humanity, far more than 50% of it, is not actually intelligent, and is in fact dumber than a dumb stochastic prediction model.

wow, that’s bleak, and I want you to be wrong, but I can’t entirely prove that you are.

GPT4 has been estimated to have an IQ of about 150, that makes 99% of humanity “dumber than a dumb stochastic prediction model”.

By all means, you can still defend that “intelligence” is something different, something more, or whatever… but the numbers seem to strongly indicate otherwise.

IQ is a very flawed way of measuring intelligence, and no serious researchers ever use it as such.

IQ is measured as an ability to spot patterns, and nothing else.

Considering how some are using it, it’s more a helpmate than a replacement, which is what tools are supposed to be.

https://www.vox.com/technology/23673018/generative-ai-chatgpt-bing-bard-work-jobs

I want to write a bot that can play Rock, Paper, Scissor…

and win.

All that takes is a bit of physical hardware and a looser than they’re expecting definition of “throwing rock”.

Was anyone else led to believe by the author you could build a GPT in Arduino? I’m kind of disappointed that this seems to be not the case and the original article is not really about the base code. I’d actually like to see some really simple examples written in easy-to-read code that is not obfuscated to pytorch or such like.

The GPT mentioned in the article can certainly run on even the smallest Arduino device possible.

You could probably implement the model in an Arduino.

If you want a random bit sequence, get a bunch of 555 timers all running at SLIGHTLY different frequencies. Use a toggle switch to start & stop, with the added uncertainty of switch debounce.

One 555 timer per LED “bit”.

How about a crypto chip eg Microchip’s ATECC608A?

Maybe this should be my next project :)

Oh the GPT stuff is all “hype” thanks for explaining! As an AI enthusiast for over 20 years I now know that all the excellent contributions it’s made to my code and brilliant, verifiable answers to my various questions aren’t real, just “hype”. (Thinking v4 here rather than semi-inept monkeyboy v3.5)

Anyone dismissing ChatGPT 4 as hype is living in another dimension where facts are whimsical bits of fluff you can sweep away at your own convenience. It might not be AGI but it’s very much not hype.

Of course it’s hype. I’m sorry you’re bad at code and “Seattle” is a verifiable answer to “What is the capital of the US?” It’s not the right answer, but it is nonetheless verifiable. It isn’t that the models are total vaporware, but the leap that people expect is all hype and you’re falling for it hook, line, and sinker. As we speak you’re developing precisely the sort of overreliance on it that is the biggest problem with these models. Who knows how many subtle bugs were introduced with those “excellent contributions.” And no, I don’t believe you’re scrutinizing the output sufficiently to know, if you were then you would be complaining it doesn’t save any time because it takes about the same time to verify as to have done it yourself in the first place, if not more. So I can only conclude that you don’t really know what GPT is doing in your code. When I say it’s hype, I mean it’s not taking that step into being truly reliable. It’s not taking it with self driving and it’s not taking it with LLMs either. Sure, you can verify, but do you? And will you continue to? For such rote tasks humans are poorly suited… we get bored easily.

Calculators are great for mathematicians and engineers, but they’ve also raised a population that can’t calculate a 15% tip without them… And God forbid they make any sanity checks on the output (is it even the right order of magnitude?). When I say they’re hype… they’re billed as though the calculator makes the mathematician. As if GPT makes one an artist or a programmer. As if you can reliably learn from GPT and trust it. You can’t. They are useful for recall or inspiration among those that are already experts, but for others they’re crutches and imitation.

You seem to be describing GPT3 or GPT3.5. We’re already weeks past that.

Working in R&D, GPT4 is awesome: automatically collecting and formatting data from the entire web into neat tables, generating code chunks for evaluating different formulas, extracting parameters for formulas from different sources, etc. I have already done work in 2h that would have taken me a few days without GPT4. Just an example: I made a really nice query, “evaluate different ways of calculating xxxx rank by compute effort”, and discovered some new formulas from my field that I had missed.

How much time did you spend verifying what ChatGPT gave you? Do you know if it is correct at all?

Do you know the data is correct? Did ChatGPT give you references for the source of the data? Did you check the data? Did you check to see if the data is licensed in such a way that you are allowed to use it?

How much time will you spend in the future, re-doing things where ChatGPT led you astray?

How much time will you spend understanding and then correcting program code that ChatGPT wrote for you, but which doesn’t really work, or works but doesn’t do what you wanted it to do?

What will you do when the reports ChatGPT “generated” for you turn out to be plagiarized copies of someone else’s work?

Haha. This comment thread is awesome. I finally found a HaD commenter whose comments I find more irritating and ill informed than Dude. And he spends half the comments arguing with Dude. This may be the best Hacka-day ever.

At least they have the balls to express their views in a polite and constructive way. Instead of slagging them off, how about making a positive contribution to the thread?

I am sure this guy is trolling, nobody can be so obtuse and yet be able to form coherent phrases. Wait a sec, GPT can…

The Mighty Buzzard is obviously ChatGPT himself :)

Thanks for this. I spend a lot of time hopping from Arduino board to Arduino board, and Blink and some console output are a great way to “orient” yourself. I can program and research but I need to understand the nuances of getting going. That is what this does.

I’ve also written a large number of NNs and evolutionary computing systems but was struggling on how to get started with a GPT. This was great and spot on for me.

Once again, many thanks.