[Ramin Hasani] and colleague [Mathias Lechner] have been working with a new type of artificial neural network called a liquid neural network, and presented some of their exciting results at a recent TEDxMIT.

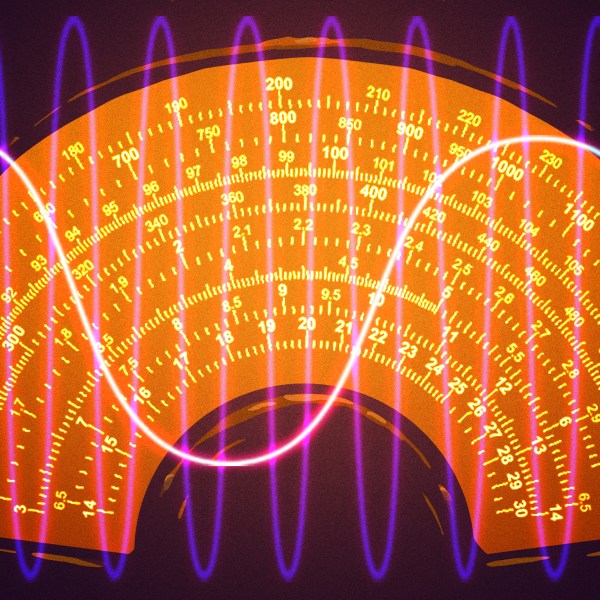

Liquid neural networks are inspired by biological neurons and implement algorithms that remain adaptable even after training. [Hasani] demonstrates a machine vision system that uses a liquid neural network to steer a car for lane keeping. The system performs quite well using only 19 neurons, far fewer than the large models we've come to expect from intelligent systems. Furthermore, an attention map shows that the system seems to attend to particular aspects of the visual field, much as a human driver would.

A liquid neural network can implement synaptic weights using nonlinear probabilities instead of simple scalar values. The synaptic connections and response times can adapt based on sensory inputs to more flexibly react to perturbations in the natural environment.
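To make "adaptable response times" a bit more concrete, here is a minimal sketch based on the liquid time-constant (LTC) formulation that Hasani, Lechner and colleagues have published. Each neuron's state follows dx/dt = -(1/τ + f)·x + f·A, where f is a bounded nonlinearity of the inputs and the current state. Because f sits in the decay term, a neuron's effective time constant, τ/(1 + τ·f), shifts with whatever it is sensing. We're assuming this formulation; it is not necessarily the exact model shown in the talk, and the class name `LTCCell`, the layer sizes, the random untrained weights and the sigmoid choice for f are all hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class LTCCell:
    """Toy liquid time-constant (LTC) cell with random, untrained weights.

    Hypothetical sketch: sizes, weight scales and the sigmoid used for f
    are illustrative assumptions, not the model from the talk.
    """

    def __init__(self, n_inputs, n_neurons, seed=0):
        rng = np.random.default_rng(seed)
        self.W_in = rng.normal(0.0, 0.5, (n_neurons, n_inputs))    # sensory -> neuron
        self.W_rec = rng.normal(0.0, 0.5, (n_neurons, n_neurons))  # neuron -> neuron
        self.b = np.zeros(n_neurons)
        self.tau = np.ones(n_neurons)  # base time constant of each neuron
        self.A = np.ones(n_neurons)    # state that f pulls each neuron toward

    def _f(self, x, I):
        # Bounded, input-dependent "synaptic" nonlinearity with values in (0, 1).
        return sigmoid(self.W_in @ I + self.W_rec @ x + self.b)

    def step(self, x, I, dt=0.1):
        # One fused (semi-implicit Euler) step of
        #   dx/dt = -(1/tau + f) * x + f * A
        f = self._f(x, I)
        return (x + dt * f * self.A) / (1.0 + dt * (1.0 / self.tau + f))

    def effective_tau(self, x, I):
        # Decay rate is 1/tau + f, so the observed time constant is
        # tau / (1 + tau * f): it changes with the input.
        return self.tau / (1.0 + self.tau * self._f(x, I))


# Usage: 19 neurons driven by a hypothetical 32-feature sensory vector.
cell = LTCCell(n_inputs=32, n_neurons=19)
rng = np.random.default_rng(1)
x = np.zeros(19)
for _ in range(50):
    x = cell.step(x, rng.normal(size=32))

calm, jolt = np.zeros(32), 3.0 * np.ones(32)
print(cell.effective_tau(x, calm)[:3])
print(cell.effective_tau(x, jolt)[:3])
```

The two printed rows differ because the same neurons respond on different timescales to a quiet input and a sudden one, which is the "liquid" behavior in miniature. The fused update is used instead of a plain Euler step because it keeps the state bounded (here between 0 and A) even with a fairly large step size.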

We should probably expect the operational gap between biological and artificial neural networks to continue to close and blur. We've previously covered wetware examples of building neural networks from actual neurons, as well as ever-advancing brain-computer interfaces.

How do you watch 1 million pixels with 19 neurons?

Many in parallel.

That’s what I imagine, yes, but then it would be more appropriate to tell us how many neurons in total are needed to decode the whole sensor input, and how many a more traditional design would need at the same camera resolution.

What do you mean? Decode?

There’s a map at 7:50. Also, MIT has a more scientific article!

If you really wanna know, you’d have to study the same worms, I guess😁

Each neuron can have anywhere from a few hundred to several thousand synapses. The average number of synapses per neuron in the human brain is estimated to be around 7,000.

I was expecting liquids, à la the T-1000 from Terminator.

Or a hydraulic analog computer. Something modeling a neural network with pressure and flow rates.

Can you possibly elaborate on the benefits of that? Aren’t you just using something less efficient than a transistor?

19 Neurons for lane keeping is just clickbait.

All the heavy lifting is done in the perception layers: the three convolutional layers and the condensed sensory neurons.

“The system performs quite well using only 19 neurons…”

Well that’s more neurons than most drivers use.

This time-based approach looks like it will help with real world problems. Currently, we seem to be doing things in a stateless manner, like object detection based on a single frame instead of based on video. The future is looking interesting. :)

The system performs quite well using only 19 neurons…

Are they now saying a worm can drive a car?!

I do not get it.

Are the neurons organic and are they specialised for certain ‘jobs’?