Recently the [Global Science Network] released a video showing an artificial brain controlling an RC truck.

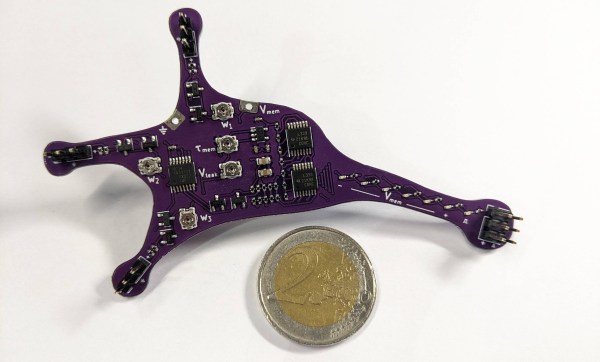

The video shows a neural network of eight artificial neurons, assembled on breadboards, controlling a fully autonomous toy truck. The truck carries four proximity sensors: one front, one front left, one front right, and one rear. Its sensor readings are transmitted to the artificial brain, which decides which way to turn and whether to drive forward or backward. The inputs to each neuron, its “synapses”, can be excitatory, increasing the neuron’s firing rate, or inhibitory, decreasing it. The output commands are sent back to the truck wirelessly through a hacked remote control.

This kind of network is called a Spiking Neural Network (SNN). Instead of continuous real-valued activations, it uses discrete events called “spikes”, so when a neuron fires matters as well as the strength of the signal. Other videos on the GSN channel go into these topics in more depth.
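The post doesn’t describe the neuron circuits themselves, so as a rough software analogue here is a minimal leaky integrate-and-fire (LIF) neuron in Python. LIF is a common textbook spiking-neuron model, not necessarily what the GSN breadboards implement, and every parameter below (`tau`, `v_thresh`, `bias`, the weights, and the `simulate_lif` helper itself) is a hypothetical, illustrative choice. The sketch shows both ideas from the paragraphs above: signed synaptic weights raise or lower the firing rate, and because the membrane potential leaks, two inputs only push the neuron over threshold if they arrive close together in time.

```python
# A minimal leaky integrate-and-fire (LIF) neuron. All parameter values are
# illustrative assumptions, not measurements from the GSN hardware.


def simulate_lif(input_spikes, weights, steps, tau=20.0, dt=1.0,
                 v_thresh=1.0, v_reset=0.0, bias=0.06):
    """Return the list of time steps at which the neuron fires.

    input_spikes: one set per synapse, holding the steps at which that
                  presynaptic input spikes.
    weights:      one signed weight per synapse; positive is excitatory,
                  negative is inhibitory.
    bias:         constant drive (a hypothetical tonic input) that makes the
                  neuron fire slowly on its own.
    """
    decay = 1.0 - dt / tau  # forward-Euler leak toward 0 each step
    v = v_reset             # membrane potential
    fired = []
    for t in range(steps):
        v = v * decay + bias
        for spikes, w in zip(input_spikes, weights):
            if t in spikes:
                v += w      # each presynaptic spike nudges v up or down
        if v >= v_thresh:   # threshold crossed: emit a spike and reset
            fired.append(t)
            v = v_reset
    return fired


if __name__ == "__main__":
    steps = 200
    train = set(range(0, steps, 5))  # presynaptic spike every 5th step

    # Firing rate: excitation speeds the neuron up, inhibition slows or
    # silences it.
    baseline = simulate_lif([], [], steps)
    excited = simulate_lif([train], [0.3], steps)
    inhibited = simulate_lif([train], [-0.3], steps)
    print(len(baseline), len(excited), len(inhibited))

    # Timing: two 0.6 inputs cross the 1.0 threshold only if they coincide.
    together = simulate_lif([{10}, {10}], [0.6, 0.6], 60, bias=0.0)
    apart = simulate_lif([{10}, {40}], [0.6, 0.6], 60, bias=0.0)
    print(together, apart)  # prints [10] []
```

The leak is what makes timing matter here: by the time the second input arrives 30 steps later, most of the first input’s contribution has decayed away, so the same total input produces no spike.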

The name of this experimental vehicle is the GSN SNN 4-8-24-2 Autonomous Vehicle, which is short for Global Science Network Spiking Neural Network, 4 Inputs, 8 Neurons, 24 Synapses, 2 Degrees of Freedom Output. The circuitry on both the vehicle and the breadboards is littered with LEDs that give some insight into how it all works.

If you’re interested in how neural networks can control behavior, you might like to see a digital squid’s behavior shaped by a neural network.


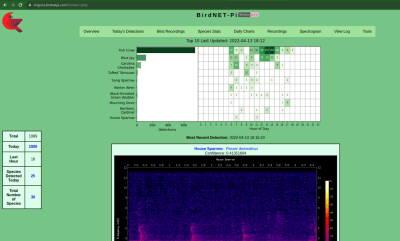

About that Raspberry Pi version! There’s a sister project called
