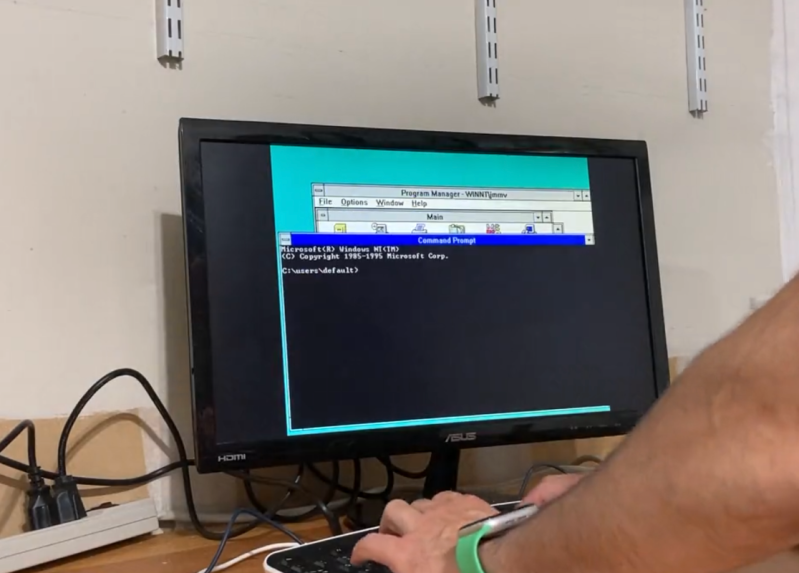

[Julio] has an older computer sitting on a desk, and recorded a quick video with it showing how fast this computer can do seemingly simple things, like open default Windows applications including the command prompt and Notepad. Compared to his modern laptop, which seems to struggle with even these basic tasks despite its impressive modern hardware, the antique machine seems like a speed demon. His videos set off a huge debate about why it seems that modern personal computers often appear slower than machines of the past.

After going through plenty of plausible scenarios for what is causing the slowdown, [Julio] seems to settle on a nuanced point regarding abstraction. Plenty of application developers are attempting to minimize the amount of development time for their programs while maximizing the number of platforms they run on, which often involves using a compatibility layer, which abstracts the software away from the hardware and increases the overhead needed to run programs. Things like this are possible thanks to the amount of computing power of modern machines, but not without a slight cost of higher latency. For applications developed natively, the response times would be expected to be quite good, but fewer applications are developed natively now including things that might seem like they otherwise would be. Notepad, for example, is now based on UWP.

While there are plenty of plausible reasons for these slowdowns in apparent speed, it’s likely a combination of many things; death by a thousand cuts. Contributors include desktop applications built with a browser compatibility layer, software companies that reduce their own costs by perhaps not abiding by best programming practices or by simply leaning on modern computing power, and of course the fact that modern software often needs more hardware resources to run safely and securely than equivalents from the past.

I cannot agree more.

I have an elderly Lenovo laptop that I never turn off because it sometimes takes over an hour to reboot.

Switching between applications can take tens of seconds.

Perhaps it is RAM limited or the hard drive is severely fragged.

But I suspect that it is down to the relentless “improvements” in modern software.

While I do agree that abstraction has a penalty there is also always the “weakest link” in the equation.

You can get cheap SSDs now for under $100. Then, re-paste the CPU and probably buy a fresh battery that’s compatible with the laptop. After all of that your 1 hour boot probably goes down significantly.

Also a re-install or running the several suites of disk clean/maintenance tools can always help.

Maybe instead of running maintenance tools or reinstalling Windows you could get a proper operating system which doesn’t require this. I’ve been running and upgrading the same Ubuntu install for almost 10 years now; during that period I completely changed the hardware platform twice, and twice swapped the SSD for a bigger one, moving my OS with a sector copy of the old one. Never needed to do a so-called ‘fresh install’.

This could also save you from replacing CPU paste, because in a world without telemetry, when you do nothing your computer does nothing, so the CPU is not subject to constant frying at 100% usage.

Stop shilling for Linux. As a Linux user I am offended at how stupid you make the rest of us look.

He’s not wrong, though. I’ve seen multiple instances of Windows installs slowly grinding themselves to a halt over time, requiring a reinstall from scratch to restore usability. I’ve never seen that behavior on a Linux box (or a MacOS/X box either, for that matter).

@Jeremy I haven’t used Linux (as a desktop os) in about 4 years, but it always felt like if I didn’t just install each big version jump as a fresh install then I would get all sorts of messed up packages and driver issues etc.

Since I had /home partitioned it was relatively painless but I haven’t had that experience with Windows.

I’m not saying either of our anecdotes speak to the whole but I certainly have had Linux issues.

Everyone would use Linux if it wasn’t so complicated to install even simple software. Also Linux does not have that fresh feeling that Windows has. I feel bored and somewhat confused when using Linux. Don’t feel that way with windows.

Between the suggestions of “try a different brand of OS” and “occasionally re-paste your laptop’s CPU and swap SSD/battery”, it’s the former you find absurd/offensive/stupid?

@Jeremy

Linux has the exact same problems as Windows: bloat, dependency hell, slow apps, installations that corrupt the system, driver issues. I’ve had more issues with Linux than Windows. But both need periodic reinstalls and need some tweaks.

Actually, one of the big problems that slows down Windows is the Registry, the other is legacy DLLs. The problem is that it keeps growing and has a large number of abandoned entries. In addition to this, there is an ever-growing number of legacy DLLs that get loaded upon boot. Fragmentation used to be an issue but I think they finally fixed NTFS but it could just be that SSDs made the problem invisible. Back in XP days, I worked for a company that wrote a Windows optimization tool, so I’m not just inventing this stuff.

And Ubuntu of all flavors?

I’ve been a relatively hardcore Linux programmer for 23 years… and I use Windows on my laptop. Mostly for simplicity, but mainly because I hate Openoffice, Gimp and half-baked, poorly maintained software that claims to be “as good”. Period.

Btw..Whoever still claims “LaTex is cool” can comb his pubes

I wouldn’t run a server in windows. But let’s be honest : laptops/desktops are just a hassle to run in Linux. All you need is Putty, Notepad++, Office and WinSCP. Maybe even VSCode if you like colors.

@keredson

Reinstalling the OS is sloppy design of said OS.

Cleaning your hardware, a physical object that good old time will corrode and that can’t fix itself, is maintenance.

Use linux, you’ll be amazed how fast the system will perform compared to windows. The os is sluggish and very inefficient, always has been like that, nothing that microsoft ever released has been anything but horribly mediocre. But that is a well known fact.

I use both Windows and Linux daily. Windows is very slow to start. However I can’t compare them fairly because all my linuxes have SSDs, either as only storage, or just for non-/home, and company windows machine is HDD only. Linux is a rolling release and I update daily. I rarely have problems with it, occasionally there is some dependency clash, but it is usually an update timing problem which resolves itself the same day.

But for me it was never about performance anyway. Freedom just feels right for my values.

You don’t need to run maintenance tools or reinstall Windows either, so let’s stop pretending that’s a thing except for lazy/dumb IT people or for the wannabees who comment and don’t have a clue.

I’ve reinstalled Windows exactly 2x in the last 20 years to “fix” an issue with it. Once was simply because it took less time than the actual fix would take and I didn’t want to waste the client’s money.

Another time was because I was under a deadline and needed the machine back ASAP. I took a drive image and eventually figured out the actual fix because I try not to be a hack professional.

But Windows certainly doesn’t “require” reinstalls, if anything it’s gotten more resilient over time. You can upgrade a Win7 PC these days directly to Win10/11 without losing any data or breaking a sweat. Modern Windows barely cares what hardware it’s on or what the previous MS OS was. If you want to gripe about something in Windows, there is plenty to choose from but this area isn’t one of them.

You are right. Even windows does not need regular reinstallation. Except when there was a new large update or a new version (irritating)

Still. I was working in helpdesk of a large company for over 20 years and there were times when re-installation was the only way.

It does require installs. Case in point is my upgrade from 9900K to 13600K. The old install worked mind you and a normal user wouldn’t notice much difference but there was something off… I kept seeing 110w on idle and games were still a bit microstuttery at times. Process Lasso helped with the stutter but power consumption was still higher than I’d have liked it to be… and yes there was no malware, I have a comprehensive security suite. Reinstalling Windows made all the jitters go away and now my PC is idling at 60-70w. So yes reinstalling Windows is kinda needed at times… especially when there are architecture shifts in your hardware.

I had a Windows 95a install that I kept through multiple hardware upgrades and (IIRC) five complete system changes. There was a specific procedure involving putting both drives into another PC or booting from a live OS CD-R. Too long to detail here.

It died coincidentally with my switching of the critical stop sound. No idea what glitched at that precise moment. What made it really bad was I used a very authentic sounding breaking glass noise.

What it would do is in Windows Explorer I could make exactly three clicks on things with the left mouse button and it’d hard crash with the breaking glass sound. Made it impossible to move or copy or do much of anything in Explorer. IIRC it would also crash if I clicked three icons on the Desktop.

Fortunately I was able to use a web browser without Windows crashing. I found the last, unfinished, release of Total Commander for Windows and was able to use it to copy off everything I wanted to save so I could wipe the drive and do a fresh install. The experience made me really miss XTREE. (Sit me in front of a DOS box with XTREE now and I’m certain I’d have no idea how to run it.)

What was the problem? ONE corrupted character in one Registry key. DOS mode Regedit registry export and re-import would choke on the corrupted character. Must have given me some idea of where it was stopping so I could find it in GUI Regedit.

I tried every Registry fixer and cleaner and method of forcibly deleting the bad key but Windows protected it like a mother wolf guarding a dead pup.

Windows 9x was also good about protecting corrupted files and folders from being deleted. Unlocker was a very welcome tool that could pry Windows hands off those and nuke them. Before Unlocker (which hasn’t had an update in forever but still works on all Windows from 95 through 10) the only way to get rid of those was wiping the drive. Couldn’t get rid of them with any DOS mode tools. Totally the opposite of how Windows should have acted. The response should have been “This file/folder is corrupted and completely unreadable and unrecoverable. Would you like to delete it?”

This seems to have descended into a flame war, but I am just curious, not having been an IT guy since about 2007 (and lazy/dumb being a fair description of my approach at the time): what do you do about the hard drive slowly filling up over time from Windows updates, orphaned install files, etc.? Where do the slowdowns that Windows is known for come from generally, and how do you fix them?

Seph – We are talking about Windows reinstalls to fix a problem, not because you changed the underlying hardware and just expected it to work. Does it often work just fine? Sure. Should you expect your OS install to be portable across disparate hardware or major upgrades? Probably not.

And your situation should have been fixable with some benchmarking tools and a look into system processes (among other things). But yes, sometimes a reinstall is the way to go if you don’t need the data, don’t mind not knowing the underlying issue (this is my annoyance point), and don’t have a lot of time.

Yep… I spent hours trying to fix it… When instead I could have had it all back up and running within 30-40 minutes (with all my softwares and games).

Sometimes it really is just more time efficient to reformat and reinstall rather than waste hours.

Steve – Windows has built-in disk cleanup tools you can call to deal with files that are no longer needed at both the user and system levels, which includes update files, optimization files, etc. In addition you can use tools like TreeSize Free, Spacemonger, Wiztree, etc. to find non-Windows files that are taking up space, where you can personally decide whether they are necessary or not.

A drive that’s filling up shouldn’t affect performance unless the drive is nearly COMPLETELY full, in which case it’s time for a larger one unless you don’t need some of that data, and at this point everyone should be using an SSD of some sort so it’s not like data being stored on the inside tracks as opposed to the outside ones is really a concern anymore or affecting the performance of the underlying hardware.

Windows has been around long enough and is popular enough that, between the built-in diagnostic tools and some trusted popular third-party ones, it’s pretty easy to diagnose issues without resorting to a fresh install. Malware attacks are different obviously, as it is hard to trust any OS install after an invasive malware attack, especially for sensitive data.

Seph – 100% agree with you that a reinstall can be more time efficient. I just hate doing it because then I’ll likely never know the underlying issue and/or be able to help someone else in the future. For those of us not in the biz (or not as stubborn), it probably doesn’t matter though.

Win10 is exceptionally good (normally) at moving to disparate hardware. I’ve imaged older AMD machines to modern Intel rigs just to see how bad it would be as a test, and for the most part it works just fine even when going from an ancient SATA HDD installation on AMD hardware to a newer NVMe rig with an Intel. Certainly not best-practice, but considering how inflexible older Windows OSes were with stuff like this…. pretty neat!

Oh yeah that’s amazing how it just reconfigures itself now. I remember having to reinstall windows when upgrading processors back in Pentium 4 days 🤔.

Seph – Same. Win98 SE and XP had no interest in going from a Slot 1 PIII to a socket 478 (I think?) P4 without a fight, haha.

Windows 11 was my call to make the move to Linux. I run older quad core rigs with SSDs but this was not good enough for the new MS OS. KDE Neon runs beautifully, looks a lot like Windows, and is easy to maintain. Never looked back.

Nobody but server admins will use Ubuntu, it’s not user friendly

I’ll fix that: “you can now get cheap 2TB SSD for under $80.”

I will sit here and tell him with a straight face that an E5 1650v2 is not comparable at all to modern hardware. It’s great, I’ve built a half dozen of them. But Skylake is a lot quicker and an Alder lake is another ball game. i3 12100T is going to whup your 1650v2 into next week.

I am serious. Also please tell me you are running an NVMe drive on the E5 platform.

I also want to ask him did he run ‘bloatynosy’ or ‘thisiswin11’?

I’ve tried 10 LTSC and its wonderful. (I ran 2000 for years, and 2000 theme on Win7 for many years after that even, it’s great)

Buddy you’re still using an HDD? Do yourself a favor and install an SSD (even an old one) in that thing and it will feel brand new.

You’re totally missing the point. SSDs are awesome, yes, but software should NOT expect them to be standard. SSDs are not supposed to compensate for bad performing OSes and applications, get it? 😃👍

i think you’re missing the point, that hard drives are finished for personal computers. get over it.

‘If you have nothing meaningful to say, please do the world a favor and keep quiet. Thanks for your understanding.’

Joshua snarks without a hint of self awareness.

Should modern OS’s also work well with drum memory? Eight inch floppies? 4k of RAM? Punch card readers?

Nah, when it comes to storing large swaths of data HDD’s are still king, unless SSD’s get significantly cheaper. For $124.99 I can get a 7200rpm 6tb WD Black w/ 256mb of cache & a 2tb Silicon Power A55 for $64.97; so for roughly $130 I can get either a 6tb HDD that performs ok, or 4tb of SSD that takes up two sata ports; I think it’s a no-brainer what one should choose, seeing as not everyone can shell out hundreds upon hundreds for a decently sized SSD.

I think the main point is that newer versions of the same software now run slower on SSDs than their older versions did on HDDs. Software should take advantage of SSDs, but not to the point they use SSDs as RAM and actually run slower than before.
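To put the price comparison above in cost-per-terabyte terms, here is a minimal sketch. The prices and capacities are the ones quoted in the comment; the function name is made up for illustration.

```python
def cost_per_tb(price_usd: float, capacity_tb: float) -> float:
    """Return the price in dollars per terabyte of capacity."""
    return price_usd / capacity_tb


# Figures quoted in the comment: one 6 TB hard drive at $124.99,
# or two 2 TB SATA SSDs at $64.97 each.
hdd_per_tb = cost_per_tb(124.99, 6)
ssd_per_tb = cost_per_tb(2 * 64.97, 4)

print(f"HDD: ${hdd_per_tb:.2f} per TB")
print(f"SSD: ${ssd_per_tb:.2f} per TB")
print(f"SSD costs {ssd_per_tb / hdd_per_tb:.2f}x as much per TB")
```

At these prices the hard drive is cheaper per terabyte, which is the commenter's point about bulk storage; the sketch says nothing about responsiveness.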

@Arlyst

>People like the person complaining basically want to halt progress because they feel their old systems should be working forever..

Well, the old system works, for all intents and purposes, occasional maintenance notwithstanding, forever.

My fiancee and I have a friend, an older doctor, who still uses an old Win95(!) machine to do all his personal office work. Why change what’s not broken and does the job for someone?

I sometimes use old hardware on purpose, to focus, to slow down, to write something and not being distracted. And i am always amazed how blazingly fast stuff just works. Yes, IO is slower on these machines, spinning rust at five MByte per second is slower than even a cheap SSD at 150, but with program sizes in the kilobytes and not in the hundred megabytes that benefit is eaten up fast.
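The trade-off described above can be roughed out as size divided by throughput. This is only a sketch: the two throughput figures come from the comment, while the program sizes (500 KB and 100 MB) are hypothetical stand-ins for "kilobytes" and "hundred megabytes", and seek latency is ignored entirely.

```python
def load_time_s(size_mb: float, throughput_mb_s: float) -> float:
    """Time to read a program sequentially, ignoring seek latency."""
    return size_mb / throughput_mb_s


# Hypothetical 500 KB program on an old 5 MB/s hard drive.
old_machine = load_time_s(0.5, 5)

# Hypothetical 100 MB program on a cheap 150 MB/s SSD.
new_machine = load_time_s(100, 150)

print(f"old: {old_machine:.2f} s, new: {new_machine:.2f} s")
```

Under these assumed sizes the 30x faster drive still ends up with the longer load, because the program grew by 200x.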

Then the whole world bowed down and changed their ways because you said so; software companies relentlessly worked to optimize already working code, foregoing the market demand for new features, because you deemed it more important than the revealed preferences of everyone else. What an arrogant comment, of course we want optimized software, but don’t chastise everyone else for dealing in the real world rather than your dream.

Well, speed is also a feature.

And in my experience, most software has not added any features the last 5-15 years.

There are exceptions, but those programs are also pretty fast so kind of a moot point.

And it’s fully possible to make fast software without “working relentlessly to optimize”, in fact it’s the same as for hardware, you just remove the biggest bottlenecks.

And if you remove a bottleneck in software, it runs faster for millions of people, compared to just you for hardware.

Thinking about it, the business case for spending some time optimizing software would probably pay for itself in a great way (for the world economy).

And it might just be justifiable for big companies also using their own software, such as Microsoft. A second saved is a penny not spent, multiplied by the amount of users, multiplied by uses per day, multiplied by 365 days a year.

That’s a lot of pennies.
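The multiplication above is easy to sketch. All inputs here are hypothetical (one second saved per use, one million users, ten uses a day); only the formula comes from the comment.

```python
SECONDS_PER_YEAR = 3600 * 24 * 365


def yearly_seconds_saved(seconds_per_use: float, users: int,
                         uses_per_day: int, days: int = 365) -> float:
    """Seconds saved per year across all users."""
    return seconds_per_use * users * uses_per_day * days


# Hypothetical inputs: 1 s saved, 1,000,000 users, 10 uses per day.
total_s = yearly_seconds_saved(1, 1_000_000, 10)
person_years = total_s / SECONDS_PER_YEAR

print(f"{total_s:,.0f} seconds per year, about {person_years:.0f} person-years")
```

Whether that time translates into pennies depends on what a user's second is worth, which the sketch deliberately leaves out.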

I also say so. There is no good reason why a newer version of an OS should be slower than the previous, it’s not as if Windows has become a 3D VR environment to need gaming hardware to run.

We have energystar ratings for consumer computer hardware.

Perhaps it’s about time we had the same type of rating for consumer software and operating systems?

What’s the point of having energy efficient hardware when the software squanders it all?

Peter, I can assure you, based on personal and current experience, MOST microsoft employees are

1. Wasting most of their time at work, Microsoft contracts all the real work out

and ALL are

2. Using linux for critical work

3. Using software slower than anything Microsoft provides customers

There is no benefit to microsoft to invest resources into this kind of thing for employees.

Further there is no business case for anything absent a monetization scheme; corporations, schools, governments, professionals etc. that actually buy licenses for microsoft office products are not going to switch away regardless, and anyone outside that bubble that can avoid wasting money on office by installing a free alternative, will. You may not call what microsoft adds “a feature”, and most of it is not anything users want, but someone in HR got their promotion getting their company to buy the new cloud feature despite everyone still using slack.

You can say, “hey, you are agreeing with me, this is all stupid and microsoft should fix it”, that is you trying to fight the tide, microsoft has no incentive to change and that is exactly the one thing that matters. Find a better strategy, buy used midrange hardware more regularly to keep up, get so used to reformatting your drive you keep a thumb drive on a necklace, use FOSS, or don’t and be forever confused that the world doesn’t work in a way you find rational.

You can also only optimize so much before you run into a wall. A HDD no matter what you do is going to be slow unless what you are loading uses a very small truncated file structure with data sizes optimized for an HDD.

The complexity of modern software ends up making file sizes, file counts, and file structure impossible to optimize well for an HDD, no matter how many hoops developers jump through.

People like the person complaining basically want to halt progress because they feel their old systems should be working forever..

Maybe we should optimize for tape drives while we are at it too… Then we got the bozo who thinks basic computer maintenance things that everyone should be doing is somehow a reason to switch to a different operating system, which is just laughable because that maintenance still needs to be done when it is things you need to do to the computer physically.

I’d wager money that said person rarely blows out their laptop fans and likely is running a system where they should have likely been replaced by now, and if they aren’t willing to check the battery and re-do thermal paste that computer is going to die spectacularly and it’ll be their own damn fault.

Heck they could take it somewhere to do this stuff for them too. Ultimately though people need to realize that things absolutely do change with time and things get more complex on the software and operating system front as we go forward. I mean we went from machines that were basically glorified typewriters that simply input data to be analyzed by huge room size computers that basically spit out results on paper to more and more complex systems, all of which have required leaving certain things in the past.

Also to the person saying you need gaming hardware to run a modern system this is wildly untrue. It just so happens that you need to run hardware for modern systems that drivers are actually made for and you can’t sit there acting like a hardware company should support something indefinitely when the hardware becomes obsolete.

You can still get a good performing device with built in graphics that is best for office work, but it just so happens that advances with hardware have brought those up to the low tier of gaming.

The point is that the increase in hardware requirements has nothing to do with more features and an improved user experience. It is from software development practices like abstraction and generic libraries which reduce the time and skill required by the coder at the expense of hardware efficiency.

OSes from the spinning rust era naturally got optimized by the developers for the awkward, complicated timing behaviour hard drives imposed. Often at the cost of resilience (W2K era systems were not exactly forgiving about repeated sudden power outages or forced reboots) and features.

You can get quite some performance from spinning hard drives by reordering requests over significant time spans, aggressive write back caching – advanced hardware raid controllers do these things, while they can achieve near-SSD performance with reasonably large arrays, woe betide you if you tweaked these screws up to eleven and neglected to invest in/maintain power failure protections (UPS and BBU/FBWC). Legacy OSes will have done similar things, on a slightly more conservative level.

(Note: Journaling File Systems will not save you, they will make the mess far worse!, once you are reordering writes in cache!)

These kinds of optimizations will just add unneeded complexity and failure potential in an SSD era.
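As a rough illustration of the request reordering mentioned above, the sketch below compares how far a drive head travels when a queue is served in arrival order versus in a single sweep (an elevator-style order). The track numbers and starting position are made up, and real controllers and operating systems are far more involved than this.

```python
def head_travel(start: int, requests: list[int]) -> int:
    """Total tracks crossed when serving requests in the given order."""
    position, total = start, 0
    for track in requests:
        total += abs(track - position)
        position = track
    return total


def elevator_order(start: int, requests: list[int]) -> list[int]:
    """Serve everything at or above the head going up, then the rest going down."""
    upward = sorted(t for t in requests if t >= start)
    downward = sorted((t for t in requests if t < start), reverse=True)
    return upward + downward


# Hypothetical queue of track numbers and starting head position.
queue = [98, 183, 37, 122, 14, 124, 65, 67]
start = 53

fifo_travel = head_travel(start, queue)
swept_travel = head_travel(start, elevator_order(start, queue))

print(f"arrival order: {fifo_travel} tracks, reordered: {swept_travel} tracks")
```

The reordered queue moves the head less than half as far, but writes now complete in a different order than they were issued, which is where the power-failure risk described above comes from.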

Journaling did in fact prevent these problems for most users most of the time. Large RAM caches and massively parallel writes exacerbate it, but both are features of modern Windows, not legacy systems.

For most users, the fact that older systems were designed to assume iops were limited results in much faster responses on modest or old hardware… For excruciatingly obvious reasons. Spinning rust is going to be around a long time, and designing an OS that is crippled at sub 100MB/s r/w and sub 100 iops/s is just bad.

@S.O. ext3 might have some resilience, OG NTFS or Reiser tended to fail REALLY bad with out of order journal writes.

Throughput is less important than one would think – an SSD at a reliable 50MB/s (eg iscsi target) will make W10/11 reasonably usable. Latency/IOPS, yes, that is what matters.

However, I had two bad Samsung EVO 2TB SSDs in a row. Each lasted only 7 months before S.M.A.R.T. warnings and Samsung replacement took 2 months. Eventually Amazon just refunded me.

Hi, reading some of your comments has made me laugh. It has nothing to do with anything HDD related. You can reinstall Windows as much as you like, but it’s the CPU that is the bottleneck. The only way to keep the computer running fast is to do some coding of the software built in to the CPU. OK, it might be hard for some of you, but just read the manual on the CPU. I have been coding CPUs for a long time and have had success in unlocking features that were not meant to be unlocked, with no problems. Hope this helps clear some of the bad air.

There’s only so much optimization you can do for hardware that can do a handful of random seeks per second.

And chances are the HDD is pretty old anyway, no reason not to replace this with a SATA SSD.

So let’s break some things down here. Software does not expect them to be standard, but almost all modern operating systems have some form of live scanning antivirus and a lot of indexing going on, which on a standard HDD takes up a massive amount of seek time and slows down the entire system.

You can see this shown by simply running the operating system off an SSD of any sort and then installing programs on the HDD and you won’t have as many problems or hell bring up task manager with nothing at all actually open and things sitting idle and you will see quite high disk usage if your HDD is your boot drive…

The whole thing here is largely related to how modern operating systems operate for security and indexing and the way a hard drive reads and writes. You also run into fragmentation on a HDD which can mean different parts of a program are scattered around and not in efficient places for the HDD to seek which also makes things take longer.

As you see there is a ton of things that simply cause an HDD to be slow that are outside of software they relate to security, indexing, how things fragment or get placed on the HDD and simply drives never being able to maintain their top speed at all times as a result of other factors. Even the highest end HDD (a lot of laptops use slower hdds)

Transfer speeds on an HDD typically used in older laptops are going to peak around 75-100 MB/s if you are lucky (that’s with it completely empty and fresh). Heck even age can slow them down quite a bit after a while. In the real world these speeds are going to be lower and then these other factors are going to come to light.

The operating systems are not bad performing. You’d see many of the same problems if you loaded a live scan antivirus software onto windows XP or did better indexing/superfetch on the OS.

You can’t keep technologies moving forward by clinging to dated technologies just because you think you have a good opinion when your opinion is in fact just whinging about advancements.

Software and operating systems got more complex, more secure, and have done a lot of things to try and ease user interactions with indexing and superfetch. Programs can have a lot of files that are large that a HDD needs to find or a lot more small files, both of which an HDD simply isn’t grand for, because to put it bluntly HDDs suck for anything that wasn’t from the Windows XP and very early Vista days.

Software complexity, file structure, file number, and file size alone preclude an HDD with an OS on it from operating anywhere near peak efficiency and even without an OS on there they aren’t going to perform well for those tasks simply because they have a limiting factor due to how they operate.

TLDR : HDDs are limited because of how they operate which causes slowdowns due to a multitude of factors many of which are not related to software, but the things that are software related are unavoidable if you want security and to have many of the modern things we have

On-Access Virus scans were probably worse in the W7 ecosystem….

If you buy a car based on the paint color and number of cup holders stop reading this comment. What slows down the OS is all the un-needed cr@pware and spyware/malware/usertracking built in and installed. Do you really want/need your computer constantly connecting to google/micro$oft/apple/amazon/facebook? Even when you don’t have a facebook account? Pretty and time wasting animations and sounds for opening and closing windows? Software updates for the sake of software updates is a great way to break things that are working and slow things down with useless and un-warranted overhead. Most software and OS “features” seem to lean more towards being malware and revenue generating for the perpetrators than benefiting end users.

Old SSD’s aren’t necessarily the best in the world, & in some cases a cheap HDD may be faster than a cheap SSD, especially if the SSD doesn’t have a Dram Cache.

I was with you on storage per dollar meaning that HDDs are still relevant for large storage needs, but they are absolutely terrible in comparison to SSDs for responsiveness.

Even a very budget SSD like Micro Center’s in-house Inland brand (they give them out for free with digital coupon fairly often) will beat a decent HDD in terms of latency. Often, SSDs are orders of magnitude faster in iops, which makes a huge difference in how people perceive the responsiveness of their system.

Having said that, a combination of solid state system drive and HDD (or perhaps multiple HDDs) can definitely make sense if you need a responsive system and best bang-for-your-buck storage in one machine.

Latency is what matters for an OS drive, and most any flash storage better than a bargain bin SD card or USB stick will beat even a 15kRPM SAS disk into the ground on that. A 100MByte/s SSD will make a huge difference with W10/W11 compared to a 100MByte/s HDD.
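A simple model shows why two drives with the same 100 MByte/s throughput can feel so different. The latency figures (10 ms per access for a hard drive, 0.1 ms for an SSD) and the workload (2000 files of 16 KB each) are assumptions chosen for illustration, not measurements.

```python
def read_time_s(n_files: int, file_kb: float,
                access_latency_ms: float, throughput_mb_s: float) -> float:
    """Time to read n_files small files scattered across the drive."""
    access = n_files * access_latency_ms / 1000          # waiting for each file
    transfer = n_files * file_kb / 1024 / throughput_mb_s  # moving the bytes
    return access + transfer


# Assumed workload: 2000 scattered 16 KB files, as during boot or app start.
# Both drives are given the same 100 MB/s throughput; only latency differs.
hdd_s = read_time_s(2000, 16, access_latency_ms=10.0, throughput_mb_s=100)
ssd_s = read_time_s(2000, 16, access_latency_ms=0.1, throughput_mb_s=100)

print(f"HDD: {hdd_s:.2f} s, SSD: {ssd_s:.2f} s")
```

With these assumptions nearly all of the hard drive's time is spent waiting on accesses rather than transferring data, which matches the point that latency and IOPS matter more than raw throughput for an OS drive.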

Absolutely. Even a cheap SSD in an old machine makes it feel like a new PC for users. An SSD is essentially a requirement for Win10/Win11. I always strongly recommended that anyone upgrading to Win10 also clone their install to an SSD.

First thing I would do is turn off that laptop, remove the HDD and image it. With the image safely stored elsewhere, I would then copy the image to a SSD and see if the elimination of random reads from the HDD solves the issue. Often I have found that modern OSs tend to not like older HDDs and are often written with the expectation that they will be installed on an SSD. HDDs are the most common bottleneck in situations like this. A cheap SSD can often make an older machine feel like new.

Second, and I think this is a good thing for every PC user to do regularly, is to wipe the machine and do a clean reinstall. Assuming it’s performing better afterwards, which I suspect it will, then you were probably dealing with OS bloat (pretty common with Windows). I make a point to wipe my c: drive and do a clean install on my primary machine every 6 months or so. It saves a lot of effort by not having to deal with most issues that crop up over time.

This thing where people ignore basic maintenance and other quality of life procedures when dealing with their personal machines is baffling to me. I have machines that are 15+ years old that run perfectly fine with some TLC and well managed expectations.

You can easily check Task Manager (if Windows) after a reboot and see HDD utilization to determine this. 100% of the time it’ll be just a suffering hard drive.

Jack – this is 2023.

We should not have to go out of our way to do basic remedial maintenance on computers.

My Huawei cell phone boots from absolute cold start in 30 seconds. It finds Wifi and 4G almost immediately.

Why are we left with this legacy of laptops and PCs that need human intervention and hardware upgrades to keep them running smoothly?

Someone writing OS for PCs needs a kick up the ass – a rude introduction to the 2020s

We are left with legacy hardware that needs upgrades and intervention because they no longer occupy the part of the hardware spectrum that developers are targeting. Seems pretty obvious to me.

30s to boot seems slow to me. My PC boots in about 20s, so I guess I win?

It sounds to me that the real problem you’re having is you don’t want to put in the effort it takes to maintain legacy hardware. What you really want is for legacy devs to cater to your specific needs and write OSs that work perfectly on old hardware. It’s not going to happen. Be prepared to do some work.

No, it’s not like this.

Of course an SSD is much faster and recommended, but before, HDD “were not so slow”: meaning the current configurations (software & hardware) render them almost unusable as OS drives. And this is mainly Windows, BIOS, drivers, and other low level software to blame.

Let’s get one thing right: in the 80’s / 90’s hardware was not enough, so programmers did incredible things; but unbelievable things with their software. It was super efficient & super conservative. Now, maaaany developers program like crap. Windows is a disaster, and you don’t need to see the code to know it. Software lacks design. If a decent analyst / programmer had a quick look at the DBs of one of these “marvelous” Content Management System (for example Drupal), he/she would want to cry.

Before, one man was making a full program or game. Now you have 1000 hired workers to do it, as they build one on top of another. This software, even if carefully developed, will be bloated with functionality that may never be used. Microsoft recently removed features from File Explorer in Win 11 that you can restore through the registry: meaning not only did they not really remove those features, but they also added more bloat by letting you choose whether to see them or not. Pointless, brainless.

Because phones are by design swapped after at most 4 years and if not developers actively try to kill them. Laptops and PCs can easily work 15+ years

#facts…I like to read before I comment…you’d b surprise how many lamen are here misleading the hell outta of ppl with nonsense….I blame Apple for ppl comparing their phone to a PC…because they have reduced consumers to ninnies that overpay for the same device…. Iphone, ipad, ipad pro etc…(Ipad was decisively given ipadOs to seem different) n they would never give the mac’s touchscreen because u would see the sham. (Their PC/MacBooks weakest link in their company for this reason and not paid attention to). Point is Windows is fragmented because hardware is not standardized n constantly changing…yet they are innovating…there will b bugs n bloat…they are reacting and anticipating…while trying to lead. Linux is reactive and MacOs is uniform …you’re comparing… homeschooled, parochial n public school students 🤷🏾♂️

Jay Gatsby – it’s “laymen” firstly.

Secondly, if you think no one is paying attention to MacBook offerings, you must have been asleep for the last few years. The M-series chips make MacBooks serious performers and anyone who wants powerful hardware coupled to a reliable OS is taking a serious look at MacBooks.

We are a Windows house and we still bought a family member a MacBook last holiday season because it was the most performant option available at the time. MacBooks are seriously capable with the newer M chips, but especially for content creation vs the previous Apple offerings.

Your phone uses solid state storage and boots a much lighter weight/less capable OS that is modified and compiled for that specific hardware configuration. It doesn’t have to be flexible to different CPUs, GPUs, audio/IO/etc chipsets, storage controllers, etc, and it doesn’t have to retain backwards compatibility for 30 years of apps before it.

Your laptop likely has a failing HDD that’s causing the majority of its issues. If it could run 7 then it can almost certainly manage 10, and a memory upgrade will handle the extra “weight” of modern apps.

But you’re comparing a device made in 2023 to an old device, that’s what Jack is saying

Your (admittedly justifiable) advice to periodically reboot a computer you tend to leave on, or to wipe and reinstall your computer’s OS to restore performance, is an outcome of choosing Windows as your OS. Amazingly, Microsoft has successfully convinced its user base that this kind of crummy behavior is to be expected. Well, it’s not.

I have used Debian-derived Linux distributions for 20+ years and never have problems like this. I have systems that have run 24/7 for a year or two at a time, with no need to reboot or rebuild.

Windows will happily run without needing a reinstall and will basically run forever unless it needs to install updates so there’s very little difference.

I have a friend who refused to run updates and never turned off his Win7 installation – he had uptimes well beyond a year at least 3x.

Windows Server has no problem with long uptimes either, at which point usually a system update will cause the uptime count to be interrupted.

So while people like to harp on this about Windows, it’s largely untrue except for the fact that Windows tends to favor reboots after updates more than some Linux distros might.

I run both *nix and Windows as desktops and servers and I rarely run into show-stopper bugs in either.

It really boggles my mind how many “IT Pros” cannot handle basic maintenance to whatever OS they claim to be an expert in.

I have a Windows 3.11 install on an Am386DX-40 that hasn’t been reinstalled since… oh.. 1994? It survived my childhood, my grandad abusing it, etc. I just finished upgrading the memory and replacing the RTC in the system and plan on more mods. But guess what? Same OS install. A heavily modified Windows 3.11 with CalmiraXP running, DOS upgraded to 6.22, win32s etc etc.

I have a Windows XP install that I had upgraded to from Windows 98se (along with a fat32 to ntfs conversion) that has been migrated across probably 10 different machines including between AMD and Intel chipsets. Still runs great.

I have migrated Debian (my usual Linux choice) across hardware many times and have Debian server installs that are well over 15 years old that have been also upgraded from whatever original install base to whatever was/is current. And this includes switching from 32bit to 64bit.

Same with macOS (OSX). Ancient installs that have been heavily abused and upgraded a ton.

/occasionally/ I have run into bad enough corruption issues to warrant just going ahead and reinstalling or reimaging just to save time (across Linux/BeOS/macOS/Windows/etc). But this idea that you should just randomly wipe and reload every couple years is absurd.

It really shows a lack of knowledge, in both breadth and depth, that people have WRT their operating systems.

I will admit to the backup/imaging > wiping and reinstalling > reloading/restoring method for /clients/ who were in a time crunch. But.. it shouldn’t be the go to.

I think this mindset has also poisoned the knowledge well for a lot of IT people and the moment you are operating outside a narrow view of “the documentation says X should happen but G is happening!” They fall apart and just nuke it. I see it a lot and it drives me insane. Especially when the fix is relatively quick and simple often.

/rant

Helf – could not agree more with you and FWIW, I appreciated the stroll down memory lane.

Nuke and reinstall has its place, but I feel that it should be reserved for time crunch situations, spots where it’s the cheaper option for the client, or times when you have a nasty malware attack and need to be relatively sure you can trust the system again.

Moving 3.x installs and 98/XP installs is honestly pretty damned impressive. Older Windows OSes were generally more resistant to migrating to new hardware in my limited experience (with the case of 3.x anyway, I was pretty young) and I had honestly forgotten about the FAT32 to NTFS conversions, but you’re absolutely right. Did many of those back in the day!

It’s honestly impressive how little modern Windows cares what hardware you are running it on and how quickly it can go and grab (mostly) appropriate hardware drivers without user intervention.

Definitely progress in that area.

But yes, I greatly dislike when people feel the need to rag on Windows in order to make their OS of choice sound better. Each OS has its own particular merits and may be better suited for the person or their specific situation, but that doesn’t mean we need to crap on all the other OSes that exist. For the most part, modern operating systems are extremely reliable and can run for a long time uninterrupted if you do a reasonable amount of caretaking and don’t do anything incredibly stupid.

And if nothing else, reloading the OS should not be a regular or “maintenance” event.

Caveat when imaging a drive wholesale: Unlike legacy (MBR) style partition tables, GPT technically needs some fixing (backup partition table will be in the wrong spot. Can be fixed with the gdisk utility on linux) after copying to a larger medium.
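To put a number on that caveat: GPT keeps its backup header in the last logical block of the disk, so a byte-for-byte copy onto a larger disk leaves the backup header sitting where the old disk used to end. A minimal Python sketch of the arithmetic, assuming 512-byte logical sectors and two made-up disk sizes (the function names are hypothetical, not part of gdisk):

```python
SECTOR_SIZE = 512  # assumption: 512-byte logical sectors


def backup_header_lba(disk_bytes, sector_size=SECTOR_SIZE):
    """GPT expects the backup header in the last logical block of the disk."""
    return disk_bytes // sector_size - 1


def backup_header_misplaced(old_disk_bytes, new_disk_bytes, sector_size=SECTOR_SIZE):
    """True if an image taken from the old disk leaves the backup header
    somewhere other than the last block of the new disk."""
    return (backup_header_lba(old_disk_bytes, sector_size)
            != backup_header_lba(new_disk_bytes, sector_size))


old_disk = 500 * 10**9    # hypothetical 500 GB source disk
new_disk = 1000 * 10**9   # hypothetical 1 TB target disk

print("backup header copied to LBA:", backup_header_lba(old_disk))
print("backup header expected at LBA:", backup_header_lba(new_disk))
print("needs relocating:", backup_header_misplaced(old_disk, new_disk))
```

Relocating the backup structures to the true end of the disk is the repair the gdisk mention above refers to. Copying to a disk of identical size needs no fix.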

Not sure why I can’t seem to comment, only reply. Anyway, my example is that I rely on Word 2003 for production purposes, because later versions of Office are slow as mud even with things like recognizing a right click. I create and edit massive amounts of text on a daily basis, so every 5 milliseconds makes a lot of difference to me. Should Word 2003 not run anymore on a future Windows version, I hope to be able to run it using WINE. Heck, WINE might even run on WSL by then.

Games I play run faster on wine than windows natively, same build and setup.

I’ve noticed the same on my Threadripper QEMU/KVM passthrough setup. While it’s “native”, it’s still under a hypervisor, and is markedly faster than running Windows on bare metal. I chalk it up to better memory/NUMA management, but it’s still surprising.

Same with my steam/proton chroot. Windows sucks.

I wouldn’t give Microsoft a pass for this. But a lot of software is very large. So large that no single developer understands the entire codebase. Microsoft in particular maintains so much backwards compatibility that software written for Windows 30 years ago will run on Windows today without a recompile.

This means more and more on top of the old. It happens with Linux too, but in that space things get replaced in incompatible ways more often.

Not to mention that 16-256 colors in 800×600 is a lot less overhead than true color with transparent compositing at 4k+ … And that’s just rendering. It doesn’t include security isolation, monitoring, memory clearing, etc that modern systems do for security.
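As a rough back-of-the-envelope check on the rendering point, here is a small Python sketch comparing the size of a single framebuffer then and now. It assumes 8 bits per pixel for the 256-color case, 32 bits per pixel (true color plus alpha) for the modern case, and 3840×2160 for "4k"; a real compositor keeps extra per-window buffers on top of this, so treat it as a lower bound.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed to hold one full frame at the given depth."""
    return width * height * bits_per_pixel // 8


old_frame = framebuffer_bytes(800, 600, 8)       # 256 colors = 8 bits per pixel
new_frame = framebuffer_bytes(3840, 2160, 32)    # assumed 4K, true color + alpha

print(f"800x600 @ 8 bpp:    {old_frame:>12,} bytes")
print(f"3840x2160 @ 32 bpp: {new_frame:>12,} bytes")
print(f"ratio: {new_frame / old_frame:.2f}x per frame")
```

That is roughly 69 times more data to touch for every full-screen redraw, before any transparency blending is done.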

Windows 9x was a giant security hole where any process could access anything everywhere.

Hell, it gets so complex that rust was created as a new approach just to avoid whole classes of bugs without sacrificing correctness and safety by default. Similar for Go and other patterns since then. Some more or less effective than others.

We now simulate or create entire isolated environments for safety. It’s a huge deal. This is where most of the performance has gone. A lot of abstractions, sure, but just as much is for improved security.

I don’t have direct experience but instead of WINE, have you considered using “Bottles”? It’s supposed to be able to run more Windows apps than WINE… or so I’ve read.

Uhh.. You really just need to reinstall your OS on that thing. Under no circumstances is any of that acceptable. It has nothing to do with bloat and everything to do with the state of the install. Seriously…

Even that likely isn’t necessary. Removing unused services and applications and old security software, enabling Defender, etc. would probably do. A proper defrag too. Though the HDD itself could be dying.

An SSD and 8+GB RAM is really necessary for most modern OS options to run well.

1 hr boot sounds like a memory stick is going bad. That happened to my Win10 box. Try running memtest86 for a few cycles.

Rebooting a computer now and then cleans some garbage from memory. Cleaners like EasyCleaner and CCleaner give a huge boost to Windows. The registry database of Windows is the main reason for slowdown.

Another thing is that most Win programs are built with libraries compiled to executable and not common to many programs. This eats RAM. One problem here is the thousands of programming languages used, which can not share libraries.

Some programs, like Firefox and Chrome load pages in memory and don’t rely on the links.

My own Firefox eats sometimes over 1GB, when I have a large project open.

All these time-wasting housekeeping manoeuvres (including Windows defrag) to keep a Windows system going are one of the main reasons Linux is superior. No such baloney to ever have to do. No anti-virus or spyware scanning either.

The Windows registry is a complete nightmare and always has been. When the foundations of an OS are poorly designed, you can put lipstick on this pig and seemingly make it workable but it’s still a darn pig.

Reading through many of the comments here, I’m struck by how unfounded and technically untrue many of the statements are against Linux. For one thing, there isn’t such a thing as “Linux” in the same sense as when we say “Windows”. (Actually, a better term is “Windoze”.) Linux comes in many different distributions. An Ubuntu-based Linux OS isn’t the same as a Debian one or something based on a different desktop environment.

No one in their right mind would install the latest Ubuntu distro onto a 15-year old computer with only 2GB or less of RAM. Instead, Linux’s diversity of distros is its strength. You pick the one that’s suitable for the hardware specs you have. Any modern computer is fully capable of handling any Linux distro that’s available today. But older hardware requires informed selection choices. No one expects you to instantly know what will work and which ones won’t. The OS is free so just try it out and see how it goes.

Testing Linux out is just a matter of getting a USB stick and using an app like “Rufus” to make the .ISO file bootable. There is no such thing as a “live” Windows version (yeah, yeah, it can be done but it’s a painful ordeal and not commonly done). But with Linux, I used an app called “Ventoy” and the only thing I need to do is install it properly on a USB stick and then just dump (i.e. copy) any Linux .ISO I want onto the stick. “Ventoy” makes ’em all bootable without having to go into any extra steps.

Now concerning the comment about it being so difficult to install apps on Linux, I will call “total bullshit” on that statement. Linux has a myriad of ways to install software. You can get something called an “App Image” which is very much like a Windows .exe file. The app isn’t truly installed into Linux. It just sits there and you can launch it any time you want.

Then there’s the “normal” software repository method. To a Windows user, it’s similar to (but better than) the Micro$oft app store. You also have Snap files and FlatHub. Depending on the distribution you install, these may all be available with the OS or something that’s very easy to install yourself. What is terrible about the way Windows handles software is that you can grab apps all willy-nilly over the web, never totally sure if they contain malware, and updates have to be done on an ad-hoc basis, typically being notified of a newer version and interrupting your workflow in order to get the update completed. Totally stupid method. By contrast, Linux’s software manager takes care of ALL apps under one roof and gets them done all at one time, in the background, without ever affecting your workflow.

In all of my 20+ years using Linux, I’ve NEVER run into the same serious hassles that I had with all versions of Windows. I am also a 40+ year computer tech and have seen numerous OS’ on a variety of hardware platforms and Micro$oft wins the lottery for the most headaches acquired per day.

Linux may not be for everyone. I get that. If you are a gamer, there are ways to get Linux to play many popular games. But, it may not be as easy or streamlined as in Windows. (I’m not a gamer and never will be, so I will admit I have a limited degree of knowledge on that subject.) Suffice to say, that when you have a massive money-hungry company like Micro$oft dominating the market, it’s only natural that every other software and hardware company out there will bend their will towards M$. But don’t try to compare apples with oranges. Or Apple with Micro$oft. These are two different things.

Ultimately, any time someone scoffs at how terrible Windows is and suggests using Linux, some Windows lover will lambast that Linux user for promoting Linux and demean them with the “fanboy” tag. To that, all I can say is “grow up”. We Linux aficionados realize that there are no TV commercials or magazine ads promoting Linux to common, brainwashed Windows users. Therefore, our only means of getting the word out is to suggest (not demand) that they give Linux a fair shake.

I’ve got a 25 year old IBM Thinkpad running Puppy Linux. Its Win3.1 counterpart is completely incapable of surfing the web. I’ve also got a 15 year old HP Presario chirping along just fine using Linux Lite and it is my main machine. I am typing this reply on my wife’s 6 or 7 year old Acer Cloudbook using an old version of Peppermint Linux (ver 7). And my new Lenovo laptop runs the latest Linux Mint. I totally got rid of Win 11 before even installing that piece of crap. I couldn’t be happier, thank you.

So for those people with slow-as-molasses Windows machines, and all the usual spyware issues, keep doin’ what you’re doin’. I couldn’t care less. But if you want a real smooth user experience, Linux is truly the only way to go… or buy an expensive Apple product that’s locked into its own eco-system. Anything is better than Windows IMO.

It’s almost as if modern software is designed for modern hardware 🤡

Hi, I have an elderly Lenovo laptop (a thinkpad from 2007, making it the first with the Lenovo badge.) I swapped the hard disc for an SSD, found an 8gb kit of DDR2 memory, and installed Windows 10.

Now I wouldn’t say it’s the fastest machine on the planet… but it handles modern software, including Office 365, just fine.

I would suggest your issues are memory and storage related.

Conspiracy theorist here. At least some of this seems to be by design or at least conflicting motives between the software company and user. We all have lightning fast freeware that does exactly what you want (like XnView or Everything), yet it seems utility for enterprise solutions would conflict with the need to sell or constantly upgrade with subscription pricing. While developers often cite that they want more security, I think this is an excuse. Truly secure systems could be built into the hardware at lightning fast speeds. Software security seems mostly for the purpose of hiding code from competitors and manipulating the user into certain behaviors that are profitable for the company but disastrous for user productivity. Solutions to this rest more with regulators and a Congress “representing consumer/public interest” than developers. In fact smarter developers make things worse if they aren’t fighting for the right team…which is why software gets worse every year for people who want to use their computers to maximum efficiency. There is too much phoning home to Redmond and perhaps the NSA (NSAKEY) which violates privacy and the 4th Amendment, as well as being a form of fraud where you buy a computer and software…yet it is normalized that it can be changed and altered at the whim of the company who supposedly sold it to you and CPU cycles are being used also by that company without your permission. If we hacked into Bill Gates’ servers and used the cycles to mine bitcoin, we would be in jail, but the reverse criminal activity of constantly checking certificates, cloud computing, etc has been normalized. We need better laws.

What model is that laptop? Also, as mentioned, get an SSD instead, turn off Windows Defender, system restore and all the other security trash in Windows and you’ll notice a big difference.

google is the new microsoft

and microsoft is the new ibm. lol

“Don’t be Evil”.

They abandoned that a decade ago.

Longer than that.

In general, I find that Linux boots faster than Windows. I have no idea why (and choose not to dig into the topic). My laptops of choice are ex-corporate refurbs, ideally ones I have experience with from work (so I know they work well with Windows). I’ll buy them 3-5 years after they come out, replace the HDD (if so fitted) with an SSD and max out the RAM. “Zippy” is what I’d call them after I install Linux (MINT is my current preference).

My experience, yours may vary, but I strongly advise staying away from consumer laptops and going with refurbed corporate ones.

There’s a few reasons, one is that open source development is often as much hobby as job so developers are more likely to dig into the nuts and bolts to optimise things that interest them rather than just meeting a target and delivering, another big one related to this is that most Linux based operating systems support much older hardware for a variety of reasons than Windows does (eg to run a system for playing around with on otherwise defunct hardware). Having deleted anything but the most modern hardware (relatively speaking) from the support list Microsoft for instance is free to leave Windows 11 relatively unoptimised. You can also see this effect when comparing Windows to MacOS – on similar hardware MacOS flies compared to Windows because Apple cares a lot more about optimisation (and yes, optimisation is easier when you’re literally only supporting one CPU and GPU, but Microsoft could still limit themselves to effectively 2 x86 architectures and optimise a lot more than they choose to).

I think your first statement’s mostly it: I don’t think it’s the OS specifically, it’s just the applications themselves. There are commercial applications that have both Windows and Linux ports and the Linux port is so poorly written it’s an utter dog in comparison.

And a ton of applications I use on Linux are *incredibly* old, whereas Windows basic apps seem to be rewritten constantly.

That’s because those apps are “ported” with very thick “compatibility” layers. A properly designed app will have internal APIs to isolate different elements. It’s then easy to support different UIs, say, by implementing that API using native Windows, MacOS, Gtk, etc. primitives. The downside is that you have to give serious thought to what the API really needs in terms of the UI instead of creating a thin facade for one platform and then require thick adapters when supporting platforms with different concepts. (E.g., that’s why docker on macs is actually run in a Linux VM running under MacOS – the platforms are very similar but docker requires something MacOS lacks. “Capacities”, iirc.)

With those crappy ports you’ll usually (always?) find that they were written for windows and then a very clunky adapter was added to convert the calls to Gtk. This means that some individual windows calls will be converted to multiple Gtk calls, and the system won’t be able to replace multiple Windows calls with a single Gtk call that does the same thing.
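A toy Python sketch of that difference, for illustration only. Every class and method name here is hypothetical and stands in for no real toolkit: `TargetToolkit` plays the native library, `ThickAdapter` translates foreign calls one at a time, and `NativeBackend` implements the app’s own internal API directly. The toolkit counts how many calls reach it.

```python
class TargetToolkit:
    """Hypothetical native toolkit; counts every call made into it."""

    def __init__(self):
        self.calls = 0

    def create_widget(self):
        self.calls += 1
        return {}

    def set_property(self, widget, key, value):
        self.calls += 1
        widget[key] = value

    def create_styled_label(self, text, color):
        # One native call that does the whole job.
        self.calls += 1
        return {"text": text, "color": color}


class ThickAdapter:
    """Emulates a foreign, call-by-call API on top of TargetToolkit."""

    def __init__(self, toolkit):
        self.toolkit = toolkit

    def create_label(self, text):
        # One foreign call becomes two native calls.
        widget = self.toolkit.create_widget()
        self.toolkit.set_property(widget, "text", text)
        return widget

    def set_label_color(self, widget, color):
        # Translated in isolation; it cannot be fused with create_label.
        self.toolkit.set_property(widget, "color", color)


class NativeBackend:
    """Implements the app's internal API directly with native primitives."""

    def __init__(self, toolkit):
        self.toolkit = toolkit

    def styled_label(self, text, color):
        return self.toolkit.create_styled_label(text, color)


def port_via_adapter():
    toolkit = TargetToolkit()
    adapter = ThickAdapter(toolkit)
    label = adapter.create_label("Save")
    adapter.set_label_color(label, "red")
    return label, toolkit.calls


def port_via_internal_api():
    toolkit = TargetToolkit()
    label = NativeBackend(toolkit).styled_label("Save", "red")
    return label, toolkit.calls


print(port_via_adapter())
print(port_via_internal_api())
```

Both paths end up with the same label, but the adapter path makes three toolkit calls where the internal-API path makes one. Multiply that by every widget and every redraw and the port feels sluggish.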

“That’s because those apps are “ported” with very thick “compatibility” layers.”

…which is exactly what the article is saying is slowing down software.

In the specific case of what I’m talking about, God only knows what’s slowing it down because the “slow” part is command line.

Apple also isn’t living with 30+ years of backward compatibility. They’ve also had their own security issues.

I use Linux as my daily driver personally and currently an M2 Max for work. Windows has a ton of cruft to be sure. It’ll also run an exe from 30+ years ago without a recompile. There’s something to be said for that.

Linux and Mac will also run a Windows app from 30 years ago, it’s called QEMU and DosBox, UTM etc use it or Wine/Proton does a good job too these days and it’s more secure to boot than allowing an app built without any ideas of multi-user and Internet connectivity to run as admin/system.

Windows just has a lot of feature creep. The project is too large and has been mismanaged for decades, .NET is one of those debacles where you just have a layer on top of layers of old cruft and Microsoft has been forcing their devs to use it for a while now resulting in the bloated Windows 8/10/11.

If you get a new language/paradigm, like Cocoa, Swift or ObjectiveC did, you don’t need to incorporate the old mistakes. The fact current .NET can call old and broken DLLs, OLE, WinForms and do crap like dependency (aka memory) injection etc is a problem, not a feature.

“What Intel giveth, Microsoft taketh away.”

The average age of working PCs in our house is around 9 years. All run Linux, mainly Mint, with a cheap SSD for the OS, the original HDD for data, and max 8 GB RAM. Don’t need more.

I’m typing on a 2013 model Chromebook Pixel with Mint OS. No more new consumer grade cheapo PCs for me, learned my lesson.

For mobile computing, I have a “laptop pool” of full-disk-encrypted laptops with Ubuntu GNU/Linux on them. They are ex-corporate X-series thinkpads and I max out the ram on them generally. Their performance is fine despite them being at least 10 years old.

Everything in our house is 3rd and 4th Gen OptiPlexes and Latitudes running Win10 with SATA SSDs and 8GB RAM…. they are plenty zippy unless you try to play a 4k movie or do something similar that requires software decoding because the CPU and GPU don’t have dedicated decode for it.

Windows isn’t the problem, people being stupid with their computers is generally the larger issue.

linux is tiny and depending on the distro, doesn’t install a bunch of crap you don’t need. my windows install is like 33.7 gigs in the windows folder alone. a modern linux distro isnt even half that.

Just looked it up, and apparently a fresh Debian install requires ~1 GB of storage space on disk.

As a programmer, … er, I mean software developer, I can agree with these conclusions and to some extent justify them.

On one hand laziness. In the “old days”, speed was always a concern and we took great pains to make software run fast on those mc68020 powered sun3 workstations. These days I often do things that would have been stupid, even reckless in the old days. Read an entire 100M file into memory and scan it multiple times — no problem and it only takes a second or two. Unthinkable in the old days.
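For anyone who hasn’t lived both styles, here is a small Python sketch of the contrast. The first function does the “reckless” thing: slurp the whole file into RAM and rescan it once per search term. The second is the frugal version that makes one pass and holds only a line at a time. The function names, the sample data, and the idea of counting byte patterns are all illustrative assumptions, not anyone’s real workload, and the demo uses a small temporary file rather than a 100M one.

```python
import os
import tempfile


def count_in_memory(path, needles):
    """Read the entire file into RAM, then do one full scan per needle."""
    with open(path, "rb") as f:
        data = f.read()
    return {needle: data.count(needle) for needle in needles}


def count_streaming(path, needles):
    """Single pass, one line in memory at a time.
    Assumes no needle contains a newline."""
    counts = {needle: 0 for needle in needles}
    with open(path, "rb") as f:
        for line in f:
            for needle in needles:
                counts[needle] += line.count(needle)
    return counts


def demo():
    """Run both approaches on a small throwaway file and return both results."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"error: disk\nok\nerror: net\nwarn\n" * 1000)
    try:
        needles = [b"error", b"warn"]
        return count_in_memory(tmp.name, needles), count_streaming(tmp.name, needles)
    finally:
        os.remove(tmp.name)


print(demo())
```

Both return the same counts. The in-memory version is shorter and perfectly fine on a modern machine, which is exactly why the habit sticks.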

On the other hand, consider time spent making a piece of software run in less than a second when a quick approach will yield something that works but runs in 4 seconds. Who cares? The extra effort truly isn’t worth it. But this leads to a mindset that emphasizes getting things done quickly rather than spending time to write software that is optimized for efficiency. And here we are.

You can guess where the corporate mindset puts its priority. Getting it “done” quickly comes first, not having nasty bugs draws second place, and making it run fast isn’t even on the map.

I remember the really old days, when I built an in-application editor that could handle files greater than 64k… what a hassle.

Then, in the not so old days, my windows program was some resources and data that I moved into standard GUI objects. GUI design was driven by what was there and what that stuff could do. Make fancy looking stuff? Please no, it is all a hellscape of an individual draw routines nightmare.

Today I craft a class with INotifyPropertyChanged, have some xaml with xml namespaces and wonderful looking things happen everywhere, regardless of windows, linux, android or macos.

I like the new times more.

Modern chat apps use a gig of ram per tab/account (*cough*slack*cough*). Let’s not pretend “we’re not writing assembly anymore” is the only alternative.

yeah a funny thing about the last 20 years is that the browser is the heaviest program, by far. if a laptop gets too slow, or too unsupported, it’s always been because of the browser. but even within that world, slack held a special place. my battery life went down by about 30% just by leaving a slack tab open. and it didn’t do anything that ircII didn’t do for me in 1995.

A program that runs in 4 seconds instead of 1 second is OK if it’s only run occasionally. When it’s the target of a half dozen nested loops, come back in an hour.

Well, I expected someone to say something like this and it is certainly true. That is why I said “program”. Even today if I am writing code in a library that may have unanticipated uses I will use more care than when writing a throwaway python script. The key point is the mindset that develops as you get used to it not mattering if you do quick and dirty coding.

Issue is that you’re not writing the program but merely a single function for it. The runtime of your function is 4s, which is easy to disregard.

But there is so much stuff running on top and below, before and after, and each thing is it’s own function that takes 4s to run instead of 1 and suddenly the user is sitting there waiting for multiple minutes for a seemingly simple process to finish.
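The arithmetic behind this is simple enough to sketch in Python. The step count and loop sizes below are made-up illustrations, not measurements of any real system: `sequential_wait` covers the case of many layers each taking their turn, and `nested_calls` covers the earlier point about a slow function ending up inside nested loops.

```python
def sequential_wait(seconds_per_step, steps):
    """Total wait when every step in a chain runs one after another."""
    return seconds_per_step * steps


def nested_calls(iterations_per_loop, depth):
    """How many times the innermost function runs inside nested loops."""
    return iterations_per_loop ** depth


STEPS = 30  # hypothetical number of layers between click and result

print("fast steps:", sequential_wait(1, STEPS), "seconds")
print("slow steps:", sequential_wait(4, STEPS), "seconds")

# Hypothetical: six nested loops of only four iterations each.
calls = nested_calls(4, 6)
print("innermost calls:", calls)
print("hours at 4 s per call:", round(calls * 4 / 3600, 1))
```

Thirty steps at 4 seconds each is two minutes of waiting instead of thirty seconds, and six modest nested loops turn a 4-second function into hours.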

And the other factor is overabundance of resources. No software engineer is coding or running tests on a generic computer with barely enough RAM to open a few tabs in chrome. It’s always either a high-end computer or even an entire farm of top-of-the-line servers. No one gives a damn about how their product is going to perform out in the field.

With modern computers, even if you optimize, you have to pick what you want to optimize for: speed or resource usage. I was messing around the other day and an app manipulating some images took 4-5 minutes using a couple hundred MB of RAM. I multi-threaded it for some speed and it now took 20 seconds, but used over a GB of RAM.

Yes in today’s world always push off the cost (effort) on to someone else (user).

In other words, you gave up upon your ideals? What would your younger self think about you, if he saw how you ended up?

Exactly… Getting it running correctly is much more important. And depending on what it is you may have to scale horizontally anyways. If it takes 2 years to release something that runs on half of one server or 4 months to get something that does the job but takes 3 servers, sooner is better, and the extra servers are cheaper than the dev cost.

There has been a continuous trend since the advent of computing to make coding faster at the expense of needing more machine time. First came assemblers, then compilers, then API layers, then virtual processors (like the JVM or .NET runtime) etc. The thinking is that machine time has become progressively cheaper while developer time is progressively more expensive.

While old code may indeed be vastly more efficient at what it does, it is also much less functional and/or portable than the modern equivalent. Meanwhile the point at which hardware becomes unusable due to age has stretched further back into time due to the capability of even 8 to 10 year old systems these days. Upgrade them with an SSD and most are still quite usable. It seems to be a fair trade-off.

Abstraction makes processor transitions easier, e.g. Apple.

Yes and no; I agree with nearly all of your statements. It does not make sense to develop highly complex systems in assembler; higher-level languages have their place.

Only “It seems to be a fair trade-off” sits a bit badly with me. Every bit of energy that isn’t used is good energy. We are burning tons of megawatts around the world to display GUI frames rendered with poor efficiency.

That’s why I liked the Gentoo thinking: just compile as efficiently as possible for your computer.

And yes, I work at a company developing in Java. I am really unsure whether the IML is a bad thing or a good thing. But either way it should be the last layer.

Explain Javascript on the server then Sweeney…

It’s terrible at both development and runtime performance.

On the one hand it sucks huge glistening slimy wet donkey balls for debugging. Worse than COBOL in 1965 using punched cards and printed memory dumps.

On the other hand it crawls like a 1 MHz 6502.

On the gripping hand it’s a dependency nightmare with libraries referenced by URL. Said libraries can and do use specific, hardcoded, obsolete versions of other libraries.

The only thing I can say about it is: Javascript programmers deserve it, no lube.

This. Slow languages, dependencies. Modern development isn’t as efficient as they say.

It’s an attempt to solve a portability issue. Not a good attempt, I’ll admit, but for an issue that genuinely exists. How do you run code from within any web browser was the question, and having gotten a hammer, Javascript developers tried to make everything into a nail.

There are plenty of examples in the past of languages that performed poorly but were popular (heck, even the 8 bit era was ruled by interpreted BASIC that likewise sucked compared to assembler). These languages either adapted or were swept away. Javascript hung on because there wasn’t a cross platform alternative, that was until the advent of WASM. How long it continues in the face of that is an interesting question.

heh there is some of this and there is some of not-this, as well.

“While old code may indeed be vastly more efficient at what it does, it is also much less functional and/or portable than the modern equivalent” there is a lot of awful code today that is not only slow but also unfunctional and dysfunctional and unportable and dysportable.

there are many penalties for overuse of recycled code with underuse of comprehension. poor performance is not the only penalty.

in fact, one of the starkest penalties is unmaintainability. often, it costs programmers more time to work with poorly-performing code because of the factoring decisions that were made. of course, we solve this problem by simply not spending the time. so in the end maybe we still saved programmer time but at the expense of having the program be buggy and unfeatured forever.

i’m pretty much describing every android app.

Thank our “friends” at microsoft…. 40+ years of absolutely no planning for anything but profit. Bill got rich, but the world got a big steaming pile of “bill” for their hard earned cash. Sad that monumental failures such as his can’t be added up and the bill given to the cause of it all.

Ha ha, made me laugh – spot on!

Microsoft is a big company; not everything they do is bad. Take .NET Core, for example: it runs on all major operating systems and hardware, and the evolution of C# has made it one of the most powerful programming languages around _and_ influenced another great language, TypeScript. VSCode also comes to mind.

I’m not an MSFT purist. I run macOS, Windows, and Linux (Xubuntu, Alpine) daily. Each wins and loses on different metrics.

MS gave up on VB6 and its community. That betrayal should never be forgotten or forgiven.

Instead, VB.Net was introduced. A completely different language, without all the advantages that programmers loved about VB Classic.

The only reason there were so many VB6 programmers is because most of them wouldn’t be programmers otherwise. VB6 was a steaming pile of dung (“on error resume next”).

So that’s where all the Javascript ‘programmers’ came from?

Don’t believe you. They’re worse now.

The fact is that no ‘programmer’ only uses one language. That’s the tell. Doesn’t matter what the language is, if it’s the answer to everything, the answerer is a fool.

No, I think that’s not the case. VB Classic was reasonable to understand by mentally healthy people who’re not one trick ponies. That’s why VB Script and VBA were popular, too.

The problem is, I think, that guys like you who use street language aren’t capable of anything meaningful in life besides coding abstruse C/C++ code. You can’t even change. People who used VB Classic or Delphi were still capable of normal social interactions.

I was a VB6 developer, and was happy to see it killed off. VB.NET fixed many of the things I hated about VB6. The only thing that VB6 had going for it was familiarity, and that’s something that a developer has to deal with constantly. Things always change, keep up with the changes or move over to management.

“I was a VB6 developer [..]”

Who wasn’t at some point?

“The only thing that VB6 had going for it was familiarity, and that’s something that a developer has to deal with constantly.”

No, it wasn’t the only thing. VB Classic was the true successor to the classic BASIC language. It had natural language elements and allowed for both procedural and object-oriented programming.

I say “oriented” because it wasn’t object “based”. That’s a questionable paradigm that’s been forced onto programmers; it’s not optional anymore.

VB Classic appealed to all the programmers who previously loved to work with home computer BASIC, GW-BASIC and QBASIC.

The Visual Studio 6 IDE also allowed for easy prototyping. You, as a developer, could create experimental prototypes for boss or client.

That’s why it was a real Rapid Application Development Integrated Development Environment (RAD IDE).

VB6 and Delphi were often used by electronics hobbyists to write utilities and control programs.

All these things are no longer being covered by VB.NET.

In Dot-Net, VB is no longer an independent language with a future. It’s an afterthought, a neglected legacy.

The automatic VB to VB.NET translator was introduced in VB.Net 2005, then dumbed down in VB.Net 2008, then scrapped altogether.

Seriously, what’s wrong with you? As an ex-VB6 developer, how can you be so blind as to not see these crucial grievances? The other VB6 developers did sign a petition for MS to bring back VB6, or more precisely to release an update. VB7 was something that VB6 developers still kept asking for a decade after VB6 went EOL.

Seriously, I’m quite disappointed by that attitude. VB Classic was beloved by a silent majority, by “normal” users that wanted to write their own programs, like in the DOS and home computer days. It’s as if those users were being left behind on purpose by MS or IT as a whole.

I hope you understand the dimension of all of this. Microsoft was once the leader of BASIC programming languages. It was their original business in the 1970s. That’s why the attitude against VB Classic/BASIC users is twice as sour.

Sounds like you have learned nothing since the days of VB6 then. I also used (and loved) Delphi, and Turbo Pascal before it. It is perfectly possible to write p*ss poor block structured code in them also, and in VB.NET if you so choose. VB.NET is still a RAD tool that lets you knock up quick prototypes, and VB6 needed a bunch of support files to run (it couldn’t create free standing binary code like Delphi). The support files for .NET may be larger, but cause fewer issues with version compatibility.

BASIC was never a great language. It encouraged writing un-maintainable messes of code and performed poorly. The reason it was popular back in the 8 bit days was due to the fact that BASIC interpreters were small and needed few resources. That and the fact that it was easy to learn made it the de facto choice. Microsoft spotted that and made a BASIC interpreter their first product. They didn’t stop there though, and have been involved with many other languages since then.

Modern programming is less about the language and more about understanding the APIs and system resources. Once you get past that idea then the important thing is to create readable code that prevents anything external from messing with its inner workings. OOP is one, but not the only way of doing this latter thing.

@Sweeney:

>[…] VB.NET is still a RAD tool that lets you knock up quick prototypes, […]

No, it doesn’t. I can’t throw a button and an input box into an editor, double-click the button in said editor and tell it what to do when clicked. In all major modern languages that can do a proper native GUI or are a hidden browser, I have to write tons of boilerplate to get shit done.

Qt? Fiddle with signals.

Gtk? Fiddle with callbacks.

NodeJs? Fiddle with a server client architecture to open a frigging file.

VB6 was easy to use and lived up to the BASIC spirit: open it and throw something together, polish later. VB# doesn’t.

There are reasons why I prefer good old C or C++ and a text interface nowadays. It gets shit done without any boilerplate forced on the user.

@Bastet I’ve no idea what you’ve been trying, but yes you can do precisely that. Pick Windows Forms as your target environment and VB.NET will work exactly like VB6 in terms of the design workflow. You get the ability to write command line code, libraries, visual components, services, Windows Presentation Foundation code etc, but this doesn’t mean you can’t create in the traditional RAD style also.

https://learn.microsoft.com/en-us/previous-versions/visualstudio/visual-basic-6/visual-basic-6-support-policy

This does not sound like giving up.

Does anyone here actually work in a corporate environment? Because most of those still run on-prem hardware for both the server and workstations and they’re almost all Windows boxes.

Works just fine. If anything, hardware lasts longer productively running Windows for workplace tasks than ever before. Probably part of why Win11 instituted a surprisingly high bar for certified hardware even though Win10 will install and run on stuff as old as (and probably older than) a Core2 build if you have reasonably fast storage in there to install it on and run it from.

Hardware refreshes on leased units used to be much more necessary after 3-5 years than they are now. Many more people ask to keep their old workstations and that almost never happened in the bad old days.

I’m not sure many people here bashing Windows are basing their opinions on much more than very limited experience with home machines.

Corporate programmer here!

We have windows _and_ linux servers for cross-compilation to “sane” hardware running embedded software.

Linux is more reliable (one was up for over a year before needing a reboot), faster for the same hardware, and doesn’t need a nasty s/w upgrade multiple times a month.

The windows stuff is heading for the cloud anyway, which adds yet another layer of inefficiency (not to mention needing 100% internet connectivity). It’s getting slower every year.

On my personal laptop I run linux in a VM when I get hacked off with all the memory leaks in the Windoze apps (corporate says we must have a windoze laptop). It runs everything faster.

a weird irony here, i don’t know how to parse it into like a true/false evaluation of “absolutely no planning”, but microsoft has a lot of high quality planning. by the late 90s, they already had an internal plan for dot net to become what we now know as android. but somehow, android got there first even though android started from a standstill after a giant megacorp had already had a decade of progress on a good plan.

and today they’re doing the same thing with putting the browser in the cloud. running a browser remotely is a fantastic battery life hack. i believe it will take over the world in some form in 5-10 years. and here, microsoft has already announced the plan, years ahead of the pack. and yet i know, when it becomes everyday, microsoft will not be there.

i honestly don’t know how a company can repeatedly have such good plans and such poor execution. i just thank god that microsoft isn’t a part of my daily life since the 90s.

It’s a tale as old as time. I first heard it as “What Andy [Grove] giveth, Bill [Gates] taketh away” in the 90s.

It’s not too dissimilar to the phenomenon of adding lanes to a highway to “solve” congestion. More lanes means less congestion, which makes that route more attractive to drivers, which means more congestion…

It takes effort to make software perform well. It might be fast when simple, but as you add complexity (aka selling points) it gets slower. In development you try to speed things up to an acceptable level, but what defines that? Typically, it’s the performance of the software on the developers’ and salesmen’s machines.

And those machines are modern. It’s rare to test on an older machine unless that’s known to be an important part of the customer base.

It’s clearly a waste of effort to improve the performance beyond what a typical user expects, so optimisation and tweaking stops at the point where it’s acceptable. And slower machines don’t feature.

Your point about acceptable performance of the software on the developer’s machine is part of the problem. It should never be considered a benchmark for acceptable end-user performance, because I’ve yet to meet a developer who uses a system that comes even remotely close to that of the average end user. I’ve always considered this to be the “developer’s dilemma”: a problem requiring unwavering diligence that is hard to maintain in the corporate world.

Just force developers to work on 10 year old hardware.

No, I don’t think so. Rather, force developers to TEST their software on 10 year old hardware.

Development itself requires constant changes and re-compiles. On old hardware each of those would take ages, slowing development down until it comes to a halt.

Developing and running are two different things, really.

Like cooking and eating.