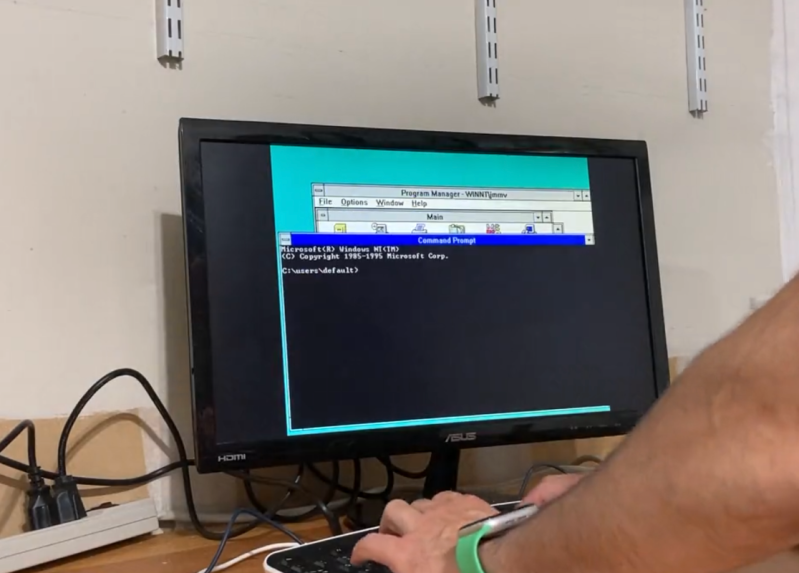

[Julio] has an older computer sitting on a desk, and recorded a quick video with it showing how fast this computer can do seemingly simple things, like open default Windows applications including the command prompt and Notepad. Compared to his modern laptop, which seems to struggle with even these basic tasks despite its impressive modern hardware, the antique machine seems like a speed demon. His videos set off a huge debate about why it seems that modern personal computers often appear slower than machines of the past.

After going through plenty of plausible scenarios for what is causing the slowdown, [Julio] seems to settle on a nuanced point regarding abstraction. Plenty of application developers are attempting to minimize the amount of development time for their programs while maximizing the number of platforms they run on, which often involves using a compatibility layer, which abstracts the software away from the hardware and increases the overhead needed to run programs. Things like this are possible thanks to the amount of computing power of modern machines, but not without a slight cost of higher latency. For applications developed natively, the response times would be expected to be quite good, but fewer applications are developed natively now including things that might seem like they otherwise would be. Notepad, for example, is now based on UWP.
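To make the cost of stacked layers concrete, here is a minimal sketch in Python. It is not [Julio]'s benchmark: the names `add_one`, `wrap`, `build_layers`, and `measure` are made up for illustration, and the pass-through wrappers only stand in for a real compatibility layer. Each layer does no work of its own, yet every call still has to travel through all of them.

```python
import timeit


def add_one(x):
    """The 'native' operation: one direct function call."""
    return x + 1


def wrap(fn):
    """Return a pass-through layer that only forwards the call to fn."""
    def layer(x):
        return fn(x)
    return layer


def build_layers(fn, depth):
    """Stack `depth` pass-through layers on top of fn (hypothetical helper)."""
    for _ in range(depth):
        fn = wrap(fn)
    return fn


def measure(fn, repeats=200_000):
    """Total seconds spent calling fn(1) `repeats` times."""
    return timeit.timeit(lambda: fn(1), number=repeats)


if __name__ == "__main__":
    layered = build_layers(add_one, 10)
    print(f"direct call: {measure(add_one):.4f} s")
    print(f"10 layers:   {measure(layered):.4f} s")
```

Both versions return the same result. The absolute timings depend entirely on the machine and interpreter, so treat any difference as an illustration of per-layer overhead rather than a measurement of a real application.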

While there are plenty of plausible reasons for these slowdowns in apparent speed, it’s likely a combination of many things; death by a thousand cuts. Desktop applications built with a browser compatibility layer, software companies who are reducing their own costs by perhaps not abiding by best programming practices or simply taking advantage of modern computing power to reduce their costs, and of course the fact that modern software often needs more hardware resources to run safely and securely than equivalents from the past.

I live an everyday fight against this hot mess. Everything grows more complicated by the day and the market as a whole just will not put its collective foot down. More and more bloat down to even the OS itself (which should be the lightest part of your computer!) just keeps getting piled on. It’s not just applications. I see games more and more just demand that users simply add on more and more resources rather than performing even basic optimizations and struggle to run completely smoothly even on the most powerful components sometimes. For all the functions of many of these games they should run pretty smoothly on a low-medium class processor with 4GB of RAM even, yet they require 16GB and a very high medium class (or even something in the upper class) to at all run smoothly just because of bloat. (Especially becoming a problem with nVidia still so far behind the curve on VRAM since some of the lack of optimizations includes textures, shaders, and etc.)

FWIW you can strike a balance with some things at least. Some OS features can be slimmed/removed and you don’t have to use the latest (OMG! BLASPHEMY!!! Please don’t kill me! I didn’t mean it!) Also, most applications have much cleaner third party alternatives. Notepad++ starts almost as fast as I can blink and every function is just as snappy fast for me for example. LibreOffice is orders of magnitude times other orders of magnitude cleaner, smaller, and faster than Microsoft Office and runs so much better that even weak hardware handles it well. And so on and so on.

I will say the difference isn’t as extreme as it feels like though. As a coincidence I’ve been messing around with DOSBox-X recently going down memory lane hoping to maybe get a few old games going in a virtual gaming machine. (I already have had a MS-DOS setup for ages and that is still snappy as should be expected for something that barely even does anything on its own and is more just a gateway for other processes.) I setup a virtual machine with Windows 98 Second Edition among other things and I have to admit that a lot of processes are a lot more sluggish even on a fairly fast virtual machine than I would really have thought. And I don’t think it’s emulation limitations either. (Which is to say there are some limitations like lack of GPU acceleration, but the stuff I was doing for setup/etc doesn’t use acceleration.)

And the more I think about it the more I remember that it really just plain wasn’t as snappy back then. I was just more patient (fed in part by always being well behind the curve in hardware back in those days. Back then if a CPU couldn’t keep up the entire system just blocked. Today you can perform other tasks because chances are nothing can fully utilize all cores 100% and even many functions within a single program will still continue to work while it’s otherwise processing a task.) I think we just get used to this so much so that when something does actually cause something to be less than instantly responsive it causes a bit of lack of patience. And I’m definitely guilty of this lately. Some things taking longer to focus than I would expect, thus not taking my keyboard input that I started too soon drive me crazy, but really it’s just a fraction of a second delay that once would probably have been multiple seconds instead. I’m totally spoiled by fast processors, SSDs, etc, so it really bugs me when something doesn’t take my input *NOW*. Which is actually silly. That said, it’s still really bad in a number of ways at a number of different levels for everything to get too bloated. Even if nothing else, it’s just that much more electricity on your bill.

Well said

Agreed. My current job is such that the db has been designed for backend convenience, not consumers… insane mutations of unorganized db data occur on the front-end because of this. When I bring up things like normalization I get responses like “just academics”… yeah, the basics imho. The big problem here is the ORM. I find them to be infinitely more trouble than they're worth, mainly because an ORM gives the programmer undue confidence to pretend like he understands db admin while avoiding understanding SQL and corrupting the data structure.

A lot of this is caused by the software world not drawing a distinction between programmers and software engineers in the corporate world. These fields are distinct, yet I see software engineers promoted through the ranks with a 3-month programming course certificate. To get a PE license in real state-certified software engineering, meaning you have a professional license like a doctor or lawyer, you have to get a 4-year ABET degree, 4 years of apprenticeship and two 8-hour tests, annual certification and legally required annual training hours. To be a programmer, good or bad, you need literally nothing… programmer/developer is to software what writer is to paper, engineer is to software what author is to book… one is the act of building something practical that is guaranteed to work flawlessly, the other is just implementing, good or bad. In other words, it’s as if the software world has taken construction workers and replaced them with architects and vice versa when constructing a building… even worse, the software corporate world doesn’t seem to understand the distinction between an architect and an engineer… architects show you what you want to build (they call them designers, which engineers refer to as drafting) while engineers show you what you actually can build and/or need to build.

This in turn is all caused by the software world thinking that EVERYTHING they do is new and needs a unique, custom, in-the-moment solution. This is what birthed agile… they didn’t know real, good, solid project management, they didn’t know how to control those parameters for maximum agility as desired, so they came up with a buzzword and use it as an excuse to ignore all the things GOOD project management was intended to solve.

It’s a little pathetic… most software is totally focused on winning each battle while ignoring the war, tactics are resultingly immature and strategies are non-existent.

The worst thing for me is the blasted file manager. Simple tasks like moving a directory inside another or renaming a file will trigger a 1-2 second delay while it shows me a dialog and is “calculating”. Open a folder with media that I forgot to designate as “not media” triggers 5-6 seconds of delay while thumbnails or directory views are calculated. It’s infuriating. Ancient Win95 running on spinning rust acted faster.

Agreed – file manager is terrible these days. I wanted to copy 1000s of files between machines and had to resort to command prompt methods

Easy solution:

Stop buying/using garbage software. When people ignore Microsoft’s bad practices and “use it anyway” because they “have no alternatives”, they’re just reinforcing Microsoft’s behavior. That tells Microsoft that they can do whatever they want with impunity, and people will just suck it up and install the “latest and greatest”.

You don’t HAVE to be stuck in that paradigm. Vote with your wallet and conscience.

Most developers these days need an entire framework with external libraries just to read a value from a text box.

The cause is lack of good software engineers. Using frameworks and abstractions allows companies to create software quicker with fewer engineers and less experienced engineers. But it comes at the expense of quality (stability, performance, binary size etc.).

I’m an embedded software engineer and I love coding bare metal. It’s often much more elegant and performs better. We have a bare metal device with a graphical color display that boots in 10ms. But we also have devices running embedded Linux that take 10 seconds to boot. Even our Rohde & Schwarz bench power supply runs Linux and takes 10 seconds to boot. An OS running on a CPU with multiple cores running at gigahertz frequencies should boot within a second. Apps should start within 500ms.

I think a better solution is simply less software (sometimes it’s not needed, not everything needs to be “smart”) and less complicated software. Focus on elegance and not on features.

Couldn’t agree more. I’m an absolute noob to the programming world, but this comment makes the most sense!

How fast did PyCharm open? I wonder how much speedier Photoshop’s generative (background) fill was.

My point is this: capabilities.

There’s only one UWP app I even use because of how terrible they are—Phone Link. Everything else has been replaced with traditional alternatives (like Notepad++ x86_64 executable version, not the UWP app). And I don’t use Windows 11 except for one machine. It’s STILL buggy and unreliable (can’t count how often the desktop just refuses to respond).

I have a newer i7 with loads of RAM and an SSD that came with W11. The most basic function that an operating system can do is launch an application, right? The Start Menu has typically been Windows’ method for that since its inception in ’95. Most recent iterations have allowed you to begin typing after pulling up the Start Menu to quickly search it. W11 now uses this to search the web or the MS Store, etc, and has completely crippled the speed of this function, rendering it all but useless. That’s not just abstraction layers, it’s blocking the primary function of something to bloat it with secondary crap that I don’t want and can’t turn off.

Have you noticed they also got rid of the seconds on the clock in W11? You cannot see the seconds in the bottom right corner. Even if you click on it. You need a third party application to bring them back. They’ve had the clock since Windows 3.11.

It’s really convenient that for simple operations on the sort of data you encounter daily, we’re at the point where cpu throughput is usually not the limiting factor – just latency of things and both latency and throughput of I/O. E.G. if I need to search a bunch of text files with notepad++, it won’t take that long even if I am using regex and not just a contains. But of course hardware with greater throughput doesn’t equate to proportionately less latency, they’re different things. Extra layers of abstraction may or may not be worth it, but even if you take away that part, something like modern Word uses the extra performance to do a lot more *stuff* than 1995 Wordpad. They’d rather do all that stuff and some other questionable things besides than get such good latency that other factors become limiting. And really, at least not everything has more latency. I remember typing a sentence sometimes with an XP machine built in ’99, and I could take a sip of a drink before the letters finished appearing if the machine was bogged down at all. I did like how although everything seemed to be buried in menus 5 levels deep, at least when you hovered over a menu it popped up immediately and didn’t waste space, so you didn’t need to scroll as much – again, unless everything was bogged down.
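A back-of-the-envelope sketch of the throughput-versus-latency point above, assuming a deliberately simple model in which one operation costs a fixed latency plus the data size divided by throughput. The 10 ms latency and the 100 MB/s and 1 GB/s throughput figures are illustrative assumptions, not measurements, and the function names are made up.

```python
def operation_time(size_bytes, latency_s, throughput_bytes_per_s):
    """Simplified model: fixed latency plus time to move the data."""
    return latency_s + size_bytes / throughput_bytes_per_s


def speedup(size_bytes, latency_s, slow_throughput, fast_throughput):
    """How much faster the operation gets when only throughput improves."""
    slow = operation_time(size_bytes, latency_s, slow_throughput)
    fast = operation_time(size_bytes, latency_s, fast_throughput)
    return slow / fast


if __name__ == "__main__":
    LATENCY = 0.010   # assumed 10 ms fixed cost per operation
    SLOW = 100e6      # assumed 100 MB/s
    FAST = 1e9        # assumed 1 GB/s, i.e. 10x the throughput

    for label, size in [("4 KB file", 4_096), ("1 GB file", 1_000_000_000)]:
        print(f"{label}: {speedup(size, LATENCY, SLOW, FAST):.2f}x faster")
```

Under these assumptions the small file gains almost nothing from ten times the throughput, because the fixed latency dominates, while the large file gets close to the full tenfold improvement.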

Honestly, all of this stuff has massively more latency than the analog days. Back then, the latency of e.g. receiving audio was low enough that you could have several devices tuned to the same transmitter and they would all be in sync well enough that you might not be able to tell they were independent devices instead of multiple speakers from the same device. And a number of other devices were sync’d to the grid mains instead of using NTP or onboard clocks, so in some situations two similar devices would do the same thing at the same point on the mains sine wave, which is another assumption you can’t make anymore. (E.G. if two lights in a room use PWM dimming, there’s no reason to assume it will be synchronized).

There’s wisdom, of the crowd here, a combination of code bloat and the law of diminishing returns, sucks the life out of our systems. I can factory reset my iPad mini 6 and Mac mini M1, in 40 minutes, the same set of hardware and backups, a fan assisted PCIe4 stick, with a fan, Thunderbolt USB C cable. Unified memory, PCIe4 memory, on the Mac mini M1, 5nm, 16 billion transistors, I factory reset, get rid of the update, on update bloat, as if it were a fresh boot. The Office 2021 Professional, slides out into place, forced to have some discipline, by the operating system; or the iPad, the discipline of a mobile operating system, factory reset, an application reload of 100 apps, over WiFi 6.

In Windows, an old laptop, updating, 20 hours, replacing hard drive, with a flash drive, that is ghosting the hard to flash drives after the long updating, factory reset, 3 hours, loading Office 2021 an hour and a half, it had 16 GB of RAM. But that hard drive, was mighty slow, hence the long update time, what I did notice, was the speed of defragmentation, in times gone by, that would take many hours, say 7 hours, it only took about an hour and a half. So when I ghosted, things were in order, after 6 defrags, I’d gotten it down to 4 passes and 12 minutes a defrag. Chrome is rather Beta, but I keep a Chrome tab, as a spare constant updating of the OS, apps, like windows, lots of reconfiguring, with the Apple product, very little reconfiguring, although the flash and RAM are mighty expensive.

The processor (Core, Athlon, Phenom, Ryzen) is very old technology. You can think that multi-core and cache are some grand thing, but they’re not. At the root of the processor the technology hasn’t changed. I keep waiting for some kind of breakthrough that will reignite my technolust, but I always stand disappointed.

“simply taking advantage of modern computing power to reduce their costs”

You got it right at the end of the article. It’s getting ridiculous as a whole not just Software.

Bloat is a huge driving factor. Each new version of any operating system adds a large number of new features and functionality that many users will never need. New versions of most software packages add even more new functionality as well. All of this increases disk space usage, which increases load time, and increases the memory footprint, which adds to load time and causes slower operation. We would be better off in each case if more of this functionality came via plugins and could be optionally installed and enabled.

The shift to more and more abstraction is definitely a problem as well. Even if it weren’t for the browser layer and its extra weight, interpreted languages virtually never operate as fast as compiled languages. So much software today is written in JavaScript and Python and is simply not as fast and can’t be by nature. They were never intended for the usages they have been forced into.

One that personally bothers me more than any of these other problems is one that I see in more and more software engineering articles, posts, and forums. “With modern hardware you don’t need to worry about performance unless you see a problem.” or “Preemptive over-optimization just increases development time and complicates your code.” We used to assume that every machine running our software was running other things and that they all had absolute minimal computing power, so we wrote everything to be as optimal as we knew how and tried to use more performant languages when needed. Sure, one piece of software running on your computer being suboptimal probably isn’t going to even be noticed today unless something massively wrong is going on, like a memory leak for example. However, when there are hundreds of processes and subprocesses running and they are all operating under this new philosophy, of course there is going to be a noticeable problem.

Back in ~1990ish in comp.arch (iirc) someone made a statement along the lines of “why does any personal workstation need more than SpecInt 20?”. My response was approximately “we software people will happily add another layer of abstraction on top of X, etc and use up all the CPU speed you give us. If need be we’ll add voice recognition…”

And pretty much I’ve been right. But as pointed out here, many important features came with these extra layers, like utf8, etc, etc, so it does a lot more. Some of these added features are spurious, like cute rounded corners on windows and animations, but many others are extremely helpful to some or all users.

Yet unanswered, hides the shell within a shell within a shell within a shell within a shell within a shell within a shell within a shell , monster.

In any case: What are we complaining about? In 2023, you can literally buy a >10 year old high end computer (eg a 3rd gen i7) *very* cheap, do maintenance and some very cheap upgrades (maxed out RAM and SSD) and end up with something that will still have mid-level performance today (never mind the artificial restrictions in W11, fact is once you bypass the checks, W11 will run well on most anything that would run W10 well).

Project this to 2013… Nope, you are in the W7 era already, a K8 or very early P4 box will NOT cut it at all, not even a Dual LGA771 Xeon will. A very trim Linux distribution from 2013 or even 2023 will run, but forget eg streaming video. PPC machines (G4) from 2003 would still be reasonably useful with MacOS X 10.5

Project this to 2003 … Hell, 2003 was XP and desktop linuxes that began to use rather heavy desktops, and 1993 would only have 486s and 66MHz pentiums for you. NOPE. Linux with a desktop, even lightweight, NEVER ran at any non-frustrating speed on 486 class machines (even a literal 1994 distribution will feel really slow).

In 1993… well, some DOS stuff actually would have still been useful on an *original XT class machine*, the only thing you got in 1983 (Windows 3.0 actually ran on one if you insisted). PC style computers cost a ton back then, and software developers did not assume everyone was using the most modern or high end. The actual performance difference between a 1993 high end system (Pentium 66 MHz or 486DX50) and a 1983 high end system (still an XT class machine any way you look at it!), however, was absolutely brutal, orders of magnitude, far larger than 2023 mainstream i5 vs 2013 i5 for certain.

Also: Oh, the cryptography!

Whoever tried to use SSH to or from a 386/486 or 68k class machine will know it can take minutes(!) to establish the connections, and tens of minutes to generate host keys or public/private key pairs. And that is with 2000s era SSH – there are newer algorithms in use and enforced by recent versions, you will notice if you try to scp anything to/from a machine without AES-NI (eg some Atoms) and end up with a performance ceiling of a few tens of megabytes per second….

And a similar thing recently happened when privacy intrusions were monopolized by certain big tech companies, errr I meant mitigated, by going all-HTTPS everywhere, with heavier algorithms than 2000s HTTPS on top of that….

And… someone might not even notice they installed a full disk encrypted OS on a slow CPU these days, and wonder what isn’t quite right…

I run Linux on older computers – runs quite fast.

One problem with Windows is that updates cause system files to be fragmented, and those files cannot be defragmented when the system is running, yet there is no standalone defragmenter. (Note Linux files could be defragmented and the new ones used on a reboot)

Another is that Dave Cutler, architect of VAX/VMS and then WinNT, seems to have taken the bad stuff from VAX/VMS and not the good stuff. Heavyweight processes and other things I don’t remember from my VMS and Ultrix internals courses from at least 30 years ago.

But yes, another problem is software engineering. The reuse of existing software isn’t like building a bridge where girders, brackets, rivets, bolts, etc. are standard – software engineering has a bolt and nut, an adapter for both the bolt and nut, adapters for the brackets the bolts and nuts attach to, and for the beam they hold up.

Modern software spends a lot more time “contacting home base” and cloud-based data. This is very very slow

Use tiny11… issue solved…

Unless you need to power user it…

Many people already gave their thoughts so I’m going to keep mine short.

Three points are often brought up: portability, compatibility, and efficiency.

While I do agree there is a level of truth to both sides’ arguments, time is still the biggest factor.

90’s code was incredibly small by today’s standards. It is obvious we are cramming so many more features, content and considerations into every app. We don’t just need it to run on any hardware and any platform, we need it to look good in any resolution, to adapt how it runs to the number of cores and threads, to adapt its memory usage to what’s available, to handle unexpected cases that even the developers can forget about.

All of this requires a lot of code. This is code that could be done directly in assembly, with every line being given the utmost consideration to maximize performance, but:

-this code would have to be mostly rewritten for every platform

-it would require hundreds if not thousands more man-hours

-any mistake would throw the whole thing away

A good point of comparison imo is Windows. People have a lot of things to say about their dislike of modern versions of the OS. But they mostly idealize the past, they remember only the good things. It is a rare occurrence nowadays to blue screen, the bugs you encounter are temporary in nature, never permanent, your devices work immediately when you plug them in, most software installs without needing a restart, you don’t need to manually care for your HDD’s fragmentation, if you even use one anymore, file corruption is a distant memory, a single app doesn’t bring the whole system down, etc.

Modern programs cost more resources, but they also do more to give you the best experience everywhere anytime.

Each layer of abstraction removes a percentage of additional development time that would make it impossible to do what’s done now.

Honestly, it’s a number of things.

1. More programs running at boot.

2. Encryption.

3. Bad programming.

4. Security.

5. Programs are bigger. Even notepad has changed over the years.

6. HDDs got denser, some even spin slower. Some have more platters to search. Is there a fair way to test SSDs on old IDE hardware connections? I actually don’t know, but would think it’s possible.

7. Push those pixels, kid! 640×480 vs 4k.

8. There’s so much more you can do with modern computers. There’s also far more hardware that runs a whole lot faster. Case in point, a 10Mbps LAN card vs a 10Gbps one. 1000x more data getting pumped in. Lots of decrypting and running of cat videos! Want to go back further to dial-up?

9. Cores! Cores everywhere! And yet many programs still only use one…

10. Remember how CPU flaws had to essentially be patched in ways that reduced their performance?
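Points 7 and 8 above are easy to put numbers on. A small sketch, assuming “4k” means 3840×2160 (UHD); the function names are made up for illustration.

```python
def pixel_count(width, height):
    """Number of pixels in one frame."""
    return width * height


def ratio(new, old):
    """How many times larger `new` is than `old`."""
    return new / old


if __name__ == "__main__":
    vga = pixel_count(640, 480)      # 307,200 pixels
    uhd = pixel_count(3840, 2160)    # 8,294,400 pixels (assumed meaning of "4k")
    print(f"pixels per frame: {ratio(uhd, vga):.0f}x")            # 27x

    lan_old = 10 * 10**6             # 10 Mbps in bits per second
    lan_new = 10 * 10**9             # 10 Gbps in bits per second
    print(f"network bandwidth: {ratio(lan_new, lan_old):.0f}x")   # 1000x
```

So every redraw touches 27 times as many pixels, and the network card can deliver 1000 times as much data, before any software overhead is counted.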

Couldn’t the speed problems be due to a bottleneck in the software, one that could be avoided if the computer was programmed to finish certain tasks before others while performing less important ones in the background?

“often involves using a compatibility layer, which abstracts the software away from the hardware and increases the overhead needed to run programs. Things like this are possible thanks to the amount of computing power of modern machines, but not without a slight cost of higher latency”

But why are these newer abstraction layers so poorly written? We didn’t invent abstraction in the 21st century. My applications back in the 1990s ran on a dozen wildly different platforms (rather than just 2 or 3 which are practically identical), and with far lower latency than modern ones.

We have this thing called a “compiler”. It lets the programmer write abstract code, and it generates optimized machine code. Did programmers forget that compilers exist?

Java and .NET have been running their intermediary layers for twenty years, this is not new.

Current builds of Windows seem to start with 180+ processes running and over 500 scheduled tasks. Rein that in a heck of a lot and performance does get a bit better. Tread with care, as one can damage a Windows install if going too far. It is nice to have one’s computer resources available and not working away on tasks for the OS provider.

I agree. When I used Windows XP in 2001 on an 800 MHz processor with 128 MB of RAM, it worked very well compared to my current laptop.

It’s 100% bad software development. Bad practices, bad coders, and an insane dependency on libraries and “other people’s work.” I have come into dozens of projects, and sped things up by 10 times, sometimes 100 times. Peers are constantly asking me how I do it, and I almost don’t know how to answer it. Like… I don’t actively sabotage my software with every line of code I write. I don’t intentionally *try* to screw it up like my peers. And they don’t understand it. I don’t even try to be efficient. I don’t really care, because modern computers are so powerful. But I also don’t go out of my way to be as inefficient as possible, which seems to be what 99% of programmers are doing.