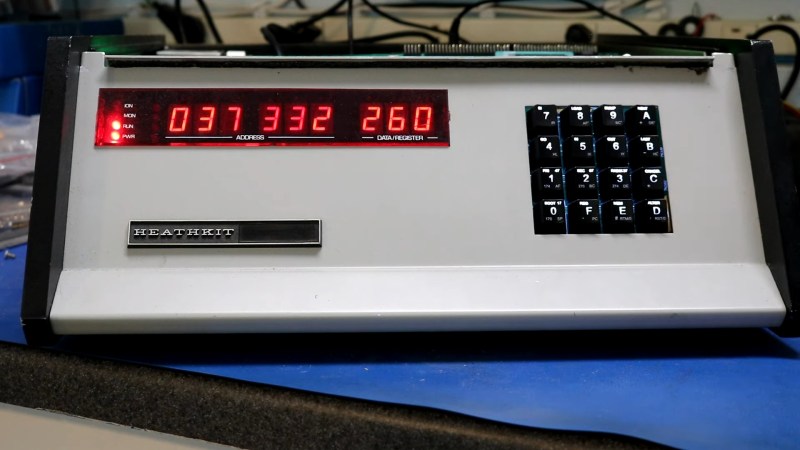

Typically when you’re replacing parts in an old computer, it’s either for repairs or an upgrade. Upgrades like fitting a more capable processor are the most common, and can bring an old machine a bit closer to the modern era. [Dr. Scott M. Baker] had a different idea: he downgraded a Heathkit H8 from an 8080 to an 8008.

Despite the very similar model numbers, the 8080 runs at four to nearly sixteen times the speed of its predecessor. The 8008 is also far less capable on several other fronts: it can address only 16 KiB of memory instead of 64 KiB, it has just 32 I/O ports, its stack is a small on-chip one that allows only seven levels of nested calls, and even its interrupt handling is more limited. It does have one thing going for it, though: the 8008 is widely known as the world’s first 8-bit microprocessor.
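Where does the limit of 32 I/O ports come from? As the 8008 instruction set is commonly documented, input and output instructions share the opcode pattern `01RRMMM1`: the five bits `RRMMM` form the port number, with ports 0 to 7 being inputs (`RR = 00`) and ports 8 to 31 being outputs. The sketch assumes that encoding; the function name `decode_io` is purely illustrative.

```python
def decode_io(opcode: int):
    """Classify an 8008 opcode as ("IN", port), ("OUT", port) or None.

    Assumes the documented I/O pattern 01RRMMM1, where RRMMM is a
    5-bit port number: 0-7 are input ports, 8-31 are output ports.
    """
    if (opcode & 0b11000001) != 0b01000001:
        return None  # not an I/O instruction
    port = (opcode >> 1) & 0b11111  # extract the RRMMM field
    return ("IN", port) if port < 8 else ("OUT", port)


# Walk every possible opcode and collect the I/O ports they can reach.
decoded = [d for d in map(decode_io, range(256)) if d is not None]
inputs = sorted(p for kind, p in decoded if kind == "IN")
outputs = sorted(p for kind, p in decoded if kind == "OUT")

if __name__ == "__main__":
    print(f"{len(inputs)} input ports, {len(outputs)} output ports")
```

With only five bits for the port number baked into the opcode, 32 ports is a hard ceiling; going beyond it requires external hardware rather than new instructions.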

In the video after the break, [Scott] goes into great detail about the challenges of replacing the 8080 with the 8008, starting with the clock. The 8008 needs a two-phase clock, so what would otherwise be a single oscillator now also needs a clock divider and two NAND gates.
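The article doesn’t detail the circuit, so here is one hypothetical way a divider plus two NAND gates can produce two non-overlapping phases: a 2-bit Johnson (twisted-ring) counter divides the oscillator by four, and each NAND gate decodes one of its four states. This is not taken from [Scott]’s schematic, and it is a logic-level simulation only; the real 8008 clock inputs have pulse-width and voltage requirements from the datasheet that this ignores.

```python
def nand(a: int, b: int) -> int:
    """Model a single 2-input NAND gate on 0/1 logic levels."""
    return 0 if (a and b) else 1


def two_phase_clock(cycles: int) -> list[tuple[int, int]]:
    """Return (phi1, phi2) as active-high levels for each oscillator tick.

    Hypothetical circuit: two flip-flops wired as a Johnson counter
    (divide by four), decoded by two NAND gates.
    """
    a, b = 0, 0  # flip-flop Q outputs; the Q-bar outputs are 1 - a and 1 - b
    samples = []
    for _ in range(cycles):
        # Each NAND gate gives an active-low pulse in exactly one state.
        phi1_n = nand(1 - a, 1 - b)  # low only when A=0, B=0
        phi2_n = nand(a, b)          # low only when A=1, B=1
        # Invert to active-high so the result is easier to read.
        samples.append((1 - phi1_n, 1 - phi2_n))
        # Johnson counter step: 00 -> 10 -> 11 -> 01 -> 00.
        # Only one flip-flop changes per tick, which in hardware keeps
        # the decoded outputs free of glitches.
        a, b = 1 - b, a
    return samples


if __name__ == "__main__":
    for tick, (phi1, phi2) in enumerate(two_phase_clock(8)):
        print(f"tick {tick}: phi1={phi1} phi2={phi2}")
```

Because states 00 and 11 are never adjacent in the Johnson sequence, there is always one idle tick between the two phases, which is what keeps them from overlapping.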

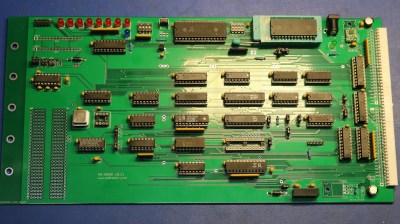

Boring clock stuff aside, he does some great hacking with the I/O ports, including expanding the I/O port count from 32 to the full 256, bit-banging serial, implementing an interrupt controller, and even mapping 64 KiB of memory into the 8008’s 16 KiB address space! With that and a few more special adapter circuits, we think [Scott] has done a great job of downgrading his H8, and the resulting CPU board looks fabulous.
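The article doesn’t say how the memory mapping is wired up. A common way to fit more memory than a CPU can address is bank switching, and this sketch models one hypothetical version: the 14-bit CPU address is split into four 4 KiB windows, and each window has a 4-bit page register, which real hardware would load through an output port, selecting one of sixteen 4 KiB pages of physical RAM. The window size, the class `BankedMemory` and its method names are all assumptions for illustration, not [Scott]’s design.

```python
WINDOW_BITS = 12   # 4 KiB per window and per physical page (assumed)
NUM_WINDOWS = 4    # 4 x 4 KiB = the 8008's 16 KiB (14-bit) address space
NUM_PAGES = 16     # 16 x 4 KiB = 64 KiB of physical memory


class BankedMemory:
    """Hypothetical bank-switched memory behind a 14-bit address bus."""

    def __init__(self) -> None:
        self.ram = bytearray(NUM_PAGES << WINDOW_BITS)  # 65536 bytes
        # Power-on mapping: window n shows physical page n.
        self.page_regs = list(range(NUM_WINDOWS))

    def set_page(self, window: int, page: int) -> None:
        """Model an OUT to a (hypothetical) page-register port."""
        if not (0 <= window < NUM_WINDOWS and 0 <= page < NUM_PAGES):
            raise ValueError("window or page out of range")
        self.page_regs[window] = page

    def translate(self, cpu_addr: int) -> int:
        """Map a 14-bit CPU address to a 16-bit physical address."""
        cpu_addr &= 0x3FFF                    # only 14 address bits exist
        window = cpu_addr >> WINDOW_BITS      # top 2 bits pick the window
        offset = cpu_addr & ((1 << WINDOW_BITS) - 1)
        return (self.page_regs[window] << WINDOW_BITS) | offset

    def read(self, cpu_addr: int) -> int:
        return self.ram[self.translate(cpu_addr)]

    def write(self, cpu_addr: int, value: int) -> None:
        self.ram[self.translate(cpu_addr)] = value & 0xFF


if __name__ == "__main__":
    mem = BankedMemory()
    mem.set_page(3, 15)  # CPU 0x3000-0x3FFF now shows physical 0xF000-0xFFFF
    print(hex(mem.translate(0x3ABC)))
```

The CPU never sees more than 16 KiB at once; software reaches the rest of the 64 KiB by rewriting a page register before touching the corresponding window.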

Maybe you’re wondering what happens if you upgrade the computer instead of the CPU? What you get is this credit-card-sized 6502 computer.

The 4004 is considered to be the world’s first microprocessor.

Yes, but the article says “world’s first 8-bit microprocessor”, and the 4004 is 4-bit.

Stupid small font on phones…

This is inside baseball, but I think there was another processor (or at least chipset) that beat out the 8008 by a couple of years.

IIRC, it was built by Garrett AiResearch for Grumman’s F-14. Sadly, it’s almost unknown today because it was classified at the time, and by the time people were aware of it decades later, the state of the art had moved so far that the story of a weird chip in an old avionics box was just lost.

That’s true, the real “who was first” is seldom certain. The light bulb, the telephone, the first computer, etc.: each time there were multiple inventors who developed in parallel, independently of each other.

Alas, most of the time the history books favor Western inventors. The other, lesser-known individuals don’t get nearly as much credit for what they accomplished. In reality, the “this was invented by” stories are all about the respective patent holders, not the true inventors. Thus, the history books are merely a rough guide; the truth lies somewhere in between.

The F-14 chip was also the first dual-core CPU, IIRC.

https://web.archive.org/web/20190523172420/http://firstmicroprocessor.com/documents/ap1-26-97.pdf

Looks to be a good read, but I’d argue

Wow – it’s amazing just how hard this stuff was in 1970. With that massive 375 kHz clock.

Nowadays, our usual snarky quip “Why don’t you just use an Arduino?” would actually be a simple solution.

Where can one get those, I wonder?

The Grumman F-14 air data computer woulda been a big step forward from the old Bendix Air Data analog computers from back inna day. CuriousMarc and Ken Shirriff got to begin reverse engineering one of those: https://youtu.be/D-wHIDnnQwQ?feature=shared