Despite recent advances in diagnosing cancer, many cases are still diagnosed by taking biopsies and analyzing thin slices of tissue under a microscope. Properly analyzing these tissue sample slides requires highly experienced and skilled pathologists, and remains subject to some level of bias. In 2018, Google announced a convolutional neural network (CNN) based system called the Augmented Reality Microscope (ARM), which uses deep learning and augmented reality (AR) to assist a pathologist with the diagnosis of a tissue sample. A 2022 study in the Journal of Pathology Informatics by David Jin and colleagues (CNBC article) details how well this system performs in ongoing tests.

This particular study investigated the LYmph Node Assistant (LYNA) model, which, as the name suggests, targets the detection of cancer metastases in lymph node biopsies. The basic ARM setup is described on the Google Health GitHub page, which contains all of the required software except for the models, which are available on request. The ARM system is fitted around an existing medical-grade microscope: a camera feeds the CNN model with input data, and any relevant outputs from the model are overlaid on the image that the pathologist is observing (the AR part).
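To make the "overlay" step a bit more concrete, here is a minimal Python sketch of one way a per-region score map could be turned into an outline for display. This is not taken from the ARM software: the function name `outline_mask`, the 0.5 threshold, and the idea that the model emits a simple grid of scores are all assumptions made purely for illustration.

```python
from typing import List

Grid = List[List[float]]


def outline_mask(heatmap: Grid, threshold: float = 0.5) -> List[List[int]]:
    """Return a 0/1 grid marking the border of regions scoring >= threshold.

    Hypothetical helper: `heatmap` stands in for whatever per-region
    scores a model might produce; it is not the ARM output format.
    """
    rows, cols = len(heatmap), len(heatmap[0])

    def hot(r: int, c: int) -> bool:
        # Cells outside the grid count as "not hot", so a flagged region
        # touching the edge of the field of view still gets an outline.
        return 0 <= r < rows and 0 <= c < cols and heatmap[r][c] >= threshold

    mask = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not hot(r, c):
                continue
            neighbours = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            # A flagged cell is on the border if any 4-neighbour is not flagged.
            if not all(hot(nr, nc) for nr, nc in neighbours):
                mask[r][c] = 1
    return mask


if __name__ == "__main__":
    # Toy 5x5 score map with a 3x3 high-scoring block in the middle.
    demo = [[0.9 if 1 <= r <= 3 and 1 <= c <= 3 else 0.1 for c in range(5)]
            for r in range(5)]
    for row in outline_mask(demo):
        print("".join("#" if v else "." for v in row))
```

Drawing only the outline rather than filling the region leaves the tissue inside the flagged area visible, which fits the idea of the overlay as a pointer for the pathologist rather than a replacement for looking at the sample.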

Although the study authors noted that they saw potential in the technology, as with most CNN-based systems a lot depends on how well the training data set was annotated. When a grouping of tissue including cancerous growth was marked too broadly, this could cause the model to draw an improper conclusion. This makes a lot of sense when one considers that this system essentially plays ‘cat or bread’, except with cancer.

These gotchas with recognizing legitimate cancer cases are why the study authors see it mostly as a useful tool for a pathologist. One of the authors, Dr. Niels Olson, notes that back when he was stationed at the naval base in Guam as one of the two pathologists on the island, he would have liked to have a system like ARM as an easy source of a second opinion.

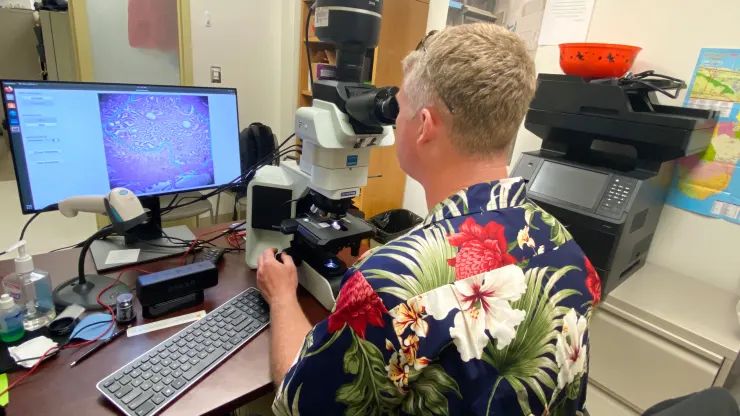

(Heading image: Dr. Niels Olson uses the Augmented Reality Microscope. Credit: US Department of Defense)

Well, I hope Google’s CNN is more accurate in its reporting than the television channel with the same initials.

But then again, it is Google.

Let’s not bring politics here.

Now this is how it /should/ be done, using AI as a tool to assist rather than a solution.

A cancer diagnosis is always a pathological/histological diagnosis. Many/most now also confirm with molecular genetics, flow cytometry, advanced staining and other methods. Not just for diagnosis, but also for prognosis and treatment options.

That said, yeah, the first step is always the old-fashioned microscope.

A friend works in a cancer lab. He said that cancer cells are visibly different from the surrounding ones: the cancer cells look more fatty and have cellulite compared to the other, round shapes. He looks through the microscope and counts how many cancer cells there are, so he can give feedback on whether the therapy works (the number of cancer cells goes down) or nothing happened (the number of cancer cells stays the same).

So what did we need A.I. for?

Erm, so your well qualified friend can do something better with her PhD qualified ass than simply counting circles? Maybe, free her up to do something more valuable?

I mean, I get that luddites don’t want automation, but this seems like an odd choice of application to object to.

Cancer cells do often look very different, but in a sample there is already a bunch of “odd” things, like blood vessels. This tool can, for example, tell the pathologist “hey, look at this corner, there is this one cell that looks funny”. Something that a human might miss, and it is of real concern in the clinic. Basically, the technology can, if it works well enough, make human experts faster and more accurate.

A related open source microscope that supports augmented reality:

https://www.youtube.com/watch?v=Scaw8fW-bQM

https://github.com/TadPath/PUMA

A related project is https://openflexure.org/

“high precision mechanical positioning available to anyone with a 3D printer – for use in microscopes, micromanipulators, and more.”

The AR overlay is a TFT image projected into the optical path. So the original specimen is seen with full optical/analog resolution and features; the viewer is not looking at a digitized image. Only the AR overlay is a digital image.

https://youtu.be/7UbkrZyNgpo?t=469