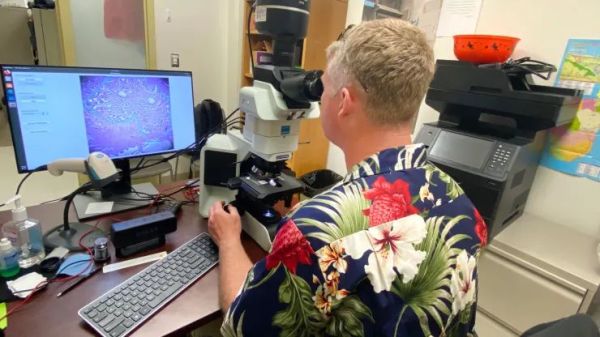

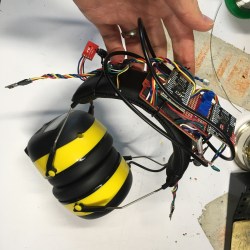

Speech jammers were a meme a little while back. Feeding a person’s own voice back to their ears with a slight delay makes it near-impossible for most people to speak, as our speech system runs on a continual feedback loop. [Benn Jordan] decided to try reworking that concept by replacing headphones with a directed sound projector.

The key to the project is the use of hypersonic sound arrays. These use high-frequency sound beyond the human range of hearing as a carrier for a lower-frequency sound signal. Modulating this ultrasonic carrier creates the perception of lower-frequency sound, producing an audible signal that is highly directional. It’s like a “sound laser”: point it directly at a person and they hear it, but aim it slightly away and it becomes inaudible to them.
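To make the carrier idea concrete, here is a minimal sketch of plain amplitude modulation, the simplest way to put an audible signal onto an ultrasonic carrier. The 40 kHz carrier, 192 kHz sample rate, modulation depth, and the `am_modulate` name are all assumptions for illustration; the write-up doesn't say which modulation scheme or frequencies [Benn]'s array actually uses.

```python
import math

def am_modulate(audio, sample_rate=192_000, carrier_hz=40_000.0, depth=0.8):
    """Amplitude-modulate audio samples (each in [-1, 1]) onto a sine carrier.

    All default values are illustrative assumptions, not figures from the project.
    """
    out = []
    for n, x in enumerate(audio):
        carrier = math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
        # The envelope (1 + depth * x) stays non-negative for depth <= 1,
        # and dividing by (1 + depth) keeps the output within [-1, 1].
        out.append((1.0 + depth * x) * carrier / (1.0 + depth))
    return out

# Example: a 1 kHz test tone, 10 ms long, shifted up onto the carrier.
sample_rate = 192_000
tone = [math.sin(2.0 * math.pi * 1_000.0 * n / sample_rate) for n in range(1920)]
ultrasonic = am_modulate(tone, sample_rate)
```

The output contains only energy around the carrier frequency, so an ordinary listener would hear nothing from it directly. In a real parametric array, the audible signal reappears because air behaves nonlinearly at high sound pressure levels, which this sketch does not model.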

The array allows the delayed voice signal to be fired at a person’s head with a relatively narrow spatial spread. When an individual speaks into a microphone hooked up to the device, delayed audio is sent through the hypersonic array back to the talker’s ears, garbling their speech as their brain gets confused by the feedback.
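The delay stage itself is simple enough to sketch as a fixed-length buffer: each microphone sample goes in, and the sample from a set time earlier comes out. The `DelayLine` class, the 48 kHz sample rate, and the 200 ms delay below are assumptions for illustration; the write-up doesn't state the delay the device uses.

```python
from collections import deque

class DelayLine:
    """Fixed delay of `delay_samples` samples, pre-filled with silence."""

    def __init__(self, delay_samples):
        if delay_samples < 1:
            raise ValueError("delay_samples must be at least 1")
        # maxlen makes the deque drop its oldest entry on every append.
        self._buf = deque([0.0] * delay_samples, maxlen=delay_samples)

    def process(self, sample):
        """Store the newest sample and return the one from delay_samples ago."""
        delayed = self._buf[0]
        self._buf.append(sample)
        return delayed

# Assumed values: 48 kHz microphone audio delayed by 200 ms.
sample_rate = 48_000
delay = DelayLine(round(0.2 * sample_rate))
```

In a live setup, `process` would be called once per incoming microphone sample, and its return value would be what gets modulated and sent to the array.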

[Benn] demonstrated the device in public by offering random individuals $100 to read a paragraph out of a book. The speech jammer worked a treat, and [Benn] was able to keep his money… until one amazingly immune individual breezed through the test. Check out our prior coverage of speech jamming technology. Video after the break.
