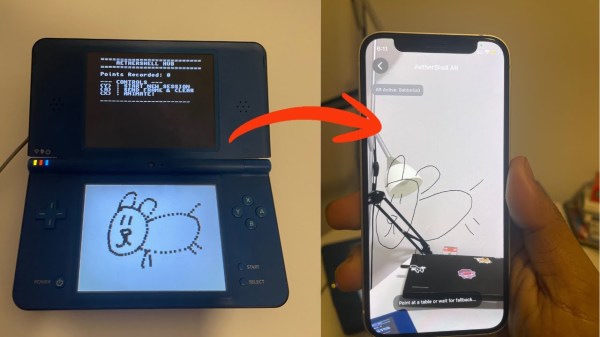

[Bhaskar Das] has been tinkering with one of Nintendo’s more obscure handhelds, the DSi. The old-school console has been given a new job as part of an augmented reality app called AetherShell.

The concept is straightforward enough. The Nintendo DSi runs a small homebrew app that lets you use the stylus to make simple line drawings on the lower touchscreen. These drawings are then trucked out wirelessly as raw touch data in UDP packets and fed into a geometric reconstruction script written in Python, which transforms them into animation frames. A Gemini tool also classifies what the drawings depict, in preparation for a future sound effects upgrade. The frames are then sent to an iPhone app, which uses ARKit APIs and the phone’s camera to display the animations embedded in the surrounding environment via augmented reality.
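To make the DSi-to-Python hop a little more concrete, here is a minimal sketch of what receiving raw touch data over UDP and grouping it into strokes could look like. It is not taken from AetherShell: the packet layout (three little-endian 16-bit values for x, y, and a pen-down flag), the port number, and the names `parse_touch`, `group_strokes`, and `listen` are all assumptions made for illustration.

```python
import socket
import struct
from typing import Iterable, List, Tuple

# Hypothetical packet layout (an assumption, not the project's real format):
# three little-endian unsigned 16-bit values: x, y, pen_down flag.
PACKET = struct.Struct("<HHH")

Touch = Tuple[int, int, bool]
Stroke = List[Tuple[int, int]]


def parse_touch(payload: bytes) -> Touch:
    """Decode one touch sample from the start of a UDP payload."""
    x, y, pen = PACKET.unpack(payload[:PACKET.size])
    return x, y, bool(pen)


def group_strokes(samples: Iterable[Touch]) -> List[Stroke]:
    """Split a stream of samples into strokes, breaking on pen-up."""
    strokes: List[Stroke] = []
    current: Stroke = []
    for x, y, pen_down in samples:
        if pen_down:
            current.append((x, y))
        elif current:
            # Pen lifted: close the stroke in progress.
            strokes.append(current)
            current = []
    if current:
        strokes.append(current)
    return strokes


def listen(port: int = 9000, max_packets: int = 1000) -> List[Stroke]:
    """Read up to max_packets datagrams and return the strokes they form.

    The port is an arbitrary placeholder. Datagrams shorter than one
    sample are skipped but still count toward max_packets.
    """
    samples: List[Touch] = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        for _ in range(max_packets):
            payload, _addr = sock.recvfrom(64)
            if len(payload) < PACKET.size:
                continue
            samples.append(parse_touch(payload))
    return group_strokes(samples)
```

Each stroke comes back as a list of `(x, y)` points, which is roughly the shape of input a reconstruction step would need before turning drawings into animation frames. Since UDP gives no delivery or ordering guarantees, a real implementation would probably also want a sequence number in each packet.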

One might question the utility of this project, given that the iPhone itself has a touchscreen you can draw on, too. It’s a fair question, and one without a real answer, beyond the fact that sometimes it’s really fun to play with an old console and do weird things with it. Plus, there just isn’t enough DSi homebrew out in the world. We’d love to see more.


![[miko_tarik] wearing diy AR goggles in futuristic setting](https://hackaday.com/wp-content/uploads/2024/11/diy-ar-goggles-1200.jpg?w=600&h=450)

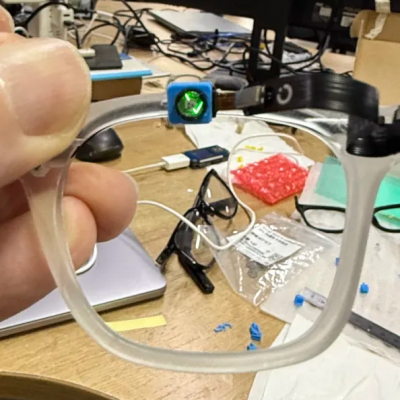

![[miko_tarik] wearing diy AR goggles](https://hackaday.com/wp-content/uploads/2024/11/diy-ar-goggles-smallphoto.jpg?w=400)

Creating Zero wasn’t simple. From designing the frame in Tinkercad to experimenting with transparent PETG for printed lenses (and ultimately switching to resin-cast lenses), [mi_kotalik] faced plenty of challenges. By customizing the SPI displays and tuning them to run at 60 FPS, he achieved an impressive level of real-time responsiveness, letting him explore AR interactions. While the Raspberry Pi Zero’s power is limited, [mi_kotalik] is already planning a V2 with a Compute Module 4 to enable 3D rendering, GPS, and spatial tracking.
