To read the IT press in the early 1990s, those far-off days just before the Web was the go-to source of information, was to be fed a rosy vision of a future in which desktop and server computing would be a unified and powerful experience. IBM and Apple would unite behind a new OS called Taligent that would run Apple, OS/2, and 16-bit Windows code, and coupled with UNIX-based servers, this would revolutionise computing.

We know that this never quite happened as prophesied, but along the way, it did deliver a few forgotten but interesting technologies. [Old VCR] has a look at one of these: a feature of IBM's AIX, which shipped with mid-90s Apple servers as a result of this partnership, in which Mac client applications could have server-side components, allowing them to offload computation to the more powerful machine.

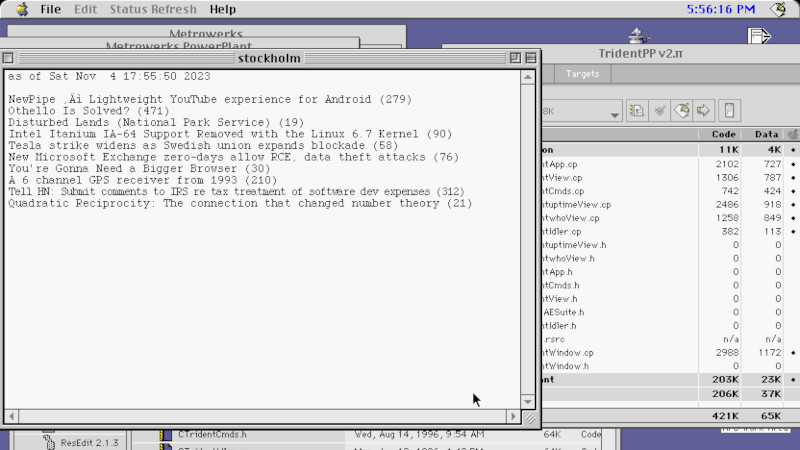

The full article is very long but full of interesting nuggets of forgotten 1990s computing history, and it’s a reminder that DOS/Windows and Novell NetWare weren’t the only games in town. The Taligent/AIX combo never happened, but its legacy found its way into the subsequent products of both companies. By the middle of the decade, even Microsoft had famously been caught out by the rapid rise of the Web. He finishes off by creating a simple sample application using the server-side computing feature: a native Mac OS application that calls a server component to grab the latest Hacker News stories. Unexpectedly, this wasn’t the only 1990s venture from Apple involving another company’s operating system. Sometimes, you just want to run Doom.

Once? Every single app you can download nowadays has some type of internet connection requirement (do you even know of any truly offline app?) and server-side processing.

Transfer speed and latency are the Achilles’ heel of server-side computing. Every 15 years or so the idea rears its head again. “Thin clients”, even X clients, have come and gone. With 100BASE-T it’s possible to run thin clients if all you’re doing is data entry or queries, but anything more interactive will be frustrating.

We have great bandwidth now, but I kind of miss when we had low bandwidth and comparatively minimal latency. You could look at a piece of tech and feel that it was as close to synchronized with something else on the same signal as it’s possible to be. A row of TVs or radios could all play the same thing without the differing latency making it a cacophony. Talking on the phone, local calls and phones all on the same line were faster than the speed of sound. But if you called cross-country you’d really feel the distance, especially if you had someone closer on the same call – either a 3-way or just on the same line.

Latency never was really good, though.

That’s why, say, Gameboy emulators had to use tricks to get net play going.

The internet connection was too bad to allow for simple implementation of Game Link Cable support.

And that’s Z80 technology from the 1980s.

No, I don’t think internet technology was ever a great thing. We got used to it, rather.

IPX/SPX was the norm on LANs in the 90s. It wasn’t routable, maybe, but much quicker.

Then there was the X.25 protocol, also nicer than TCP and IP, maybe.

Unfortunately, Unix helped to push TCP/IP.

It’s like with Windows: the “good” thing doesn’t always win. It’s rather a matter of dominance (market share) etc.

Oh, sure, the *internet* wasn’t that low latency. But other tech was and local stuff could be, and things on the internet like the web or a terminal felt more like they were happening in real time, even though they were slow. I mean, of course you had to bring a computer around to the same LAN if you wanted to do much together. But what little I did back when I had dial-up felt like my machine and the other machine were working together to deliver whatever I asked for. I guess because at the speeds we’re talking, the bandwidth-delay product was tiny and there was maybe a few lines of text in the pipe at once. *shrug*
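To put rough numbers on the "few lines of text in the pipe" intuition, here is a minimal sketch of the bandwidth-delay product, the amount of data that can be in flight on a link at once. The link speeds, round-trip times, and the 80-byte line length are illustrative assumptions, not figures from the thread.

```python
def bytes_in_flight(bandwidth_bits_per_s: int, round_trip_ms: int) -> float:
    """Bandwidth-delay product: bytes that can be 'in the pipe' at once."""
    # bits/s * ms / 1000 gives bits in flight; divide by 8 for bytes.
    return bandwidth_bits_per_s * round_trip_ms / 8000


LINE_BYTES = 80  # assumption: one 80-column line of plain text

# Assumed example links: a 28.8 kbit/s modem with a 100 ms round trip,
# and a 100 Mbit/s connection with a 20 ms round trip.
links = {
    "dial-up": (28_800, 100),
    "broadband": (100_000_000, 20),
}

for name, (bandwidth, rtt_ms) in links.items():
    in_flight = bytes_in_flight(bandwidth, rtt_ms)
    print(f"{name}: {in_flight:.0f} bytes in flight, "
          f"roughly {in_flight / LINE_BYTES:.1f} lines of text")
```

Under these assumptions the dial-up pipe holds only a handful of text lines at a time, while the faster link holds thousands, which fits the feeling described above that the two machines were working in step.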

I’ll defer to the people who used it more than I did as far as internet goes; most tech for me wasn’t internet related at that time.

Cloud computing *is* server-side computing. It happened, it just got rebranded first.

At its core client/server is just a new name for terminal/host.

We’re going backwards, really. Away from personal computing and intelligent end user devices.

The rise of Linux or *nix in the 90s was just a start, maybe. At least it was for me.

OSes like Amiga OS, OS/2 and BeOS were much more modern and elegant in several things and an upgrade to a 1960s technology.

Glad someone else gets it. Cloud computing is just a mainframe.

Exactly. The technologies behind a lot of this stuff, such as containers, virtualization, and namespaces in Linux, are descended from ideas that took root on mainframes. Everything new is really only like 10% new and 90% old technology.

It is close but cloud computing is actually much more than server-side computing. Instead of having a single server that is being time-shared by multiple clients, a cloud service has lots of servers that collaborate somehow to get a job done. The magic is to make sure that proper load sharing happens and that communication between the nodes is optimized. As an end user we just bitch about latencies etc., but ‘the cloud’ is actually an amazing feat of technology and software.
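As a toy illustration of the load sharing mentioned above, here is a minimal sketch of greedy least-loaded dispatch: each incoming job goes to whichever server has the least work queued so far. The function name, server names, and job costs are hypothetical; real cloud schedulers are far more involved, and this is not a description of any particular service.

```python
import heapq


def assign_jobs(job_costs, server_names):
    """Greedy least-loaded dispatch (illustrative only).

    Returns (placement, loads): which job indices each server received,
    and the total cost assigned to each server.
    """
    # Min-heap of (current_load, server_name); the lightest server is on top.
    heap = [(0, name) for name in server_names]
    heapq.heapify(heap)
    placement = {name: [] for name in server_names}

    for job_id, cost in enumerate(job_costs):
        load, name = heapq.heappop(heap)            # least-loaded server
        placement[name].append(job_id)
        heapq.heappush(heap, (load + cost, name))   # put it back with new load

    loads = {name: load for load, name in heap}
    return placement, loads


placement, loads = assign_jobs([5, 3, 2, 4], ["a", "b"])
print(placement)
print(loads)
```

The design choice here is simplicity: a heap keeps "find the least-loaded server" cheap, but the sketch ignores everything that makes the real problem hard, such as node failures, data locality, and communication cost between nodes.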

Naw, it’s a big mess of crap created by mainly incompetent ppl; the inefficiency and overhead are proof of that.

Latency and transfer speed are server-side computing’s worst enemies. About every fifteen years, the idea comes up again. “Thin clients”, even X clients, come and go. With 100BASE-T, you can run a thin client if all you’re doing is data entry or querying; however, anything more interactive will be a pain.

“APPLE” (ARCHEOLOGY)??? Seriously? Problem with “Authors” now: all Apple heads, so warping history. Apple have/had little to do with the above. They couldn’t even get simple multi-tasking/threading running on Mac OS in the 90s, so had to ditch it for Berkeley BSD Unix, then did the same with their hardware, adopting WinPC hardware. Apple is/was a Unix PC with some “Apple Makeup”, apps written by MS etc. Don’t even talk about backend tech… they’re a marketing company.

Making sure that appropriate load sharing occurs and that node-to-node communication is efficient is the magic. Although we end users complain endlessly about latency and other issues, “the cloud” is genuinely a remarkable achievement in software and technology.