You want to build a cluster of computers, but you need a high-speed network fabric that can connect anything to anything. Big bucks, right? [Fang-Pen] developed a 10 Gbps full-mesh network using USB4 that cost him under $50. The first part of the post is about selecting a low-power mini PC, but if you skip down to the “Networking” section, you’ll find the details on the cluster.

The machines selected have two USB4 ports. In theory, you can transfer 40 Gbps on these ports. In reality, the cluster only hit 11 Gbps, but that’s still well above common Ethernet speeds. [Fang-Pen] has yet to determine why he isn’t getting even faster speeds.

Since Linux is Linux, there is a kernel module for networking over Thunderbolt, so the rest is mostly configuration. There are, of course, some limitations. First, the network is only fully connected because the cluster has just three computers. More computers would need more USB4 ports or more hardware.
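To make the port arithmetic concrete, here is a minimal Python sketch (our own illustration, not part of [Fang-Pen]’s setup) of what a fully connected network needs as it grows. Each node needs one port per peer, and each cable joins two nodes. The two-ports-per-machine figure matches the mini PCs in the post; the helper name `full_mesh_requirements` is hypothetical.

```python
def full_mesh_requirements(nodes: int) -> tuple[int, int]:
    """Return (ports per node, total cables) for a fully connected network."""
    if nodes < 2:
        raise ValueError("need at least two nodes")
    ports_per_node = nodes - 1          # one direct link to every peer
    cables = nodes * (nodes - 1) // 2   # each cable is shared by two nodes
    return ports_per_node, cables


# Assumption: two USB4 ports per machine, as on the mini PCs in the post.
USB4_PORTS_PER_MACHINE = 2

for n in range(2, 6):
    ports, cables = full_mesh_requirements(n)
    verdict = "fits" if ports <= USB4_PORTS_PER_MACHINE else "needs more ports"
    print(f"{n} nodes: {ports} ports/node, {cables} cables -> {verdict}")
```

Three nodes is exactly where two ports per machine run out; a fourth node would need three ports on every box and six cables in total.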

In addition, the standard says you can only count on full speed with cables 0.8 meters or shorter. However, that’s the 40 Gbps number. We wondered if a 2 m cable, rated at 20 Gbps, would have still managed 11 Gbps in this setup. A 10GBASE-T network, on the other hand, should allow 100-meter cables. But for a cluster of computers, do you really care?

We’d be interested to see this idea extended to more nodes. High-speed fabric can be useful in networked disk servers, parallel computing, and probably some other scenarios. We’ve seen 10G Ethernet on the Pi, although the PCI bus limited it to about 3.6 Gbps. For reference, we saw another three-computer network with 10GBASE-T built for about $130, with similar limitations.

The 40Gbit rating is raw throughput and doesn’t include the overhead for error correction and alt mode channels. Realistically, you’ll see ~25Gbit maximum usable bandwidth. Maybe that mini has its Thunderbolt controller on a limited-bandwidth internal interconnect to the CPU? Like a PCIe x4 interface?

More like a PCIe 3.0 x4 or PCIe 4.0 x2 interface. Or both ports share a single uplink to the CPU. That jibes with the 25Gbps throughput, and you get basically half of that because the ports are limited.
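A rough back-of-the-envelope check of that guess, as a Python sketch: PCIe 3.0 signals at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, both with 128b/130b encoding, so Gen3 x4 and Gen4 x2 land at the same line rate. This ignores packet and protocol overhead, so real throughput will be lower, and the even split between two ports is a hypothetical scenario, not something measured on this mini PC.

```python
# Per-lane signaling rate in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
PCIE_GT_PER_LANE = {3: 8.0, 4: 16.0}


def pcie_line_rate_gbps(gen: int, lanes: int) -> float:
    """Per-direction bandwidth after 128b/130b encoding; ignores TLP/protocol overhead."""
    return PCIE_GT_PER_LANE[gen] * lanes * 128 / 130


gen3_x4 = pcie_line_rate_gbps(3, 4)
gen4_x2 = pcie_line_rate_gbps(4, 2)
print(f"PCIe 3.0 x4: {gen3_x4:.1f} Gb/s")
print(f"PCIe 4.0 x2: {gen4_x2:.1f} Gb/s")
# Hypothetical: both USB4 ports pushing traffic through one shared uplink at once.
print(f"Per port, if split evenly between two: {gen3_x4 / 2:.1f} Gb/s")
```

Both links come out around 31.5 Gb/s before protocol overhead, and roughly half that per port if two busy ports share one uplink.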

I was going to play around with Thunderbolt 3, but it is a hot mess. I’m waiting for TB5 for it to be kinda mainstream-ish.

The person doing this has hit on a main problem of mini PCs: they are very lacking in the expandability department. Also, he needs to look again at 2.5Gbps switches; they are $65-80.

He probably needs to take a look at Lenovo Tiny, HP Mini, and Dell Micro machines as well. (At least Lenovo has an x8 PCIe slot exposed on the Tiny series, so you could do this with fiber if you wanted.) Not sure about the raw compute, but there are a lot of options, including 65 W processors.

There’s no point in TB/USB4, because OCuLink is already widely available in mini PCs and ITX motherboards (Minisforum and a couple more manufacturers), as well as through M.2/U.2-to-PCIe 4.0/5.0 adapters.

https://hackaday.com/2023/12/13/pcie-for-hackers-external-pcie-and-oculink/

You could also use used CXL adapters; in some cases they may be cheaper and faster.

But again, it’s the same issue as always: serial interfaces can be fast, but they’re nowhere near as fast or as reliable as actual mesh interfaces, especially optical wired/wireless mesh. Hopefully that becomes more of a default standard in the future.

Sure there is.

OCuLink is fun, but it’s clearly an internal connector, with all the limitations that come with it.

An external connection that barely works at 0.5 m and is only rated for 100-ish insertions isn’t going to cut it for most uses…

> More computers would need more USB4 ports or more hardware.

Or a different network architecture, such as a ring network.

Depending on the job, a form of star network could also work, and maybe reduce the amount of data any one node had to deal with. I wonder what the load from routing the packets would be like on the boxes though?
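One way to get a feel for the routing load is to count how many flows each box has to relay for others. The following Python sketch is a toy model under stated assumptions: every node talks to every other node, traffic takes the shortest way around a bidirectional ring, and ties are broken clockwise. The helper `ring_forwarding_load` is hypothetical and says nothing about actual CPU cost per packet.

```python
from itertools import permutations


def ring_forwarding_load(n: int) -> dict[int, int]:
    """Count the flows each node relays (neither sends nor receives) in a
    bidirectional ring where every node talks to every other node.

    Assumptions: shortest-path routing, ties broken clockwise.
    """
    load = {node: 0 for node in range(n)}
    for src, dst in permutations(range(n), 2):
        cw = (dst - src) % n     # hops going clockwise
        ccw = (src - dst) % n    # hops going counter-clockwise
        step = 1 if cw <= ccw else -1
        node = src
        for _ in range(min(cw, ccw) - 1):  # visit intermediate nodes only
            node = (node + step) % n
            load[node] += 1
    return load


for n in (3, 4, 5, 6):
    print(n, ring_forwarding_load(n))
```

With three nodes nobody relays anything, since every node is a direct neighbor; from four nodes on, each box starts carrying other nodes’ traffic, and that share grows with the ring size.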

Ah, I only see two ports. Never mind :-)

I have a 3 machine ring/star network using USB4 on mini PCs in my lab.

Throughput varies wildly. Linux support needs some work.

It tops out at 22.5Gbit with iperf3.

That pins one of the CPU cores at 100%

With 3, isn’t it already a ring?

Is a ring topology doable in networking?

I only rarely encounter it, in serial communication (HVAC equipment), and even there it’s the least recommended option.

It’s very common/recommended to connect a number of Cisco switches in the same stack in a ring.

Isn’t https://en.wikipedia.org/wiki/Token_Ring?useskin=vector both electrically and logically a ring?

I’d be curious to know what the OP is doing with this Ceph cluster.

Er.. I came here for the “mesh”, but that’s not mesh at all. Mesh nowadays is https://en.wikipedia.org/wiki/Mesh_networking and involves automatic routing, self healing and such.

That’s just 3 computers each connected to each other. Most people would use those terms: https://en.wikipedia.org/wiki/Network_topology#Fully_connected_network

For it to be a mesh, every computer would need an individual connection to every other computer, thereby providing alternate paths. This would also increase bandwidth, as you are not passing traffic through one computer to get to the next.

Well... I (respectfully) disagree. What you describe is what he did, and it’s called a “fully connected network”.

For 3 nodes, fully connected and mesh are identical.

I disagree, a mesh network for 3 nodes could have two nodes unable to communicate directly, but able to pass information via the third node. That’s the automatic routing part. The self healing part would be coping with changes to which nodes can communicate.
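The distinction being argued here can be checked mechanically. Below is a minimal Python sketch that uses a plain breadth-first search as a stand-in for a real mesh routing protocol (the helpers `is_fully_connected` and `route` are hypothetical, not any actual mesh software). A fully connected graph has a direct link between every pair; a partial mesh can still deliver A-to-C traffic by hopping through B.

```python
from collections import deque


def is_fully_connected(nodes, links):
    """True if every pair of nodes shares a direct link (a complete graph)."""
    edges = {frozenset(link) for link in links}
    return all(
        frozenset((a, b)) in edges
        for i, a in enumerate(nodes)
        for b in nodes[i + 1:]
    )


def route(nodes, links, src, dst):
    """Fewest-hop path found by BFS, or None if dst is unreachable."""
    neighbors = {node: set() for node in nodes}
    for a, b in links:
        neighbors[a].add(b)
        neighbors[b].add(a)
    prev = {src: None}
    queue = deque([src])
    while queue:
        current = queue.popleft()
        if current == dst:
            path = []
            while current is not None:
                path.append(current)
                current = prev[current]
            return path[::-1]
        for nxt in sorted(neighbors[current]):
            if nxt not in prev:
                prev[nxt] = current
                queue.append(nxt)
    return None


nodes = ["A", "B", "C"]
full = [("A", "B"), ("B", "C"), ("A", "C")]
partial = [("A", "B"), ("B", "C")]  # A and C have no direct link

print(is_fully_connected(nodes, full), route(nodes, full, "A", "C"))        # True ['A', 'C']
print(is_fully_connected(nodes, partial), route(nodes, partial, "A", "C"))  # False ['A', 'B', 'C']
```

Dropping the A-C link from `full` and calling `route` again gives the relayed path, which is a crude stand-in for the self-healing part; a real mesh protocol would detect the failure and recompute on its own.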

I guess you could argue something along those lines, but fundamentally, what he does is not mesh. There’s no routing protocol software behind it, for example.

Nothing wrong with a fully connected network, especially here, as that’s what you want for this use case. Just don’t call it mesh.

‘Guy buys cables and hooks them up’. You’re late, hackaday! This article usually gets recycled every 3 or 4 years and it’s been 5!

Check the links in the article to confirm.

Now if one could find a NUC with ECC memory… (DDR5 ECC doesn’t count)

I am showing my ignorance, but why doesn’t DDR5 ECC count?

The ECC in DDR5 is on the chip level; it doesn’t cover any of the interconnects.

I agree with others, this is a horribly titled article. This is not a traditional mesh network at all.

There is a lot of confusion around USB4, and this document from usb.org cannot be wrong, and is very concise and clear:

https://www.usb.org/sites/default/files/D1T1-3%20-%20USB4%20System%20Overview.pdf

Here is some extra information about where that “thunderbolt-net” comes from:

https://www.kernel.org/doc/html/v4.19/admin-guide/thunderbolt.html#networking-over-thunderbolt-cable

When I needed some reasonably fast but short-range networking recently, I found that 54Gb InfiniBand cards, cables, and switches were really cheap, like $10/card, $10/cable, and $40 for an 18-port switch. For under $100, I had four machines on a 54Gb/s network. The cards and switch are old and burn way more power than I’d like to keep lit up every day, but for the short time I really needed to move a lot of data, it worked quite well. IB is also much easier to set up on Linux than I was led to believe. I had to install a few packages, and then a few easy-to-find commands later I had two machines up and running.

My rig for a few years now is:

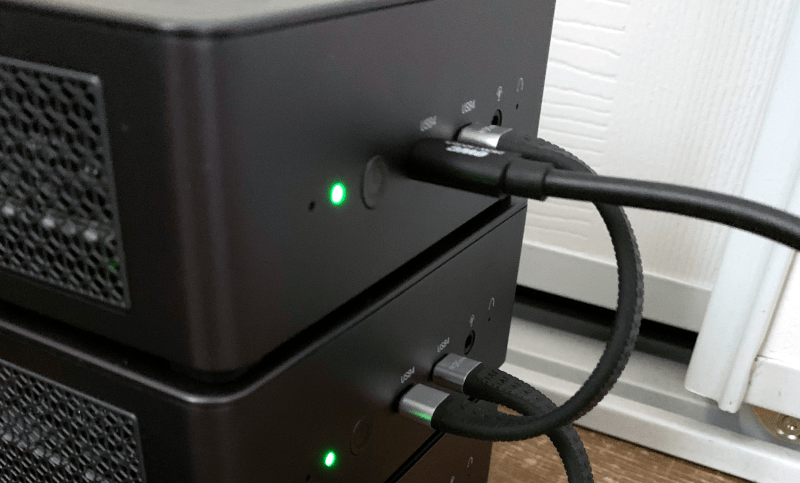

iMac -(Thunderbolt 2 -> Thunderbolt 3 Adapter)-> Thunderbay 8 -(Thunderbolt 3)-> NUC.

The iMac runs GlobalSAN iSCSI initiator and the NUC runs “mdadm” RAID 6 and “tgt” for iSCSI.

Roughly 24TB, 300Mbps read/write speed and it all backs up to Backblaze.
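For a sense of how a figure like “roughly 24TB” falls out of RAID 6: two drives’ worth of space goes to parity, so usable capacity is (n - 2) times the drive size. A minimal Python sketch; the drive count and size in the example are assumptions, since the comment doesn’t state them.

```python
def raid6_usable_tb(drives: int, drive_tb: float) -> float:
    """Usable RAID 6 capacity: two drives' worth of space goes to parity.

    Ignores filesystem overhead and the TB-vs-TiB difference.
    """
    if drives < 4:
        # mdadm requires at least four member devices for RAID 6.
        raise ValueError("RAID 6 needs at least 4 drives")
    return (drives - 2) * drive_tb


# Hypothetical example: all eight Thunderbay bays filled with 4 TB drives.
print(raid6_usable_tb(8, 4.0))  # 24.0
```

The trade-off is that the array survives any two drive failures at the cost of two drives’ capacity.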

Why not get second-hand Mellanox cards? For under 100 bucks you can get a sustained >30 Gb/s if you really need it.

Isn’t the cable length limit only for passive cables? If you go active (a bit more expensive, for sure), the range at max bandwidth goes up significantly. Or am I thinking of TB3?