While the 8080 started the personal computer revolution, the Z80 was quickly a winner because it was easier to use and had more capabilities. [Noel] found out though that the Z80 OUT instruction is a little odd and, in fact, some of the period documentation was incorrect.

Many CPUs used memory-mapped I/O, but the 8080 and Z80 had dedicated I/O addressing pins and instructions so you could fill up the memory map with actual memory and still have some I/O devices. A quick look in the famous Zaks book on Z80 programming indicates that an instruction like OUT (C),A would write the A register to the output device indicated by the BC register pair (even though the instruction only mentions C). However, [Noel] missed the note about the B register and saw in the Zilog documentation that the instruction did use B. Since he didn’t read the note in the Zaks book until later, he assumed it was a discrepancy. Therefore, he went to the silicon to get the correct answer.

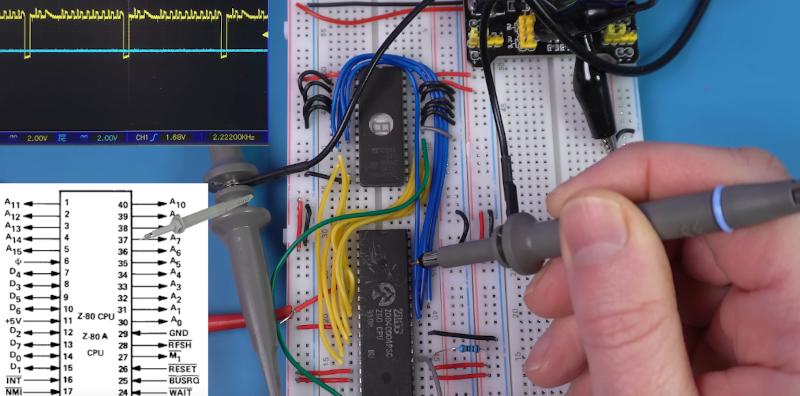

Breadboarding a little Z80 system allowed him to look at the actual behavior of the instruction. However, he also didn’t appreciate the syntax of the assembly language statements. We’ve done enough Z80 assembly that none of it struck us as particularly crazy, especially since odd instruction mnemonics were the norm in those days.

Still, it was interesting to see him work through all the instructions. He then looks at how Amstrad used or abused the instructions to do something even stranger.

If you want to breadboard a minimal Z80 system, consider this one. If you enjoy abuse of the Z80 I/O system, you don’t want to miss this Z80 hack for “protected mode.”

The Z80 was the winner?

???

The heck is that statement? The Z80 was designed to quite literally function as an 8080, or as an extension of it. It was so close that they had to double back and change stuff and it directly gave things like the 8088 and 8086 the advantage later on.

I ain’t mad, I’m just… Confused. I mean you could argue that they cut directly into what would have been profit for Intel but it’s more like Zilog did all the heavy lifting in setting up the ecosystem for Intel to flourish in for free. If anything Zilog got both scammed out of their hard work and played by Intel in exchange for a couple years of stardom.

In the battle between the Z80 and 8080, I would say yes, the Z80 is the winner. In the war, which is where you’re looking, well, yeah, no duh.

I think the question of “who won : the 8080 or Z80?” depends on what time frame you’re looking at. My memory was that in ’78, the Z80 was expensive and supplies were only then starting to stabilize (I know this because we were designing a widget and compared the two.) I REALLY loved the Z80’s single voltage power supply and integrated DRAM refresh logic. But our widget already had a power supply and we used SRAM for some reason.

A couple years later I looked at the 8085 and Z80 and noticed the Z80B price-point was pretty much where it needed to be. And Intel had started telling people they should use the 8086.

When we heard the rumor IBM was going to go with the 8088, the ’88 and ’86 became much more compelling as we felt there would never be supply problems for a chip IBM used.

I kept up with various Zilog follow-ons like the Z8, but from a business perspective it was hard to argue with the availability of Intel (and third party) parts. Come to think about it, TI second sourced the 8080 (like Rockwell second sourced the 6502) and I can’t remember if there was a Z80 second source at the time.

Anyway… The Z80 was clearly superior to the 8080 from a tech perspective. But Intel invaded various verticals w/ the 8051, 8042 and 8085 eroding Zilog’s technical advantage. (the Z80 may have had more features, but if the 8051 does everything you need, why pay extra for Z80?)

And by the time the IBM PC came out, Intel had outmaneuvered Zilog.

Still… The 8080 and the Z80 were both great chips. No need to pit them against each other.

+1

As far as I know, both i8080/i8085 and Z80 gave birth to various compatible chips with additional features.

Such as the NSC800 or the R800 used by MSX platform.

https://www.cpu-world.com/CPUs/NSC800/National%20Semiconductor-NSC800D%20-%20NSC800D-3.html

https://en.wikipedia.org/wiki/R800

The Gameboy processor, the DMG CPU, uses a modified Z80 ISA, too.

It was Z80 enough for people building custom cartridges using their existing Z80 skills (like a low-end oscilloscope or data logger).

The Z80 had numerous clones, also. Way more than the i8080.

In East Germany, the U880 was made, I remember. Similar chips were popular in the USSR, too. They were needed for these ZX Spectrum clones, I suppose.

https://www.cpushack.com/2021/01/26/the-story-of-the-soviet-z80-processor/

At least one 6502 clone existed, too.

Made in Bulgaria, for the Apple II clones.

Oh yeah. Completely forgot about MSX. That market alone justifies the Z80’s existence. I think most of us in the States weren’t exposed to MSX (unless you happened to have a Yamaha fetish.) There were some very, very nice MSX machines.

“When we heard the rumor IBM was going to go with the 8088, the ’88 and ’86 became much more compelling as we felt there would never be supply problems for a chip IBM used.”

That was a good thinking. 🙂👍

And quickly after, second-sourcing became a reality.

What’s also interesting: the “IBM of Japan”, NEC, built the PC-9801 (PC-98 series) on the x86 ISA, too.

NEC not only cloned the 8088/8086, but created the enhanced versions V20/V30 which were 80186 instruction compatible and featured an i8080 emulation mode.

This was often associated with CP/M compatibility, but NEC’s prior PCs were Z80/µPD780 based, too.

Such as the PC-6001, PC-8001 and PC-8801 (PC-88 series).

A very interesting, but rare Z80/8086 hybrid was the NEC μPD9002.

It had not only i8080 compatibility, but also Z80 compatibility.

Or more precisely, Z80A and V30 compatibility.

It was used in PC-88VA.

Makes me wonder why that chip wasn’t being used on a wider scale and why it wasn’t a big success.

A V30 compatible with a full Z80 core would have allowed running Turbo Pascal in CP/M emulators.

The usual “8080 emulation mode” wasn’t capable of this, so many users, like my father, switched to software emulation of Z80+CP/M (Z80MU on DOS was a popular emulator in the 80s).

Don’t forget the Philips 2650. It was a static CMOS CPU which meant you could vary the CPU clock, down to 1Hz if you wanted to and just step your software through for debugging. Genius.

Amstrad used the Z80 in their first home computer offering and did something odd with the shadow registers so they controlled hardware. That meant a sneaky bit of software could write to a shadow register with an opposite binary value and cause the CPU to burn out.

Mostek was a second source (at the very beginning the only source) from the get-go; Synertek, SGS and NEC soon followed. Sharp became a second source when they started building their MSX computers in 1978 two years after the Z80 was first launched.

Well, the Zaks is really not the greatest Z80 book for learning. It’s more of a reference. There are much more accessible books for learning Z80 available. I guess reading another book would have expedited his learning process quite a bit… interesting to see that this gets so much attention.

The Zaks doesn’t even give a straightforward explanation for conditional branching and which flags are set under which conditions…

I prefer this book, for example, any day over the Zaks:

The Z80 Microprocessor: Architecture, Interfacing, Programming, and Design

Gaonkar, Ramesh S.

That’s all fine in 2024 hindsight. But back in the day we didn’t have the internet to review titles, at most there might be a review in BYTE. At the bookshop or computer store you bought what was available. And Zaks was it, so I got Zaks in 1980 or thenabouts.

I just looked at my copy of Zaks’ book. Both which flags are set and what conditions set them are described, just not always in the same place.

“While the 8080 started the personal computer revolution, the Z80 was quickly a winner because it was easier to use and had more capabilities.”

The single operating voltage was a great thing, too.

The fact it was the TTL standard (5v) made it even better.

Z80 also had a DRAM refresher built-in, but the more professional engineers surely opted for proper SRAM anyway.

Anyway, I think it’s cool that the Z80 had same parents as the i8080 (those guys leaving Intel and founding Zilog).

That’s why I’m always so baffled by the 8080/8085 fans who like to criticize the Z80 or down-play its importance.

The Z80 was the most popular “8-Bit” CPU, followed by maybe that, um, 6502.

Are you aware of how expensive and small SRAM was back then? DRAM was the way to go with the 4116 DRAM having an amazing size of 16384×1 bits. Yup, just 8 chips for an amazing 16 kilobytes of memory. There’s a reason that the Z80 only updates the lower 7 bits of the R register. After all, that’s all that’s needed to support the largest available DRAM at the time.

Now, of course, we have high capacity SRAM available, but back then DRAM was what you used.
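
The refresh point above can be sketched in a few lines of Python. This is only an illustrative model, not anything from Zilog: the function names `next_r` and `rows_refreshed` are made up here, and it assumes the 4116 is organized as 128 rows by 128 columns, so a 7-bit row counter covers every row.

```python
# Illustrative model of the Z80 refresh counter (names are hypothetical).
# Only bits 0-6 of R advance on each opcode fetch; bit 7 keeps whatever
# value software last loaded into it.

ROWS_4116 = 128  # assumption: 16384 cells arranged as 128 rows x 128 columns


def next_r(r):
    """Return the R register value after one opcode fetch."""
    return (r & 0x80) | ((r + 1) & 0x7F)


def rows_refreshed(start_r, fetches):
    """Collect the row addresses put out over a number of opcode fetches."""
    rows = set()
    r = start_r & 0xFF
    for _ in range(fetches):
        rows.add(r & 0x7F)  # the low 7 bits select the DRAM row
        r = next_r(r)
    return rows


# Eight 16384x1 chips side by side give one byte per address.
TOTAL_BYTES = 8 * 16384 // 8

if __name__ == "__main__":
    print(hex(next_r(0x7F)))                 # low 7 bits wrap around
    print(len(rows_refreshed(0x35, 128)))    # every row visited once
    print(TOTAL_BYTES)
```

Whatever value R starts at, 128 consecutive fetches touch all 128 rows in this model, which is why seven bits were enough for that generation of DRAM.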

“Are you aware of how expensive and small SRAM was back then?”

Sure I am. And I know how “reliable” DRAM was, as well.

DRAM was good for the amateur, the hobbyist, of course. :)

The ZX81 used half-dead DRAM chips very well, I think.

In the late 70s and early 80s, DRAM had the (often well deserved) reputation for being flakier than SRAM. If you were building a CP/M machine, the user could easily reboot if it flaked out; that’s less of a problem than if you were building an embedded device at the end of a pipeline 100 miles from the nearest technician.

I think the sheer volume of PC DRAM being manufactured in the early 80s did wonders for memory reliability, but a generation of engineers had swallowed the SRAM kool-aid.

For PCs, DRAM was absolutely the right answer. But there were corners where SRAM met availability / reliability / utility requirements for some embedded systems (like ATMs, anti-lock brakes, industrial printers and process control.)

Thank you for pinning his ears back. Saved me the trouble…

Video never touches on the most important reason it’s OUT (C),A rather than OUT (BC),A: the OUT nn,A instruction (as well as its IN A,nn partner) only takes an 8-bit address.

More to the point,

OUT (n),A

populated address bits A8 through A15 with the contents of the A (accumulator) register. Other I/O opcodes did similar:

OUTI, OTIR, OUTD and OTDR put the contents of B (the loop counter) on A8 through A15, as did the INI, INIR, IND, and INDR instructions.

So, the high order bits of the address lines were not consistent; hardware could not assume that they represented the high-order byte of a sixteen-bit I/O address.
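
To make the inconsistency concrete, here is a minimal Python sketch of what ends up on the 16 address lines for the two addressing forms described above. The function names are invented for illustration, and the model only reflects the behavior as stated in this comment.

```python
# Illustrative model of the Z80 address bus during port writes.
# Function names are hypothetical; behavior follows the description above.


def out_n_a(n, a):
    """OUT (n),A: immediate port n on A0-A7, accumulator on A8-A15."""
    return ((a & 0xFF) << 8) | (n & 0xFF)


def out_c_r(b, c):
    """OUT (C),r: register C on A0-A7, register B on A8-A15."""
    return ((b & 0xFF) << 8) | (c & 0xFF)


if __name__ == "__main__":
    # Same port, different data: the high byte follows the data in A.
    print(hex(out_n_a(0x10, 0x55)))
    print(hex(out_n_a(0x10, 0xAA)))
    # Same port via C: the high byte follows whatever is in B.
    print(hex(out_c_r(0x7F, 0x10)))
```

With OUT (n),A the upper byte is just a copy of the data being written, so it cannot serve as a stable part of the address in this model.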

It doesn’t matter in the slightest what the OUT (n) instructions do, as we’re only talking about the OUT (C) ones, and those *all* put B on the high order address bits.

The real reason is that it’s a legacy of the 8080, which had only 8-bit ports, and the Z80 could still be used that way by ignoring the high byte of the address in hardware (like the CP/M machines and the MSXes), or the implementation could use all 16 bits (the Amstrad CPC).

Mnemonics are by convention anyway, the processor itself only thinks in opcodes, so it’s less about intentional design and more about quirks of history why things are like this.

The 8080 is where this behavior of the upper 8 bits was defined (by definition, B during C-indexed; A during immediate address). Zilog didn’t have the option of redefining it, because binary compatibility was part of the design specification. Intel “fixed” this behavior with the 8085, which defined A8-A15 as a copy of A0-A7 during I/O port access.

Entirely unrelatedly, I do think it’s unfortunate that the video only covers the use of this behavior in the Amstrad CPC, without mentioning a few of the other systems that do, like the ZX80 or Neo-Geo.

You might want to recheck your facts. The behavior you specified (B during C-indexed; A during immediate address) does not make sense as regards the 8080. Reason is that out (C),A is on the ED second page. That opcode does not exist on the 8080 and is a purely Z80 extension.

I disagree. From the external hardware perspective, an I/O device can’t distinguish between an access generated by an OUT (C),r instruction and an OTIR or OUT (n),A instruction. All it knows for certain is that A0-A7 contain the I/O port number, and D0-D7 contain the data.

What is uncertain is the value provided on A8-A15: sometimes it is the B register, sometimes it is the A register, and both of those registers can vary in value, even across single I/O instructions (i.e. OTIR).

If a hardware designer noticed that OUT (C),A (for instance) put the value of the B register on A8-A15 and the value of C (the I/O port) on A0-A7, without considering that the A8-A15 component may be unstable, they might assume that the CPU supported a 16-bit I/O space, addressed by the BC register pair. This assumption would be incorrect, and would fail for most of the other I/O instructions.

Yes, it is interesting that Zilog built the Z80 to put random data on the high 8 bits of the address lines during an I/O operation. However, unless that data is useful to something that reads the address lines, then the activity is just an “interesting fact”.
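
The OTIR case can be sketched like this in Python. It is a rough model under stated assumptions: the generator name `otir_addresses` is made up, and it assumes B is decremented before each port write (the commonly described ordering for the block output instructions), so treat the exact high-byte values as illustrative.

```python
# Illustrative model of the address bus across one OTIR (hypothetical name).
# Assumption: B is decremented before each port write.


def otir_addresses(b, c):
    """Yield the 16-bit address-bus value for each byte OTIR writes."""
    b &= 0xFF
    count = b if b != 0 else 256  # B = 0 means 256 iterations
    for _ in range(count):
        b = (b - 1) & 0xFF
        yield (b << 8) | (c & 0xFF)


if __name__ == "__main__":
    for address in otir_addresses(3, 0x10):
        # The low byte stays at the port number; the high byte counts down.
        print(hex(address))
```

A device that decoded all 16 lines would see a different "port" on every iteration, which is the instability described above.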

Whatever comes out on A8..A15 during I/O instructions is a side effect of not clearing an internal register, which saves one machine cycle and precious microcode ROM space. Since this behavior is consistent, it was documented with the 8085 and the Z80, probably because customers too often asked why the upper address byte isn’t zero.

“not clearing an internal register” doesn’t make sense to me. What register? Certainly not the B register, which has to be relied on to maintain its value. What goes onto A8-A15 is “don’t care”, so the designer might have taken whatever choice used the fewest gates. The control for LD (BC), A already exists, why use extra gates to zero A8-A15 for OUT (C), r ?

I’ve battled for years to understand why only C is in the mnemonic when both B and C are presented onto the address bus. The high byte isn’t supposed to represent part of the port even though it can be used and decoded that way.

Some things humans were not meant to know…

On the Z80, IN A,(n) puts n on A0-A7 and the contents of the accumulator register on A8-A15, so it’s effectively a 16 bit address. IN r,(C) puts the contents of the C register on A0-A7 and the contents of the B register on A8-A15. So again a 16-bit effective address. INI and INIR put the “byte count” in register B on A8-A15 and register C on A0-A7 (and then did funky things like decrementing B and writing the octet input from the peripheral to memory pointed to by HL.) But there was something that “looked like” a 16 bit address on the address bus. I think INI(R) was intended for devices that used the lower 8 bits for a “device id” and then the upper 8 bits for a “register id” in the device’s address space.

8080 I/O was mundane: IN / OUT x would input / output the single octet to port “x” using the lower 8 bits of the address bus. My memory is that it duplicated x onto the upper 8 bits of the address bus, and I googled it but wasn’t able to find an authoritative source in the time I spent on it.

From a hardware perspective, you would absolutely know ahead of time if you were using an 8080 or a Z80, so your hardware was set-up to use only an 8-bit I/O address space or a 16 bit I/O address space. If your Z80 hardware only looked at the lower 8-bits of the address bus to decode which port it was talking to, executing an IN or OUT instruction should behave the same (modulo electrical differences.)

Back in the old days embedded software was HIGHLY coupled with hardware. And CP/M devices segregate I/O routines into the BIOS, so if you had a Z80 card, its BIOS would know how to properly access peripherals with a 16 bit I/O address space (or it would punt and behave like 8080.)

So I think the answer is… yes, you can shoot yourself in the foot with I/O instructions if you’re not careful and don’t understand how the address bus is decoded to select a particular device.
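
As a rough illustration of the decoding point, here is a small Python sketch comparing a device that looks only at A0-A7 with one that decodes all 16 address lines. Both function names and the example port numbers are hypothetical.

```python
# Illustrative port decoders (hypothetical names and port numbers).


def selects_low8(address, port):
    """8080-style decode: only A0-A7 are compared against the port."""
    return (address & 0x00FF) == (port & 0xFF)


def selects_full16(address, port16):
    """Full decode: all of A0-A15 must match the 16-bit port."""
    return (address & 0xFFFF) == (port16 & 0xFFFF)


if __name__ == "__main__":
    # Two writes to port 0x10 whose high bytes differ (e.g. different A or B).
    for address in (0x5510, 0xAA10):
        print(hex(address),
              selects_low8(address, 0x10),
              selects_full16(address, 0x0010))
```

In this model the 8-bit decoder is selected by both accesses regardless of the high byte, while the 16-bit decoder is selected by neither unless software deliberately sets the upper byte.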

Can’t believe that 40 years on we’re still talking about the good old Z80!