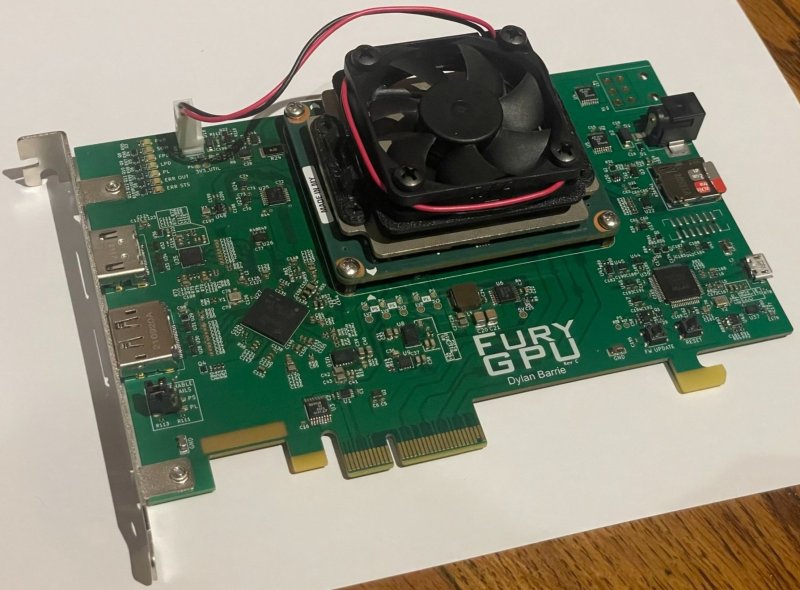

Have you ever wondered how a GPU works? Even better, have you ever wanted to make one? [Dylan] certainly did, and he went ahead and built FuryGPU: a fully custom graphics card capable of playing Quake at over 30 frames per second.

As you might have guessed, FuryGPU isn’t in the same league as modern graphics cards, which are built from thousands of math-specialized cores that run whatever shaders you program them with. FuryGPU is a more “traditional” GPU: it has dedicated hardware for every function the GPU needs to perform and doesn’t support “shader code” the way an AMD or NVIDIA GPU does. According to [Dylan], the hardest part of the whole thing was writing Windows drivers for it.
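The fixed-function vs. programmable distinction can be shown with a toy per-pixel sketch. It assumes a simple "texture color times light intensity" operation, and the function names are hypothetical. It is not how FuryGPU is actually implemented. In the fixed-function version the math is hardwired. In the programmable version the application supplies the math as a function, much like a pixel shader.

```python
from typing import Callable, Tuple

Color = Tuple[float, float, float]

def fixed_function_pixel(tex: Color, light: float) -> Color:
    # Hypothetical fixed-function stage: the only operation available is
    # "texture color * light intensity", clamped to 1.0 per channel.
    return tuple(min(1.0, c * light) for c in tex)

def programmable_pixel(shader: Callable[[Color, float], Color],
                       tex: Color, light: float) -> Color:
    # Hypothetical programmable stage: the per-pixel math is whatever
    # function (the "shader") the application hands in.
    return shader(tex, light)

def invert(tex: Color, light: float) -> Color:
    # Example "shader" that a fixed-function stage could not express.
    return (1.0 - tex[0], 1.0 - tex[1], 1.0 - tex[2])

print(fixed_function_pixel((0.5, 0.5, 0.5), 2.0))
print(programmable_pixel(invert, (0.25, 0.5, 0.75), 1.0))
```

In the sketch, changing what the fixed-function path does means changing the hardware (the function body). The programmable path only needs a new `shader` argument.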

On his blog, [Dylan] tells us all about how he went from the obligatory [Ben Eater] breadboard CPU to playing with FPGAs to even larger FPGAs to bear the weight of this mighty GPU. While this project isn’t exactly revolutionary in the GPU world, it certainly is impressive and we impatiently wait to see what comes next.

Next up try PowerVR.

Absolutely not. PowerVR is dead, dead, dead. It’s an awful architecture and nobody writing drivers wants to touch it.

https://libv.livejournal.com/26972.html

In other news, this driver was merged into the linux-6.8 kernel:

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/log/drivers/gpu/drm/imagination/Kconfig

“Don’t want to” isn’t the same as “haven’t”

No, they still haven’t. This is a completely new architecture that happens to reuse the PowerVR branding.

This is a brand-new driver for a completely new architecture. Twenty years of being singled out (and losing market share to ARM) for being shitty eventually brought Imagination to the table.

Nobody, and I mean NOBODY, wants to touch the old SGX or RGX crap, both because it’s a god-awful microcoded architecture and because people still don’t trust Imagination not to start flinging lawsuits.

Let the old PowerVR hardware die.

Actually PowerVR architecture still lives on in mobile chips including Apple silicon.

ACTUALLY the M1 is a SAMSUNG CPU. It must be, since it’s still using Samsung IP for the interrupt controller.

There are some quirks in how the GPU hardware works that live on because Apple engineers are used to working with Imagination IP. Large swaths of the GPU have been re-engineered, and there’s NONE of the reconfigurable microcode BS that made legacy PowerVR an utter pain.

Apple themselves have also been LEAGUES more inviting to people developing 3rd party drivers and OSs for their hardware, just by not constantly threatening lawsuits.

Don’t bother following the link. You just get the same YouTube video as above, and “Blog and details coming soon”. Nothing else.

The link that takes you to their site that has a blog and two blog posts with details about the thing?

That link?

Ha ha, yes, the link that says “Blog details coming soon” in big letters, which I noticed, and has a “Blog” link in tiny letters in the top right-hand corner, which I didn’t!

An interesting reminder that you can replicate/implement almost anything in an FPGA.

If you can get over the learning curve, that is. I’d rather play around with 7400-series chips, but that’s just me.

An FPGA can do anything… But slower, more expensive, and with more power.

I do love FPGAs and enjoy working with them. The flexibility is fantastic, but it takes an order of magnitude more transistors to do the same job as an ASIC. So you will never get “real” GPU performance out of one.

Still, the skills needed for this meager feat are amazing. Kudos. This person did solo what takes an entire team to do.

This is an incredible amount of work.

Creating a GPU from basic building blocks is one thing. Building something that runs at modern(ish) resolutions with a high frame rate, on a custom hardware device sitting on a PCIe endpoint, and then writing Windows drivers (Windows, of all things…) for video and audio is something else entirely.

I am going to be actively watching this site to see the documentation roll out for this.

A massive passion project that truly is incredible.

As someone who dreads wading into the abyss that is software development, I have an immeasurable amount of respect for the work that must have gone into this.

-Ben

IIRC regular Quake was software-rendered on the CPU. You needed GLQuake to use a Voodoo card.

Is this card’s 3D hardware even used?

It looks like he recompiled regular Quake as ‘FuryQuake’.

It’s been a long time, but the demo sure looks like the non-GL rendered version.

Quake sucked. It was like an evolution of Doom, but with too much going on. Doom II was peak PC gaming, with a tangent toward Duke 3D.

Redneck Rampage was better than Duke.

Someone should remake RR on a modern engine.

With elastic modeled alien boob guns. Tricky aim. Hard to keep on target.

More ‘Yeahaw!’

GLQuake used OpenGL and ran on Windows 95.

“That is not to say that this GPU is modern, or has modern capabilities. It is (at the moment) fully fixed-function, with no programmable shading to speak of. It runs off a custom Vulkan-like graphics API (“FuryGL”) and due to the lack of shaders and other modern features, is unable to support modern D3D or Vulkan.”

So this is why he’s got a custom Quake version.

Can we make a virtual PCIe device in KVM/QEMU, plus a fast 3D driver for Windows?