In case you haven’t heard, about a month ago MicroPython celebrated its 11th birthday. I was lucky to be able to start hacking with it soon after the pyboards shipped – the first tech talk I remember giving was about MicroPython, and that talk was how I got into the hackerspace I subsequently spent years in. Since then, MicroPython has been a staple in my projects, workshops, and hacking forays.

If you’re friends with Python or you’re willing to learn, you might just enjoy it a lot too. What’s more, MicroPython is an invaluable addition to a hacker’s toolkit, and I’d like to show you why.

Hacking At Keypress Speed

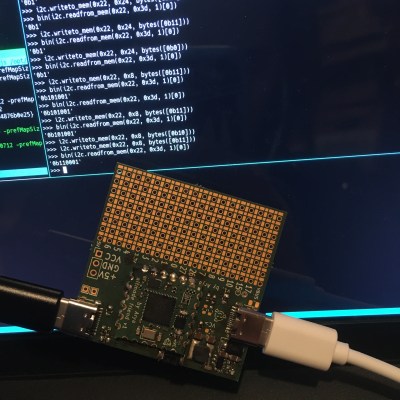

Got a MicroPython-capable chip? Chances are, MicroPython will serve you well in a number of ways that you wouldn’t expect. Here’s a shining example of what you can do. Flash MicroPython onto your board – I’ll use an RP2040 board like a Pi Pico. For a Pico, connect an I2C device to your board with SDA on pin 0 and SCL on pin 1, open a serial terminal of your choice and type this in:

    >>> from machine import I2C, Pin
    >>> i2c = I2C(0, sda=Pin(0), scl=Pin(1))
    >>> i2c.scan()

This interactivity is known as REPL – Read, Evaluate, Print, Loop. The REPL alone makes MicroPython amazing for board bringup, building devices quickly, reverse-engineering, debugging device library problems and code, prototyping code snippets, writing test code and a good few other things. You can explore your MCU and its peripherals at lightning speed, from inside the MCU.

When I get a new I2C device to play with, the first thing I tend to do is wire it up to a MicroPython-powered board and poke at its registers. It’s as simple as this:

    >>> for i in range(16):
    ...     # read out registers 0-15
    ...     # print "address value" for each
    ...     # readfrom_mem needs the number of bytes to read as its third argument
    ...     print(hex(i), i2c.readfrom_mem(0x22, i, 1))
    ...
    >>> # write something to the second (0x01) register
    >>> i2c.writeto_mem(0x22, 0x01, bytes([0x01]))
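If you find yourself typing that loop a lot, it can be wrapped into a small helper. This is only a sketch: `dump_registers` and `print_registers` are names I made up, 0x22 is just the example address from above, and it assumes your device has one-byte registers addressed by a one-byte register number.

```python
def dump_registers(i2c, addr, count=16):
    """Read `count` one-byte registers, starting at register 0.

    Returns a list of (register, value) pairs.
    """
    values = []
    for reg in range(count):
        data = i2c.readfrom_mem(addr, reg, 1)  # bytes object of length 1
        values.append((reg, data[0]))
    return values


def print_registers(i2c, addr, count=16):
    """Print "register value" in hex, one line per register."""
    for reg, value in dump_registers(i2c, addr, count):
        print(hex(reg), hex(value))
```

On the board, `print_registers(i2c, 0x22)` would then print the first sixteen registers of the device at address 0x22.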

That i2c.scan() line alone replaces an I2C scanner program you’d otherwise have to upload into your MCU of choice, and you can run it within three to five seconds. Got MicroPython running? Open a serial terminal and press Ctrl+C, which will drop you into a REPL; then just type i2c.scan() and press Enter. What’s more, you can inspect your code’s variables from the REPL, and if you structure your code well, even restart your code from where it left off! This is simply amazing for debugging code crashes, rare problems, and bugs like “it stops running after 20 days of uptime”. In many important ways, this removes the need for a debugger – you can now use your MCU to debug your code from the inside.
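What “structuring your code well” looks like will depend on your project, but here is one possible sketch. It assumes your script keeps its state in a module-level dict, so that the state is still around when Ctrl+C drops you into the REPL. All names here (`state`, `read_sensor`, `run`) are made up for illustration, and `read_sensor` is a stand-in for real hardware access.

```python
# State lives at module level, so it can be inspected from the REPL after Ctrl+C.
state = {"step": 0, "last_reading": None}


def read_sensor(step):
    # Placeholder for real hardware access; purely illustrative.
    return step * 10


def run(max_steps=None):
    """Continue from state["step"]; max_steps=None loops until interrupted."""
    while max_steps is None or max_steps > 0:
        state["last_reading"] = read_sensor(state["step"])
        state["step"] += 1
        if max_steps is not None:
            max_steps -= 1
```

After an interruption, you could look at `state` in the REPL, change a value if needed, and call `run()` again to carry on from the same step.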

Oh, again, that i2c.scan()? You can quickly modify it if you need to add features on the fly. Want addresses printed in hex? [hex(addr) for addr in i2c.scan()]. Want to scan your bus while you’re poking your cabling looking for a faulty wire? Put the scan into a while True: and Ctrl+C when you’re done. When using a typical compiled language, this sort of tinkering requires an edit-compile-flash-connect-repeat cycle, taking about a dozen seconds each time you make a tiny change. MicroPython lets you hack at the speed of your typing. Confused the pins? Press the up arrow key, edit the line and run the i2c = line anew.
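For the faulty-wire hunt, a slightly more polished version of that loop might look like the sketch below. It assumes you already have an `i2c` object as created earlier; `hex_scan` and `watch_bus` are hypothetical helper names, and the `rounds` parameter exists only so the loop can be stopped without Ctrl+C.

```python
import time


def hex_scan(i2c):
    """Return the addresses found on the bus as hex strings."""
    return [hex(addr) for addr in i2c.scan()]


def watch_bus(i2c, interval_s=0.5, rounds=None):
    """Print the scan result whenever it changes; rounds=None loops until Ctrl+C."""
    previous = None
    while rounds is None or rounds > 0:
        current = hex_scan(i2c)
        if current != previous:
            print(current)
            previous = current
        time.sleep(interval_s)
        if rounds is not None:
            rounds -= 1
```

Printing only on change keeps the terminal quiet until a device appears or drops off the bus, which is usually the moment you wiggled the bad wire.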

To be clear, all of this code is running on your microcontroller: you just type it into your chip’s RAM and it is executed by your MCU. Here’s how you check GPIOs on your Pi Pico, in case you’re worried that some of them have burnt out:

    >>> from machine import Pin
    >>> from time import sleep
    >>> pin_nums = range(30)  # 0 to 29
    >>> # all pins by default - remove the ones connected to something else if needed
    >>> pins = [Pin(num, Pin.OUT) for num in pin_nums]
    >>>
    >>> while True:
    ...     # turn all pins on
    ...     for pin in pins:
    ...         pin.value(True)
    ...     sleep(1)
    ...     # turn all pins off
    ...     for pin in pins:
    ...         pin.value(False)
    ...     sleep(1)
    ...     # probe each pin with your multimeter and check that each pin changes its state

There are many things that make MicroPython a killer interpreter for your MCU. The hardware abstraction layer (HAL) is one of them, because moving your code from board to board is generally as simple as changing pin definitions. But it’s all the other libraries that you get for free that make Python awesome on a microcontroller.
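To illustrate the “just change the pin definitions” point, here is a sketch of keeping board-specific pins in one table. The Pico entry uses the wiring from earlier in this article; the ESP32 pin numbers are placeholders I picked for illustration, so adjust them to your own board. `BOARD_PINS` and `make_i2c` are hypothetical names.

```python
# Hypothetical per-board pin assignments; adjust to your own wiring.
BOARD_PINS = {
    "pico": {"i2c_id": 0, "sda": 0, "scl": 1},
    "esp32": {"i2c_id": 0, "sda": 21, "scl": 22},
}


def make_i2c(board):
    """Create an I2C bus for the named board. Only works under MicroPython."""
    # Imported here so the table above can be loaded on a desktop too.
    from machine import I2C, Pin

    cfg = BOARD_PINS[board]
    return I2C(cfg["i2c_id"], sda=Pin(cfg["sda"]), scl=Pin(cfg["scl"]))
```

The rest of the code then only ever sees the `i2c` object returned by `make_i2c("pico")` and does not need to know which board it runs on.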

Batteries Included

It really is about the batteries – all the libraries that the stock interpreter brings you, and many more that you can download. Only an import away are time, socket, json, requests, select, re and many more, and overwhelmingly, they work the same as in CPython. You can do the same r = requests.get("https://retro.hackaday.com"); print(r.text[:1024]) as you would do on desktop Python, as long as you have a network connection going. There will be a few changes – for instance, time.time() is an integer, not a float, so if you need to keep track of time very granularly, there are different functions you can use, such as time.ticks_ms() and time.ticks_diff().
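For fine-grained timing, MicroPython’s `time` module provides `ticks_ms()` and `ticks_diff()`. The sketch below measures how long a function takes; the fallback branch is my own addition so the same snippet also runs on desktop Python, which lacks these functions, and `elapsed_ms` is a hypothetical helper name.

```python
import time

try:
    # MicroPython: millisecond counter that wraps around, hence ticks_diff()
    ticks_ms = time.ticks_ms
    ticks_diff = time.ticks_diff
except AttributeError:
    # Desktop CPython has no ticks_* functions; emulate them for testing.
    def ticks_ms():
        return int(time.monotonic() * 1000)

    def ticks_diff(end, start):
        return end - start


def elapsed_ms(func):
    """Run func() and return how many milliseconds it took."""
    start = ticks_ms()
    func()
    return ticks_diff(ticks_ms(), start)
```

Always subtract tick values with `ticks_diff()` rather than a plain minus on the board, since the counter wraps around.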

Say you want to parse JSON from a web endpoint. If you’re doing that in an Arduino environment, chances are, you will be limited in what you can do, and you will get triangle bracket errors if you misuse the JSON library constructs because somehow the library uses templates; runtime error messages are up to you to implement. If you parse JSON on MicroPython and you expect a dict but get a list at runtime, it prints a readable error message. If you run out of memory, you get a very readable MemoryError printed out; you can expect it and protect yourself from it, or even fix things from the REPL and re-run the code if needed.
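Here is a sketch of what “expect it and protect yourself from it” could look like. It assumes the payload is supposed to be a JSON object; `parse_settings` is a made-up name, and what you do in each error branch is up to your application.

```python
import json


def parse_settings(text):
    """Parse JSON text that should hold an object; return a dict, or None on failure."""
    try:
        data = json.loads(text)
    except ValueError as exc:
        # Malformed JSON raises ValueError (CPython's JSONDecodeError is a subclass)
        print("bad JSON:", exc)
        return None
    except MemoryError:
        # The payload did not fit into the heap
        print("payload too large for the heap")
        return None
    if not isinstance(data, dict):
        print("expected a dict, got", type(data))
        return None
    return data
```

For example, `parse_settings('{"led": true}')` returns `{"led": True}`, while a list or a broken payload prints a message and returns `None` instead of crashing your main loop.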

The user-supplied code is pretty good, too. If you want PIO or USB-HID on the RP2040, or ESP-CPU-specific functions on the ESP family, they are exposed in handy libraries. If you want a library to drive a display, it likely already has been implemented by someone and put on GitHub. And, if that doesn’t exist, you can port one from Arduino and publish it; chances are, it will be shorter and easier to read. Of course, MicroPython has problems. In fact, I’ve encountered a good few problems myself, and I would be remiss not to mention them.

Mind The Scope

In my experience, the single biggest problem with MicroPython is that writing out `MicroPython` requires more of my attention span than I can afford. I personally shorten it to uPy or just upy, informally. Another problem is that the new, modernized MicroPython logo has no sources or high-res images available, so I can’t print my own stickers of it, and MicroPython didn’t visit FOSDEM this year, so I couldn’t replenish my sticker stock.

On a more serious note, MicroPython as a language has a wide scope of where you can use it; sometimes, it won’t work for you. An ATMega328P can’t handle it – but an ESP8266 or ESP32 will easily, without a worry in the world, and you get WiFi for free. If you want to exactly control what your hardware does, counting clock cycles or hitting performance issues, MicroPython might not work for you – unless you write some Viper code.
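As a taste of the speed-up decorators, here is a sketch using `@micropython.native`, the gentler sibling of Viper: it compiles a function to machine code without requiring Viper’s type hints, so I use it here as a stand-in rather than Viper itself. Whether the native emitter is available depends on your port and build, which is why the sketch falls back to a do-nothing decorator; the fallback and the `checksum` function are my own illustration.

```python
try:
    import micropython

    native = micropython.native
except (ImportError, AttributeError):
    # Not running on MicroPython (or no native emitter): use a no-op decorator
    def native(func):
        return func


@native
def checksum(buf):
    """Sum all bytes in buf, truncated to 8 bits."""
    total = 0
    for b in buf:
        total += b
    return total & 0xFF
```

Tight loops over buffers like this one are the kind of code where these decorators tend to pay off; measure before and after on your own board.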

If you want to have an extremely-low-power MCU that runs off something like energy harvesting, MicroPython might not work – probably. If you need your code to run instantly once your MCU gets power, mind that the interpreter takes a small bit of time to initialize – about one second, in my experience. If you want to do HDMI output on an RP2040, perhaps stick to C – though you can still do PIO code, there are some nice libraries for it.

Some amount of clock cycles will be spent on niceties that Python brings. Need more performance? There are things you can do. For instance, if you have a color display connected over SPI and you want to reduce frame rendering time, you might want to drop down to C, but you don’t have to ditch MicroPython – just put more of your intensive code into C-written device drivers or modules you compile, and prototype it in MicroPython before you write the C version.

As Seen On Hackaday

If you’ve followed the USB-C PD talking series, you must’ve seen that the code was written in MicroPython, and I’ve added features like PD sniffing, DisplayPort handling and PSU mode as if effortlessly; it was just that easy to add them and more. I started with the REPL, a FUSB302 connected to an RP2040, poking at registers and reading the datasheet, and while I needed outside help, the REPL work was so, so much fun!

There’s something immensely satisfying about poking at a piece of technology interactively and trying to squeeze features out of it, much more if it ends up working, which it didn’t, but it did many other times! I’ve been hacking on that PD stack, and now I’m slowly reformatting it from a bundle of functions into object-based code – Python makes that a breeze.

Remember the Sony Vaio board? Its EC (embedded controller) is an RP2040, always powered on as long as batteries are inserted, and it’s going to be running MicroPython. The EC tasks include power management, being a HID over I2C peripheral, button and LED control, and possibly forwarding keyboard and trackpoint events to save a USB port from the second RP2040, which will run QMK and serve as a keyboard controller. MicroPython allows me to make the firmware quickly, adorn it with a dozen features while I do it, and keep the codebase expandable on a whim. The firmware implementation will be a fun journey, and I hope I can tell you about it at some point.

Have you used MicroPython in your projects? What did it bring to your party?

Great read! This motivates me to want to try MicroPython, just don’t know when I’ll get around to it.

Ditto!

Same here. There are benefits I did not expect. I always avoided it because a scripting language on a microcontroller just seemed like wasted cycles, but as a diagnostic tool, it sounds quite nice.

Indeed, a fine article that has fired me up to look at it as a tool on a couple of stalled projects. Thanks.

You sound like me. I would love to get back into programming, but I get stuck with other projects along the way. I first started programming on an Acorn Atom, which could be programmed with either “Acorn BASIC”, “assembler” or “machine code”. Due to the small amount of memory on the basic machine, 1k (half of which was used to run the machine), I paid extra for a second 1k, which was supplied in 8 chips that I plugged into 8 push-in chip holders, as I did not want to solder the chips directly onto the main board; I was thinking that in the future I might want to spend more money on larger chips (amount of memory). I did manage to write a Space Invaders program, the same as the arcade machines. I could get the invaders to walk side to side waving their arms up and down, then drop down one line, then walk the opposite way; the invaders would shoot down, the bases would break down as they were shot, and the game would end once you were shot 3 times or the invaders touched the bottom. The only thing I could not do was record the highest score, as there was not enough memory for that; all it needed was a few bytes more. But forget that, it was back in the beginning of 1980. Now, at almost 71 years old, my memory has started to break down, which I would change if it was as easy as the Acorn Atom was.

How does this compare to CircuitPython and why are you in favor of MicroPy vs CircuitPy? Adafruit is a heavy hitter in the Arduino/Microcontroller/hacker programming community. I know they first approached MicroPy to partner but ended up forking it and developing their own CircuitPython. I’m not sure why. With their knowledge, expertise, attention to detail and open source ethos I figured they would quickly take over with their development on CircuitPy and become the de facto standard. In the last 6 months or so I feel like more and more stories are out there regarding MicroPy and CircuitPy is never mentioned. Even Arduino recently talked of MicroPython support but again, no mention of CircuitPython.

Anyone have some insight as to why it seems to be ignored and not embraced? Is it a mistake to learn CircuitPython and not just regular MicroPython?

Both work just fine. When working with Adafruit sensor boards, I’ll use CircuitPython as they have their modules available. Otherwise, I’ll just use microPython. Python is … Python after all. I like and use both. One difference is how you get software on the target board. rshell for microPython and drag and drop in CircuitPython. Both interfaces work fine in Linux (don’t use Windoze).

I should add not only sensor boards with CircuitPython, but their SBCs (like the Metro Grand Central M4) as well.

“don’t use Windoze”

????? I started with Thonny (fine to start with) then stepped (way) up to Visual Code. Easy breezy.

I am old school I guess. I use the geany text editor to write python code if in a GUI, or nano if in a command line situation. rshell works great when working with microPython. I never saw the usefulness of an IDE for writing python. At work (they use Windows :rolleyes: ), I use notepad++ for any scripts I need to write.

If you can’t realize the usefulness of a tool like Visual Code over geany or nano, you should just stick with nano and such. You’ll never understand… nuf said…

I will. Thank you :) . The only IDE that made sense was/is Delphi. That was a game changer back when. Otherwise as a professional programmer since ’86, I’ve never had a need for VC or Visual Studio for coding. Just a good text editor, compiler(s), and make. That said at work I am forced to use VS as the vendor requires it for compiling their suite of software (Energy Management System) on Windows. They are starting to transition to cmake though. So it goes…

Python just doesn’t need a silly IDE as it is so simple to code with (which makes it great).

What visual code extension do you use? I tried micropython for ESP32 programming and ended up giving up as the extension was no longer being maintained.

There is a Github site that has files which allow you to run micropython code on circuitpython devices; I have used it previously with an ADAfruit CLUE device

My 2 cents worth…

I find Circuit Python frustrating although they have ready built libraries for many functions. The main problem is that they try to maintain a “whole of IOT” approach which means using system helper libraries, e.g. busio. That then leads to complex dependencies and makes understanding what is happening difficult.

I just give up and write my own simpler shorter programs in Micropython. The micropython system also has many equivalent libraries which are usually shorter simpler and to the point. Mostly I can write a standalone Micropython program in one fairly short file with a couple of simple libraries (mostly I2C drivers for devices). After all you are programming an MCU not an enterprise system.

Same, sometimes. My applications are usually simple enough that rolling your own solution is practical (and more fun). For more complex systems libraries can save you a ton of grief.

As far as I know (did not follow the project closely) the people who created micropython put in a year or more of development time. It was a kickstarter back then. When it was getting ready and they wanted to make some money with it by selling pre-made boards, someone else forked the project, did some minor fiddling (removed REPL I think) slapped on the name circuitpython and started selling their own boards.

I have not followed the details close enough to judge whether that was unethical or not, but it sure has the smell of it.

Nah, nothing so nefarious. Adafruit has put a lot of money and time into developing CircuitPython, all of which is open source too. But Adafruit’s leadership and MicroPython’s leadership have necessarily different priorities and goals, so the fork and rename was done deliberately ahead of time so both sides can do what they need to without interference or blame. It’s not theft, it’s open source and governance issues being resolved in a mature and respectful manner.

CircuitPython and MicroPython both have their place – and the teams communicate regularly and with respect from both sides. I do wish there were less differences, but it’s proven difficult to resolve now.

I think both projects are increasing in use rapidly, I think both projects have their niche – if you’re an Adafruit customer, a beginner or hobbyist, CircuitPython is a great choice. If you want more control then MicroPython is often a better fit. They use practically the same interpreters so the bulk of the language is largely identical; it’s in the peripheral control where there are differences.

MicroPython has remained relevant because the core team are *very* knowledgeable and produce an exceptionally good system – that CircuitPython benefit from.

There’s few mistakes to learning either platform. There are differences, but switching from one to the other isn’t particularly difficult.

For a coding library to be taken seriously, it has to prove its stability by existing for a while with continual updates and by garnering widespread adoption from developers. MicroPython meets both criteria.

I know circuitpy has some issues with multitasking, but then micropy doesn’t really follow standard python in a lot of ways.

Both kinda have their issues

Which issues are you referring to? MicroPython does differ from CPython in some ways; usually where conformance would mean using excessive memory. That said, the core team do what they can to make it as compatible as possible. The differences are documented here:

https://docs.micropython.org/en/latest/genrst/index.html

I’m pretty old school, but I never use a low level language unless it’s required for the task. I can get more done quickly using something like Micropython, so that’s what gets used when I can.

It’s also opened up a lot of low cost micros for my hobby interests as well. I personally applaud those involved with bringing it to fruition.

Yeah, ditto.

If I can get a project done quickly with uPy, that’s what I’m gonna use!

I don’t resort to low-level programming unless I have to.

The most complicated thing I’ve done with Micropython (or rather, the Adafruit fork known as CircuitPython) involved one of Adafruit’s “Scorpio” boards to drive a small number of WS2812 LEDs (“Neopixels” in Adafruit parlance) and a two digit LED display via one of their i2c backpacks to make several storage appliance bezels light up and do cute things without having a couple hundred pounds of storage appliance breaking the cubicle desk.

I have a picture of it (and some prototyping pics getting the LED displays working) at https://www.sub-ether.net/gallery/index.php?twg_album=jecook%2Fbebezeled&twg_show=x#2.

I have a couple ideas for my next project using that board- I’m using not even one percent of its capacity.

(Just… don’t ask about the code itself- it’s very much brute force and ignorance. :D )

Props for the Rory Gallagher reference

Thanks! (and sorry about stealing the username. :D )

I think it will get quite interesting when they get USB Host working, I can imagine many “mini” computers being built using it as both the OS and the programing language.

I’m not familiar with “USB Host”.

I’ll have to look into it, it certainly seems interesting in my head!

Another drawback of micropython is heap memory fragmentation. Although it doesn’t leak that much, it uses the heap a lot more heavily than a typical C/C++ program. This, in turn, leads to heap fragmentation: the allocator will fail to find a free space that’s large enough for your needs. You’ll need to reboot the microcontroller to solve this, since GC doesn’t help either; the other objects are still reachable but spread everywhere in the heap.

It also doesn’t actually compile the python code, but just interpret it. It’s slow, but for most part, it works.

I love the FTP part of micropython where you can upload your .py file and get that executed. Used for a lora sensor on a Pycom device and it was a very joyful experience.

If you need REPL and near native performance, Espressif has a webassembly WASM project here: https://github.com/espressif/esp-wasmachine

The advantages are multiple, you can develop on your own machine (WASM can be run anywhere), if you provide your own stub for low level components, and upload OTA on ESP device’s filesystem in live to re-run. Debugging is harder however, but who needs to debug with printf?

Are you saying that micropython does not support garbage collection, or it just doesn’t work properly?

It uses a GC which does not do defragmentation, since that would need double indirection (or some hardware mechanism which MCUs usually don’t have) so your references can be changed. AFAIR it was all deemed too much for humble MCUs years ago.

All those *Python on MCUs are just child’s toys. Excellent for learning, unsuitable for anything even one tenth serious…. since all you get are mitigations without any (even unproven but good faith) guarantees.

“It also doesn’t actually compile the python code, but just interpret it. It’s slow, but for most part, it works.”

https://docs.micropython.org/en/latest/develop/compiler.html#emitting-native-code

You can set a threshold in the GC to run it more frequently if you are loading and unloading in memory often. This prevents memory segmentation, but the real solution is to freeze your modules to prevent having to load them at all

My experience is that when you’re doing anything timing-critical, you want to be running the GC yourself. It takes around 2 ms, which can introduce significant weirdness into routines where the timing is tight.

If this isn’t frequent enough, you can then run into memory problems, as you’re all suggesting here.
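As a sketch of what “running the GC yourself” can look like: collect right before a timing-critical routine, so the collector is less likely to fire in the middle of it. `gc.collect()` exists on both MicroPython and desktop Python; `gc.mem_free()` is MicroPython-only, hence the guard. The helper names `timing_critical` and `report_free` are made up for illustration.

```python
import gc


def timing_critical(work):
    """Collect garbage up front, then run work() and return its result."""
    gc.collect()
    return work()


def report_free():
    """Return free heap bytes on MicroPython, or None where gc.mem_free() is missing."""
    mem_free = getattr(gc, "mem_free", None)
    return mem_free() if mem_free else None
```

Calling `report_free()` before and after a routine from the REPL gives a rough idea of how much heap it consumes between collections.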

Also, rebooting the uC shouldn’t be viewed as a negative. You can create a module to store data (like state) over a reset, and it’s virtually instant anyway. So you can sit waiting for a command, then when you get it, push to a new state, reset, do what you’re supposed to do, set new state, reset again, etc. It’s a little hack-y, but it works.
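One possible way to carry state over a reset is a small JSON file on the board’s filesystem. This is only a sketch under that assumption, not necessarily what the commenter uses: the filename, the `"phase"` key and both function names are hypothetical.

```python
import json

STATE_FILE = "state.json"  # hypothetical filename on the board's filesystem


def save_state(state, path=STATE_FILE):
    """Write the state dict to flash so it survives a reset."""
    with open(path, "w") as f:
        json.dump(state, f)


def load_state(path=STATE_FILE, default=None):
    """Read the saved state; fall back to a default on first boot or bad data."""
    try:
        with open(path) as f:
            return json.load(f)
    except (OSError, ValueError):
        # No file yet, or unreadable contents: start fresh
        return default if default is not None else {"phase": "idle"}
```

On the board you would call `save_state(...)` followed by `machine.reset()`, and have your startup code call `load_state()` to decide what to do next. Keep flash wear in mind if you do this very often.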

That’s one of the huge benefits of scripting: you can extend code/RAM space even on systems that don’t really support it.

Heap fragmentation should be managed carefully for anything that uses the heap. MicroPython actually has some tools/APIs that can help with this. For example, several APIs that deal with buffers (like I2C etc) support an “_into()” version – where you can specify the buffer to use – reducing allocations (and fragmentation). It is also wise to use memoryview to get an access to a buffer. And using bytearray when constructing data instead of string concatenation etc is also smart.
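A sketch of the “_into()” pattern described above: allocate one buffer up front and let every read reuse it. `readfrom_mem_into()` is part of MicroPython’s I2C API; `make_reader` is a hypothetical helper name, and the device address and register in the usage note are placeholders.

```python
def make_reader(i2c, addr, reg, nbytes):
    """Return a function that reads nbytes from reg into one reusable buffer."""
    buf = bytearray(nbytes)  # allocated once, reused for every read
    view = memoryview(buf)   # slicing a memoryview does not copy the data

    def read():
        i2c.readfrom_mem_into(addr, reg, buf)
        return view

    return read
```

For example, `read = make_reader(i2c, 0x22, 0x10, 6)` sets things up once, and each later `read()` fills the same six bytes instead of allocating a fresh bytes object.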

I use MicroPython’s Unix port more than its big brother. Having two nearly but not completely identical languages would just need too many special cases to get both variants covered by the same source.

In case you’re not aware, you can also run MicroPython in a container:

> docker run -ti --rm micropython/unix

It’s particularly useful for running unit tests.

Was anyone brave enough to use it in a product? Enough to think about dynamic RAM.

Yes, and it’s been a wonderfully productive environment in which to develop.

You do have to watch memory usage for long-term robustness in a product. I still think that’s easier than avoiding the footguns provided by C/C++.

Does it halt compiling if indent is done with tabs when the rest of the code is indented with spaces?

Tabs are taboo as far as I am concerned…. Unless in your editor you set ‘replace tabs with spaces’. I avoid tabs like the plague myself as I’ve had to maintain code (c/c++) that had tabs in it; very much disliked them. The only place tabs are used, because I have to, is in ‘make’ files.

I can understand why you don’t like tabs. I didn’t like them too.

The real problem with tabs is when different people use them in different ways.

A good use I practice is to use the tabs for indentation in new blocks and spaces everywhere else. This allows for different people to have their tabs equal to different amounts of spaces.

Nowadays there are tools that can easily deal with all kinds of styles and convert the code to the preferred one.

“The real problem with tabs is when different people use them in different ways.”

Yep, that is exactly what I am talking about. I never use tabs, so my code is clean using my 3 space indent rule (whether C, Python, Pascal, Perl, etc.) . But when perusing other peoples code … use of tabs ‘really’ bugs me :) . And of course, the next big irritation is when open brace is NOT lined up with closing brace in C/C++, Java, Perl, etc. Nice that Python enforces this block style sans braces.

In short: it gets Really Pedantic about spacing and tabs REAL quick. (At least, CircuitPython does.) This has led to at least one instance of me just walking away from the project I was building for a couple days due to the frustration and the lack of it going “HEY IDIOT, YOU DONE SCREWED UP HERE” but not _telling_ me it couldn’t parse the line because of an errant tab or space. SUPREMELY frustrating.

Well, this is just basic language syntax. You get the same weird errors when you forget some braces or semicolon in C. (Or in some cases the newline at the end of a header file…)

As with any languages, the best remedy to cure a lot of frustration is to write your code using a good IDE with syntax checker, linter and static validation. Also most [Micro-/Circuit-] Python libraries nowadays provide good type-hinting, which also help prevent a lot of errors at runtime (which could be a plague in the past).

Yes, it is the main reason I dislike python very much. It’s too dependent on whitespace. I once found a bug in MeldMerge, and tried to generate a patch for it.

The bug was missing whitespace at the last line of a for loop, so the last line was not part of the loop anymore. Such errors are too easy to slip into your code by mistake.

The other big problem is lack of the need for declaring variables. A simple mis spelled variable name, just invents a new variable, and you can spend hours debugging that.

Another one is the if __defined__ main(); thing, or whatever its syntax is. It’s a horrible hack for something that should be standard from the beginning.

The Python V2 vs V3 war is mostly over now. That was a real nuisance when I was interested in python. It took 10+ years to settle, and in the meantime I’ve mostly lost interest in python because of the earlier mentioned faults.

Some other small ones:

You can’t add to a list, unless you first declare an empty list. So you can’t use a single line to create a list variable and add the first item in the list. (Would be handy in for loops for example).

Also very irritating that python adds newlines to all print statements. It’s such a nuisance to print a bunch of things on a single line.

Python is a popular scripting language, and I might use it for that when forced to (KiCad, FreeCAD, etc) but it won’t ever be a language I like to use.

I did once flash micropython into a black pill (STM32F411 from WeAct). It worked, and I was sort of impressed by it. Also liked the idea of the REPL, but would like to see it extended a bit with (linux like) file operation commands (ls, cd, mv, rm and a few of those, nothing special, just to do the basic file manipulation). I guess such a thing can be added without losing much compatibility (except for the extra reserved keywords).

After verifying it worked it got put back in a drawer somewhere. For real programming I use either C or C++, and I prefer a real programmer (such as ST-Link V2) above finicky bootloaders.

Maybe I’m just old fashioned.

Basically the same rules as regular Python.

You can mix tabs and spaces – even though that’s a terrible idea!

“But it’s all the other libraries that you get for free that make Python awesome on a microcontroller.”

Libraries: Good, Bad, Ugly

Let’s face it, libraries are valuable (invaluable?); but, the black-boxes called libraries are not always the blessing they appear to be initially. Arduino’s “core” is essentially a library providing the syntax and low-level interfacing to the manufacturer’s microcontroller; without the “core” you might as well write the full program in C.

With “core” libraries, a high percentage of Arduino projects can be ported (easily?) between different uChips at the source-code level with just minor changes, if any at all.

Peripheral and Sensor libraries such as Adafruit’s are invaluable to ‘easy’ project implementation; example code from Adafruit soothes the pain of digging into the ‘black-box’ to understand proper usage. A very good thing.

— or is it ? —

As a teenage boy, I worked on my first car all week to ensure I could drive it successfully on Saturday night. I learned about every system in that Nash from the strangeness of the breaks to the flaky electrical wiring and other temperamental stuff. In a year’s time I knew that metal beast of a convertible from bumper to bumper. When I drove that automobile, I knew what was causing every creaky noise or whiny sound. That knowledge has been invaluable in my lifetime. Most teenage male drivers today are clueless to the science that makes their car function and are impotent if required to repair anything.

I learned to program in the very early 1970’s in machine language before formal programming at the university using Fortran 66. While Fortran had library calls, it was mostly file-based and no decent program ever was written without many, many sub-routines. There were math libraries and statistical packages but to use those tools, one really needed to understand the underlying library or be well-versed in the topics.

And, I thus believe that far too many black-box libraries exist in today’s programming world; the programmer is no longer expected to be able to write the library code independently; rather, libs are used to implement projects and the “coder” often is ignorant of the internals of the library. Said another way, libraries are (often) the easy-way to an end result.

Which begs the question, “Are we creating programmers or just coders that can color a picture-by-numbers”? Can a black-box user really attest to the accuracy and stability of their full project code? The switchboard operators of old with their jack-panels and corded plugs were not titled “telephone engineers” – would they be in our modern world?

breaks –> brakes

Another marvel of today’s world: spelling autocorrect

Do you still have the Nash?

How I wished … miss that ol’ thing, so much fun @ 16

Mine was B&W:

https://en.wikipedia.org/wiki/Nash_Metropolitan

Or more likely, ppl that don’t know (or care) about the difference between words.

Things I see that ppl have problems with:

Two, to, too;

Brakes, breaks;

There, their, they’re;

Pole, poll;

Peak, pique;

Much more.

And, I’ve even seen where ppl use an idiom that they’ve heard, but don’t understand the actual words (the example doesn’t come to mind right now, it’s my “nominal aphasia,” lol)

I do have trouble with the autocorrect on my phone… But you can’t blame autocorrect for everything!

Ppl just don’t care to be precise with their language (which is astounding with the current generation of coders!), and that attitude manifests in very many ways of “unintended consequences.”

Adding to that is the phenomenon that, somewhere along the way, it became commonplace for ppl to invent words as they go. (Unfortunately, I am guilty of that, too.)

Sorry for the inappropriate rant in a uPy article comments section! 🤪

But it’s true! As a writer, I see it too much. It actually jumps out at me.

Maybe if computer languages had homonym commands that performed something quite different (e.g. loupe, four, fthan, ells commands) programmers might become careful about their spelling.

B^)

This is a big problem with people learning using the likes of Arduino and MicroPython: they progress to the point of using libraries and then stop, and they never learn how any of it actually works. Hand them a sensor and a datasheet and tell them to use it with a microcontroller and they can’t. They can’t use a sensor if it doesn’t have a library already made for it and they can’t use an MCU unless it is supported in Arduino or MicroPython. It is a big issue even with university students now, even after graduating.

The problem is everyone thinks they are making it easier by developing the likes of MicroPython or writing libraries, but if you have experience writing your own libraries and reading datasheets it is often just as quick to write it yourself as to faff about with someone else’s library that may contain errors or not function totally as expected.

Writing it yourself has a lot of extra advantages. You can add only what you need and make it more efficient, and you can tailor it to your application instead of using a library which may have unnecessary checks or delays. One of the biggest issues I see is people putting delays in their library; that is just wasting time. Then if you need more performance and, for example, try removing or shortening the delays, it doesn’t work properly. If you just wrote it yourself from the start then you can often end up with a better result.

Most hobbyists won’t know how to debug hardware faults either, they don’t know what the protocols or communication methods are actually doing.

By making things too easy it is actually producing worse programmers. You can write a whole program without having to write any more than basic code yourself, just call the right libraries in the right order and hope it kind of works out okay. It is lowering the barrier to entry too low, people now don’t have what would have been classed as the basic or common knowledge required to start working with electronics before.

Lowering the barrier to entry too much is not a good thing, they are missing a lot of things they should know which leads to them making silly mistakes or doing things in an unsafe or bad way. Like running high current motor drivers on a breadboard, not having hardware or software protections and things like that.

Fully agree with your point on knowing less and less about what’s actually happening.

You could argue, it depends on what you’re after and how much time you want to invest going down another rabbit hole. If it’s just getting your sensor to work with Home Assistant for instance, you can forget about even the libraries and just focus on putting your hardware together and configure the rest.

If, on the other hand, you really want to get into this for hobby or work, at some point you have to look behind the curtain at what the libraries actually do. This will propel your understanding and eventually make you the senior that people go to with questions.

My view is, leave it with everyone to decide how much time they want to spend on learning to achieve their goals. At least, in a non-professional context.

I’ve written small amounts of code in machine language back in the 8 and 16 bit Atari and early x86 PC days when that was necessary. However, I do not want to waste my time creating a library for every complex MEMS sensor I attach to my microcontrollers, a sensor which many thousands of hobbyists have used before me. To compare that with modern vehicles, I’m sure you’re familiar with the sophisticated tablet-based scanners that display the vast amounts of data recorded from the 20 or more microcontrollers used in a typical car. Without that data and those scanners it would be virtually impossible to troubleshoot a modern car to find most problems without being the engineer(s) responsible for the faulty subsystem. We’ve moved on from programming for relatively simple individual parts to programming to use entire functional systems/blocks.

As someone who still makes a living writing assembly, it’s nice to see such high level languages as python eliminate all of the complexities. Sure it’s at the expense of hardware cost and performance, but we have that in excess. Can’t wait until that hardware excess justifies eliminating programmers/programming altogether with AI.

You know you can write inline assembly in MicroPython?

https://docs.micropython.org/en/latest/pyboard/tutorial/assembler.html
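For anyone curious what that looks like, here is a minimal sketch, assuming an ARM Thumb target such as the pyboard that the linked tutorial covers. The name `asm_add` is made up for illustration, and the `except` branch is not part of MicroPython at all; it is only there so the snippet can also be exercised on a desktop Python.

```python
try:
    import micropython  # only importable on MicroPython firmware

    @micropython.asm_thumb
    def asm_add(r0, r1):
        # Arguments arrive in r0 and r1; whatever is left in r0 is returned.
        add(r0, r0, r1)

except ImportError:
    # Desktop fallback (an addition for this sketch) with the same behaviour.
    def asm_add(a, b):
        return a + b
```

From the REPL, calling `asm_add(2, 3)` should then hand back the sum like any other Python function.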

I had a Dunning Kruger poster boy say the same thing to me the other day.

interactive comprehensive low-level access is one of my favorite things about forth, which can easily accomplish all this comfortably in less than 20kB. the fact that even a $4 ‘microcontroller’ board has enough memory for a pretty complete micropython environment these days is just wild.

I presume this is the new logo as an SVG file: https://upload.wikimedia.org/wikipedia/commons/a/a7/MicroPython_new_logo.svg

And this the old one: https://upload.wikimedia.org/wikipedia/commons/4/4e/Micropython-logo.svg

I haven’t followed µPy as such so I’m not really sure.

Micropython is great, but I really like “PicoMite”, a variant of BASIC. It’s quite powerful, very fast, and even has a very nice built in editor that runs on the serial terminal.

it can use i2c,spi,uart, has support for lcd’s, various temperature, humidity sensors, sdcards, wav file playback, just about everything.

mind you, this variant of BASIC supports subs, functions, and even the PIO programming language.

A lot of people tend to knock BASIC, but for rapid prototyping, especially VisualBASIC, nothing comes close.

I really don’t see any other projects, aside from maybe some very niche ones, that have the “edit, compile, run” loop this tight.

a built in editor, where all you really need is the serial terminal.

Thanks Bill for this useful language: “PicoMite”.

I would argue that MicroPython isn’t really any faster to write than someone who knows what they are doing with C. MicroPython may be good for beginners or as a very quick test of something but doing the same thing in C or C++ really doesn’t take very long either.

I would say that MicroPython is not suitable for writing a whole project in unless the project is very simple. The inefficiencies are just too high and you have the issue of it potentially being more unstable due to memory allocation.

The amount of storage needed is very inefficient too. First you need space for MicroPython itself but then all your code is stored as python files, which is much less efficient than compiled code.

MicroPython does work but it has given people an easy option rather than spending more time to learn how to do things in a better way, and writing C or C++ for microcontrollers is not that complicated, at least for the basics.

I think things like MicroPython have lowered the barrier to entry too much, people can now start working with hardware whilst knowing nothing about the hardware. To use a sensor people now need to know nothing about the sensor, they can start using motors with no idea what they should be doing, leading to silly mistakes and a total lack of ability to debug or troubleshoot their problems.

Being a bit head myself, I think you are looking at it wrong. The whole idea is for most users (who really don’t have the time, education, or even care what internal registers need to be accessed) to be removed from the hardware and just ‘do’ things without having a set of datasheets to figure out what’s what :) . Opens up a whole new world to some. Meanwhile those that ‘want’ to go low(er) level still can with C or assembly. A win win. I think there is a place for everyone.

I don’t know if you noticed, but writing a Pico project in C requires the SDK. I noticed even on my fast development machine a compile/link cycle is way longer than just dropping a Python script onto the device (say Pico). Python is MUCH faster turn around as the source code is the run-time code.

I don’t like the rp2040 in general and it in no way requires the SDK, that’s just the recommended way to do it.

Python may be fast in some areas but it is slow in others. Make a syntax mistake in a piece of code that isn’t called until quite far on in the process or is only called occasionally and you will only find out about it when it tries to run that code, unlike C where it will tell you when you compile it.

listen, you’re straight up just wrong on how Python works. A syntax problem will be caught immediately, and, even if it’s a typo, that’s a tradeoff of Python in the same way that C has memory management tradeoffs. If you’re not interpreting the C vs Python as “languages operating on different abstraction levels, each with their own associated and unique tradeoffs”, I have to wonder what your experience with Python is. Yes, Python is faster to write, for a number of reasons, in large part because it’s a high-level language and it allows you to tackle larger concepts in less code. Some of that is thanks to a healthy standard library, some of that is due to tons of third party libraries others can write and maintain quickly, and some of that is because the language is more accessible as a result of it being higher-level.

Is it an ideal tool in all circumstances? No; I wouldn’t want my browser to be written in Python. At the same time, I would heavily mistrust a browser extension API written in C, if only because of just how much ground you have to cover to ensure safe operation.

One can “freeze” Python modules into bytecode to save space. And one can generally mix and match MicroPython with as much C code as one would like :) Either by writing additional modules in C. Or one could even have a C program which embeds and calls into MicroPython for a particular function or subsystem. Useful if one wants to expose some flexible extension points in specific areas.
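As a rough idea of what freezing involves, a manifest file tells the firmware build which Python modules to compile to bytecode and bake in. This is a sketch under assumptions: `sensor_driver.py` is a hypothetical module name, and the included path is the default manifest location that several ports use, so check your port's layout before relying on it.

```python
# manifest.py: read by the MicroPython build system, not run on the device
include("$(PORT_DIR)/boards/manifest.py")  # keep the port's default frozen modules
module("sensor_driver.py")  # hypothetical module, frozen into the firmware as bytecode
```

The build is then pointed at this file through the `FROZEN_MANIFEST` variable when the firmware is compiled.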

“MicroPython have lowered the barrier to entry too much, people can now start working with hardware whilst knowing nothing about the hardware.”

For Adafruit, CircuitPython sells a lot of their sensor and host board products and thus there is an underlying economic agenda. I concede that Limor has and continues to be a major player in advancing the uC platforms, but Adafruit is a business and profit is the underpinning of staying relevant.

Referring to my previous analogy about drivers and mechanics, I cannot really criticize any Maker that simply wishes to utilize a uC in a project and not learn the low-level details; after all, the Arduino concept was the result of providing hardware and sufficient library routines to allow non-programmers to utilize advanced electronics and microcontrollers outside the traditional techie environments.

Wikipedia: “The Arduino project began in 2005 as a tool for students at the Interaction Design Institute Ivrea, Italy,[3] aiming to provide a low-cost and easy way for novices and professionals to create devices that interact with their environment using sensors and actuators.”

>I would argue that MicroPython isn’t really any faster to write than someone who knows what they are doing with C

You would lose that argument. :P Our estimates – based on a number of projects we’ve run – show that a typical non-trivial project will have reductions of 20-30% in terms of software effort (of course, it depends on the project but that’s a good rule of thumb). We expect that to *increase* as we see MicroPython, especially with regard to tooling and libraries, mature.

Further, I’ve used C (several decades at this point) for a lot longer than MicroPython and it’s a no-brainer. I can write most solutions in MicroPython *significantly* faster than in C.

>MicroPython does work but it has given people any easy option rather than spending more time to learn how to do things

This I agree with. Using MicroPython does not excuse you from learning the embedded domain. But it does make the on-ramp less steep.

yes, MP might be faster to write than C if everything you want to do has been done for you (most likely in c/c++) so that you can just call it…

But as soon as that is not the case, you’ve hit a brick wall..

The thing with doing c/c++ on embedded stuff is that you can do it on any processor, with any amount of ram etc. I’ve got some of my code that I now use across 4 different processors, which would be not doable with MP as three of them are too small to run it..

The whole thing with python, let alone micropython, is that it’s a scripting language to control efficient code that someone else has written in some other language..

There is a saying “Give a man a fish, and you’ll feed him for a day, teach a man to fish and you’ll feed him for a lifetime.”

In the embedded world, it could be stated as “Program in python, and you’ll get something done for a day, teach yourself c/c++ and you can do anything you want on an embedded processor for the rest of your life”

:-)

Yes, but pushing through that brick wall is barely any harder in MicroPython. Implement that small part of the solution in C if you need to and then expose it to MicroPython. You’ll only write a small portion of your system in C and get the benefits of rapid development by sticking with MicroPython for the majority.

But you’re right; if your processor is small (say, <32KB RAM and <128KB flash), and cents in the BOM are critical, then MicroPython probably isn't for you. But there are an awful lot of cheap microcontrollers with a plethora of resources these days!

there are a lot of cheap ones out there with a lot of resources, though many of them mean your power budget goes out the window…

My point was that JUST knowing micropython isn’t a good way for many of us to get things done on an embedded processor. If you also write C/C++ and want to call it from MP, then go for it – you should be able to do what you want.

However, once you have written the ‘low level’ bits in C, I don’t see a huge benefit in wrapping them up and calling them from python – if you were restricted to just C and python I would, but you aren’t – we have c++! So you encapsulate what you are doing as classes, then do the high level stuff with no more difficulty or time than doing it in python (indeed probably easier..) . Which is particularly useful when you go multi threaded multi core on a fat embedded processor (ie esp32)..

What is the point of using MicroPython then? If you organise your code well using C or C++ then python shouldn’t really be any better for rapid development if you already need to write bits of it in C or C++.

Your main file in C should be simple and mainly just consist of function calls to libraries you have written; that way you can easily substitute functions, reorder the function calls, etc, pretty much everything you would be doing in MicroPython if you are using C libraries.

With any decent compiler it also shouldn’t recompile everything every time either, it should just compile the files that have changed which if you have organised your code well and made it as modular as possible it means that most files shouldn’t be recompiled for simple changes meaning the development process is quick too.

Add in a debugger with the ability to add breakpoints in C and view variable and register values and you have then matched pretty much all of the advantages of using MicroPython.

What do you mean brick wall? If the things you need are not exposed in MicroPython, you write some additional C modules for that. You can mix and match as much as you want. Documentation here: https://docs.micropython.org/en/latest/develop/cmodules.html

And there are more code examples in the MicroPython git repository.

Knowing C is very very useful in embedded. This remains true with MicroPython also :)

No, knowing C is essential for embedded at any more than a basic level but knowing MicroPython whilst it could be useful in some situations is not essential in any.

What if someone doesn’t want to spend their life as a “fisherman?” The whole thing with civilization is specialization of labor.

You’re welcome to be a fisherman, if that’s what floats your boat, but me, I’d rather spend my time doing lots of different other things, maybe even specializing in one of them.

Then, when I’m in the mood to eat fish, I’ll buy some from you or another fisherman.

lol, lmao.

🤪

Your analogy is off. A consumer buying fish is not the same as a hobbyist wanting to do basic embedded programming. A consumer buying fish would be the same as a consumer buying a finished device, not someone trying to program their own.

You may not want to become a fisherman but you want to fish as a hobby, you will need to learn at least some of a fisherman’s skills if you want to succeed.

You may not want to become an embedded engineer but you want to do embedded engineering as a hobby, so you will need to learn at least some of an embedded engineer’s skills.

Conor, it really ain’t that intense and we don’t benefit from having it look this intense. If you try and set a higher bar for things people do as a hobby, you gotta back it up really well; going in and debating others’ experiences is a subpar start.

If you have been doing it for “several decades..” you should already have the code you wrote to cover just about anything you need to do. So how can writing it in MP be faster than a simple copy and paste of, say, your I2C routines into your new project?

Yes, because if you’ve been doing software development for a few decades then that’s it! You have no new code to write.

Who is this “we” and who has decided on this rule of thumb? Some commenter known only as “Matt”. If you are going to go on about projects you have run and estimates from those projects then it helps to know who you are.

How are you coming up with these estimates anyway? A standardised task you are giving to a large group of programmers of varying skill with half using each language and comparing the average time taken? Or just something like rewriting a code base which will always be less effort the second time around anyway? Or something even less accurate like comparing average project time among a few different projects?

It also seems our definitions of “non trivial” would differ, if you have common libraries and tools for it then it is trivial, non trivial to me would be implementing custom algorithms or just implementing them yourself or having to write everything yourself as it doesn’t exist in a way that is usable to you or for the platform you are using.

it’s not reasonable to expect people to run scientific level standard studies like this before they share their experiences; at the same time, it’s pretty reasonable to trust personal experiences when they’re laid out as well as this. Also, especially having the backend data, I’d ask you refrain from doing the “some commenter” part, you’re not the one looking baseless.

Pbpbpbpbpbttt!

* You can (and should) freeze the uPy code after it has been finalized & tested. (Even multiple times!)

* Libraries are useful for rapid prototyping.

* It’s up to each individual to decide how much “under the hood” they want to look at (&understand). You don’t have to hire the ones that don’t know anything about the hardware or what’s in the libs they use.

Ok, all arguments are settled.

It’s final.

😎 🤪

i’m the biggest fan of C, and a huge hater of python, but

of course for a large project you will inevitably have some compile-upload-execute delay, and C comes to an equal footing. but for a small project, you can prototype it interactively at your serial console. that interactivity is the point, in my opinion. especially if you’re cobbling the hardware together at the same time. you build your little circuit on the breadboard and directly trigger the PIOs by typing commands into python. so you don’t have to over-think how it works as a cohesive piece of software before you can start sending test signals into it. there’s definitely magic to a ‘REPL’ loop that it’s hard to replicate with C.

for myself, i’ll still use C, and i’ll just try to get the compile-upload-execute delay down to where i can comfortably prototype different ways of playing with the hardware. but imo there’s no denying the appeal of this, even though it isn’t all things to all people.

I’ve used C since ’85 or so. My background is real-time programming for SCADA master/rtu systems. So I kind of know when timing is important, interrupt driven code, and of course multi-tasking… And of course very familiar with the C language. Over the last few years, I’ve found I can do my ‘home’ projects faster with Python than with C for those SBCs and controllers that support it. And since the projects don’t revolve around having to be ‘real-time’, the results are more than satisfactory for tasks at hand. Nothing says I can’t drop to C or Assembly when needed. Win Win… I guess what I am trying to say is we now live in a golden age of ‘choices’ when it comes to electronics projects and software to support ’em. I am a fan of Python and a fan of C/Assembly and try to use where appropriate. There is no one fits all language (even though the buzz now is ‘Rust’ is the greatest language since punched cards were standard).

“To be clear, all of code is running on your microcontroller,”

Once your app is developed can you remove those parts of micropython not referenced by your app so as to boot directly into your app?

NSA 1980s requirement was that all code not directly addressed by their app on a hardware platform must be considered potential malware vulnerable and removed.

Impressive information this article imo.

Look forward to test micropython and gcc c compiler on several recently purchased sbc platforms.

While pursuing an anti-hacking ~32 [42] year project, of course.

https://prosefights2.org/irp2023/mvdnow2.htm

“Microchip Launches PIC64 Portfolio for Embedded and Space Apps”

No, the entire MicroPython interpreter is still there. You can of course configure the build quite a lot, including disabling most (all?) modules that you do not use. But it is typically on the module level – so unused functions in a used module will still be there.

But if one really has a requirement of zero unused codepaths, then MicroPython is not for that project.

The company I work for develops medical devices and MicroPython is one of the languages we employ for some of our products. It’s been an extremely positive experience.

Pity that Parallax Spin did not get much love. That was a high level microcontroller programming done right…

“Pity that Parallax Spin did not get much love. That was a high level microcontroller programming done right…”

I agree!

I think that project might be the inspiration for a few others.

FWIW the Propeller 2 folks are investing in porting MicroPython to their device…

I wonder how micropython compares to Espruino (embedded Javascript). It also has a REPL and quite some modules. It would be interesting to have a comparison between the two…

Yes, I like MicroPython, but it has its limits. In C, for example, I can get an 8-bit value from a UART. Directly. No buffer to contend with and no goofy data returned in an unusual format that requires extra code to get the 8-bit value. So, yes, MicroPython is great, when it works. I’m more of a bare-metal programmer, though. Sadly none of the MicroPython documentation clearly explains what a buffer is, how it works, or how to use it. The same thing goes for interrupts. There’s no easy way to disable them! It should take a couple of machine-language instructions to do that–and to re-enable them. Some parts of MicroPython are too complicated.

A buffer used for I/O such as USART is typically a bytearray. So if you want to get a single byte, pass a bytearray of length one, and access the first element using [0]. It is exactly analogous to C APIs which take uint8_t *buffer, size_t length (as found in Zephyr etc). In MicroPython, you disable interrupts using the function machine.disable_irq(), and re-enable them by passing the value it returned to machine.enable_irq().
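Roughly, the two pieces described above could look like the sketch below. The helper names `read_one_byte` and `run_with_irqs_disabled` are made up for illustration, and `uart` is assumed to be an already configured `machine.UART` (or anything else with a `readinto()` method). The import guard is an addition so the same file also loads on a desktop Python, where IRQ control is simply skipped.

```python
try:
    import machine  # present on MicroPython ports only
except ImportError:
    machine = None  # desktop Python: no interrupts to control


def read_one_byte(uart):
    """Return the next byte from `uart` as an int, or None if nothing arrived."""
    buf = bytearray(1)       # one-byte buffer, like uint8_t buffer[1] in C
    n = uart.readinto(buf)   # number of bytes read, or None/0 when nothing came in
    if not n:
        return None
    return buf[0]            # plain 0-255 integer


def run_with_irqs_disabled(fn):
    """Call fn() with interrupts off, restoring the previous IRQ state afterwards."""
    if machine is None:
        return fn()
    state = machine.disable_irq()
    try:
        return fn()
    finally:
        machine.enable_irq(state)
```

Keeping the critical section inside a `try`/`finally` means interrupts come back on even if `fn()` raises, which matters because the REPL itself depends on them.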

There are also ways to poke at memory-mapped registers directly, should you have a need for that.

What about using Lua? I would assume it is faster since it is compiled. The compiled bytecode is then run in the Lua VM.

I’ve been programming for 50 years but I didn’t do much with hardware until I retired a couple of years ago. Last winter I wanted to use a Pico W to take periodic temperature measurements and push the values to the cloud so I could monitor overnight temperatures. (In this part of the South we rarely get freezing temperatures, so when it happens you must make sure your pipes are properly insulated or they will freeze.) I used MicroPython for the project and found the programming to be very simple. I programmed the Pico to wake up every ten minutes, establish an MQTT connection through WiFi, read the temperature from two waterproof DS18b20 sensors (one for pipe temperature and one for ambient), post the numbers, and go to sleep again. There were easy-to-use libraries for networking, mqtt, and the DS18b20, so coding was very easy. This device is not taxing the RP2040 at all, so I didn’t need to worry about efficiency and MicroPython was a great choice. I spent as much time learning Python as I did learning how to control the hardware. The only thing I found wanting was the sleep function, which didn’t reduce power consumption as much as I hoped. I got 18 hours of battery life, though, which was enough for the overnight application. I’m going to use C for my next project, but honestly the learning curve is much, much steeper. I learned C from reading K&R in the 1980s, so the language isn’t the issue. It’s learning VS Code, CMake, and the C SDK. These are complex tools and take a little while to get your head around. But I’m looking forward to it because I believe the investment will be well worthwhile.
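A minimal sketch of the measure-and-publish step from a project like this might look as follows. It is not the commenter's code: the pin number, client ID, broker host, topic and payload format are all placeholder assumptions, the Wi-Fi connection and sleep logic are left out, and `umqtt.simple` may need installing depending on the firmware build.

```python
import time


def read_temperatures(ds, roms, conversion_ms=750):
    """Trigger one conversion, wait for it, then read every sensor in `roms`."""
    ds.convert_temp()
    time.sleep(conversion_ms / 1000)  # a DS18B20 needs up to 750 ms at 12-bit resolution
    return [ds.read_temp(rom) for rom in roms]


def format_payload(pipe_c, ambient_c):
    """Build a small text payload; the field names are made up for this sketch."""
    return "pipe={:.1f},ambient={:.1f}".format(pipe_c, ambient_c)


def run_once():
    """Device-only part: needs MicroPython's machine, onewire, ds18x20 and umqtt."""
    import machine, onewire, ds18x20
    from umqtt.simple import MQTTClient

    ds = ds18x20.DS18X20(onewire.OneWire(machine.Pin(22)))  # pin 22 is an assumption
    roms = ds.scan()
    # Assumes exactly two sensors; which one is "pipe" depends on scan order.
    pipe_c, ambient_c = read_temperatures(ds, roms)[:2]
    client = MQTTClient("pico-temps", "broker.example")  # hypothetical ID and host
    client.connect()
    client.publish(b"home/temps", format_payload(pipe_c, ambient_c).encode())
    client.disconnect()
```

Splitting the sensor read and the formatting out of `run_once()` keeps the parts that do not touch hardware easy to try on a desktop before flashing anything.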

I too used K&R during those days. But I see no reason to ‘learn’ VS Code on Linux. Any text editor will do. I have been learning CMake though as that isn’t just relevant to the Pico. At work, our Energy Management System vendor is switching over to cmake rather than rely on Visual Studio project files. I can see their reason as they have feet both in Red Hat Linux and Windows. Helps their cross platform effort.

Good old K&R. We were largely a Fortran shop when I picked up a copy of K&R to read on a business trip. I was blown away! I haven’t written any Fortran in decades, but I still write C.

You may be right about VS Code. I’ve used Notepad++ for other projects and like it. But I used Visual Studio for years before I retired and found it a fantastic IDE. Now in retirement I’m trying to branch out a bit, as I indicated above. Also, VS Code seemed to be the path of least resistance. It’s actually installed with the Pico SDK on Windows, and referenced in the examples and tutorials. It seems to be really popular these days, so I figure I should give it a chance.

Other than college, I never did write a ‘useful’ Fortran application. I did help maintain some Fortran apps for plasma scientists (space vehicle re-entry problem). No punched cards here :) . When I graduated from college, I was hired to help with real-time code for SCADA applications and cross compiled C (68xxx to PowerPc) was ‘the’ language. On the non-real time side, we used Borland products on DOS/Windows and stuck with them. From Turbo Pascal to Delphi and C/C++, Tasm, were the development tools of choice. Wasn’t till later when we wanted to see about running on Linux (to begin with on a SBC) as well as Windows that we started using gcc for both Windows and Linux platforms.

At home, all my machines SBCs, servers, laptops, desktops, etc. all run Linux. Left Windows behind. Micro Controllers like the Pico are Python based. Haven’t needed to drop down to ‘C’ yet, though I’ve tried a few simple apps for RP2040 boards with the SDK.

VS Code just doesn’t appeal to me. Since you worked with VS, you should feel right at home I suspect. Good luck with it.

Interesting background. I worked on chemical engineering software tools – first on process simulators and later on advanced process control.

One final note: VS Code is absolutely nothing like Visual Studio, other than the reductive observation that they’re both IDEs. And even that is a bit of a stretch. Out of the box VS Code is mainly just a text editor, but a very extensible one. Visual Studio has tons of powerful tools built in, with everything available through context menus or the “ribbon”, in addition to keyboard shortcuts. VS Code is primarily keyboard driven, with a command search capability if you don’t remember the keystrokes. I’m tempted to say that makes VS Code harder to learn than Visual Studio, but honestly I learned my way around Visual Studio so long ago it’s probably not a completely fair statement. Time will tell!

Cool. As close as I got to chemicals was a load shed project I was on using our equipment and software for a chemical company in Louisiana. They had on-site generation and also fed by Gulf States Utility. When the plant lost power feed from GSU, they had to shed load (by tripping breakers of processes in a priority order) down to what the site generators were producing (power in MUST equal power used). Shedding load had to be very quick, or the gas generators would also trip off-line (big safety issues if plant lost all power)… Several trips to the site, but got it done… Those were fun rewarding hectic days working on projects for Hydro Dams, substations, and communications monitoring and control. Now I just have to be content with home projects to turn lights on/off, sense people entering rooms, simple robotics, etc…. Ha! :rolleyes: :)