DEC’s LAN Bridge 100 was a major milestone in the history of Ethernet, one that made Ethernet a viable option for the ever-growing LANs of yesteryear and today. Its history is also the topic of a recent video by [The Serial Port], in which [Mark] covers the development of this device. We previously covered the LAN Bridge 100 Ethernet bridge and what it meant as Ethernet saw itself forced to scale from a shared medium (ether) to a star topology featuring network bridges and switches.

Also featured in the video is an interview with [John Reed], a field service network technician who worked at DEC from 1980 to 1998. He demonstrates what the world was like with early Ethernet, with thicknet coax (10BASE5) requiring a rather enjoyable way to crimp on connectors. Even with the relatively sluggish 10 Mbit/s of thicknet Ethernet, adding a store-and-forward Ethernet bridge between two of these networks required significant amounts of processing power due to the sheer number of packets, but the beefy Motorola 68k CPU was up to the task.

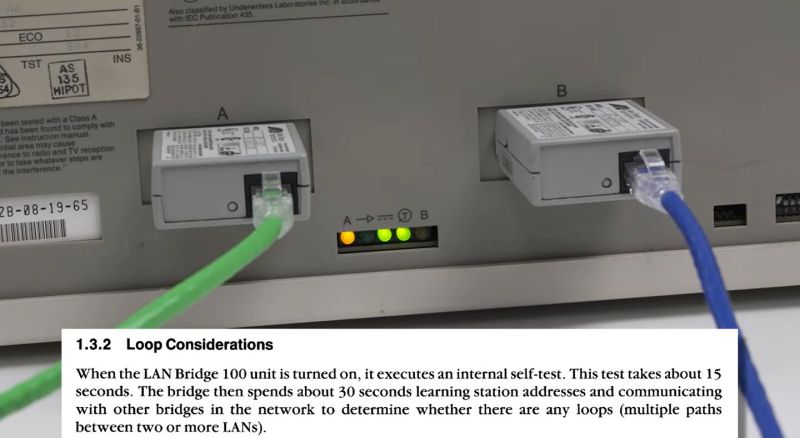

To prevent issues with loops in the network, the spanning tree algorithm was developed and implemented, forming the foundation of modern-day Ethernet LANs. This is demonstrated by the basic LAN Bridge 100 unit that [Mark] fires up, which works fine in a modern-day LAN after its start-up procedure. Even if today’s Ethernet bridges and switches have become smarter and more powerful, it all started with that first LAN Bridge.

This is an important part of modern networking.

Lead photo shows a pair of AUI to UTP adapters! I actually have one of these to connect my SGI Indigo to the network…got it off eBay. The box was free from work, but I must have spent over $200 on adapters to get it working :-)

I also have a few lengths of thick net coax…two of which are currently serving as feedlines to antennas in the backyard. I do not for a minute regret the demise of AUI thicknet. The AUI slide-latch connectors were a terrible design (thanks, AMP!) and the AUI cable itself was less than flexible. The less said about “vampire” taps, the better. Crimped N connectors were a huge improvement. And debugging shared media was…less than fun.

I never worked on Ethernet hubs, but I did work on switches and bridges. Thanks for the memories.

Don’t forget the DELNI, an 8-port hub for AUI connections. They were all over the computer room back in the day. Cheaper than 8 “vampire taps.”

It was a dark day when those things showed up; the whole architecture of extended networks got polluted by a fundamentally inferior approach.

Radia Perlman has a lot to answer for.

what? i think i disagree but i’m not sure but i don’t care who is right i just want to understand this unusual perspective

With a real network-layer protocol, like IP or any of the competitors IP had back when bridges were introduced, the destination address on a packet tells you something about where to find that destination in the network at large. So not every forwarding device has to know about every node in the entire network. Distant routers just have to know how to get to some larger part of the network that includes any given node.
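To make the point about structured addresses concrete, here is a minimal sketch of longest-prefix matching in Python. The prefixes and next-hop names are made up for illustration; the idea is only that a distant router can cover a huge number of nodes with a handful of aggregate entries.

```python
import ipaddress

# Hypothetical routing table for a distant router: a few aggregate
# prefixes, each mapped to an invented next-hop name.
ROUTES = {
    ipaddress.ip_network("10.0.0.0/8"): "link-to-site-A",
    ipaddress.ip_network("10.20.0.0/16"): "link-to-site-B",
    ipaddress.ip_network("0.0.0.0/0"): "default-uplink",
}


def next_hop(destination: str) -> str:
    """Return the next hop of the most specific prefix containing destination."""
    addr = ipaddress.ip_address(destination)
    # Every prefix that contains the address is a candidate route.
    matches = [net for net in ROUTES if addr in net]
    # The longest prefix (largest prefixlen) is the most specific match.
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]
```

Three entries are enough to forward to any IPv4 address here, and the router never needs to have heard from the destination itself.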

Bridges, on the other hand, have to learn where every node is, which they do by snooping on source addresses and building a giant table (which often involves expensive specialized hardware). If a bridge gets a packet destined for a node it hasn’t heard from, it has to flood replicas of that packet everywhere. And note that there’s no rule that says a node ever has to actually transmit a packet.
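The learn-and-flood behaviour described above can be sketched in a few lines of Python. This is a toy model, not how any particular bridge is implemented: the class name, port numbers and MAC strings are all invented, and real bridges also age out table entries.

```python
class LearningBridge:
    """Toy transparent bridge: learns source addresses, floods unknowns."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.table = {}  # source MAC -> port it was last seen on

    def receive(self, in_port, src, dst):
        """Return the list of ports the frame is forwarded out of."""
        # Learn: the sender is reachable through the port the frame came in on.
        self.table[src] = in_port
        out_port = self.table.get(dst)
        if out_port is None:
            # Unknown destination: flood to every port except the incoming one.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination lives on the segment the frame came from: drop it.
            return []
        return [out_port]
```

Note that a node which never transmits never appears in `table`, so every frame addressed to it keeps getting flooded.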

But it’s not just that the flooding itself wastes bandwidth; in fact, that’s the least of it. The worse waste is that the flooding will run out of control if there are any loops in the topology. So bridges set up in a complex/redundant network have to completely shut down enough interfaces to remove all loops. That’s what the spanning tree protocol is doing.
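As a rough illustration of what "shutting down enough interfaces to remove all loops" means, the sketch below picks a loop-free subset of links with a breadth-first walk from a chosen root bridge. This is a deliberate simplification: the real spanning tree protocol is distributed, elects the root by bridge ID and weighs path costs, none of which is modelled here. The function and bridge names are hypothetical.

```python
from collections import deque


def spanning_tree(links, root):
    """Split links into (forwarding, blocked) using a breadth-first tree from root."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)

    visited = {root}
    forwarding = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        # Sorted only to make the result deterministic.
        for peer in sorted(neighbors.get(node, [])):
            if peer not in visited:
                visited.add(peer)
                forwarding.add(frozenset((node, peer)))
                queue.append(peer)

    # Any link that is not part of the tree would close a loop, so it is blocked.
    blocked = {frozenset(link) for link in links} - forwarding
    return forwarding, blocked
```

For a triangle of bridges A, B and C rooted at A, the B–C link ends up blocked even though it is perfectly good cable.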

Because everything has to go over the spanning tree, you can get packets taking extremely inefficient paths, especially if the network operators don’t go out of their way to make the physical topology spanning-tree-friendly. The cheapest, simplest form of path redundancy is a ring. Set up a ring in a bridged network, and watch packets from two directly adjacent bridges pass through every other bridge in the network.
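The ring case is easy to put numbers on. The sketch below assumes a hypothetical ring of `n` bridges numbered 0 to n-1, with bridge i cabled to bridge (i + 1) % n, and one link blocked by the spanning tree; it counts how many links a frame must cross between two bridges.

```python
def ring_hops(n, blocked, src, dst):
    """Links crossed from src to dst on an n-bridge ring with one link blocked.

    blocked is the index i of the blocked link between bridge i and
    bridge (i + 1) % n. With that link removed, the ring is a straight line.
    """
    def position(bridge):
        # Position along the line that starts just after the blocked link.
        return (bridge - (blocked + 1)) % n

    return abs(position(src) - position(dst))
```

With six bridges and the link between 2 and 3 blocked, `ring_hops(6, 2, 2, 3)` gives 5: the two physically adjacent bridges talk via all four of the others, while `ring_hops(6, 2, 0, 1)` is still 1.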

If a link comes up or goes down, there can potentially be massive chaos as the whole tree is reorganized. Again you can engineer the network to reduce the probability of that, but you shouldn’t have to do that. And you still end up with something unavoidably worse than the convergence behavior of any sane routing protocol.

None of that is so bad at rack scale, but the thing is that bridges are seductive. You can split a network without having to do anything to the endpoints. So you do. Your bridged networks grow beyond what bridging was originally meant for or can sanely support. And, by the way, since we’re still in relatively early days here, your dependence on transparency to the endpoints delays and weakens the development of good dynamic endpoint configuration (and ancillary stuff like dynamic DNS updating)… to the point where it arguably never becomes universal.

You’re used to bridges, so you start thinking you should use them for campuses and even WANs, where their problems really start to show up. But at this point you’re addicted to one big flat network, so you keep pushing it as far as you possibly can, wasting resources and damaging reliability in the process.

…and you get yourself into a different mess. You’ve gone and invented VLANs for various reasons. And since, because you’re bridging, you’re not using the network layer’s structured addresses so much for routing any more, you start seeing subnets less as routing targets and more as security domains. Not only do you not want to manage the configurations of your endpoints, but you don’t want to secure them either. So you assign them to VLAN-based subnets, and install a bunch of layer-violating, separation-of-concerns-ignoring firewalls and allied middleboxes.

The firewalls don’t work particularly well, but they work well enough to retard the development of endpoint security and entrench bad habits both in software development and system administration. By the time the firewalls start to break down, those practices are much harder to fix than it would have been to do things right to begin with.

The firewalls also have the absolutely terrible architectural effect of introducing numerous, complicated, interlocking dependencies between software written by different people running on devices managed by different people. Nothing new can cross the network until the firewalls understand it… and it’s “not worth the effort” to change the firewalls to understand anything until it’s already widely used. Both stasis and horrendous kludgery ensue… with the kludgery leading to even more lock-ins and technical debt. Plus weird effects like deterring the adoption of good cryptography.

Bridges aren’t the worst thing to happen to networks. That would be NAT, which laid waste to a huge part of the potential of the Internet. But bridges are bad enough.

hahaha thank you! that was a great explanation. i agree that bridging can’t scale and that different groups have gone through different growing pains as they’ve learned this fact. for at least 25 years, i have known that bridging is just a kind of niche convenience feature.

but i do think it’s kind of hyperbolic to see “you start seeing subnets less as routing targets and more as security domains” being blamed on bridging. i agree that is a troubling way to think!

though i confess i’m guilty myself. i have a subnet for my local VPN and i try to keep wild traffic off of it just to minimize nuisance, but i also try not to rely on that as my only line of defense. my network is small so i can afford this kind of approach — security through reducing the number of portscans i experience. advanced persistent threat isn’t in my site’s vocabulary.

and of course not only is VPN similar to bridging, but my VPN wouldn’t make sense without NAT! but NAT works great :)

the fact that we keep reinventing this kind of system just shows how useful it is, which is why it’s great that bridging is a tool in the toolbox imo

I really enjoyed reading your explanation! 😃👍

I can barely imagine how much traffic rather chatty protocols like CIFS or AppleTalk must have caused here in the early days of LANs.

“Bridges aren’t the worst thing to happen to networks. That would be NAT, which laid waste to a huge part of the potential of the Internet. But bridges are bad enough.”

Hey, NAT was used for good reason! The flaw lies within the philosophy of internet protocol that each end point is visible and reachable.

It was made by hippies and they didn’t see that malware or hackers would eventually attack the end-points.

Weak devices like microcontrollers must be hidden, must be made not directly addressable. Because they can’t fend off attacks so easily.

That’s why NAT had been repurposed to cloak individual devices.

If it wasn’t for NAT, the internet would have collapsed long ago already. Just think about Windows 98SE!

It was less secure than a smart washing machine. It had no protection, no software firewall, no packet filters.

We can be glad that most DSL modems with routers had a simple “firewall” and NAT functionality built-in.

Because that was the first time PCs were left on unattended (in dial-up days the internet connection was cut after use).

Otherwise the damage would be much higher.

Not even Windows 2000 was safe. It wasn’t until Windows XP SP2 (2004) that Windows got basic security software.

And then there’s IPv6, which is a total mess. Again, there had been this whacky philosophy that each device should be directly addressable.

But not even that, the IPv6 designers even recommended using static addresses because IPv6 has such a huge address space.

And that in the era of malware, cyber crime and hacker attacks..

From a security point of view, that’s a nightmare. No wonder network administrators and security people hang on to NAT. Because they must handle the reality.

If that’s your concern, you can do stateful firewalling without NAT. They’re almost totally unrelated. In fact, stateful firewalling is slightly easier without NAT. NAT was adopted because people didn’t want to update their IP stacks, not for security.
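A minimal sketch of what stateful filtering without NAT looks like, in Python. Everything here is hypothetical (class name, addresses from the IPv6 documentation range), and real firewalls also track TCP state and expire idle flows. The point is that the state table is just the set of flows the inside started, with no address rewriting anywhere.

```python
class StatefulFilter:
    """Toy stateful filter: inbound traffic is allowed only as a reply."""

    def __init__(self):
        # Flows opened from the inside: (proto, src, sport, dst, dport).
        self.flows = set()

    def outbound(self, proto, src, sport, dst, dport):
        """Record an outgoing flow and let the packet through unchanged."""
        self.flows.add((proto, src, sport, dst, dport))
        return True

    def inbound(self, proto, src, sport, dst, dport):
        """Allow an incoming packet only if it mirrors a recorded flow."""
        return (proto, dst, dport, src, sport) in self.flows
```

Because addresses and ports are never translated, matching a reply is a plain tuple lookup; a NAT box has to do the same bookkeeping and additionally maintain the mapping between inside and outside addresses.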

But the everything-reachable philosophy isn’t a problem. It’s a good thing, and because of abandoning it we’ve ended up with a highly centralized, corporate-controlled network that’s both brittle and exposed to horrible (but perfectly legal) abuse from various business interests.

What is a problem is the idea that it’s OK to write and deploy bad software, and to not have any way to deal with problems on the endpoint. It’s not a law of nature that microcontrollers are vulnerable. It’s bad programming. Firewalls, EDR, antiviruses, and whatever else are ugly band-aids on bad systems. Including, of course, Windows 98. In fact, those band-aids often impede the deployment of actually secure systems.

Having met some of the people who built the Internet, I’d say it was a lot less that they were hippies and a lot more that they were hardasses who wouldn’t tolerate excuses for lazy, crappy code.

It’s really sad that the kids today have started identifying “security” with the nasty hacks that are used to paper over a lack of security.

Note to mods: accidentally hit “report” instead of reply on the parent comment. Sorry for the error.

Actually Win98 had configurable packet filtering and NAT, well hidden inside the “internet connection sharing” feature, which was neither enabled nor installed by default. Very rudimentary, but better than nothing. Most users simply didn’t know that it existed.

It’s the same philosophy as having every room in a house have an external-facing doorway, even the bathrooms and closets. There’s a difference in having a wall of fire to protect a door rather than not defining a door in the first place. Especially since you can be seen leaving your own door, but if the man at the front door runs your errands for you and brings back whatever the result is, then observers can only infer that you probably exist because of the patterns in what you have him do.

I worked with Telex during that time, then transitioned to DEC right before their dissolution by Compaq. The company was on the backend of transitioning mainframes, muxes, and thicknet to client server based Ethernet. Fun times!

This makes me remember my IT career.

First step: a Tandberg “PC” with 8″ floppies, a 2780 connection to IBM, and a tape drive to write compiled software for AXE phone exchanges. Also used for local editing of software.

2 – Apprentice as Unix system manager for a DEC VAX-750 running BSD.

Applications:

Email, with an in-house written gateway between the internal mainframe system and the worldwide UUCP mail system, before DNS. This ran on 2400 baud modems and later on Telebit Trailblazers at 19200 baud.

Also News system.

3 – Building the first thick Ethernet with vampire taps to connect the VAX to one of the first 3 early-assembled SUN 1 machines directly imported to Sweden. Later we added a few Apollos and a Perq before going all in with SUN.

4 – First 64 kb leased line to the worldwide university network; this was later upgraded and moved to the Internet.

If I remember correctly, when I retired we had a 5 Gbit connection in Sweden, and one web proxy in each country with a large office.

5 – Part of SNUS (Swedish Network User Society), which was created as a result of the need to move the commercial companies away from the university network.

As chairman of SNUS I signed the agreement that initiated the first commercial internet provider to start up in Sweden, named Swipnet.