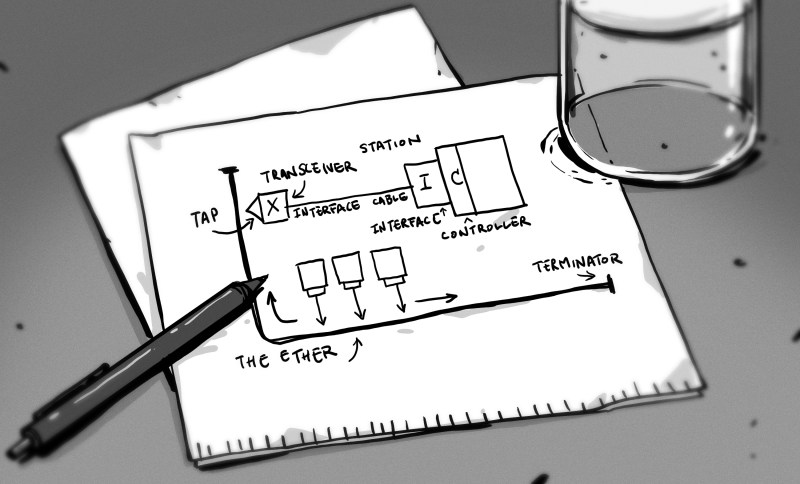

As originally envisioned, Ethernet would use a common, shared medium (the ‘Ether’ part), with transmission and collision resolution handled by the carrier sense multiple access with collision detection (CSMA/CD) method. While effective and cheap, this limited Ethernet to a 1.5 km cable run and a 10 Mb/s transfer rate. While [Alan Kirby] worked at Digital Equipment Corp. (DEC) in the 1980s and 1990s, he saw how competing network technologies, including Fiber Distributed Data Interface (FDDI), which DEC also worked on, threatened to extinguish Ethernet despite being more expensive. The solution, [Alan] figured, would be store-and-forward switching.

After teaming up with Mark Kempf, the two engineers managed to convince DEC management to give them a chance to develop such a switch for Ethernet, which became the LANBridge 100. As a so-called ‘learning bridge’, it operated on Layer 2 of the network stack, learning the MAC addresses of the connected systems and forwarding only those packets that were relevant for the other network. This instantly prevented collisions between the networks connected this way, allowed for long (fiber) runs between bridges, and would be the beginning of Ethernet’s transformation from a shared medium (like WiFi today) into a star topology network, with each connected system getting its very own Ethernet cable to a dedicated switch port.
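To make the ‘learning bridge’ idea concrete, here is a minimal Python sketch of the general technique: note which port each source MAC address was seen on, then forward a frame only where it needs to go. This is an illustration under simplifying assumptions, not a description of the LANBridge 100’s actual implementation. The `LearningBridge` class, its `handle_frame` method, and the port numbering are hypothetical names chosen for this example, and it leaves out things a real bridge needs, such as aging out stale table entries and loop prevention.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningBridge:
    """Toy model of a Layer 2 learning bridge (hypothetical, for illustration)."""

    def __init__(self, num_ports=2):
        self.num_ports = num_ports
        self.table = {}  # source MAC address -> port it was last seen on

    def handle_frame(self, in_port, src, dst):
        """Return the list of ports the frame should be sent out of."""
        # Learn: the sender is reachable through the port the frame arrived on.
        self.table[src] = in_port

        out_port = self.table.get(dst)
        if dst == BROADCAST or out_port is None:
            # Broadcast or unknown destination: flood to every other port.
            return [p for p in range(self.num_ports) if p != in_port]
        if out_port == in_port:
            # Destination lives on the segment the frame came from: filter it,
            # so local traffic never reaches the other network.
            return []
        # Known destination on another segment: forward to that port only.
        return [out_port]
```

With two ports, a first frame from `aa` to an unknown `bb` arriving on port 0 is flooded to port 1. Once the bridge has seen `bb` reply on port 0 as well, later traffic between the two stays on that segment, which is the filtering behaviour the article describes.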

Now having read it, it’s rather obvious, but it hadn’t occurred to me that the “Ether” part in Ethernet refers to the hypothetical ether. Learned something new again.

Same, I’m big into etymology, but somehow never looked into ethernet’s name origin.

Wireshark’s original name was Ethereal. We could never work out whether to pronounce it as ethereal or as Ether – real.

In Yorkshire, it’s pronounced “Ee there, Al”

“Sniffing the glue that holds the Internet together.”

Honestly, the loss of that catchphrase was one of the biggest losses of OSS history.

That is why my W-LAN has “Aether” in its SSID ;-)

Spent some time developing an FDDI stack. It seemed the future, as did all token ring type networks. Ethernet was used, but did not scale. If you had told me then that Ethernet would be used for both backbone and local network connections I would not have believed you.

Having said that, one advantage FDDI had was redundancy, in that it had two rings. At the time we were working on a submarine control system and we needed that redundancy. This is something Ethernet has never really had, and it required customised solutions. PRP/HSR provides it, but at a cost, and outside the grid you don’t see many implementations.

The LANBridge might have saved Ethernet from infant mortality, but it was PC networking with Novell NetWare that created the volume network market.

I recall using those in the early 1990s. They cost £8000 each – maybe triple that for inflation. A multiport 1Gb ethernet switch nowadays costs a few pounds.

I recall the same thing. We had this guy on top who wanted everything to have access to everything in our department, which broke down into about 4 big labs and a few smaller ones. Back in the days of the collision light, ours were pretty much on like pilot lights. We put the first bridge in a logical place, between the floors, and things got markedly better. By the end we had one in each of the labs, and that worked well, as 99% of the chatter in any one of them was within that room.

I don’t miss the days of troubleshooting vampire taps and concentrators, but I remember thinking how great it was to run DECnet with the microvax and terminals.

DEC was a great company and VMS was a good operating system.

DECUS forever!

Ethernet of today has little conceptually in common with its original design, with layer 2 and 3 switching being the norm.

A wire protocol that falls over at around 30% capacity is far from ideal, far from even tolerable. And yet we somehow not only made it work but now it’s by far the dominant paradigm.

History is weird.

Strangely, a lot of history’s outcomes seem to have more to do with chance, foul-ups, failures, and a lot of other goofiness than with design and intent.

My introduction to Ethernet was in the 1980s at NCR. The first was 10Base5, where a small hole had to be cored and the MAU (Medium Attachment Unit) was clamped over it using a vampire tap. The 10Base5 cable was a bit thicker than a garden hose and not as flexible.

I have 50m of yellow ‘thick net’ cable at home. Red bands every 3 yards where the ‘vampire’ taps were screwed in.

I loved the ‘collision detection’. Each transmitter added -1.4 volts to the coax. If the voltage was over -2 volts, everyone stopped talking, picked a random number, and started counting down before talking again (after checking the bias voltage).

Is this a Hub vs Switch thing?

I remember those devices. We used a mix of thickwire and thinwire. Someone once made a network loop by connecting two thinwire lines that were on different sides of the switch. I think they fixed the firmware after that.

Thickwire was another problem. We (DEC) had a lab where network performance went down the tubes even though the traffic was not that high, but collision rate was 30-50%. Turns out the terminator on the thickwire had a cracked resistor and if it made contact, it worked; if not, the reflections caused a lot of collisions.

What fun debugging that. Note in the lab we had to get to the cable trays on the ceiling, but they wouldn’t give us ladders because we weren’t supposed to climb up, not that they’d get anyone in to fix it. So we stood on chairs. Fortunately no one got hurt.