Programming languages are generally defined as a more human-friendly way to program computers than using raw machine code. Within the realm of these languages there is a wide range of how close the programmer is allowed to get to the bare metal, which ultimately can affect the performance and efficiency of the application. One metric that has become more important over the years is that of energy efficiency, as datacenters keep growing along with their power demand. If picking one programming language over another saves even 1% of a datacenter’s electricity consumption, this could prove to be highly beneficial, assuming it weighs up against all other factors one would consider.

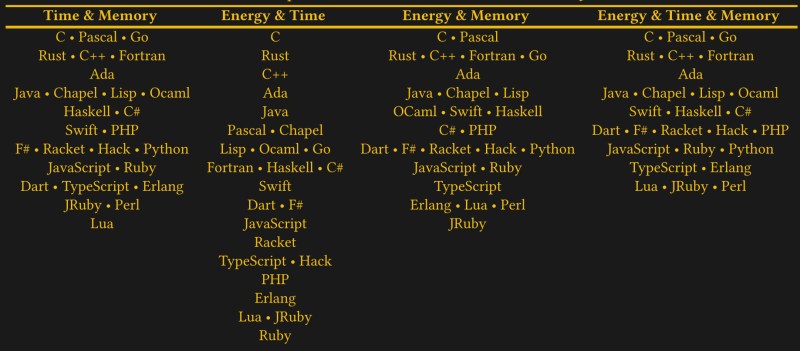

There have been some attempts over the years to put a number on the energy efficiency of specific programming languages, with a paper by Rui Pereira et al. from 2021 (preprint PDF) as published in Science of Computer Programming covering the running a couple of small benchmarks, measuring system power consumption and drawing conclusions based on this. When Hackaday covered the 2017 paper at the time, it was with the expected claim that C is the most efficient programming language, while of course scripting languages like JavaScript, Python and Lua trailed far behind.

With C being effectively high-level assembly code this is probably no surprise, but languages such as C++ and Ada should see no severe performance penalty over C due to their design, which is the part where this particular study begins to fall apart. So what is the truth and can we even capture ‘efficiency’ in a simple ranking?

Defining Energy Efficiency

At its core, ‘energy efficiency’ is pretty simple to define: it’s the total amount of energy required to accomplish a specific task. In the case of a software application, this means the whole-system power usage, including memory, disk and processor(s). Measuring the whole-system power usage is also highly relevant, as not every programming and scripting language taxes these subsystems in the same way. In the case of Java, for example, its CPU usage isn’t that dissimilar from the same code written in C, but it will use significantly more memory in the process of doing so.

Two major confounding factors when it comes to individual languages are:

- Idiomatic styles versus a focus on raw efficiency.

- Native language features versus standard library features.

The idiomatic style factor is effectively some kind of agreed-upon language usage, which potentially eschews more efficient ways of accomplishing the exact same thing. Consider here for example C++, and the use of smart pointers versus raw pointers, with the former being part of the Standard Template Library (STL) instead of a native language feature. Some would argue that using an STL ‘smart pointer’ like a unique_ptr or auto_ptr is the idiomatic way to use C++, rather than the native language support for raw pointers, despite the overhead that these add.

A similar example is also due to C++ being literally just an extension to C, namely that of printf() and similar functions found in the <cstdio> standard library header. The idiomatic way to use C++ is to use the stream-based functions found in the <iostream> header, so that instead of employing low-level functions like putc() and straightforward formatted output functions like printf() to write this:

printf("Printing %d numbers and this string: %s.\n", number, string);

The idiomatic stream equivalent is:

std::cout << "Printing " << number << " numbers and this string: " << string << "." << std::endl;

Not only is the idiomatic version longer, harder to read, more convoluted and easier to get formatting wrong with, it is internally also significantly more complicated than a simple parse-and-replace and thus causes more overhead. This is why C++20 decided to double-down on stream formatting and fudging in printf-like support with std::format and other functions in the new <format> header. Because things can always get worse.

Know What You’re Measuring

At this point we have defined what energy efficiency with programming languages is, and touched upon a few confounding factors. All of this leads to the golden rule in science: know what you’re measuring. Or in less fanciful phrasing: ‘garbage in, garbage out’, as conclusions drawn from data using flawed assumptions truly are a complete waste of anyone’s time. Whether it was deliberate, due to wishful thinking or a flawed experimental setup, the end result is the same: a meticulously crafted document that can go straight into the shredder.

In this particular comparative analysis, the pertinent question is whether the used code is truly equivalent, as looking across the papers by Rui et al. (2017, 2021), Gordillo et al. (2024) or even a 2000 paper by Lutz Prechelt all reveal stark differences between the results, with seemingly the only constant being that ‘C is pretty good’, while a language such as C++ ends up being either very close to C (Gordillo et al., Prechelt) or wildly varying in tests (Rui et al.), all pointing towards an issue with the code being used, as power usage measurement and time measurement is significantly more straightforward to verify.

In the case of the 2021 paper by Rui et al., the code examples used come from Rosetta Code, with the code-as-used also provided on GitHub. Taking as example the Hailstone Sequence, we can see a number of fascinating differences between the C, C++ and Ada versions, particularly as it pertains to the use of console output and standard library versus native language features.

The C version of the Hailstone Sequence has two printf() statements, while the C++ version has no fewer than five instances of std::cout. The Ada version comes in at two put(), two new_line (which should be merged with put_line) and one put_line. This difference in console output is already a red flag, even considering that when benchmarking you should never have console output enabled as this draws in significant parts of the operating system, with resulting high levels of variability due to task scheduling, etc.

The next red flag is that while the Ada and C versions uses the native array type, the C++ version uses std::vector, which is absolutely not equivalent to an array and should not be used if efficiency is at all a concern due to the internal copying and house-keeping performed by the std::vector data structure.

If we consider that Rosetta Code is a communal wiki that does not guarantee that the code snippets provided are ‘absolutely equivalent’, that means that the resulting paper by Rui et al. is barely worth the trip to the shredder and consequently a total waste of a tree.

Not All Bad

None of this should come as a surprise, of course, as it is well-known (or should be) that C++ produces the exact same code as C unless you use specific constructs like RTTI or the horror show that is C++ exceptions. Similarly, Ada code with similar semantics as C code should not show significant performance differences. The problem with many of the ‘programming language efficiency’ studies is simply that they take a purported authoritative source of code without being fluent in the chosen languages, run them in a controlled environment and then draw conclusions based on the mangled garbage that comes out at the end.

That said, there are some conclusions that can be drawn from the fancy-but-horrifically-flawed tables, such as how comically inefficient scripting languages like Python are. This was also the take-away by Bryan Lunduke in a recent video when he noted that Python is 71 times slower and uses 75 times more energy based on the Rui et al. paper. Even if it’s not exactly 71 times slower, Python is without question a total snail even among scripting languages, where it trades blows with Perl, PHP and Ruby at the bottom of every ranking.

The take-away here is thus perhaps that rather than believing anything you see on the internet (or read in scientific papers), it pays to keep an open mind and run your own benchmarks. As eating your own dogfood is crucial in engineering, I can point to my own remote-procedure call (NymphRPC) library in C++ on which I performed a range of optimizations to reduce overhead. This mostly involved getting rid of std::string and moving to a zero-copy system involving C-isms like memcpy and every bit of raw pointer arithmetic and bit-wise operators goodness that is available.

The result for NymphRPC was a four-fold increase in performance, which is probably a good indication of how much performance you can gain if you stick close to C-style semantics. It also makes it obvious how limited these small code snippets are, as with a real application you also deal with cache access, memory alignment and cache eviction issues, all of which can turn a seemingly efficient approach into a sluggish affair.

Having worked at two large household name tech companies in the last couple years I am afraid that I’ve seen firsthand that even where performance is supposed to matter (embedded systems and data mining) things are being written in C++ with smart pointers (thus incurring slow atomic operations to spin the refcount) even in cases where the object’s lifetime is well constrained, knowj in advance, and the object it only ever touched by one thread. Given that this was mandated by a misguided security policy I was at least able to get rid of many of the refcount spins by passing the shared pointer down the call stack by reference. Similarly, I saw a gazillion instances of vectors where arrays would have been faster. Here again, you can mitigate it by hinting the maximum size you’ll need (at which point you might as well have used an array, but again, these pathological practices are often enforced by misguided style guides).

In contrast, I’ve also worked for a company that made network gear that had to be NEBS compliant to live in telecom racks which gave it hard limits on power and thermal, and the code that ran the data plane was written in C and extensively profiles and optimized with an eye to things like cache coloring, locality, minimizing mispredicted branches, etc. and our performance per watt was between 2x and 4x our nearest competitors because we took the time to understand and leverage the underlying hardware in a way the competition did not.

This understanding takes time, practice, and thought. So long as advances in programming languages are aimed at reducing the amount of time, effort, and thought required to write software people will be seduced into taking the easy route and their software will be slower and take more energy to run. The sad thing is that modern C++, if used judiciously, can actually produce more energy efficient code simply because it has a richer, more detailed, and more nuanced set of constraints you can use to let the optimizer know the intent of your code and thus be able to prove that certain optimizations are safe which it could not prove safe with the cruder set of hints available in ANSI C, but by and large in my experience idiomatic C++ is bloated and clunky because people are focused on saving up-front costs (developer time) rather than long term costs (the extra hardware, power, and cooling required to support that bloat).

I am glad that I don’t have to nickel and dime CPUs to death in my software development career, but I do admire people who have the skills to do so.

Sounds like a job for AI to come on and optimize.

AI is a very resource inefficient way to get things wrong 20% of the time…

Ada, C, C++, and Java are in every single column. How does that make sense?

Ah is this just ranking in each column?

Thanks for the comment, I was confused about exactly the same thing until you cleared it up.

i agree with Maya’s critique of the Rui paper.

there’s something kind of distasteful to me in the kind of management-level decision making that might result from a paper like this. execution time (and energy) is usually dominated by the leaves of the call tree, and often the root simply doesn’t matter at all. i don’t really object to mandating that even high-level code is written in C…but it seems an undue constraint. and there are certainly idioms and practices when writing C that produce abysmal performance as well. (fwiw i’m a huge huge C supremacist).

some anecdotes…

on a whim, and as my only exposure to ocaml, i translated a complicated recursive datatype from C to ML. i was pretty upset at ocaml’s limitation as i did it — iirc it effectively only allowed 16-bit ints (my use case required a power of 2, that might have limited me), but took a 32-bit cell to store it in. something about how the garbage collector works. ghastly. so imagine my surprise when i benchmarked this datatype, with half the density as its direct C cousin, and found that the ocaml version was a little bit faster. and it was much easier to write — the result was a much clearer statement of the idea than the C version. of course, i don’t know what it’d be like to write the rest of my program in ML but that really surprised me and mostly it just raises a bunch of questions in my mind about what’s possible. and it makes me suspicious of a ranking that puts ocaml below C++.

because of the article’s mention of printf vs cout, i tried an experiment…just printf(“hello world %d\n”, i); vs std::cout<<“hello world “<<i<<std::endl;. i genuinely believe that the C++ version intrinsically does less work than the C version — it has a constant branch to the int output stage while printf has to parse the string “%d” to branch to it. but when i compiled it without -O, the C++ version took minutes to execute it 10,000,000 times. when i added -O, the C++ version is only 3x as long as the C version: 2.1s vs 0.7s. only 3x the time to do 0.95x the work. the idioms people actually use with C++ are always like this…the whole design of the STL, for example, is based around meditating on what could be inlined, and how much faster it could be if the callee was compiled in the context of knowing the caller’s constant arguments. somehow that meditation produces incoherent deeply nested hierarchies of calls and templates that it’s simply too large a volume of garbage for the compiler to magically undo all of that overhead. and the compiles take forever!

Your C/C++ code is not equivalent, std::endl contains an implicit flush, comparable to fflush(stdout).

With std::endl replaced by “\n”, the results are still in C’s favor, but not that much – on my old PC the C version runs in 0.7s vs 0.8s for C++.

This actually serves as a good illustration of how easy it is to mess up such language comparisons.

far out! an explicit flush on top of the implicit one guaranteed by cstdio if stdout might be a terminal. learn something new every day. thanks

Also, it would be a more direct comparison to use puts() rather than printf(), since printf() does formatting where iostream does not as used here.

GCC converts printf to puts in some circumstances.

I’ve never seen it do that even in the simplest of situations, but I don’t tend to go beyond -O2.

Of course, it’s also been a few years since I’ve written anything in C or C++, so…

One has to be extremely bored in life to do a paper on how much energy a language takes to compile.

Have you met a CS researcher lately? They love this kind of thing. (I work in a university CS dept.)

Think about computational complexity. Many useful algorithms can be implemented in a slow and resource heavy language. My recent PRNG research led me to abandon Python in favor of C/Rust when I found that Python took 10 hours to do what C took 1 hour to do. The rewrite of my Python code to C was a smart change since I gained time I would have spent waiting. A 1 hour rewrite saved me months of waiting for my code to run.

I hate it that my lab uses M$ excel scripts for calculating some curve fitting and integrals.

I want to rework this code as I know, there is a guy sitting at his desk, drinking coffe and staring out of his window while he waits for excel to finish where a program written in another language could finish it in seconds.

But I was told to not do it because it’s not my area of work and someone who is really good in writing excel scripts made this and it works and it produces correct results. Until the point where it doesn’t because the script hangs on flaed data.

Very likely the guy who told you this, just does not care how much time you waste waiting for software to finish. He’s probably also lazy and not interested in verifying any “untested” method. But of course he can’t admit he’s lazy, so he comes up with some lame excuse instead.

If it ain’t broke…

There are many factors involved: My favorite is “design time”. Is the cost of designing a function in a different tool worth the savings in time for execution? Would the function work well with other tools available?

Looking at a single aspect of a system ignores other important aspects.

DISCLAIMER: I have been widely criticized for my insistence on thorough requirements analysis. I insist on clear requirements for costs, function, performance, usability, aesthetics, and maintenance EVEN FOR SMALL (almost trivial) PRODUCTS.) For instance, one project I have is an Excel system for Real Estate Investment analysis. Excel is available to almost everyone, it is easy to understand what the app does, design is consistent, and we have added 200 functions in the last 3 years that are easy to maintain and communicate with other functions seamlessly because they are all developed in the same environment. On the other hand, Morgan Stanley had a system to close their daily operations that took 13 hours to complete. They spent a LOT of money getting the system converted to parallel FPGA and reduced the process to less than 5 minutes. Pick your battles.

Disagree – even if it’s flawed, it’s useful to encourage people to think about this, data centres and computers in general today represent a vast amount of the energy we use, if some simple switches or smart decisions could be made to shave even a few percent off that usage it would be no bad thing.

I’d have thought that the number 1 parameter in all metrics is how good the programmer is at writing good, efficient code. When I was at uni, I wrote a VB6 app that was quicker than a peer VC6 counterpart. The lesson here is not that I’m good, but that he was even worse than me at writing code.

Nowadays, with e-stores, you’ll see everyone and their dog trying to sell you apps (e.g. a clone of a NES game). Except their game is 100MB and lags on a multi core multi GHz, whereas the original fitted on a 8KB cartridge and worked fine on a circa 1MHz CPU. Then they’ll tell you “you need a new computer with a faster CPU and more RAM”. No, what we need is people who actually know how to write proper code. That won’t be me by the way, so don’t ask!

The writing of really efficient code seems to be at the bottom of the requirements list now days. I optimized the OS for a Lockheed SUE 16 bit minicomputer. It read magtape and printed to microfilm, 62k Bytes of ram, 0.9 MIPS, 22,000 lines per minute. Not bad for a system done 40 years ago.

It’s replacement was by a 68020 and 68010 multiprocessor system, using a commercial OS (I don’t remember what it was now.) The replacement wasn’t any faster… It was newer, bigger, had multiple megabytes of ram. Oh well.

“C being effectively high-level assembly code”

This is far from truth. In fact, there is no programming language which provides a good thin abstraction over a modern cpu instruction set. SIMD, cache manipulation, core communication mechanism, etc. are not properly abstracted and most programming languages just assume we have a single threaded machine with a linear memory model and no vector operations.

Just using C without intrinsic or specialized libraries will take you so far, and they don’t blend with the native features. In fact, C++ operator overload is one of the few things I missed on C.

you’re right but there’s a lot of gray area. intel made proprietary __sync_xxx() builtins for their atomic compare and swap family of instructions, which everyone adopted and eventually became relatively standardized as __atomic_xxx(), and then wrappers for those became formally standardized as stdatomic.h in C11. that covers most user-level core communication, i think. the common cache manipulation is covered by most compilers under __builtin_prefetch(). there are a smorgasbord of approaches to SIMD but the fact of the matter is it’s just not that generally useful — now that opencl / cuda is more common, that’s the more productive way to program a true vector engine. and for the really obscure stuff you can use __asm(“…”), which lets you access the non-standardized things…assembler isn’t standardized either so i think that’s exactly a way that C is just like a high-level assembler.

really, the biggest reason C is like high-level assembler is all the things it doesn’t do. ultimately, any language can access these obscure features one way or the other, but most of them are going to put a bunch of fluff in the way, or make you fight to avoid the fluff.

Wot no FORTH?

Please leave that abomination die. RPN works quite nicely on my HP calculator which I still treasure, but it’s horrible for documenting more complex things. When you read any normal computer language, you read things in the order they happen without having to remember what was a bunch of levels deep pushed onto the stack.

i understand frustration reading forth, but you got it exactly backwards :)

in forth, you read things in the order they happen. in most other languages, you read things in the order that makes sense to you to express them in. there’s no more well-ordered language than forth! it’s a strength and a curse :)

Obviously you have not used a Forth with modern amenities such as local variables, modules, objects, allocators (e.g. heaps, pools), proper multitasking constructs, and so on. These are things that I have included in my Forth for ARM Cortex-M microcontrollers, zeptoforth, and they make life so much nicer when programming in Forth.

Sounds like it combines the best of Forth and C++, the world has been waiting for this to happen.

I love FORTH. I have written my own versions many times. Unfortunately, I spend as much time writing a good FORTH program as I do an Assembly Language program. I use it mainly only for embedded systems and robots.

But for clear thinking, quick and elegant programming solutions, and sheer Logic, I prefer LISP-like languages: I spend over half my time programming in LISP, Scheme, Racket, or Haskell and I get work done. They don’t run as fast as I would like all the time, but that is the tradeoff for getting something running in 2 days rather than 2 weeks.

Over the last 59 years I’ve been exposed to MANY different languages. Too many. I regret spending so many years learning languages that I don’t use any more. A good programmer who settles on one or two languages to be an expert in can usually create a product in a shorter time than I can, until it comes to LISP. I learned LISP back in the late ’60s (I was doing Artificial Intelligence back then.) but got talked into moving to “Structured Programming” languages like Pascal because “nobody will be using LISP in a few years.” Now that I’m old enough to do programming to please myself instead of catering to corporate superstitions I love programming again. I’ve done a lot of experimenting. If I was a young guy just starting out I would learn Assembly, C, C++, and Python and ignore all the current programming fads to concentrate on producing the BEST products with the tools I know. There is a huge learning curve with RUST, technically and conceptually, but it might be better than C/C++ in the long run.

Also, I would spend a lot of time increasing my ARCHITECTURE/DESIGN skills. I would make sure I was an advanced Black Belt in MDD, UML, and Logic, because I believe design skills are more important than coding skills. You can find me in my basement writing code-generators in LISP that do round-trip engineering in UML while being processed through my favorite genetic algorithm arrays to optimize the speed and efficiency…

isn’t FORTH much the same as LISP, just with the reading order of the predicate and arguments reversed?

So many moons ago, I was programming Z80-based devices using assembly language, where we had storage and speed constraints. This means I was counting bytes and machine cycles. I also knew there would come a time when all that was irrelevant, where nobody would care how big your program was, neither how fast it ran. Nowadays we see simple Go and Rust executables taking up megabytes of space. We also see Python and JavaScript programs being compiled at runtime producing no executables. In between we find Java and Elixir running on virtual machines.

I left college knowing exactly and in minute details what happens behind the scenes of any computer program. Some of the most incompetent programmers I have ever met have no idea; they abuse the computer with poorly written code that performs badly. As an example, not too long ago, I converted a series of programs that had to run in sequence into a single one, reducing the execution time from several long hours to a handful of minutes. What was the trick? Nothing special: just knowing how a computer operates.

With all that said, I’m particularly appreciative of this study. If I can contribute to making computers (and data centers, by extension) conserve energy, then I’m benefiting myself (among others). With limited resources, the less energy is being used to fuel computers, the more energy will be available for me.

I’ve spent years writing in assembly (entire products and bootloaders for same), and using that knowledge writing a debugging engine. My current work is embedded development, which involves a number of languages, including some scripting. Nothing beats well-written assembler for speed and size. Well written C code and an optimizing compiler can come close.

However, the typical employer these days usually wants platform portability, and a commodity language which a lot of developers are familiar with. Assembly isn’t going to fly there.

One of the more egregious efficiency failings is not identifying system static parameters – things that will not change during program execution, up front ONE TIME, and instead querying them every time some function is called.

Almost all scripting languages have pretty hefty initialization-time overheads (runtime compilation, loading and parsing a lot of scripted libraries. etc), and that will weigh heavily against them in short lived invocations, versus longer lived processes (such as a daemon) where those initialization overheads will average out.. Conversely, long lived script processes can starve the system for resources because of poor garbage collection implementations (including, but not limited to, running as if the scripted process is the only thing that needs to run), forcing the system to swapping.

I’m happy to see that Visual Basic isn’t in the list.

Compiled languages tend to find gross coding faults (like simple typos or undefined variables, etc) during compilation, before ever deploying to the target system. Scripted languages often conceal such blatant code errors until the specific line of code is executed, if ever. The test and debug overhead for such things should be considered as an efficiency factor. Of course, pointer errors and error handling can still get you, but a simple typo shouldn’t.

“I also knew there would come a time when all that was irrelevant, where nobody would care how big your program was, neither how fast it ran.”

wow! i didn’t have that foresight. one day i was using borland turbo C++ 3 on MS-DOS, fighting with EMS just to keep a few hundred kb in ram at once, and then the next day i remember the feeling…i called malloc() with more than 64kb and i asked myself, “well what do i need to do to make that succeed?” and the answer was nothing! i didn’t need no __far, or ems_malloc() or paging or anything like that. i was astonished! i had no idea that was the world i was entering when i installed linux on a 486. i never looked back but the transition itself was a complete surprise to me. a deeply euphoric experience :)

a few years ago i called malloc() with more than 4GB, trying to recapture the feeling. it was certainly a pleasant experience but by then i knew it was coming and it wasn’t astounding any longer.

What does this have to do with language?

It either compiles into machine code, compiles into byte code to run in a VM/VE, or it is run as a script (which is translating to machine/byte code)

The compiler is what really matters.

If you add two integers in C, the code can do whatever it wants on overflow. Even with -fwrapv, the compiler doesn’t have to do anything special on most CPUs.

In Ada, the compiler has to issue an exception on overflow. That has a cost; it can’t be optimized out in all cases. There are several other cases where Ada requires checks that C doesn’t.

On the flip side, Fortran doesn’t permit pointers to alias, so a compiler doesn’t have to worry that changes through one pointer will change what the other pointer points to. C99 added the restrict attribute, but with earlier C code, and C code without the restrict attribute, the compiler has to assume two pointers passed to a function might point to the same item.

Except your CPU isn’t running C or ada.

It is running machine code.

I get that a compiler needs to be compliant with the language, so the language might have some hard requirements about what machine code gets generated, but that can be fixed with an addendum and a compiler optimization flag.

Also, different compilers are already going to give different results even with the exact same source, meaning something in C doesn’t necessarily run the same even on the exact same hardware if a different compiler is used.

It just seems silly.

It’s like comparing the works of two authors after reading books that 3rd parties have localized and translated. Sure, the story is probably the same, but all the little details are from the 3rd party, not the original author.

And then there are libraries like Pandas or NumPy in a “slow” language like Python that are just thin abstractions to well written C code. Multithreaded, fast and easy to use. You write a few lines and get results in no time.

If I don’t have to code for days that is very energy efficient.

This. For many applications there are a few sections of code that dominate performance. Being able to optimize these either by using a built in package.like NumPy or linking to efficient compiled code like MATLAB MEX files can provide a good balance between ease of development for overall program structure and optimizing execution.

Micro-benchmarks are never a good way to measure things when it comes to computing.

Today I learned that scripting languages are less energy and execution speed efficient than compiled languages. Great !

I worked in a big company with a huge IT department where we did COBOL CICS transactions and batch to output invoices and payrolls.

The main concern of the department management was that there would be no bugs or too many delivery delays. The rest was irrelevant.

However, there was in an office at the end of the corridor a bearded programmer who only coded in IBM assembly language. We would go see him when we had problems with the COBOL compiler and he could spot the errors on the dump list. When we asked him why he coded in assembly, he told us that it was more efficient, both for him in terms of coding time, but also for the users thanks to a higher speed. We listened to him as one listens to an old magician.

We didn’t understand much of his explanations about our code but by applying his recommendations things worked better.

Yeah, In 1965 I was doing Cryptology on IBM 1401/1440 systems. I was programming in an assembly language called AUTOCODER. I still love the control I have over my systems when I do Assembly language.

However, back in 1990, I noticed that the programs I wrote in Turbo Pascal ran 10-20 times faster than the same programs written in Turbo C. I never had the time to figure out why, and I find little articles like this one pretty interesting.

I also had that experience, but I took it a step further and analyzed the assembly code they generated.

And then I tried to write more efficient code myself in assembly code.

I couldn’t ! I spent a couple of weeks trying ..

The turbo Pascal 5.0 code was the most efficient .. all hail Borland ;-)

Philippe Kahn didn’t like the C language. I guess that affected the quality of the generated code.

Factor in the disruption/costs that bad C code can cause and you may find that Rust wins in the long term.

Factor in the long compile time that rustc incurs on every compilation cycle and you may find that it’s not the winner.

Nah, that is ridiculous given the number of CPU cycles the deployed code would ultimately consume.

You left out COBOL. Don’t laugh, a huge percentage of the world’s transactions run on it. Its proponents will tell you it’s very efficient because it compiles into machine code and doesn’t (typically) use dynamic memory, pointers, etc.

I do think it’s important for us to look at this because data centers are using significant amounts of natural resources these days.

Reducing waste is admirable, but this is silly.

This is like saving fuel by fiddling with the nozzle on the TruckZilla flamethrower.

Saving 0.1% fuel is always good. But maybe burning 200 gallons of fuel during each show for the entertainment value is a bigger problem.

Even if we ignore the fact that image/video generation and LLMs are explicitly theft, they are enormously wasteful, and they are just toys.

We should not be allowing people to waste a significant amount of all human electrical generation on this crap, just so some executives and shareholders can make some money.

Glad to see Pascal getting favorable coverage. I wish we hadn’t quit using that language.

I am with you. Borland Pascal came out while I was still in college and ran on my Dec Rainbow — first home computer. Faaaast compiling… I used Pascal/Object Pascal for a good chunk of my career along side of C/C++ and some assembly. Could really rock the world with Pascal and Delphi…. A lot of ‘what-ifs’ became production code in those days :) . I use free Pascal and Lazarus now for a few projects, but Python and C/C++ seem to be used the most now in the latter part of my career.

As for energy efficiency, I don’t worry about that. I just pick the best language for the job at hand to efficiently implement the project. Time is money and writing everything in assemble is not where to go except for some special cases… or just for fun hobby projects. That would be frowned upon in the work place.

“This is why C++20 decided to double-down on stream formatting and fudging in printf-like support with std::format and other functions in the new header.”

I’m not sure I understand this. As far as I see it, std::format is a deliberate step away from stream formatting and toward what is functionally a typesafe sprintf. Neither the libstdc++ header nor the defacto-standard fmt include , though both implement some iterator-based stream formatting functionality because sometimes that’s the right tool for the job. Afaik C++20 doesn’t provide a standard means to print/write a string without using iostream, but C++23 rectifies that with std::print.

Where did the table at the top of the article come from?

I thought this was going to be about the efficiency of writing the program. Our team uses Kotlin where a single line of Kotlin can create pages of Java Runtime Code. However if you do not have a Kotlin Function that does exactly what you want you are out of luck.

FORTRAN consumes a lot of trees, for the 80×25 coding sheet paper, and the #2 pencils.

My method in research is simple , write on python ,measure complexity, if it optimize so C or others language do the same . so in next steps i have to use a technical named macro optimization, to do that in many language such as C ,C++ or Rust , with supported LLM , i must to know 6 things , 1st is pattern of these language, 2nd tool/language doing best of which ? , 3rd workflow,4th detailed of work flow ,memory management of the program which is located in workflow, 5th library supported, 6th set up the project before doing previous steps.

I mainly write on C .but in personal project it s terrible, that why i using python LLM and C . because python is open source that mean library also open, some of which are written in C , so i could take it for my C code project, other syntax or structure on python could be use to describe in C except pointer , but it could be use a list or dict as virtual memory for heap and stack in C , so to make its have relevant together, i added a call back in python, it also mean in C , we use pointer to point a memory area. It work not perfect but at least i cant develope and research, and testing , and coding as a same times . to solve memory created from this process, i use a lot of google colab account .

LLM is language model yes ,but the way the tool analysis language is the same human did on many programming language nowaday especially high level language, so basically it could convert together if we know definition of the language . that why i write on python , it s more readable ,and LLM do the rest of coding , that why at begin i said detailed of work flow is crucial key , it took me 2 year to understand untill i could design for my own . hope it help , that is my experience. I work in small company make measurement equipment suit in industry.

I managed to live over half a century rarely encountering the word “idiomatic.” Then I started trying to learn Rust. After the first few days of online searches it became a trigger word.

By the way, if Rust fanatics are “Rustaceans”, can we call Java fanatics “Them Duke Boys”? …Told ya I was old.

haha i’ve been programming java for 25 years and i never saw or heard of that mascot though

How does Rust fair?

Look at the Title Photo.

Maybe you (the author) should release a paper using these considerations and check the results against Rui’s.

My co-workers are responsible for 99.999% of our prototype code. They spend months programming in languages like Python. They use power-hungry 32bit processors with a hundred pins. They’ll require an OS for a bluetooth light bulb.

When they’re done, I get to make it efficient by replacing most of it with hardware or assembly using an 8-bit uc.

Well, if they’re using a “chrestomathy”, then it’s gotta be true, right?

Hey, it’s a student paper. Or at least it reads like one.

would other factors not be more important than plain choice of language ?

things like :

– how much disk i/o (I’m old, I’m thinking platters, might be less relevant in SSD’s)

– how much backup versions (adding to the i/o budget)

– the interface apparatus and it’s motors/fans/lights (screens can consume a lot of juice..)

– tight loops (causing cooling fans to switch on) and/or

– the required response times (again : slower processing-> less hotspots to cool)

– distributedness (that cloud is a lot of extra power-hungry hardware compared to your local under the desk server)

One month later and already time for an update!

Please take a look at — “It’s Not Easy Being Green: On the Energy Efficiency of Programming Languages”

https://arxiv.org/html/2410.05460v1

and “Programming-Languages-Ranking-based-on-Energy–Measurements”

https://github.com/GrupoAlarcos/Programming-Languages-Ranking-based-on-Energy–Measurements