Have you wanted to get into GPU programming with CUDA but found the usual textbooks and guides a bit too intense? Well, help is at hand in the form of a series of increasingly difficult programming ‘puzzles’ created by [Sasha Rush]. The first part of the simplification is to use the excellent Numba Python JIT compiler, which lets easy-to-understand Python code be compiled into GPU machine code. Working on these puzzles is even easier if you use this linked Google Colab as your programming environment, which launches you straight into a Jupyter notebook with the puzzles laid out. You can use your own GPU if you have one, but setting that up isn’t covered.

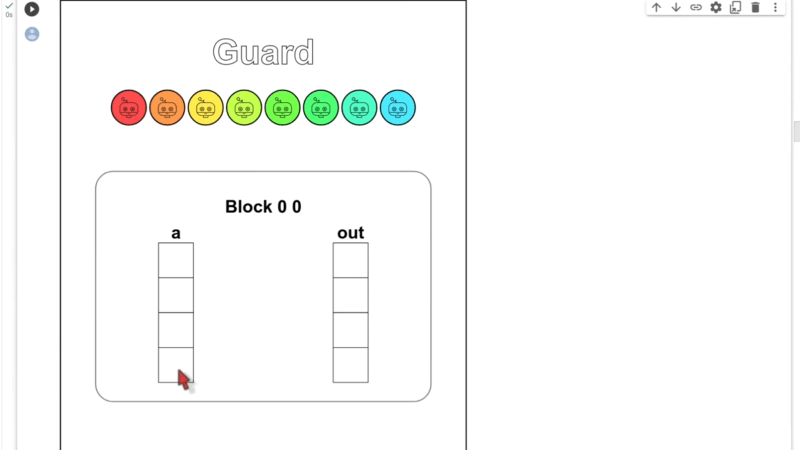

The puzzles start by assuming you know nothing at all about GPU programming, which is totally the case for some of us! What’s really nice is the way the result of each program is displayed, showing graphically how data are read from and written to the input and output arrays you’re working with. Each essential concept of CUDA programming is introduced one at a time with a real programming example, making it a breeze to follow along. Just make sure you don’t watch the video below all the way through the first time, as in it [Sasha] explains all the solutions!

Confused about why you’d want to do this? Then perhaps check out our guide to CUDA first. We know what you’re thinking: how do we use non-NVIDIA hardware? Well, there’s SCALE for that! Finally, once you understand CUDA, why not have a play with WebGPU?

Great graphics stuff :-) To hack is to learn; to make a hack to learn is to hack a hack.

I’m still discouraged because my first attempt to learn OpenCL (last year) revealed a bunch of kernel/driver bugs that resulted in unkillable tasks. It’s definitely still an uphill challenge, a “steep learning curve”. I hope tutorials like this mean more foot traffic, shining a light on these low-hanging bugs.

The biggest thing with a GPU is that, unlike a CPU, one instruction can modify more than one memory location, or you can run several different instructions on different memory locations at the same time: in parallel, not serially like a CPU. So things like branching, conditionals, and Boolean logic may work differently.

Though GPU instructions are simpler than a CPU’s, GPUs also have hardware support for floating-point operations. You can think of a GPU instruction set as being like RISC, but with the instructions working in parallel and in a somewhat non-linear fashion. And the instructions are tailored more to algebra, calculus, and trigonometry-based math.

But you technically can run an entire OS on a GPU, like my old AMD Fiji with third-party overclock support and 4 GB of HBM RAM, running at 1.4 GHz with a slight memory overclock. Use a few shaders, some floating point, and an MMU, and you could get Windows running on a GPU, itself running in a Windows PC, both natively and in real time.

It won’t be playing GTA V, though.

Well, someone was dedicated: https://bford.info/pub/os/gpufs-cacm.pdf

This reminds me of a thought I’ve had brewing for a while now. I was imagining a CPU with very basic, stripped-down cores, but loads of them, with a segment of them used for software-defined fetching and scheduling.

You could strip them down really far and, for example, do multiplication as addition spread across a bunch of cores.

I wonder if you could get them small enough to fit enough of them, and get a high enough clock, that with compiler optimization for it, it would be performant.

I think you’re reinventing bitslice design. It’s a thing with a long history. I designed a hybrid structure of FPGA (for routing and data-munging tasks) and bitslice ‘cores’ back in college 25 years ago. Well, I wrote it down, anyway; it never made it to reality. I did come across an EE researcher who was studying 1-bit CPU cores optimised for super-high clock rates, but I didn’t hear much more about that either.

Can’t reply to you directly for some reason, Dave, but I’d say it seems somewhat similar, though more like splitting up complex operations across cores instead of across a set of bits.

I think of it like hyperthreading to the extreme: instead of the unused sections of a core working on a separate operation, the unused portions are minimized and the space is devoted to more cores entirely.