If you need to measure the temperature of something, chances are good that you could think up half a dozen ways to do it, pretty much all of which would involve some kind of thermometer, thermistor, thermocouple, or other thermo-adjacent device. But what if you need to measure something really hot, hot enough to destroy your instrument? How would you get the job done then?

Should you find yourself in this improbable situation, relax — [Anthony Francis-Jones] has you covered with this calorimetric method for measuring high temperatures. The principle is simple; rather than directly measuring the temperature of the flame, use it to heat up something of known mass and composition and then dunk that object in some water. If you know the amount of water and its temperature before and after, you can figure out how much energy was in the object. From that, you can work backward and calculate the temperature the object must have been at to have that amount of energy.

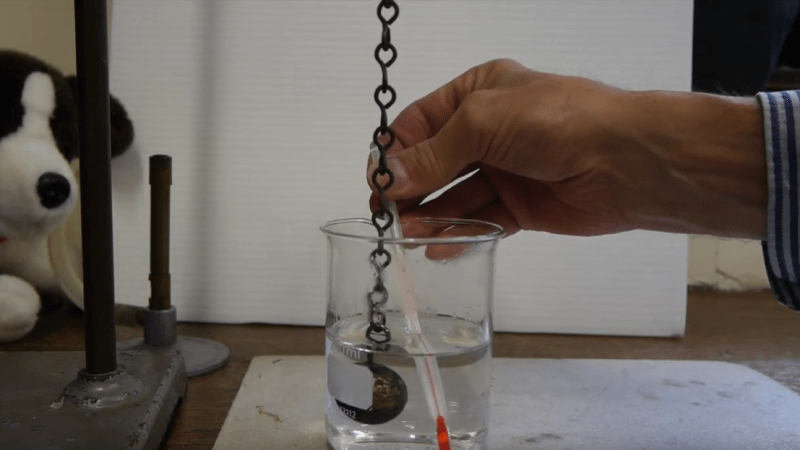

For the demonstration in the video below, [F-J] dangled a steel ball from a chain into a Bunsen burner flame. After a nice long toasting, the ball went into 150 ml of room-temperature water, raising the water temperature by 27 degrees. Knowing the specific heat capacities of water and steel and the mass of each, he worked the numbers and came up with an estimate of about 600°C for the flame. That’s off by a wide margin; typical estimates for a natural gas-powered burner are in the 1,500°C range.
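To make the arithmetic concrete, here is a minimal Python sketch of the energy balance, assuming no losses and constant heat capacities. The 150 ml of water and the 27-degree rise come from the demonstration; the 60 g ball mass, the 20°C starting temperature, and the 0.49 J/(g·K) figure for steel are assumptions picked for illustration, not values taken from the video.

```python
# Energy balance: heat gained by the water = heat lost by the steel ball.
C_WATER = 4.18  # J/(g*K), specific heat of liquid water
C_STEEL = 0.49  # J/(g*K), assumed constant value for steel


def ball_temperature(m_water_g, dt_water, t_final_c, m_ball_g,
                     c_water=C_WATER, c_ball=C_STEEL):
    """Temperature of the ball just before it hit the water, in deg C."""
    q = m_water_g * c_water * dt_water      # joules absorbed by the water
    return t_final_c + q / (m_ball_g * c_ball)


# 150 g of water warmed by 27 degrees (from the demo).
# Ball mass of 60 g and a 20 C start are hypothetical.
estimate = ball_temperature(150.0, 27.0, 20.0 + 27.0, 60.0)
print(f"Estimated ball temperature: {estimate:.0f} C")
```

With these made-up inputs the result lands in the same neighborhood as the roughly 600°C quoted above, which says more about the choice of ball mass than about the flame.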

We suspect the main source of error here is not letting the ball and flame come into equilibrium, but no matter — this is mainly intended as a demonstration of calorimetry. It might remind you of bomb calorimetry experiments in high school physics lab, which can also be used to explore human digestive efficiency, if you’re into that sort of thing.

Insert your own “not a hack” comment here. You’re actually using a thermometer to MEASURE TWO temperatures, then CALCULATING a third.

Alright, here’s a hack for you: drop the thermometer. The Centigrade scale is based on chopping up the range between ice and steam into 100 pieces. Use your hot object to turn some ice to steam and compute the temperature from that.

Ditch the steam and use a water/ice mixture in equilibrium and a kitchen scale.
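A rough sketch of how the ice-bath version might be computed, assuming the bath stays at 0°C and every joule from the ball goes into melting ice. The function name and both masses are hypothetical; 334 J/g is the usual latent heat of fusion for ice, and 0.49 J/(g·K) is an assumed constant for steel.

```python
L_FUSION = 334.0  # J/g, latent heat of fusion of ice
C_STEEL = 0.49    # J/(g*K), assumed constant value for steel


def ball_temperature_from_melt(m_melted_g, m_ball_g,
                               c_ball=C_STEEL, latent=L_FUSION):
    """Ball temperature in deg C, given the mass of ice it melted.

    The ball cools from its starting temperature down to 0 C, so the
    temperature drop equals the starting temperature.
    """
    q = m_melted_g * latent          # joules needed to melt that much ice
    return q / (m_ball_g * c_ball)


# Hypothetical reading: the scale shows 50 g of ice melted by a 60 g ball.
t_ball = ball_temperature_from_melt(50.0, 60.0)
print(f"Estimated ball temperature: {t_ball:.0f} C")
```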

Ice can be colder than 0, steam can be hotter than 100.

Yes, but water and ice in equilibrium sit at 0°C. Granted, the ice is melting because the water is absorbing heat from its vessel and surroundings, and some of the water is slowly evaporating into the air, but its weight and temperature are considered constant.

Wasn’t talking about “in equilibrium”; was talking about ice on one hand and steam on the other. The ice in your home freezer is below 0°C. The next transition for steam is plasma, and steam can get quite hot on the way to that.

That was the point.

Yes, you are right about that – exactly what I do without being too concerned about its accuracy. I have always loved this experiment and feel it may have been rather forgotten. It, of course, is less about the answer and more about the method and its limitations. Kids used to enjoy it!

The primary source of error is probably the hissing and bubbling you hear as the ball is dropped into the water, i.e., the phase change of the water into steam. Even if the steam didn’t escape, that is energy spent on breaking high-energy hydrogen bonds instead of increasing the oscillation of the molecules.
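For a sense of scale, this small sketch estimates how much energy one gram of escaped steam carries off, assuming the water starts at 20°C. The 60 g ball and the 0.49 J/(g·K) steel value are hypothetical; 4.18 J/(g·K) and 2260 J/g are the usual textbook figures for water.

```python
C_WATER = 4.18    # J/(g*K), specific heat of liquid water
L_VAPOR = 2260.0  # J/g, latent heat of vaporisation of water
C_STEEL = 0.49    # J/(g*K), assumed constant value for steel


def steam_loss_joules(m_steam_g, t_start_c):
    """Energy to heat water from t_start_c to 100 C and boil it off."""
    return m_steam_g * (C_WATER * (100.0 - t_start_c) + L_VAPOR)


loss = steam_loss_joules(1.0, 20.0)   # one gram of water lost as steam
captured = 150.0 * C_WATER * 27.0     # heat the thermometer did see

# How far the ball estimate would be off, for a hypothetical 60 g ball.
underestimate = loss / (60.0 * C_STEEL)

print(f"Lost per gram of steam: {loss:.0f} J")
print(f"Share of measured heat: {loss / captured:.0%}")
print(f"Ball estimate low by:   {underestimate:.0f} K")
```

Under these assumptions, each gram of water that flashes to steam hides a noticeable fraction of the heat that was actually measured.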

There is also energy absorbed into the glass walls of the beaker, energy which causes the expansion of the water, the radiated energy, and energy lost during transfer. (Plus a small amount of kinetic energy which gets converted to heat from the deceleration of the ball.)

More careful calorimetry would perform the combustion of the flame inside the (insulated) calorimeter itself.

Yes, you are totally right about that! It was just a demonstration; a setup that gives a more accurate value would be a bit more complex! F-J

I suppose I have to chime in on this one.

There are several additional confounding factors with this technique beyond what’s mentioned in the video. The specific heat capacity of stuff changes with temperature, and that steel is changing temperature enough that its heat capacity is probably 50% higher when hot than at room temperature. Relating the heat transferred to the temperature change is a matter of integration, not multiplication.
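To illustrate the integration point, here is a sketch that solves for the ball temperature when the heat capacity varies with temperature, i.e. Q = m ∫ c(T) dT. The linear model in `c_steel` and its slope are placeholders chosen for illustration, not measured data for any real steel, and the 60 g ball mass is hypothetical.

```python
def c_steel(t_c, c0=0.49, slope=0.0004):
    """Hypothetical linear heat capacity in J/(g*K); not measured data."""
    return c0 + slope * (t_c - 20.0)


def heat_released(t_hot, t_final, m_ball_g, c_of_t, steps=1000):
    """Trapezoid-rule integral of m * c(T) dT from t_final up to t_hot."""
    h = (t_hot - t_final) / steps
    total = 0.5 * (c_of_t(t_final) + c_of_t(t_hot))
    for i in range(1, steps):
        total += c_of_t(t_final + i * h)
    return m_ball_g * total * h


def solve_t_hot(q, t_final, m_ball_g, c_of_t, upper=3000.0):
    """Bisect for the starting temperature that releases q joules."""
    lo, hi = t_final, upper
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if heat_released(mid, t_final, m_ball_g, c_of_t) < q:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


q = 150.0 * 4.18 * 27.0  # joules absorbed by the water in the demo
constant = solve_t_hot(q, 47.0, 60.0, lambda t: 0.49)
varying = solve_t_hot(q, 47.0, 60.0, c_steel)
print(f"Constant c: {constant:.0f} C, temperature-dependent c: {varying:.0f} C")
```

If the heat capacity rises with temperature, the same measured heat corresponds to a lower starting temperature than simple multiplication suggests.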

Possibly more significant is that the ball will never come to thermodynamic equilibrium with the gas around it. Radiation moves heat around very fast at the temperatures involved, and the flame is mostly transparent to infrared so the ball is free to radiate heat away to the room. Even if you wait long enough to reach a steady state, the ball will be significantly cooler than the flame.
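A very rough sketch of that steady state balances convective heating from the flame against radiation to the room, per unit of ball surface: h (T_flame − T_ball) = ε σ (T_ball⁴ − T_room⁴). The convective coefficient and the emissivity below are guesses for illustration only, the 1,500°C flame is the typical figure quoted in the article, and the function name is hypothetical.

```python
SIGMA = 5.670e-8  # W/(m^2*K^4), Stefan-Boltzmann constant


def steady_state_ball_temp_k(t_flame_k, t_room_k, h_conv, emissivity):
    """Ball temperature (K) where convection in equals radiation out."""

    def net_flux(t):
        # Positive while the ball is still gaining heat overall.
        heating = h_conv * (t_flame_k - t)
        radiating = emissivity * SIGMA * (t ** 4 - t_room_k ** 4)
        return heating - radiating

    lo, hi = t_room_k, t_flame_k  # net_flux is > 0 at lo and < 0 at hi
    for _ in range(80):
        mid = 0.5 * (lo + hi)
        if net_flux(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Assumed inputs: 1500 C flame, 20 C room, h = 100 W/(m^2*K), emissivity 0.8.
t_ball_k = steady_state_ball_temp_k(1773.0, 293.0, 100.0, 0.8)
print(f"Steady-state ball temperature: {t_ball_k - 273.0:.0f} C")
```

The exact number depends heavily on the guessed coefficients, but with any plausible values the ball settles well below the flame temperature.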

You’d have to surround it with an insulating material that also reaches flame temperature to remove that problem, after which you’ll run into the fun of phase transitions screwing with your specific heat calculations.

50% is rather a bit of a stretch.

“But no matter..” – just like the title “NOT Measuring…”.

Any device or setup that measures temperature is per se a thermometer.

But this thermometer measures temperature in the past.

The main losses are from boiling/evaporating water. That should be avoided.

P.S. Thanks for featuring this, Dan. The losses due to the steam are indeed an issue too!

Have a look at this one of mine https://youtu.be/u2f7Ib7KoYo and if you want to see how to determine the Latent Heat of Vaporisation of water and can bear a lesson of mine: https://youtu.be/rnyrsaU4Y-M

This hot ball thing seems like a bust. People who work with metals can estimate the temperature of the sample by the color that it is glowing. That still doesn’t tell the flame temperature.

I believe that a high temperature, like that of a flame or the sun, can be measured by measuring the spectrum of the light being emitted.

The author zigs and zags and bebops and scats between measuring the temperature of “the flame” and of “the object”. The temperature of the flame is the same from start to finish because it is continually replaced. “The object” after 5 seconds and “the object” after 5 minutes, probably different. So now we need a timer.

Isn’t any device or contraption designed to measure temperature technically a “thermometer”?

That’s equivalent to saying “this article is a thermometer”. Again, no. This “hack” purports to “measure” a temperature in the past, the temperature of the flame, using a Rube Goldberg setup of metal ball and water.

I measure temperature all the time. “Gee it’s hot out!” “Brrr, can’t wait to get inside!” You’re saying the human body is a thermometer.

“I measure temperature all the time. “Gee it’s hot out!” “Brrr, can’t wait to get inside!” You’re saying the human body is a thermometer.”

Making a change from one temperature to another, makes me think you are more like a thermostat!

B^)