A little while ago Oasis was showcased on social media, billing itself as the world’s first playable “AI video game” that responds to complex user input in real-time. Code is available on GitHub for a down-scaled local version if you’d like to take a look. There’s a bit more detail and background in the accompanying project write-up, which talks about both the potential and the numerous limitations.

We suspect the focus on supporting complex user input (such as mouse look and an item inventory) is what the creators feel distinguishes it meaningfully from AI-generated DOOM. The latter was a concept that demonstrated AI image generators could (kinda) function as real-time game engines.

Image generators are, in a sense, prediction machines. The idea is that by providing a trained model with a short history of what just happened plus the user’s input as context, it can generate a pretty usable prediction of what should happen next, and do it quickly enough to be interactive. Run that in a loop, and you get some pretty impressive clips to put on social media.
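To make that loop concrete, here is a minimal Python sketch. It is a toy illustration of the idea, not Oasis's actual code: `toy_predict` is a hypothetical stand-in for a trained model (it just shifts pixels around), and the names `play` and `context_len` are our own assumptions.

```python
from collections import deque
from typing import Callable, List, Sequence

# A tiny grayscale "image": rows of pixel values.
Frame = List[List[int]]


def toy_predict(history: Sequence[Frame], action: str) -> Frame:
    """Hypothetical stand-in for a trained model.

    A real system would run a neural network here. This toy version
    rotates the most recent frame's pixels one step in the direction
    of the action, and copies the frame for any other input.
    """
    last = history[-1]
    if action == "left":
        return [row[1:] + row[:1] for row in last]
    if action == "right":
        return [row[-1:] + row[:-1] for row in last]
    return [row[:] for row in last]


def play(
    first_frame: Frame,
    actions: Sequence[str],
    predict: Callable[[Sequence[Frame], str], Frame] = toy_predict,
    context_len: int = 4,
) -> List[Frame]:
    """Generate one frame per user action, feeding each output back in."""
    # Only the last `context_len` frames are kept as context.
    history = deque([first_frame], maxlen=context_len)
    shown = []
    for action in actions:
        frame = predict(list(history), action)  # short history + input
        history.append(frame)                   # prediction becomes context
        shown.append(frame)
    return shown


if __name__ == "__main__":
    start = [[1, 0, 0, 0]]
    for frame in play(start, ["right", "right", "noop"]):
        print(frame)
```

Note that in this sketch the only state is the bounded frame history: every prediction becomes context for the next one, and anything older than `context_len` frames is simply gone.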

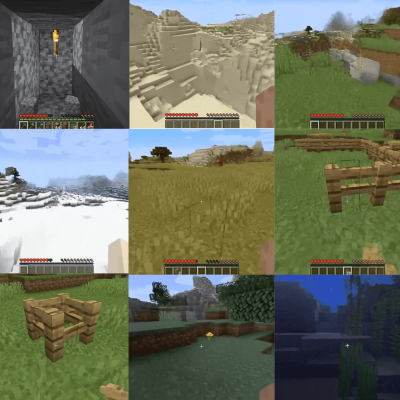

It is a neat idea, and we certainly applaud the creativity of bending an image generator to this kind of application, but we can’t help but notice the limitations. Sit and stare at something, or walk through dark or repetitive areas, and the system loses its grip and things rapidly spiral downward into something we can only describe as “dreamily broken”.

It may be more a demonstration of a concept than a properly functioning game, but it’s still a very clever way to leverage image generation technology. Although, if you’d prefer AI to keep the game itself untouched, take a look at neural networks trained to use the DOOM level creator tools.

my only complaint is that it was only compatible with the worst possible browser (chrome).

My only complaint is that Chrome is basically taking over and most alternatives are still Chrome based.

While I think Chrome seems mostly okay, Google being at the root of all modern browsers seems like a bad path to take.

Hopefully Firefox can make a resurgence or at least Chromium forked to not be crippled by some of the choices being made for Google’s bottom line.

I didn’t have a problem with Firefox Mobile. Maybe a user-agent switcher could help?

66.6% browser market share (Hello chthonic Outside powers btw)

What can you do

DOOMCraft?

I took a look and there is no code, not really. There is code to run an AI model which is to say everything about how it operates is hidden inside a neural network-based black box.

I’m failing to see the real “point” of this – it’s mimicking an existing game’s visuals and mechanics, a game which is already procedurally generated that also “responds to complex user input in real-time”. It doesn’t look like it can/will be able to track what the player already did, just create endless new environments… which, again, already exists as a core feature in the thing it’s mimicking, except these look like they lack consistency and even cohesion, so you can’t even go back to places you’ve already explored or things you’ve already built. Kind of like a dream, where everything changes from moment to moment.

As a tech demo, I guess it’s pretty cool, but again… what’s this doing beside showing that Minecraft is an easily predictable game?

Its purpose is to be a tech demo and experiment to progress AI.

Just like how people were mocking AI generated images when they first came out looking like nightmares, this is just a first step.

My guess is that the ideal goal would be to make an AI capable of long-term consistency and advanced enough to extrapolate.

As in, an AI that can take in a game, simulate it accurately for a user and then expand on it. A basic example would be if it could add a new mob or block type to the game autonomously with behavior that makes sense.

Combine this with how LLMs are being experimented with for writing dialogue and interactivity, and with other generative models making audio, and you have a massively dynamic game engine.

the part that is neat to me is that when it dreams, we are able to subjectively recognize that it is dreaming, that it is analogous to our process.

That’s a huge insight. I hadn’t looked at it that way yet.

But is it because we subconsciously put those patterns into the AI, or are those patterns really unexpectedly analogous to our dreaming process?

Did we create the ‘dreaming’ of an AI ourselves because of the limited way we understand our own brains, and accidentally and unknowingly added it into the mechanism that makes an AI tick, because we modeled the mechanism to how we think our brain works? Or is it really a side-effect of real intelligence, and not even related to how our brain works?

The implication might be that ‘dreaming’ is something universal that even aliens would do, because it’s part of what makes ‘intelligence’ tick.

To be honest, we could actually consider an AI ‘alien’. Even though it was made on this Earth, by people from this Earth, it didn’t originate from this Earth… It originated from the worlds inside people’s heads, which are quite similar to Earth, but not quite. :)

As far as I can tell, it’s designed to show exactly how explicitly AI can commit copyright infringement.

I mean, this isn’t fair use. It’s using copyrighted content to generate something that can be confused for the original. It’s practically the definition. It’s like stitching together clips from a movie into the full one.

Heck, fan versions of games are more legal than this.

Bad faith take

The purpose of the proof of concept is to quickly and easily carve a path for a more serious application of the technique to follow. He’s not going to try and design a whole original game just to show it can be done, that would be 100x the effort and probably fail

I’d call it a benchmark. As AIs become better, they should be able to gain all those things that you identify as lacking. And the more complete and precise they can emulate the games, the better the AI is. Or at least some of an AI’s aspects.

But to be honest, let’s not call it ‘AI’. It’s ‘ML’: Machine Learning. It’s not autonomous, it’s still a machine. Even the smallest insect, even plants, are autonomous within the rules that their physics set for them. What we call AIs are not autonomous.

When an ‘AI’ decides all by itself, without even having been asked, to e.g. emulate the game in all its details and display it to other I’s and AI’s as an art object, for others’ entertainment and its own feeling of worth, or even just doing it for its own entertainment, THEN we are beginning to talk about an Intelligence. Because it made an autonomous decision, not random but based on reasoning and its own free will, to do something for its own or for others’ good.

Of course there are thousands of ways an AI might demonstrate autonomy. Waiting for it to decide to emulate a whole game just for the fun of it is probably a bit far-fetched. Waiting for it to decide to binge Facebook videos is probably more realistic. :+)

And also another ‘of course’… Of course we want AIs to be tools and not be autonomous at all. Oscar Wilde already knew that. And I think a real AI will only be created by accident or by someone who was not thinking straight and got carried away. Which means that it’s fully certain that one day a real AI will be born. :D

“Civilization requires slaves. Human slavery is wrong, insecure and demoralizing. On mechanical slavery, on the slavery of the machine, the future of the world depends.”

– Oscar Wilde

I think this will have applications in robotics one day, something like a prefrontal cortex maybe? As in, “I wonder what would happen if I tried that?”, based on lots of learned world models.

Assuming your input was “game state” (e.g. entity positions) and not user input, could an approach like this be used as a photo-realistic rendering engine?

I imagine the data-set wouldn’t be too hard to generate: Take a ton of real-world video, have an AI annotate that into something resembling a “game-state”, ?, profit?

That would be similar to the GTA project that came out a while back, though it would skip the traditional rendering step.

I definitely think this sort of thing could use integration of standard game state data for consistency’s sake.

the whole development of AI is increasing the definition of ‘a ton’. early models in the 60s could only meaningfully learn from a few kb. now we can feed a zillion images into a model, and soon it will be able to digest a zillion videos (a zillion-zillion images). everything it digests, it creates a reduced representation of in its internal nodes.

which is to say, yes, that is exactly how it works and the direction it is going in.

does it run on python???????