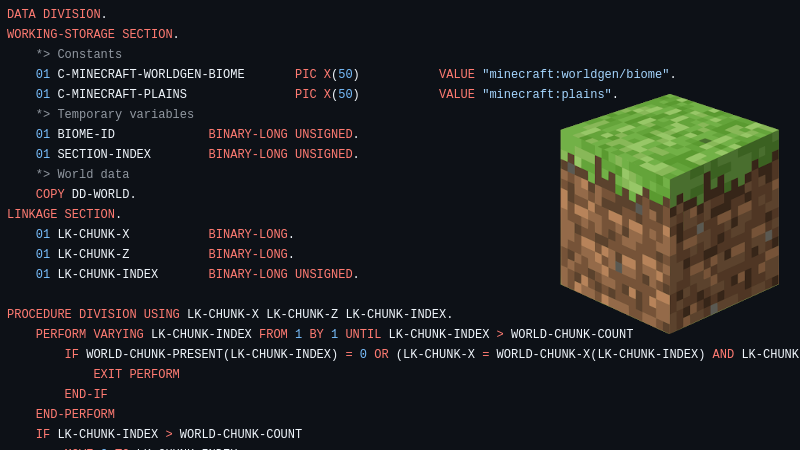

When you think of languages you might read about on Hackaday, COBOL probably isn’t one of them. The language is mostly associated with business applications, and legacy ones at that. The thing is, there are a lot of legacy business applications out there, so there is still plenty of COBOL. Not only is it still in use, it is still being improved, too. So [Meyfa] wanted to set the record straight and created a Minecraft server called CobolCraft.

The system runs on GnuCOBOL and has only been tested on Linux. There are a few limitations, but nothing too serious. The most amazing thing? Apparently, [Meyfa] had no COBOL experience before starting this project!

Even if you don’t care about COBOL or Minecraft, the overview of the program is interesting because it shows how many things require workarounds. According to the author:

Writing a Minecraft server was perhaps not the best idea for a first COBOL project, since COBOL is intended for business applications, not low-level data manipulation (bits and bytes) which the Minecraft protocol needs lots of. However, quitting before having a working prototype was not on the table! A lot of this functionality had to be implemented completely from scratch, but with some clever programming, data encoding and decoding is not just fully working, but also quite performant.
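To give a feel for the bit-and-byte work involved: most integers in the Minecraft Java Edition protocol are sent as a "VarInt". Each byte carries 7 bits of payload, least-significant group first, and the high bit is set when more bytes follow, so a 32-bit value takes one to five bytes. The C sketch below illustrates that format only. It is not a translation of CobolCraft's COBOL code, and the function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: encode a 32-bit value as a Minecraft-style VarInt.
   Writes 1..5 bytes into out and returns how many were written. Negative
   values are encoded via their 32-bit two's complement, so they always
   take 5 bytes. */
size_t varint_encode(int32_t value, uint8_t out[5])
{
    uint32_t v = (uint32_t)value;
    size_t n = 0;
    do {
        uint8_t byte = v & 0x7F;   /* low 7 bits of payload */
        v >>= 7;
        if (v != 0)
            byte |= 0x80;          /* continuation bit: more bytes follow */
        out[n++] = byte;
    } while (v != 0);
    return n;
}

/* Hypothetical helper: decode a VarInt from buf (len bytes available).
   Stores the value in *value and returns the number of bytes consumed,
   or 0 if the input is truncated or runs past 5 bytes. */
size_t varint_decode(const uint8_t *buf, size_t len, int32_t *value)
{
    uint32_t result = 0;
    for (size_t i = 0; i < len && i < 5; i++) {
        result |= (uint32_t)(buf[i] & 0x7F) << (7 * i);
        if ((buf[i] & 0x80) == 0) {   /* high bit clear: last byte */
            *value = (int32_t)result;
            return i + 1;
        }
    }
    return 0;
}
```

For example, 300 encodes as the two bytes `0xAC 0x02`, and -1 as the five bytes `FF FF FF FF 0F`.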

Got the urge for Cobol? We’ve been there. Or write Minecraft in… Minecraft.

COBOL gave me PTSD in the early 90’s.

I learned COBOL back in 1985 during my Air Force daze… No PTSD… yet. But learning the limits of a programming language like this from Admiral Grace Hopper was very helpful in my programming career.

James’ Admiral reference is to the main designer of the COBOL language Grace Hopper: https://en.wikipedia.org/wiki/Grace_Hopper?wprov=sfla1

Is there some reason for them to be more specific? A story was told; go back to bed.

No, you have to woo me, perhaps with a tale of an Air Force Admiral. Jackin’ it in San Diego poo.

Now, do it on a punch card server like I used when taking COBOL in high school. THAT would impress me.

This shouldn’t come as a surprise, honestly. Even though COBOL doesn’t really work with the data chunks that newer languages can easily manipulate, given its vintage it does allocate memory in a fixed format (at least we had to do that with the old mainframes we used in high school). I’m beyond rusty, so I’m not sure how the code for Minecraft matches up with the stuff I used to run. I could be many versions behind this and have no basis for comparison.

Still, keep up the good work.

I wonder how long it would take just to feed the cards.

IIRC, about 10-20 seconds per thousand. Those batch card readers were pretty fast…until they hit a bad card. Then they ripped it and the next few in half before posting a fault.

I’m old but not punchcard-old. How much information did each card hold? With that we could estimate how long it would take to load. How long it would take to process on period-correct hardware is another story, of course; I’m sure that would be prohibitive.

Remember, the cards go in the reader facedown, nine-edge first.

I am punch card old.

I am punchcard-old.

Remember that the cards go in the reader facedown, nine-edge first.

The IBM-style punched card contained 80 columns and 12 rows of hole locations. For text data, it would hold 80 characters (usually 72 of data/program and 8 of serial number). The serial number was for sorting in case you dropped the deck. For binary data, each hole represented a bit, and they could be partitioned to match the word size of a particular computer.
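The serial number in columns 73-80 is what made a dropped deck recoverable: sort on that field and the program is back in order. Here is a small C sketch of the idea. It assumes each card is held as an 80-character record whose sequence field is zero-padded digits, so a plain byte comparison orders it numerically; the `Card` type and the function names are hypothetical.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define CARD_COLS 80
#define SEQ_START 72   /* columns 73-80 start at zero-based offset 72 */
#define SEQ_LEN    8

/* Hypothetical card image: 80 columns plus a terminator for convenience. */
typedef struct {
    char cols[CARD_COLS + 1];
} Card;

/* Build a card: text left-justified in columns 1-72 (blank-padded and
   truncated to fit), sequence number zero-padded in columns 73-80. */
void make_card(Card *card, const char *text, unsigned long seq)
{
    char seqbuf[SEQ_LEN + 1];
    size_t n = strlen(text);
    if (n > SEQ_START)
        n = SEQ_START;
    memset(card->cols, ' ', CARD_COLS);
    memcpy(card->cols, text, n);
    snprintf(seqbuf, sizeof seqbuf, "%08lu", seq % 100000000UL);
    memcpy(card->cols + SEQ_START, seqbuf, SEQ_LEN);
    card->cols[CARD_COLS] = '\0';
}

/* qsort comparator: order two cards by their sequence field. */
static int by_sequence(const void *a, const void *b)
{
    const Card *ca = a;
    const Card *cb = b;
    return memcmp(ca->cols + SEQ_START, cb->cols + SEQ_START, SEQ_LEN);
}

/* Put a dropped deck back in order. */
void sort_deck(Card *deck, size_t count)
{
    qsort(deck, count, sizeof deck[0], by_sequence);
}
```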

It varies by format, but OG Fortran (F60) only allowed 80 characters per line, because you could fit 80 characters on a punchcard.

So it’d be one line of code per card, with the card limiting the length of a line.

I am older than punch card old. My first real job was repairing key punch machines in San Francisco for IBM. Punchcards have 80 columns, one character each.

In the early 80s my company, January Systems, was selling a text editor for programmers that I wrote in Cobol and that ran on NCR (formerly National Cash Register) mainframes. It cost $3000.

1 line per card.
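Combining one line per card with the 10-20 seconds per thousand cards quoted above gives a quick estimate of how long a deck would take just to read, not to compile or run. The size of CobolCraft's source isn't given here, so the line count is a free parameter, and the 10,000-line figure below is purely hypothetical.

```c
/* Estimate card-reader time for a deck, assuming one source line per card
   and a reader speed of secs_per_1000 seconds per thousand cards (the
   comment above suggests roughly 10-20). Ignores jams and re-feeds. */
double feed_seconds(unsigned long lines, double secs_per_1000)
{
    return (double)lines / 1000.0 * secs_per_1000;
}
```

A hypothetical 10,000-line program would then take `feed_seconds(10000, 10.0)` = 100 seconds at the fast end and 200 seconds at the slow end, assuming no bad cards.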

You had punch cards in high school? And a COBOL class? Dang, all we had was a single TRS-80 model 1 and no CS classes (thank God… The teachers were idiots and would have turned me off on CS). I had my own TRS-80 at home to bootstrap what became my career.

The only Cobol program I ‘had’ to write was for a class in college (we were exposed to a lot of the languages of the time, like Fortran/Ada/Cobol; Pascal was the learning language). Since then I’ve installed various Cobol compilers (currently GnuCobol) and kept ‘meaning’ to write something ‘useful’ in it beyond ‘Hello World’. Never got around to it. Maybe when I retire :rolleyes: ….

Kudos. I’ve never played with Minecraft, but looks like quite a feat to port to Cobol!

Should add that the best way to learn a language is to have a project in mind. This may have been a little over the top as a ‘project’ … but hey. Got’r done!

Yes! A project is how I learned (the beginnings of) PHP, MySQL and JavaScript: CRUD on a database, mouse-dragging fields and labels in the browser for display purposes (no static HTML tables), and trawling records using a slider. No names were hard-coded, so it was completely portable. I know others have done more challenging stuff, but this was my self-education.

COBOL was my first language course in college so I have a fond feeling about it. It’s perfect for what it normally does and this project is supercool.

I dunno, my COBOL was used to massage data and output it in an understandable format on an output device. It could accept keyboard entries mid-run, and it would wait patiently at $300 an hour until it got one; if you could define your I/O devices properly, there wasn’t much of a limit on what they were. Sounds like a video game to me, except I was limited to 26Kb, so it had better be tiny.

But did you know that you can use OTHERWISE instead of ELSE in COBOL?

“OTHERWISE instead of ELSE”, that’s a fun phrase.

But still waiting for the language that will define the DO … OR ELSE construct

I’d love to see it ported to an OS/390 or similar mainframe. Those are super performant and the compilers are advanced as hell.

COBOL gets a lot of derision for its age and the look and feel of its source code, but it had a lot of design goals which were laudable. Because it had to do something useful on ancient machines that ran slowly and had limited memory, it was designed from the ground up for speed and memory efficiency, traits which continue to be useful today. It was designed to be easily learned by non-computer people like MBAs, but it was also designed to be easy for those people to use, so it is fully bounds checked and relatively immune to the kind of bugs and security vulnerabilities we take for granted in C and its successors. Although the idea of being “self documenting” sounds laughable in retrospect, it does enforce some code formatting which makes it more likely that the next guy will be able to read your code, a very important factor for an organization that is likely to outlive all of its employees. The fact that so much of the world’s software is still written in COBOL, 50 years after it was dismissed as yesterday’s castoff, says Admiral Hopper must have done something right.
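The bounds-safety point is easiest to see next to C. In COBOL, an alphanumeric MOVE into a `PIC X(n)` field never writes past the field: a longer source is truncated on the right and a shorter one is padded with spaces. A hypothetical C helper that mimics that behavior, in contrast to an unchecked `strcpy`, might look like this:

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical helper mimicking an alphanumeric COBOL MOVE into a
   fixed-width PIC X(n) field: copy at most width bytes, truncate on the
   right if the source is too long, pad with spaces if it is too short.
   The field is not NUL-terminated, just as a COBOL field isn't. */
void move_alnum(char *field, size_t width, const char *src)
{
    size_t n = strlen(src);
    if (n > width)
        n = width;                         /* truncate, never overflow */
    memcpy(field, src, n);
    memset(field + n, ' ', width - n);     /* space-fill the remainder */
}
```

Moving `"HOPPER"` into a 5-byte field leaves `"HOPPE"`; moving `"AL"` leaves `"AL   "`. The equivalent `strcpy` would have written past the end of the 5-byte buffer in the first case.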

You missed a major point: it was designed to be readily used by accountants, not MBAs, and that “self-documentation” translates directly to extremely literal, hard-coded printouts baked into the code. It’s ugly as hell, but it really did (and often still does) serve a real purpose.

If that sounds crazy, a friend wrote a program in COBOL for first responders to find addresses, back in the late 80s. Dude was with a volunteer rescue team/CB ham club. IIRC they had their own ambulance and their hangout was also the dispatch.

COBOL. The bacon on my table for years. Thanks to whoever it was that switched to SQL from the old IMS manager when I was hired, or I’d still be seeing a shrink.

COBOL would look a lot less old fashioned if they dropped the ALL UPPERCASE HABIT. This is the 21st century; we can do less shouty and eyestraining lower case now, and modern COBOL compilers can cope with either.

Matter of choice :) .

One of the things that I don’t like about Rust. A nanny language. By default it ‘complains’ if you use camelCase. The designers want you to fit into their mold and format variables ‘their’ way. Don’t like that. I am capable of formatting variables my way, as well as deciding where I want to put braces { } in the source code…. Luckily you can head your source file with pragmas to suppress the warnings, but why force you in the first place??? Ah, give me nice predictable C :) .

Worrying about whether a language is ‘old-fashioned’ is the wrong way to think about a language (or life in general). It is what you can ‘DO’ with the language that counts. Use the tool that fits the job at hand professionally, or just have fun with Cobol/Fortran/Forth/etc. for the heck of it as a hobby. Seems to me calling something ‘old-fashioned’ is sort of like looking to celebrities for your direction in life (what they wear, how they think, what they spend money on) and dismissing anything that clashes as ‘old-fashioned/not with it’, rather than thinking and doing for yourself… Right?

gcc C is a leap back into 1960s software technology?

gcc C:

1. Batch compiler.
2. Requires a batch file as input.
3. Outputs a file ready to be loaded and linked by an OS.
4. A mistake of one character, whether (a) changed, (b) omitted, or (c) inserted, can result in a long list of terminal error messages which may or may not be related to the cause of the issue.

Rather than try to discover the issue, this gcc C student finds reverting to a backup is a better solution. :)

Example of updating 8080 fig-Forth ENCLOSE to 2024 using gcc C on Ubuntu, on a $120 Lenovo Celeron N4020 4/128 GB laptop, using transparent, portable C technology, of course:

```c
/* fig Forth ENCLOSE
   i0 = offset to first unexamined character.
   i1 = offset to first delimiter after text.
   i2 = offset to first non-delimiter character. */
#include <stdio.h>

int main(void)
{
    unsigned char argstack[32];   // tib, i2, i1, i0 (cleared, not otherwise used yet)
    // terminal input buffer; bytes after the literal are zero-filled
    char tib[1024] = "    ONE        TWO       THREE  \n";
    long int i0, i1, i2;
    long int i;

    for (i = 0; i < 32; i++) { argstack[i] = 0x0; }

    // dump the first 40 bytes as characters, then as hex
    for (i = 0; i < 40; i++) { printf("%c", tib[i]); }
    printf("\n");
    for (i = 0; i < 40; i++) { printf("%X", (unsigned)(unsigned char)tib[i]); }

    // start scanning at offset 7, just past the first word "ONE";
    // this start offset reproduces the output shown below
    i = 7;

    // skip leading delimiters (spaces)
l1: if (tib[i] == 0x20) { i++; goto l1; }
    i0 = i;
    printf("\ni0 = %ld", i0);

    // scan the word up to the next delimiter; also stop at a null
    // so the scan can't run off the end of the buffer
l4: i++;
    if (tib[i] != 0x20 && tib[i] != 0x0) { goto l4; }
    i2 = i;

    i++;
    if (tib[i] == 0x20) { i2 = i; }
    i++;
    if (tib[i] != 0x0) { i1 = i; goto l3; }
    i1 = i++;   // reached the terminating null

l3: printf("\ni2 = %ld ", i2);
    printf("\ni1 = %ld", i1);
    printf("\n\n");
    return 0;
}
```

Output:

```
    ONE        TWO       THREE

202020204F4E45202020202020202054574F2020202020202054485245452020A0000000
i0 = 15
i2 = 19
i1 = 20
```
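For comparison, the fig-FORTH glossary describes ENCLOSE as taking a text address and a delimiter character and returning three byte offsets: n1 to the first non-delimiter, n2 to the first delimiter after the text, and n3 to the first character not included, with an ASCII null treated as an unconditional delimiter. Below is a goto-free C sketch of that contract. The struct and function names are hypothetical, and the null handling (stop at the null without consuming it) is a simplification that does not try to reproduce fig-FORTH's exact null-word behavior.

```c
#include <stddef.h>

/* Hypothetical result type: the three offsets ENCLOSE leaves on the stack. */
typedef struct {
    size_t n1;  /* offset to first non-delimiter character  */
    size_t n2;  /* offset to first delimiter after the text */
    size_t n3;  /* offset to first character not included   */
} Enclosed;

/* Scan addr for one word bounded by delim (assumed not to be '\0'). */
Enclosed enclose(const char *addr, char delim)
{
    Enclosed r;
    size_t i = 0;

    while (addr[i] == delim)                      /* skip leading delimiters */
        i++;
    r.n1 = i;

    while (addr[i] != delim && addr[i] != '\0')   /* scan the word */
        i++;
    r.n2 = i;

    /* consume the trailing delimiter, but not a terminating null */
    r.n3 = (addr[i] == '\0') ? i : i + 1;
    return r;
}
```

Called on the same buffer starting at offset 7, `enclose(tib + 7, ' ')` returns n1 = 8, n2 = 11 and n3 = 12, i.e. absolute offsets 15, 18 and 19.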

COBOL not dead?

FORTRAN math libraries still needed?

C/C++ being 1) buggy, 2) malware-vulnerable and 3) unmaintainable, obfuscated software, resulting in a never-ending series of updates/upgrades, has caught the attention of investors?

Along with those in computing who continue to invent new languages?

This programmer recently learned from Wikipedia that Python is a byte code version of Forth … along with Java, of course.

Impressive… Next, he will be creating a COBOL compiler in his world with redstone! (I actually removed COBOL from my resume; I would hate to have a job fixing/maintaining it.)

Considering minecraft was originally written in ridiculously inefficient Java that he wouldn’t let people help him optimize, it wouldn’t surprise me in the least to find out it could run great in cobol. There’s a reason that Microsoft was able to port the code to C (++?, #? I forget) and the same game that had kids screaming to their parents for new nvidia cards suddenly ran smooth as silk on 5 year old netbooks.

The only reason lighting, data objects and networking worked was because he did let other people help him, so what are you talking about? He’s a paranoid nut now, but he used to be a regular shut-in who socialized online in programming communities; that’s how he found Infiniminer in the first place.

COBOL is best for what it was designed for. Processing data in a structured format. When compiled it’s tiny and runs fast, and it’s maintainable. Don’t try to use it for graphics, it wasn’t designed for that.
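"Processing data in a structured format" usually means fixed-width records: a COBOL record description such as `05 CUST-ID PIC 9(6)` followed by `05 CUST-NAME PIC X(20)` pins each field's position and width in advance. A rough C analogue, assuming a hypothetical 26-byte record of that shape with the number stored as unsigned display digits, reads fields straight out of their columns:

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical layout, mirroring
     01 CUSTOMER-REC.
        05 CUST-ID    PIC 9(6).
        05 CUST-NAME  PIC X(20).
   Fields sit at fixed offsets; nothing is delimited or length-prefixed. */
#define ID_OFF    0
#define ID_LEN    6
#define NAME_OFF  (ID_OFF + ID_LEN)
#define NAME_LEN  20
#define REC_LEN   (NAME_OFF + NAME_LEN)

typedef struct {
    unsigned long id;
    char name[NAME_LEN + 1];   /* trailing spaces trimmed, NUL-terminated */
} Customer;

/* Parse one record; returns false if CUST-ID isn't all digits. */
bool parse_customer(const char rec[REC_LEN], Customer *out)
{
    unsigned long id = 0;
    for (int i = 0; i < ID_LEN; i++) {
        char c = rec[ID_OFF + i];
        if (c < '0' || c > '9')
            return false;
        id = id * 10 + (unsigned long)(c - '0');
    }
    out->id = id;

    size_t n = NAME_LEN;
    while (n > 0 && rec[NAME_OFF + n - 1] == ' ')   /* drop space padding */
        n--;
    memcpy(out->name, rec + NAME_OFF, n);
    out->name[n] = '\0';
    return true;
}
```

Because every offset is fixed at compile time, reading a field is just indexing into the record; there is no tokenizing or length decoding.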

Today I program in Transact SQL and VBA. VBA is another underappreciated language with a massive installed base that won’t go away (as much as Microsoft would like to get rid of it). Neither Transact SQL nor VBA is perfect, and both continue to evolve, but used in tandem they are a dynamic duo.

Modern COBOL continues to evolve and when used in tandem with other specific purpose languages is a great tool, if only the industry had not abandoned it for the latest whizz-bang language of the month.

Which industry? Scientific data processing? It’s still used sometimes, but Python took over because it was easier to learn and, perhaps more importantly, could dump tasks (like an entire genome) into the addressable memory of an entire cluster with relative ease 25 years ago, in a day’s work. Meanwhile you needed several computer scientists to work out path optimization and confer with the Cray engineer for a week before seeing if your finely tuned turbulence simulation worked or crashed and burned because you accidentally went over CPU or memory budget in the scheduler.

… Or do you mean accounting, where it’s still used for a lot of real work even though financiers buy shiny new things for the stock market every year?