Although there are plenty of ways to image a 3D space, LIDAR is widely regarded as one of the most effective. These systems sweep a rapid succession of laser pulses across a wide area to build an accurate 3D map. Early LIDAR systems were cumbersome and expensive, but over time they have become far more accessible to the average person, so much so that you can now attach one to a Raspberry Pi and perform LIDAR imaging for a very reasonable cost.

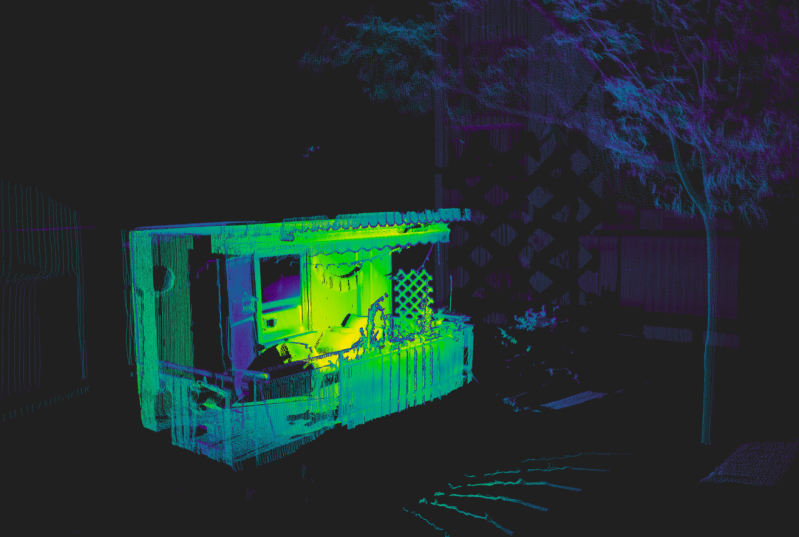

This software suite is a custom serial driver and scanning system for the Raspberry Pi, designed to work with LDRobot LIDAR modules such as the LD06, LD19, and STL27L. Although still in active development, it already offers an impressive set of features: real-time 2D visualization, vertex color extraction, 360-degree panoramic maps generated from fisheye camera images, and export for use in other tools. The hardware pairs the LIDAR with a stepper motor that rotates it for quick full-area scans, and it can be powered by either a USB battery bank or a pair of 18650 lithium cells, so the whole system stays portable and self-contained during a scan.
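For readers curious what such a serial driver has to deal with, here is a minimal Python sketch of decoding one LD06/LD19-style measurement frame. It assumes the 47-byte little-endian layout as we understand LDRobot's published protocol (0x54 header, 0x2C version/length byte, speed, start angle, 12 distance/intensity samples, end angle, timestamp, and a CRC-8 with polynomial 0x4D). It is not taken from this project's code, the STL27L may differ, and the names `parse_frame` and `Point` are illustrative only.

```python
import struct
from dataclasses import dataclass

# Assumed LD06/LD19 frame layout (not from the project above):
#   0x54 header, 0x2C ver/len, speed u16 (deg/s), start angle u16 (0.01 deg),
#   12 x (distance u16 in mm, intensity u8), end angle u16 (0.01 deg),
#   timestamp u16 (ms), CRC-8 u8 -> 47 bytes total, little-endian.
HEADER = 0x54
VER_LEN = 0x2C
POINTS_PER_FRAME = 12
FRAME_LEN = 47


@dataclass
class Point:
    angle_deg: float
    distance_mm: int
    intensity: int


def crc8(data: bytes) -> int:
    """MSB-first CRC-8, polynomial 0x4D, initial value 0, no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ 0x4D) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def parse_frame(frame: bytes) -> list[Point]:
    """Validate one frame and return its 12 samples with interpolated angles."""
    if len(frame) != FRAME_LEN or frame[0] != HEADER or frame[1] != VER_LEN:
        raise ValueError("not an LD06-style frame")
    if crc8(frame[:-1]) != frame[-1]:
        raise ValueError("CRC mismatch")
    _speed, start = struct.unpack_from("<HH", frame, 2)
    end, _timestamp = struct.unpack_from("<HH", frame, 6 + 3 * POINTS_PER_FRAME)
    # Angles are in hundredths of a degree; taking the span modulo 36000
    # handles frames that wrap past 360 degrees.
    span = (end - start) % 36000
    step = span / (POINTS_PER_FRAME - 1)
    points = []
    for i in range(POINTS_PER_FRAME):
        dist, intensity = struct.unpack_from("<HB", frame, 6 + 3 * i)
        angle = ((start + step * i) % 36000) / 100.0
        points.append(Point(angle, dist, intensity))
    return points
```

The start and end angles are interpolated linearly across the 12 samples, and the modulo keeps a frame that straddles 0° from producing a bogus negative span.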

LIDAR systems are quickly becoming a dominant technology for anything that needs to map or navigate a complex 3D space, from self-driving cars to small Arduino-powered robots. A system like this delivers substantial capability for a modest cost, and we expect to see LIDAR modules in more hardware as the technology matures.

Thanks to [Dirk] for the tip!

First suggestion: Use a newer Trinamic driver (like TMC220x) instead of the older, very noisy A4988.

No need to capitalize lidar, and radar. Check the Wikipedia page or other entomology. Yes, I am a lidar scientist, and yes, I’ve had a long day and am in a cranky mood.

I think there is a bug in your spelling. Muphry’s Law strikes again.

Nothing is so smiple that it can’t be screwed up.

IEFBR14

You’re right, I broke my hammer making s’mores.

Doesn’t the iPhone 16 Pro support lidar?

My god! Puns are clearly wasted on the uneducated.

Who doesn’t love a good bug, etymologically speaking.

Definitely sounds like a YOU problem, but thanks for sharing!

I, for one, appreciate the valuable use of your time; feel free to bleed off heat by ranting about grammar issues.

That said, I’ve been itching to build my own for a while, probably not quite this way though.

No need for a comma after lidar.

😂

Sounds like you’re having an entomological bug-up-your-butt kinda day; clearly something’s buggin’ ya :p

I always thought it was an acronym meant to be in caps. Thanks for the education. Hope you gave your crank the shaft.

To Ralph: You are indeed correct in stating that, according to the rules of grammar, an acronym that forms a proper noun (e.g., sonar, scuba, laser, radar) does not need to be capitalized. Acronyms whose letters do not form a proper noun (e.g., NASA, FBI, and USA) should remain capitalized. I cite the following sources:

https://i.postimg.cc/nVTJGmyN/Screenshot-20250419-070938.png

https://www.grammarly.com/blog/acronyms-abbreviations/abbreviations/#:~:text=(When fully spelled out%2C the,they entail a proper noun.)

I feel like that’s backwards. NASA is a proper noun; lidar is a portmanteau of “light” and “radar” and does not refer to a specific entity, so it is a common noun.

There is no NEED, but it’s still technically correct. So if you have the time, why not? There is no need NOT to.

Wikipedia: “Lidar (/ˈlaɪdɑːr/), also LIDAR…”

I’m fine either way. We draw the line at laser and scuba.

But maybe lidar’s time has arrived as well?

entomology – the branch of zoology concerned with the study of insects.

etymology – the study of the origin of words and the way in which their meanings have changed throughout history.

On a slightly related note, people who confuse entomology with etymology bug me in ways I can’t put into words.

😜🤣🤪

No need to post about it either. ;)

😂 I hope you have a better day tomorrow.

Me read

Me like

Me think funny comments

Does anyone know how the lidar point cloud gets its color? I see there is a camera with a fisheye lens, but then what?

The lidar is mounted near the camera, so for each point it uses the color of the pixel which was in the same direction.

Insert side eye gif here.

Not really. The mechanical 2D lidar scans a circle orthogonal to the camera direction. Instead, the fisheye photos are stitched with Hugin into a 360×180° spherical map, shot from 4–8 angles, each with ±2 EV HDR bracketing.

The point cloud is assembled from the laser planes (0.16° steps around the vertical axis) using the absolute stepper angle and the calibrated offset (translation and rotation) between the lidar and the rotational axis. The lens’s focal center sits exactly over the rotation axis, so there is no offset between the photos (otherwise you couldn’t really stitch them together and would have to rely on a far more complex process).
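A minimal Python sketch of that assembly step, assuming each lidar sample is a (scan angle, distance) pair in the lidar’s own plane and that calibration yields a rotation `CAL_R` and translation `CAL_T` from the lidar frame into the rotating platform’s frame. Those names, their default values (scan plane stood upright, zero offset), and the z-up convention are hypothetical placeholders, not the author’s actual calibration or code.

```python
import math

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]

# Hypothetical calibration: the lidar's scan plane rotated 90 deg about x so it
# stands upright, with no translation from the rotation axis (in mm).
CAL_R: Mat3 = ((1.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 1.0, 0.0))
CAL_T: Vec3 = (0.0, 0.0, 0.0)


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def rot_z(phi: float) -> Mat3:
    """Rotation about the vertical (z) axis by phi radians."""
    c, s = math.cos(phi), math.sin(phi)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def sample_to_world(distance_mm, scan_angle_deg, stepper_angle_deg,
                    cal_r=CAL_R, cal_t=CAL_T) -> Vec3:
    theta = math.radians(scan_angle_deg)
    # Point in the lidar's own scan plane.
    p_lidar = (distance_mm * math.cos(theta), distance_mm * math.sin(theta), 0.0)
    # Lidar frame -> platform frame via the calibrated rotation + translation.
    p_rot = mat_vec(cal_r, p_lidar)
    p_platform = tuple(a + b for a, b in zip(p_rot, cal_t))
    # Platform frame -> world frame: rotate by the absolute stepper angle.
    return mat_vec(rot_z(math.radians(stepper_angle_deg)), p_platform)


def assemble_cloud(planes):
    """planes: iterable of (stepper_angle_deg, [(scan_angle_deg, distance_mm), ...])."""
    cloud = []
    for stepper_deg, samples in planes:
        for scan_deg, dist in samples:
            if dist > 0:  # assumption: a distance of 0 marks an invalid return
                cloud.append(sample_to_world(dist, scan_deg, stepper_deg))
    return cloud
```

Each sample is first moved into the platform frame by the calibrated rigid transform and only then rotated by the stepper angle, so errors in either the calibration or the stepper position show up directly in the cloud.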

Each point in the 3D point cloud can be interpreted as a 3D vector from the origin, whose latitude and longitude components can be used directly to sample the pixel color in the spherical panorama, because, conveniently, it uses the same axes ;)
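That lookup might look like the following minimal Python sketch. It assumes an equirectangular 360×180° panorama stored as `image[row][col]`, points already expressed relative to the panorama’s center with z pointing up, and longitude 0 landing in the middle column. The real column offset depends on how the panorama was stitched, so `yaw_offset_rad`, `direction_to_pixel`, and `colorize` are hypothetical names, not the project’s API.

```python
import math


def direction_to_pixel(x, y, z, width, height, yaw_offset_rad=0.0):
    """Map a direction from the panorama center to an equirectangular pixel."""
    lon = math.atan2(y, x) + yaw_offset_rad        # around the vertical axis
    lat = math.atan2(z, math.hypot(x, y))          # -pi/2 (down) .. +pi/2 (up)
    u = ((lon + math.pi) / (2 * math.pi)) % 1.0    # wrap longitude into [0, 1)
    v = (math.pi / 2 - lat) / math.pi              # 0 = zenith, 1 = nadir
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


def colorize(cloud, image):
    """Attach the nearest panorama pixel color to each (x, y, z) point."""
    height, width = len(image), len(image[0])
    colored = []
    for x, y, z in cloud:
        col, row = direction_to_pixel(x, y, z, width, height)
        colored.append(((x, y, z), image[row][col]))
    return colored
```

Nearest-pixel lookup is the simplest choice; bilinear interpolation between neighboring pixels would give smoother colors at the cost of a few more lines.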

I described it in a bit more detail here:

https://www.reddit.com/r/LiDAR/s/Fbz9ukEJ63

because I’m actually the author 😄

Hi guys, I’m actually the author. You’ll find the original release post on Reddit:

https://www.reddit.com/r/LiDAR/s/a2pCEyimDo

Feel free to reach out if you have questions.