It’s amazing how quickly medical science made radiography one of its main diagnostic tools. Medicine had barely emerged from its Dark Age of bloodletting and the four humours when X-rays were discovered, and the realization that the internal structure of our bodies could cast shadows of this mysterious “X-Light” opened up diagnostic possibilities that went far beyond the educated guesswork and exploratory surgery doctors had relied on for centuries.

The problem is, X-rays are one of those things that you can’t see, feel, or smell, at least mostly; X-rays cause visible artifacts in some people’s eyes, and the pencil-thin beam of a CT scanner can create a distinct smell of ozone when it passes through the nasal cavity — ask me how I know. But to be diagnostically useful, the varying intensities created by X-rays passing through living tissue need to be translated into an image. We’ve already looked at how X-rays are produced, so now it’s time to take a look at how X-rays are detected and turned into medical miracles.

Taking Pictures

For over a century, photographic film was the dominant way to detect medical X-rays. In fact, years before Wilhelm Conrad Röntgen’s first systematic study of X-rays in 1895, fogged photographic plates during experiments with a Crookes tube were among the first indications of their existence. But it wasn’t until Röntgen convinced his wife to hold her hand between one of his tubes and a photographic plate to create the first intentional medical X-ray that the full potential of radiography could be realized.

The chemical mechanism that makes photographic film sensitive to X-rays is essentially the same as the process that makes light photography possible. X-ray film is made by depositing a thin layer of photographic emulsion on a transparent substrate, originally celluloid but later polyester. The emulsion is a mixture of high-grade gelatin, a natural polymer derived from animal connective tissue, and silver halide crystals. Incident X-ray photons ionize the halogens, creating an excess of electrons within the crystals to reduce the silver halide to atomic silver. This creates a latent image on the film that is developed by chemically converting sensitized silver halide crystals to metallic silver grains and removing all the unsensitized crystals.

Other than in the earliest days of medical radiography, direct X-ray imaging onto photographic emulsions was rare. While photographic emulsions can be exposed by X-rays, it takes a lot of energy to get a good image with proper contrast, especially on soft tissues. This became a problem as more was learned about the dangers of exposure to ionizing radiation, leading to the development of screen-film radiography.

In screen-film radiography, X-rays passing through the patient’s tissues are converted to light by one or more intensifying screens. These screens are made from plastic sheets coated with a phosphor that glows when exposed to X-rays. Calcium tungstate was common back in the day, but rare earth phosphors like gadolinium oxysulfide became more popular over time. Intensifying screens were attached to the front and back covers of light-proof cassettes, with double-emulsion film sandwiched between them; when exposed to X-rays, the screens would glow briefly and expose the film.

By turning one incident X-ray photon into thousands or millions of visible light photons, intensifying screens greatly reduce the dose of radiation needed to create diagnostically useful images. That’s not without its costs, though, as the phosphors tend to spread out each X-ray photon across a physically larger area. This results in a loss of resolution in the image, which in most cases is an acceptable trade-off. When more resolution is needed, single-screen cassettes can be used with one-sided emulsion films, at the cost of increasing the X-ray dose.

Wiggle Those Toes

Intensifying screens aren’t the only place where phosphors are used to detect X-rays. Early on in the history of radiography, doctors realized that while static images were useful, continuous images of body structures in action would be a fantastic diagnostic tool. Originally, fluoroscopy was performed directly, with the radiologist viewing images created by X-rays passing through the patient onto a phosphor-covered glass screen. This required an X-ray tube engineered to operate with a higher duty cycle than radiographic tubes and had the dual disadvantages of much higher doses for the patient and the need for the doctor to be directly in the line of fire of the X-rays. Cataracts were enough of an occupational hazard for radiologists that safety glasses using leaded glass lenses were a common accessory.

One ill-advised spin-off of medical fluoroscopy was the shoe-fitting fluoroscopes that started popping up in shoe stores in the 1920s. Customers would stick their feet inside the machine and peer at a fluorescent screen to see how well their new shoes fit. It was probably not terribly dangerous for the once-a-year shoe shopper, but pity the shoe salesman who had to peer directly into a poorly regulated X-ray beam eight hours a day to show every Little Johnny’s mother how well his new Buster Browns fit.

As technology improved, image intensifiers replaced direct screens in fluoroscopy suites. Image intensifiers were vacuum tubes with a large input window coated with a fluorescent material such as zinc-cadmium sulfide or sodium-doped cesium iodide. The phosphors convert X-rays passing through the patient to visible light photons, which are immediately converted to photoelectrons by a photocathode made of cesium and antimony. The electrons are focused by coils and accelerated across the image intensifier tube by a high-voltage field on a cylindrical anode. The electrons pass through the anode and strike a phosphor-covered output screen, which is much smaller in diameter than the input screen. The signal from each incident X-ray photon is greatly amplified by the image intensifier, making a brighter image with a lower dose of radiation.
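
To put rough numbers on that amplification, here is a minimal sketch of the textbook brightness-gain estimate: the gain from squeezing the image onto a smaller output screen (minification gain) multiplied by the gain from accelerating the electrons (flux gain). The function name and the example figures are illustrative assumptions, not values for any particular tube.

```python
def brightness_gain(input_diameter_mm, output_diameter_mm, flux_gain):
    """Estimate image intensifier brightness gain.

    Minification gain is the ratio of input to output screen areas,
    which scales with the square of the diameter ratio. Flux gain is
    how many output light photons each accelerated electron produces
    relative to the light photon that released it (assumed here).
    """
    minification_gain = (input_diameter_mm / output_diameter_mm) ** 2
    return minification_gain * flux_gain


# Hypothetical tube: 200 mm input screen, 25 mm output screen, flux gain of 50
print(brightness_gain(200, 25, 50))
```

Under these assumed figures, most of the gain comes from minification alone, which is why the output screen is so much smaller than the input window.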

Originally, the radiologist viewed the output screen using a microscope, which at least put a little more hardware between his or her eyeball and the X-ray source. Later, mirrors and lenses were added to project the image onto a screen, moving the doctor’s head out of the direct line of fire. Later still, analog TV cameras were added to the optical path so the images could be displayed on high-resolution CRT monitors in the fluoroscopy suite. Eventually, digital cameras and advanced digital signal processing were introduced, greatly streamlining the workflow for the radiologist and technologists alike.

Get To The Point

So far, all the detection methods we’ve discussed fall under the general category of planar detectors, in that they capture an entire 2D shadow of the X-ray beam after having passed through the patient. While that’s certainly useful, there are cases where the dose from a single, well-defined volume of tissue is needed. This is where point detectors come into play.

In medical X-ray equipment, point detectors often rely on some of the same gas-discharge technology that DIYers use to build radiation detectors at home. Geiger tubes and ionization chambers measure the current created when X-rays ionize a low-pressure gas inside an electric field. Geiger tubes generally use a much higher voltage than ionization chambers, and tend to be used more for radiological safety, especially in nuclear medicine applications, where radioisotopes are used to diagnose and treat diseases. Ionization chambers, on the other hand, were often used as a sort of autoexposure control for conventional radiography. Tubes were placed behind the film cassette holders in the exam tables of X-ray suites and wired into the control panels of the X-ray generators. When enough radiation had passed through the patient, the film, and the cassette into the ion chamber to yield a correct exposure, the generator would shut off the X-ray beam.
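
The autoexposure idea can be sketched as a simple integrate-and-compare loop: keep adding up the charge collected by the ion chamber, and cut the beam once a target is reached. This is only a toy model; the function name, units, step size, and the backup time limit are assumptions for illustration, not how any specific generator works.

```python
def exposure_time_ms(chamber_current_na, target_charge_nc,
                     step_ms=1.0, max_ms=500.0):
    """Return how long the beam stays on before the exposure is terminated.

    chamber_current_na: ion chamber current while the beam is on (nA),
                        assumed constant for simplicity.
    target_charge_nc:   collected charge that corresponds to a correct
                        exposure (nC), a hypothetical calibration value.
    max_ms:             backup limit so the beam cannot stay on forever.
    """
    charge_nc = 0.0
    elapsed_ms = 0.0
    while elapsed_ms < max_ms:
        # current (nA) x time (s) = charge (nC)
        charge_nc += chamber_current_na * (step_ms / 1000.0)
        elapsed_ms += step_ms
        if charge_nc >= target_charge_nc:
            return elapsed_ms  # enough radiation reached the chamber
    return max_ms  # backup limit reached; shut off anyway


# A thicker patient passes less radiation, so the beam stays on longer
print(exposure_time_ms(10.0, 0.497))  # thinner body part
print(exposure_time_ms(5.0, 0.497))   # thicker body part
```

The useful property is that exposure time adapts to the patient automatically: halving the current reaching the chamber doubles the time the beam stays on.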

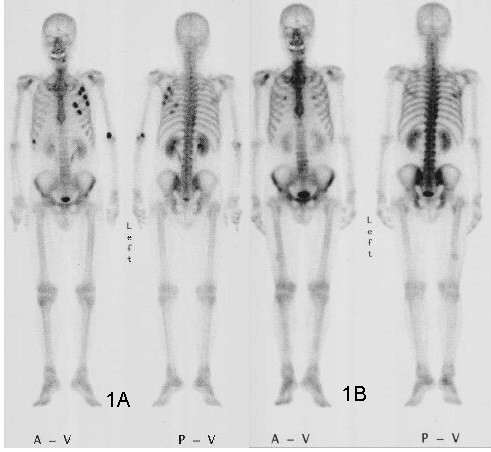

Another kind of point detector for X-rays and other kinds of radiation is the scintillation counter. These use a crystal, often cesium iodide or sodium iodide doped with thallium, that releases a few visible light photons when it absorbs ionizing radiation. The faint pulse of light is greatly amplified by one or more photomultiplier tubes, creating a pulse of current proportional to the amount of radiation. Nuclear medicine studies use a device called a gamma camera, which has a hexagonal array of PM tubes positioned behind a single large crystal. A patient is injected with a radioisotope such as the gamma-emitting technetium-99m, which accumulates mainly in the bones. Gamma rays emitted are collected by the gamma camera, which derives positional information from the differing times of arrival and relative intensity of the light pulse at the PM tubes, slowly building a ghostly skeletal map of the patient by measuring where the 99mTc accumulated.
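
The relative-intensity part of that position estimate can be sketched as a signal-weighted centroid: each PM tube "votes" for its own location, weighted by how much light it saw. This is a simplified illustration that ignores timing and uses a made-up three-tube layout rather than a real hexagonal array; all names here are hypothetical.

```python
def estimate_position(tube_positions, tube_signals):
    """Estimate where a scintillation flash occurred in the crystal.

    tube_positions: list of (x, y) centers of the PM tubes.
    tube_signals:   light pulse amplitude seen by each tube, same order.
    Returns (x, y, total), where total is the summed signal, which
    tracks the energy deposited by the gamma ray.
    """
    total = sum(tube_signals)
    if total <= 0:
        raise ValueError("no light detected")
    # Signal-weighted average of the tube coordinates
    x = sum(px * s for (px, _), s in zip(tube_positions, tube_signals)) / total
    y = sum(py * s for (_, py), s in zip(tube_positions, tube_signals)) / total
    return x, y, total


# Three tubes; the flash is closest to the third, which sees the most light
tubes = [(0.0, 0.0), (2.0, 0.0), (1.0, 2.0)]
print(estimate_position(tubes, [1.0, 1.0, 2.0]))
```

Accumulating many such position estimates into a 2D histogram is what gradually builds up the image.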

Going Digital

Despite film’s long dominance of the industry, the days of traditional film-based radiography were clearly numbered once solid-state image sensors began appearing in the 1980s. While film was reliable and gave excellent results, developing it required a lot of infrastructure and expense, and the bulky films required a lot of space to store. The savings from doing away with all the trappings of film-based radiography, including the darkrooms, automatic film processors, chemicals, silver recycling, and often hundreds of expensive film cassettes, are largely what drove the move to digital radiography.

After briefly flirting with phosphor plate radiography, where a sensitized phosphor-coated plate was exposed to X-rays and then “developed” by a special scanner before being recharged for the next use, radiology departments embraced solid-state sensors and fully digital image capture and storage. Solid-state sensors come in two flavors: indirect and direct. Indirect sensor systems use a large matrix of photodiodes on amorphous silicon to measure the light given off by a scintillation layer directly above it. It’s basically the same thing as a film cassette with intensifying screens, but without the film.

Direct sensors, on the other hand, don’t rely on converting the X-ray into light. Rather, a large flat selenium photoconductor is used; X-rays absorbed by the selenium cause electron-hole pairs to form, which migrate to a matrix of fine electrodes on the underside of the sensor. The current measured at each pixel is proportional to the amount of radiation received, and can be read pixel-by-pixel to build up a digital image.
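
As a rough illustration of that pixel-by-pixel readout, the sketch below walks a grid of per-pixel signals and scales each one to a digital value. The function name, the 12-bit depth, and the full-scale value are assumptions chosen for the example; real panels also apply calibration steps that are left out here.

```python
def read_out(pixel_signals, full_scale, bit_depth=12):
    """Convert a 2D grid of per-pixel signals into digital pixel values.

    pixel_signals: list of rows, each a list of measured signals.
    full_scale:    signal that maps to the brightest digital value
                   (a hypothetical calibration constant).
    """
    max_code = (1 << bit_depth) - 1
    image = []
    for row in pixel_signals:          # scan one row at a time
        image_row = []
        for signal in row:             # then each pixel in the row
            # Clamp to the valid range, then scale to an integer code
            fraction = min(max(signal / full_scale, 0.0), 1.0)
            image_row.append(round(fraction * max_code))
        image.append(image_row)
    return image


# Tiny 2x2 "sensor": dark, quarter-scale, full-scale, and saturated pixels
print(read_out([[0.0, 0.25], [1.0, 2.0]], full_scale=1.0))
```

Because the signal is proportional to the radiation received, the digital values map directly to how much X-ray energy reached each pixel.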

Dental digital X-ray sensors and sources are about $1600 new online (the sensor and source are about $800 each). They seem like the right size and power for electronics use (what’s inside a chip, BGA inspection, etc.). Has anyone tried one?

“the pencil-thin beam of a CT scanner can create a distinct smell of ozone when it passes through the nasal cavity”

What? How is that?

It’s more often reported as smelling like burned plastic/rubber, metal, or just a general chemical smell. I believe the cause is still unknown, but it’s thought to be either the radiation interacting with materials within the scanner and being smelled by the patient, or the radiation interacting with something within the patient: either the olfactory neurons/epithelium, or some other material within the sinus or airways.

“Medicine had barely emerged from its Dark Age of bloodletting and the four humours when X-rays were discovered”

Come on man. I know you’re trying to be cute or whatever but that’s pretty offensive to the medical field.


First open heart surgery was in like 1893 (before X-rays)

First use of ether anesthetic was in 1846 and so on.

Medicine has never been nor ever will be perfect but in this current climate of pseudoscience and medical doubting this type of stuff isn’t helping.

Science is supposed to be full of ‘doubt’, which is the basis of a scientific process.

As for medical practitioners, few are doing science, and fewer think like a scientist. Most practitioners follow a rote recipe. We can no longer accept the decree of a physician without a pass for our further investigation. Of course, the exception would be for ER patients, where you pay your money and take your chances at the wheel.

Doubt has no basis. Scrutiny. Repeatability. Skepticism. Testability. Doubt IMO implies writing things off out of hand without actually giving thought to it. And you are right, medical “practitioners” …. practice. Medical researchers do research and (typically) also practice. And I’ll continue to stand by how medicine is definitely imperfect – but I suspect if you get injured or have respiratory problems or a stroke, it’s up to you to “spin the wheel”. Your call.

I’m not entirely certain that there’s a meaningful difference between doubt and skepticism in this context.

When I was in grad school, my roommate was a med school student doing the MD/Ph.D. program. His study was the promising new field of “Evidence-Based Medicine”, which started to be a big deal in the late 1990s and early 2000s.

So I asked what exactly they were basing their practice on beforehand. Mic drop.

Now of course, they all understand physiology, immunology, and a lot of the other relevant bio/chemistry/physics. It’s not like they were doing medicine without science. But actually doing hypothesis testing, with sample sizes of more than a handful, to see which of various treatments worked best, became a lot more of a thing around that time.

Lots of other factors make a good doctor: empathy, intuition, curiosity, dexterity, etc. But there has to be some place for testing out what works well too, right?

https://en.wikipedia.org/wiki/Evidence-based_medicine

Anyway, my feel is that there’s a lot more of the repeatability/testability in medicine these days, even among the pure practitioners.

The standard of care now is Evidence Based Medicine. In the words of one of my mentors, himself hugely famous in some circles, “the era of eminence over evidence is over.” Not everyone has the message though, today, for sure. Studying human subjects is super hard for a lot of reasons. We’d all love to see huge sample size prospective trials to answer every clinical question but they are super hard to do at best, impossible more often. So we’re stuck with best guess way more often than we’d like. And despite the many, many missteps along the way medicine is doing pretty good. Mortality from previously mortal dumb stuff is way down compared to even 100 years ago. Hopefully most would call that pretty good. Not bad for a bunch of hacksaw wielding barber-surgeons.

You would like to think so, but in practice I would say it is spotty at best. You will regularly see doctors handing out antibiotics etc with ZERO measuring much of anything. “Evidence based” might be a goal, but medicine is often just not scientific. We saw the rubbish with the commie-china-fauci virus, where doctors and actual scientists were forbidden to do science or question what they were handed as the “solution”, all with abbreviated or non-existent safety testing. That is not science.

I literally defined what I was trying (poorly it seems) to articulate. If you want to question my knowledge of English fine.

I’ll try again. Roundly dismissing modern medicine as a bunch of hookus (I won’t give you a definition of that) without thinking about anything objectively or without, let’s say, honest or healthy skepticism is a problem. At least in the US and very much more recently. You are free to disagree with allllllll those assertions, strawman my grammar, whatever.

And also literally for the third time I’ll repeat. Science isn’t perfect. Maybe not even good. Doctors are also not perfect. Or good sometimes. But unless you yourself are a dishonest, unscrupulous doctor then maybe don’t bad mouth them. The vast majority are hard working and honestly care about their patients much more than, IMO, the public realizes largely.

“Doubt is the same as skepticism.

You don’t believe, without evidence.”

Ok fine you win.

I’m an atheist for what that’s worth. I didn’t get my made up definition from anywhere (why are you persevering on that?) I was defining it as I saw fit to make a point that still seems lost entirely on you. Change my language to however you please, if it means you can get a look at what I’m trying to say. Pretty please.

“Science is supposed to be full of ‘doubt’, which is the basis of a scientific process.”

Yes, but there’s a limit to it, too.

For example, questioning if other living beings are sentient or not.

Denying it, because “it’s not scientifically proven yet”, is cynical and violates common sense.

Because we as human beings are sentient and know its existence, its meaning.

Assuming that other life forms do possess the same ability should be the norm, and not the other way round.

It’s the same principle as “innocent until proven guilty”.

Unfortunately, science in its ignorance doesn’t follow this reasonable logic here.

Science as such can’t make up for wisdom and life experience, thus.

Dark ages: DaVinci did his anatomical chicken-scratches in the 15th century, and I understand they’re still used because there are some things that just aren’t captured well by photographs.

Ok, Europe’s so-called “dark ages” is considered to be 5th-10th centuries.

I’m reminded of the James Herriot books. Set in the early 1900s, there was a story where Herriot’s boss, Siegfried, used bloodletting on a horse. Apparently to good effect, and apparently it wasn’t the only time he’d done it. I would not be surprised to find that some physicians of that era might have used the treatment on human patients.

Whoever decided to put “Schrödinger” on the lab coat took it too far IMO, the illustration was perfect without.

Art was repurposed from another piece where it made more sense, but yeah. If you get it, you get it.

It has to be said that the image of Röntgen’s wife’s fingers is the first digital x-ray image.

nice